Abstract

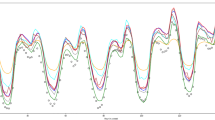

Combining the Nyström approximation with the primal-dual formulation of least squares support vector machines (LS-SVMs) makes it possible to apply a nonlinear model to a large-scale regression problem. This is done by using a sparse approximation of the nonlinear mapping induced by the kernel matrix, with an active selection of support vectors based on a quadratic Rényi entropy criterion. The methodology is applied to load forecasting as an example of a real-life, large-scale industrial problem. Over ten different load series, the forecasting performance is satisfactory when the sparse representation is built from less than 3% of the available sample.
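The abstract compresses the fixed-size LS-SVM pipeline into a few sentences: select a small working set by maximizing quadratic Rényi entropy, build an explicit approximate feature map from that set with the Nyström method, then estimate the model in the primal. A minimal NumPy sketch of these three steps follows. It assumes a Gaussian (RBF) kernel with the same bandwidth `sigma` for both the entropy criterion and the regression, a simple random-swap search for the entropy maximization, and a ridge-regression solve in the primal with an unregularized bias term. All function names (`rbf_kernel`, `renyi_entropy`, `select_support_vectors`, `nystrom_features`, `fit_primal`), parameter values, and the synthetic `sinc` data are hypothetical illustrations, not the authors' code or the load series from the paper.

```python
import numpy as np

# Hypothetical sketch of a fixed-size LS-SVM pipeline.
# Assumption: one Gaussian kernel (same sigma) for entropy and regression.


def rbf_kernel(A, B, sigma):
    """Gaussian kernel matrix K[i, j] = exp(-||A[i] - B[j]||^2 / sigma^2)."""
    sq = (np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-np.maximum(sq, 0.0) / sigma**2)


def renyi_entropy(Xs, sigma):
    """Quadratic Renyi entropy estimate: minus log of the mean kernel entry."""
    return -np.log(rbf_kernel(Xs, Xs, sigma).mean())


def select_support_vectors(X, M, sigma, n_iter=2000, seed=0):
    """Active selection: random swaps, kept only if the entropy increases."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(X), size=M, replace=False)
    best = renyi_entropy(X[idx], sigma)
    for _ in range(n_iter):
        cand = rng.integers(len(X))
        if cand in idx:
            continue  # candidate is already in the working set
        trial = idx.copy()
        trial[rng.integers(M)] = cand  # swap one working-set point out
        h = renyi_entropy(X[trial], sigma)
        if h > best:
            idx, best = trial, h
    return idx


def nystrom_features(X, Xs, sigma, tol=1e-10):
    """Approximate feature map phi(x) = Lambda^{-1/2} U^T k(x) from K_MM."""
    lam, U = np.linalg.eigh(rbf_kernel(Xs, Xs, sigma))
    keep = lam > tol * lam.max()  # drop near-zero eigenvalues for stability
    return rbf_kernel(X, Xs, sigma) @ U[:, keep] / np.sqrt(lam[keep])


def fit_primal(Phi, y, gamma):
    """Minimize 0.5*w'w + 0.5*gamma*sum(e^2), e = y - Phi w - b, in the primal."""
    N, m = Phi.shape
    A = np.hstack([Phi, np.ones((N, 1))])
    R = np.eye(m + 1) / gamma
    R[-1, -1] = 0.0  # the bias b is not regularized
    theta = np.linalg.solve(A.T @ A + R, A.T @ y)
    return theta[:-1], theta[-1]


# Toy usage on synthetic data (not the load series used in the paper).
rng = np.random.default_rng(1)
X = rng.uniform(-3.0, 3.0, size=(2000, 1))
y = np.sinc(X[:, 0]) + 0.05 * rng.standard_normal(2000)
sv = select_support_vectors(X, M=50, sigma=1.0)  # 50 of 2000 points = 2.5%
Phi = nystrom_features(X, X[sv], sigma=1.0)
w, b = fit_primal(Phi, y, gamma=100.0)
rmse = np.sqrt(np.mean((Phi @ w + b - y) ** 2))
print(f"training RMSE: {rmse:.3f}")
```

In this sketch the linear system solved in `fit_primal` has at most M + 1 unknowns regardless of the number of samples N, so the full N × N kernel matrix is never formed; only the N × M block against the working set is needed.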

Additional information

This work is supported by grants and projects from the Research Council K.U.Leuven (GOA-Mefisto 666, GOA-Ambiorics, several PhD/postdoc and fellow grants), the Flemish Government (FWO: PhD/postdoc grants, projects G.0211.05, G.0240.99, G.0407.02, G.0197.02, G.0141.03, G.0491.03, G.0120.03, G.0452.04, G.0499.04, ICCoS, ANMMM; AWI; IWT: PhD grants, GBOU (McKnow) Soft4s), the Belgian Federal Government (Belgian Federal Science Policy Office: IUAP V-22; PODO-II (CP/01/40)), the EU (FP5-Quprodis; ERNSI; Eureka 2063-Impact; Eureka 2419-FLiTE) and contract research/agreements (ISMC/IPCOS, Data4s, TML, Elia, LMS, IPCOS, Mastercard). J. Suykens is an associate professor and B. De Moor is a full professor at K.U.Leuven, Belgium. The scientific responsibility is assumed by the authors.

About this article

Cite this article

Espinoza, M., Suykens, J.A.K. & De Moor, B. Fixed-size Least Squares Support Vector Machines: A Large Scale Application in Electrical Load Forecasting. CMS 3, 113–129 (2006). https://doi.org/10.1007/s10287-005-0003-7

DOI: https://doi.org/10.1007/s10287-005-0003-7