Abstract

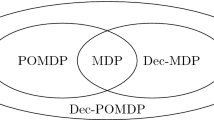

POMDPs and their decentralized multiagent counterparts, DEC-POMDPs, offer a rich framework for sequential decision making under uncertainty. Their high computational complexity, however, presents an important research challenge. One way to address the intractable memory requirements of current algorithms is to represent agent policies as finite-state controllers. Using this representation, we propose a new approach that formulates the problem as a nonlinear program (NLP), which defines an optimal policy of a desired size for each agent. This new formulation allows a wide range of powerful nonlinear programming algorithms to be used to solve POMDPs and DEC-POMDPs. Although solving the NLP optimally is often intractable, the results we obtain using an off-the-shelf optimization method are competitive with state-of-the-art POMDP algorithms and outperform state-of-the-art DEC-POMDP algorithms. Our approach is easy to implement and opens up promising research directions for solving POMDPs and DEC-POMDPs using nonlinear programming methods.
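To make the finite-state controller representation concrete, the following is a minimal sketch of how a fixed-size stochastic controller for a single-agent POMDP can be evaluated. It is not the authors' implementation. The array layouts, the function names `evaluate_controller` and `controller_objective`, and the toy two-state model are all assumptions introduced for illustration. The sketch holds the controller parameters fixed and iterates the Bellman equations to a fixed point. In an NLP formulation of the kind the abstract describes, the controller parameters and the values would presumably both be decision variables, with equations of this form acting as constraints and the value at the initial belief acting as the objective.

```python
def evaluate_controller(T, O, R, psi, eta, gamma, tol=1e-10, max_iter=100_000):
    """Iterate the Bellman equations of a fixed stochastic controller.

    Assumed (hypothetical) layouts:
      T[s][a][s2]      : P(s2 | s, a), state transition
      O[s2][a][o]      : P(o | s2, a), observation
      R[s][a]          : immediate reward
      psi[q][a]        : P(a | q), action selection at node q
      eta[q][a][o][q2] : P(q2 | q, a, o), node transition
    Returns V[q][s]: expected discounted value from node q and state s.
    """
    n_q, n_s = len(psi), len(T)
    n_a, n_o = len(R[0]), len(O[0][0])
    V = [[0.0] * n_s for _ in range(n_q)]
    for _ in range(max_iter):
        new_V = [[0.0] * n_s for _ in range(n_q)]
        for q in range(n_q):
            for s in range(n_s):
                total = 0.0
                for a in range(n_a):
                    # Expected value of the next (node, state) pair.
                    future = 0.0
                    for s2 in range(n_s):
                        for o in range(n_o):
                            for q2 in range(n_q):
                                future += (T[s][a][s2] * O[s2][a][o]
                                           * eta[q][a][o][q2] * V[q2][s2])
                    total += psi[q][a] * (R[s][a] + gamma * future)
                new_V[q][s] = total
        delta = max(abs(new_V[q][s] - V[q][s])
                    for q in range(n_q) for s in range(n_s))
        V = new_V
        if delta < tol:  # gamma < 1 makes this update a contraction
            break
    return V


def controller_objective(V, b0, q0=0):
    """Value of starting in node q0 under the initial belief b0."""
    return sum(b0[s] * V[q0][s] for s in range(len(b0)))


# Hypothetical toy model: 2 states, 2 actions, 2 observations.
T = [[[0.9, 0.1], [0.5, 0.5]],
     [[0.1, 0.9], [0.5, 0.5]]]
O = [[[0.8, 0.2], [0.5, 0.5]],
     [[0.2, 0.8], [0.5, 0.5]]]
R = [[1.0, 0.0],
     [0.0, 1.0]]

# A one-node controller that picks each action with probability 0.5.
psi = [[0.5, 0.5]]
eta = [[[[1.0], [1.0]], [[1.0], [1.0]]]]

V = evaluate_controller(T, O, R, psi, eta, gamma=0.9)
value_at_b0 = controller_objective(V, b0=[0.5, 0.5])
```

Fixing the number of nodes in `psi` and `eta` is what bounds the memory of the policy. An optimizer would search over those probabilities rather than holding them fixed as this sketch does.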



Cite this article

Amato, C., Bernstein, D.S. & Zilberstein, S. Optimizing fixed-size stochastic controllers for POMDPs and decentralized POMDPs. Auton Agent Multi-Agent Syst 21, 293–320 (2010). https://doi.org/10.1007/s10458-009-9103-z


DOI: https://doi.org/10.1007/s10458-009-9103-z