Abstract

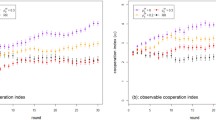

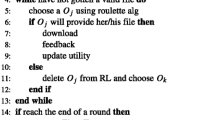

Game-theoretic analyses of incentive mechanisms assume that all players are rational. Because real-world participants may not be fully rational, such mechanisms may work less effectively in practice than in the idealized settings for which they were designed. It is therefore important to evaluate the robustness of incentive mechanisms against various types of agents with boundedly rational behavior. Such evaluations provide the information needed to choose mechanisms with the desired properties in real environments. In this article, we first propose a general robustness measure, inspired by research in evolutionary game theory, defined as the maximal percentage of invaders playing non-equilibrium strategies for which the remaining agents still sustain the desired equilibrium strategy. We then propose a simulation framework based on evolutionary dynamics to evaluate equilibrium robustness empirically. The framework is validated by comparing the simulated results with analytical predictions obtained from a modified simplex analysis approach. Finally, we implement the framework to evaluate the robustness of incentive mechanisms in reputation systems for electronic marketplaces. The results show that the evaluated mechanisms are highly robust against one non-equilibrium strategy but vulnerable to another, indicating the need to design more robust incentive mechanisms for reputation management in e-marketplaces.
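To make the robustness measure concrete, the following minimal sketch computes it for a toy symmetric two-strategy game. It is not the article's simulation framework: it replaces evolutionary dynamics with a static payoff comparison, and the function names, the payoff matrix, and the convention that strategy 0 is the equilibrium strategy and strategy 1 the invader strategy are all assumptions made for illustration.

```python
from fractions import Fraction


def expected_payoff(payoff, strategy, invader_fraction):
    """Expected payoff of `strategy` against a mixed population.

    A fraction `invader_fraction` of opponents plays the invader
    strategy (index 1); the rest play the equilibrium strategy (index 0).
    """
    return ((1 - invader_fraction) * payoff[strategy][0]
            + invader_fraction * payoff[strategy][1])


def robustness(payoff, population_size):
    """Largest invader fraction k/N at which strategy 0 still earns at
    least as much as strategy 1 (hypothetical static stand-in for the
    evolutionary simulation described in the abstract)."""
    best = Fraction(0)
    for k in range(population_size + 1):
        f = Fraction(k, population_size)
        if expected_payoff(payoff, 0, f) >= expected_payoff(payoff, 1, f):
            best = f
        else:
            # Payoffs are linear in f, so once strategy 0 falls behind
            # it does not recover for larger invader fractions.
            break
    return best


# Illustrative payoff matrix (assumed): payoff[i][j] is the payoff of
# playing strategy i against an opponent playing strategy j.
toy_game = [[4, 0], [3, 3]]
print(robustness(toy_game, 100))
```

In this toy game the equilibrium strategy earns 4(1 - f) and the invader strategy earns 3, so the equilibrium strategy is sustained for invader fractions up to one quarter of the population.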

Notes

For ease of analysis, we assume a single equilibrium for a mechanism. Our analysis can be extended to handle multiple equilibria.

When the game is symmetric and the positions of the \(n\) players are equivalent, a single population could represent all the players who interact with each other.

We do not consider the case of \(\eta <1\) in Eq. (7), because in that case \(f_1<\frac{1}{N}\). This value is very small for a large population size, so equilibrium strategies are very likely to be robust against one or a few invaders.

There are two considerations for choosing an appropriate value for the parameter \(\epsilon \): (1) \(\epsilon \) should be no less than its measurable accuracy \(\frac{1}{T}\); (2) it is better to use a smaller value for \(\epsilon \).

A symmetric game played in a single population can be treated as a game between two replicated populations.

We assume that the strategies of boundedly rational agents cannot evolve, as these agents are not rational.

Rational buyers may adopt various strategies, and we assume that they do not explore strategies that are not already present in the system.

The main purpose of the empirical evaluation is to verify whether the proposed simulation framework produces meaningful robustness evaluations of different incentive mechanisms against different attacks. By considering typical pure attacking strategies, we can (1) leverage analytical results from the literature such as [14] and (2) perform a qualitative analysis of the robustness of the incentive mechanisms. We can then easily check whether the simulation framework, as implemented in the empirical evaluation, gives the same results.

References

Aghassi, M., & Bertsimas, D. (2006). Robust game theory. Mathematical Programming: Series A and B, 107(1–2), 231–273.

Aiyer, A. S., Alvisi, L., & Clement, A. (2005). BAR fault tolerance for cooperative services. In Proceedings of ACM symposium on operating systems principles (SOSP), pp. 45–58.

Bergemann, D., & Morris, S. (2012). Robust mechanism design: The role of private information and higher order beliefs. Singapore and London: World Scientific Publishing and Imperial College Press.

Díaz, J., Goldberg, L. A., Mertzios, G. B., Richerby, D., Serna, M., & Spirakis, P. G. (2012). Approximating fixation probabilities in the generalized Moran process. In Proceedings of the ACM-SIAM symposium on discrete algorithms (SODA), pp. 954–960.

Eliaz, K. (2002). Fault tolerant implementation. Review of Economic Studies, 69(3), 589–610.

Halpern, J. Y. (2008). Beyond Nash equilibrium: Solution concepts for the 21st century. In Proceedings of the 27th ACM symposium on principles of distributed computing (PODC), pp. 1–10.

Halpern, J. Y., Pass, R., & Seeman, L. (2012). I’m doing as well as I can: Modelling people as rational finite automata. In Proceedings of the AAAI conference on artificial intelligence, pp. 1917–1923.

Helbing, D., & Yu, W. (2009). The outbreak of cooperation among success-driven individuals under noisy conditions. In Proceedings of the National Academy of Sciences, pp. 3680–3685.

Irissappane, A. A., Jiang, S., & Zhang, J. (2012). Towards a comprehensive testbed to evaluate the robustness of reputation systems against unfair rating attacks. In Proceedings of the twentieth conference on user modeling, adaption, and personalization (UMAP) workshop on trust, reputation, and user modelling.

Jackson, M. O. (2003). Mechanism design. Oxford, UK: Encyclopedia of Life Support Systems (EOLSS) Publisher.

Jiang, S. (2013). Towards the design of robust trust and reputation systems. In Proceedings of the twenty-third international conference on artificial intelligence (IJCAI), pp. 3225–3226.

Jøsang, A., Ismail, R., & Boyd, C. (2007). A survey of trust and reputation systems for online service provision. Decision Support Systems, 43(2), 795–825.

Jurca, R. (2007). Truthful reputation mechanisms for online systems. Ph.D. thesis, EPFL.

Kerr, R., & Cohen, R. (2009). Smart cheaters do prosper: Defeating trust and reputation systems. In AAMAS, pp. 993–1000.

Leyton-Brown, K. (2013). Chapter 7: Mechanism design and auctions in multiagent systems (2nd ed.). Cambridge, MA: MIT Press.

Moran, P. A. P. (1962). The statistical process of evolutionary theory. Oxford, UK: Clarendon Press.

Nowak, M. A. (2006). Evolutionary dynamics: Exploring the equations of life. Cambridge: Harvard University Press.

Nowak, M. A., Sasaki, A., Taylor, C., & Fudenberg, D. (2004). Emergence of cooperation and evolutionary stability in finite populations. Nature, 428, 646–650.

Papaioannou, T. G., & Stamoulis, G. D. (2010). A mechanism that provides incentives for truthful feedback in p2p systems. Electronic Commerce Research, 10(3–4), 331–362.

Ponsen, M., Tuyls, K., Kaisers, M., & Ramon, J. (2009). An evolutionary game-theoretic analysis of poker strategies. Entertainment Computing, 1(1), 39–45.

Raghunandan, M. A., & Subramanian, C. A. (2012). Sustaining cooperation on networks: An analytical study based on evolutionary game theory. In Proceedings of the International Conference on Autonomous Agents and Multiagent Systems (AAMAS), pp. 913–920.

Sandholm, W. H. (2012). Evolutionary game theory. Computational Complexity, 1, 1000–1029.

Smith, J. M. (1982). Evolution and the theory of games. Cambridge: Cambridge University Press.

Smith, J. M., & Price, G. R. (1973). The logic of animal conflict. Nature, 246(5427), 15–18.

Taylor, P. D. (1978). Evolutionarily stable strategies and game dynamics. Mathematical Biosciences, 6, 145–156.

Traulsen, A., Hauert, C., Silva, H. D., Nowak, M. A., & Sigmund, K. (2009). Exploration dynamics in evolutionary games. In Proceedings of National Academy of Sciences of the United States of America, pp. 709–712.

Tuyls, K., & Parsons, S. (2007). What evolutionary game theory tells us about multiagent learning. Artificial Intelligence, 171(7), 406–416.

Tuyls, K., Hoen, P. J., & Vanschoenwinkel, B. (2006). An evolutionary dynamical analysis of multi-agent learning in iterated games. Journal of Autonomous Agents and Multi-Agent Systems, 12(1), 115–153.

Ventura, D. (2012). Rational irrationality. In Proceedings of the AAAI spring symposium on game theory for security sustainability and health, pp. 83–90.

von Neumann, J., & Morgenstern, O. (1944). Theory of games and economic behaviour. Princeton: Princeton University Press.

Walsh, W. E., Das, R., Tesauro, G., & Kephart, J. O. (2002). Analyzing complex strategic interactions in multi-agent systems. In AAAI-03 workshop on game theoretic and decision theoretic agents, pp. 109–118.

Wellman, M. P. (1997). Evolutionary game theory. Cambridge: MIT Press.

Wellman, M. P. (2006). Methods for empirical game-theoretic analysis. In Proceedings of the twenty-first national conference on artificial intelligence (AAAI), pp. 1552–1553.

Witkowski, J., Seuken, S., & Parkes, D. C. (2011). Incentive-compatible escrow mechanisms. In Proceedings of the 25th conference on artificial intelligence (AAAI), pp. 751–757.

Wright, J. R., & Leyton-Brown, K. (2013). Behavioral game-theoretic models: A Bayesian framework for parameter analysis. In Proceedings of the 11th international conference on autonomous agents and multiagent systems (AAMAS), pp. 921–928.

Zhang, J., Cohen, R., & Larson, K. (2012). Combining trust modeling and mechanism design for promoting honesty in e-marketplaces. Computational Intelligence, 28(4), 549–578.

Cite this article

Liu, Y., Zhang, J., An, B. et al. A simulation framework for measuring robustness of incentive mechanisms and its implementation in reputation systems. Auton Agent Multi-Agent Syst 30, 581–600 (2016). https://doi.org/10.1007/s10458-015-9296-2

DOI: https://doi.org/10.1007/s10458-015-9296-2