Abstract

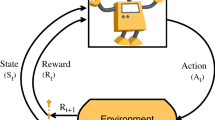

Artificial intelligence (AI) is increasingly used to build complex automated control systems, yet researchers are still working toward a completely autonomous system that resembles human beings. Researchers in AI see a strong connection between the learning patterns of humans and those of AI, and they have found that machine learning (ML) algorithms can be effective for building self-learning systems. ML is a sub-field of AI, within which reinforcement learning (RL) is the only available methodology that resembles the learning mechanism of the human brain. RL must therefore take a key role in the creation of autonomous robotic systems. In recent years, RL has been applied to many robotic platforms, including air-based, underwater, and land-based systems, and has had considerable success in solving complex tasks. This paper presents an overview of the application of reinforcement learning algorithms in robotics. The survey is organized into four parts: (1) the development of RL; (2) types of RL algorithms, such as actor-critic, deep RL, multi-agent RL, and human-centered algorithms; (3) applications of RL in robotics, grouped by platform (land-based, water-based, and air-based); and (4) the RL algorithms and mechanisms used in robotic applications. Finally, an open discussion raises a range of future research directions in robotics. The objective of this survey is to provide a reference point that steers future research in a more meaningful direction.

About this article

Cite this article

Singh, B., Kumar, R. & Singh, V.P. Reinforcement learning in robotic applications: a comprehensive survey. Artif Intell Rev 55, 945–990 (2022). https://doi.org/10.1007/s10462-021-09997-9

DOI: https://doi.org/10.1007/s10462-021-09997-9