Abstract

Graph neural networks (GNNs) are deep learning approaches that have recently been applied widely in both structural and non-structural scenarios because of their strong performance and interpretability. Among non-structural scenarios, joint textual and visual research topics such as visual question answering (VQA) are important and call for graph reasoning models. VQA aims to build a system that can answer questions about a given image and understand the semantic meaning underlying it. The critical issues in VQA are how to effectively extract visual and textual features and how to map both kinds of features into a common space. These issues strongly affect performance on goal-driven, reasoning, and scene classification subtasks. In addition, model performance is hard to compare because most existing datasets do not group instances into meaningful categories. With recent advances in graph-based models, considerable effort has been devoted to solving these problems. This study focuses on graph convolutional network (GCN) studies and recent datasets for VQA tasks. Specifically, we review current GCN-based studies for the VQA task. We also study 18 common and recent VQA datasets, though not all of them are discussed at the same level of detail. We further present a critical review of GCNs, datasets, and VQA challenges. Finally, this study will help researchers choose a suitable dataset for a particular VQA subtask, identify VQA challenges and the pros and cons of existing approaches, and further improve GCNs for VQA.
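The two technical ingredients named above, graph convolution over visual features and mapping visual and textual features into a common space, can be illustrated with a small NumPy sketch. It implements the standard GCN propagation rule of Kipf and Welling (2017), H' = ReLU(D^{-1/2}(A + I)D^{-1/2} H W), over a toy graph of image regions, then fuses the result with a question vector by mean pooling and an element-wise (Hadamard) product. Everything beyond the propagation rule is an assumption for illustration: the function names are hypothetical, the region features, weights, and question encoding are random stand-ins rather than outputs of a real detector or language model, the chain-shaped graph is hand-written, and the pooling-plus-Hadamard fusion is one simple choice, not the method of any particular surveyed model.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Return D^{-1/2} (A + I) D^{-1/2}, the renormalized adjacency of Kipf & Welling (2017)."""
    a_hat = adj + np.eye(adj.shape[0])             # add self-loops so each node keeps its own features
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))  # degrees are >= 1 thanks to the self-loops
    # Scale entry (i, j) by d_i^{-1/2} * d_j^{-1/2} via broadcasting.
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(adj_norm: np.ndarray, feats: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One GCN propagation step: ReLU(A_norm @ H @ W)."""
    return np.maximum(adj_norm @ feats @ weight, 0.0)


def fuse_with_question(node_feats: np.ndarray, question_vec: np.ndarray) -> np.ndarray:
    """Hypothetical fusion: mean-pool the region nodes, then take the
    element-wise (Hadamard) product with the question vector so both
    modalities end up in one joint space."""
    return node_feats.mean(axis=0) * question_vec


# Toy inputs (all assumptions): 4 image regions with 8-d features, a
# hand-written chain graph over the regions, and a 6-d question vector.
rng = np.random.default_rng(0)
regions = rng.normal(size=(4, 8))        # stand-in for detector region features
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
weight = rng.normal(size=(8, 6))         # learnable in a real model; random here
question = rng.normal(size=6)            # stand-in for an RNN question encoding

h = gcn_layer(normalize_adjacency(adj), regions, weight)
joint = fuse_with_question(h, question)
print(h.shape, joint.shape)  # (4, 6) (6,)
```

In a real VQA model the graph would typically be built or learned from the image (and possibly the question), and the fused vector would feed an answer classifier; both parts are omitted here to keep the sketch focused on the propagation and fusion steps.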

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.References

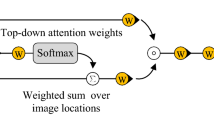

Anderson P, He X, Buehler C, Teney D, Johnson M, Gould S, Zhang L (2018) Bottom-up and top-down attention for image captioning and visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6077–6086

Andreas J, Rohrbach M, Darrell T, Klein D (2016) Neural module networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 39–48

Antol S, Agrawal A, Lu J, Mitchell M, Batra D, Zitnick CL, Parikh D (2015) Vqa: visual question answering. In: Proceedings of the IEEE international conference on computer vision, pp 2425–2433

Asif NA, Sarker Y, Chakrabortty RK, Ryan MJ, Ahamed MH, Saha DK, Tasneem Z (2021) Graph neural network: a comprehensive review on non-euclidean space. IEEE Access

Auer S, Bizer C, Kobilarov G, Lehmann J, Cyganiak R, Ives Z (2007) Dbpedia: a nucleus for a web of open data. The semantic web. Springer, Berlin, pp 722–735

Ben-Younes H, Cadene R, Cord M, Thome N (2017) Mutan: multimodal tucker fusion for visual question answering. In: Proceedings of the IEEE international conference on computer vision, pp 2612–2620

Bian T, Xiao X, Xu T, Zhao P, Huang W, Rong Y, Huang J (2020) Rumor detection on social media with bi-directional graph convolutional networks. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, no 01, pp 549–556

Biten AF, Tito R, Mafla A, Gomez L, Rusinol M, Valveny E, Karatzas D (2019) Scene text visual question answering. In: Proceedings of the IEEE/CVF International conference on computer vision, pp 4291–4301

Cadene R, Ben-Younes H, Cord M, Thome N (2019) Murel: multimodal relational reasoning for visual question answering. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1989–1998

Chen L, Wu L, Hong R, Zhang K, Wang M (2020) Revisiting graph based collaborative filtering: a linear residual graph convolutional network approach. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, no 01, pp 27–34

Cho K, van Merriënboer B, Bahdanau D, Bengio Y (2014) On the properties of neural machine translation: encoder–decoder approaches. In: Proceedings of SSST-8, eighth workshop on syntax, semantics and structure in statistical translation, pp 103–111

Chou SH, Chao WL, Lai WS, Sun M, Yang MH (2020) Visual question answering on 360deg images. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 1607–1616

Dai H, Li C, Coley CW, Dai B, Song L (2019) Retrosynthesis prediction with conditional graph logic network. In: Proceedings of the 33rd international conference on neural information processing systems, pp 8872–8882

Do K, Tran T, Venkatesh S (2019) Graph transformation policy network for chemical reaction prediction. In: Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, pp 750–760

Fukui A, Park DH, Yang D, Rohrbach A, Darrell T, Rohrbach M (2016) Multimodal compact bilinear pooling for visual question answering and visual grounding. In: Conference on empirical methods in natural language processing, pp 457–468, ACL

Gao D, Wang R, Shan S, Chen X (2020b) Learning to recognize visual concepts for visual question answering with structural label space. IEEE J Sel Top Signal Process 14(3):494–505

Gao D, Li K, Wang R, Shan S, Chen X (2020a). Multi-modal graph neural network for joint reasoning on vision and scene text. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12746–12756

Goceri E (2019) Analysis of deep networks with residual blocks and different activation functions: classification of skin diseases. In: 2019 ninth international conference on image processing theory, tools and applications (IPTA). IEEE, pp 1–6

Goyal Y, Khot T, Summers-Stay D, Batra D, Parikh D (2017) Making the v in vqa matter: elevating the role of image understanding in visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 6904–6913

Guo M, Chou E, Huang DA, Song S, Yeung S, Fei-Fei L (2018) Neural graph matching networks for fewshot 3d action recognition. In: Proceedings of the European conference on computer vision (ECCV), pp 653–669

Guo S, Lin Y, Feng N, Song C, Wan H (2019) Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, no 01, pp 922–929

Gupta D, Suman S, Ekbal A (2021) Hierarchical deep multi-modal network for medical visual question answering. Expert Syst Appl 164:113993

Gurari D, Li Q, Stangl AJ, Guo A, Lin C, Grauman K, Bigham JP (2018) Vizwiz grand challenge: answering visual questions from blind people. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3608–3617

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Hu Z, Wei J, Huang Q, Liang H, Zhang X, Liu Q (2020) Graph convolutional network for visual question answering based on fine-grained question representation. In: 2020 IEEE fifth international conference on data science in cyberspace (DSC), pp 218–224, IEEE

Hudson DA, Manning CD (2019) Gqa: a new dataset for real-world visual reasoning and compositional question answering. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6700–6709

Ilievski I, Yan S, Feng J (2016) A focused dynamic attention model for visual question answering. Preprint http://arxiv.org/abs/1604.01485

Johnson J, Hariharan B, Van Der Maaten L, Fei-Fei L, Lawrence Zitnick C, Girshick R (2017) Clevr: a diagnostic dataset for compositional language and elementary visual reasoning. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2901–2910

Kafle K, Price B, Cohen S, Kanan C (2018) Dvqa: understanding data visualizations via question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5648–5656

Kafle K, Kanan C (2017) Visual question answering: datasets, algorithms, and future challenges. Comput vis Image Underst 163:3–20

Kahou SE, Michalski V, Atkinson A, Kádár Á, Trischler A, Bengio Y (2018) FigureQA: an annotated figure dataset for visual reasoning. ICLR 2018

Kallooriyakath LS, Jithin MV, Bindu PV, Adith PP (2020) Visual question answering: methodologies and challenges. In: 2020 international conference on smart technologies in computing, electrical and electronics (ICSTCEE). IEEE, pp 402–407

Kembhavi A, Seo M, Schwenk D, Choi J, Farhadi A, Hajishirzi H (2017) Are you smarter than a sixth grader? Textbook question answering for multimodal machine comprehension. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4999–5007

Kim J, On KW, Lim W, Kim J, Ha J, Zhang B (2017) Hadamard product for low-rank bilinear pooling. In: proceeding of international conference on learning representations

Kim ES, Kang WY, On KW, Heo YJ, Zhang BT (2020) Hypergraph attention networks for multimodal learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 14581–14590

Kipf TN, Welling M (2017) Semi-supervised classification with graph convolutional networks Preprint http://arxiv.org/abs/1609.02907. ICLR 2017

Krishna R, Zhu Y, Groth O, Johnson J, Hata K, Kravitz J, Fei-Fei L (2017) Visual genome: connecting language and vision using crowdsourced dense image annotations. Int J Comput vis 123(1):32–73

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105

Kumar A, Irsoy O, Ondruska P, Iyyer M, Bradbury J, Gulrajani I, Socher R (2016) Ask me anything: dynamic memory networks for natural language processing. In: International conference on machine learning. PMLR, pp 1378–1387

Malinowski M, Rohrbach M, Fritz M (2015) Ask your neurons: a neural-based approach to answering questions about images. In: Proceedings of the IEEE international conference on computer vision, pp 1–9

Manmadhan S, Kovoor BC (2020) Visual question answering: a state-of-the-art review. Artif Intell Rev 53(8):5705–5745

Marino K, Rastegari M, Farhadi A, Mottaghi R (2019) Ok-vqa: a visual question answering benchmark requiring external knowledge. In: Proceedings of the IEEE/cvf conference on computer vision and pattern recognition, pp 3195–3204

Mathew M, Karatzas D, Jawahar CV (2021) DocVQA: a dataset for vqa on document images. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 2200–2209

Mishra A, Shekhar S, Singh AK, Chakraborty A (2019) OCR-VQA: visual question answering by reading text in images. In: 2019 international conference on document analysis and recognition (ICDAR), Sydney, NSW, pp 947–952. https://doi.org/10.1109/ICDAR.2019.00156

Narasimhan M, Lazebnik S, Schwing AG (2018) Out of the box: reasoning with graph convolution nets for factual visual question answering. In: Proceedings of the 32nd international conference on neural information processing systems, pp 2659–2670

Nguyen TH, Grishman R (2018) Graph convolutional networks with argument-aware pooling for event detection. In: Thirty-second AAAI conference on artificial intelligence.

Noh H, Han B (2016) Training recurrent answering units with joint loss minimization for vqa. Preprint http://arxiv.org/abs/1606.03647

Norcliffe-Brown W, Vafeias E, Parisot S (2018) Learning conditioned graph structures for interpretable visual question answering. In: Proceedings of the 32nd international conference on neural information processing systems, pp 8344–8353

Pei X, Yu L, Tian S (2020) AMalNet: a deep learning framework based on graph convolutional networks for malware detection. Comput Secur 93:101792

Ren S, He K, Girshick R, Sun J (2016) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 39(6):1137–1149

Ren M, Kiros R, Zemel RS (2015) Exploring models and data for image question answering. In: Proceedings of the 28th international conference on neural information processing systems, vol 2, pp 2953–2961

Schlichtkrull M, Kipf TN, Bloem P, Van Den Berg R, Titov I, Welling M (2018) Modeling relational data with graph convolutional networks. In: European semantic web conference. Springer, Cham. pp 593–607

Shah S, Mishra A, Yadati N, Talukdar PP (2019) Kvqa: knowledge-aware visual question answering. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, no 01, pp 8876–8884

Shih KJ, Singh S, Hoiem D (2016) Where to look: focus regions for visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4613–4621

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. Preprint http://arxiv.org/abs/1409.1556

Singh AK, Mishra A, Shekhar S, Chakraborty A (2019a) From strings to things: knowledge-enabled VQA model that can read and reason. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 4602–4612

Singh A, Natarajan V, Shah M, Jiang Y, Chen X, Batra D, Rohrbach M (2019b) Towards vqa models that can read. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8317–8326

Speer R, Chin J, Havasi C (2017) Conceptnet 5.5: an open multilingual graph of general knowledge. In: Proceedings of the AAAI conference on artificial intelligence, vol 31, no 1

Sundermeyer M, Schlüter R, Ney H (2012) LSTM neural networks for language modeling. In: Thirteenth annual conference of the international speech communication association

Tandon N, De Melo G, Suchanek F, Weikum G (2014) Webchild: harvesting and organizing commonsense knowledge from the web. In: Proceedings of the 7th ACM international conference on web search and data mining, pp 523–532

Teney D, Liu L, van Den Hengel A (2017) Graph-structured representations for visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Trott A, Xiong C, Socher R (2018) Interpretable counting for visual question answering. In: International conference on learning representations

Wang Z, Luo N, Zhou P (2020) GuardHealth: Blockchain empowered secure data management and graph convolutional network enabled anomaly detection in smart healthcare. J Parallel Distrib Comput 142:1–12

Wang P, Wu Q, Shen C, Dick A, van den Hengel A (2018a) FVQA: fact-based visual question answering. IEEE Trans Pattern Anal Mach Intell 40(10):2413–2427

Wang X, Ye Y, Gupta A (2018b) Zero-shot recognition via semantic embeddings and knowledge graphs. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6857–6866

Wang Z, Lv Q, Lan X, Zhang Y (2018c) Cross-lingual knowledge graph alignment via graph convolutional networks. In: Proceedings of the 2018c conference on empirical methods in natural language processing, pp 349–357

Wang Y, Yin H, Chen H, Wo T, Xu J, Zheng K (2019) Origin-destination matrix prediction via graph convolution: a new perspective of passenger demand modeling. In: Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, pp 1227–1235

Wu Z, Palmer M (1994) Verbs semantics and lexical selection. In: Proceedings of 32nd annual meeting on association for computational linguistic, pp 133–138

Wu Q, Wang P, Shen C, Dick A, Van Den Hengel A (2016) Ask me anything: free-form visual question answering based on knowledge from external sources. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4622–4630

Wu C, Liu J, Wang X, Dong X (2018) Object-difference attention: a simple relational attention for visual question answering. In: Proceedings of the 26th ACM international conference on multimedia, pp 519–527

Wu Y, Lian D, Xu Y, Wu L, Chen E (2020a) Graph convolutional networks with markov random field reasoning for social spammer detection. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, no 01, pp 1054–1061

Wu Z, Pan S, Chen F, Long G, Zhang C, Philip SY (2020b) A comprehensive survey on graph neural networks. IEEE Trans Neural Netw Learn Syst 32(1):4–24

Xu X, Wang T, Yang Y, Hanjalic A, Shen HT (2020) Radial graph convolutional network for visual question generation. IEEE Trans Neural Netw Learn Syst 32(4):1654–1667

Yang Z, He X, Gao J, Deng L, Smola A (2016) Stacked attention networks for image question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 21–29

Yang J, Lu J, Lee S, Batra D, Parikh D (2018). Graph r-cnn for scene graph generation. In: Proceedings of the European conference on computer vision (ECCV), pp 670–685

Yang Z, Qin Z, Yu J, Hu Y (2019) Scene graph reasoning with prior visual relationship for visual question answering. Preprint http://arxiv.org/abs/1812.09681

Yao L, Mao C, Luo Y (2019) Graph convolutional networks for text classification. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, no 01, pp 7370–7377

Yu J, Zhu Z, Wang Y, Zhang W, Hu Y, Tan J (2020) Cross-modal knowledge reasoning for knowledge-based visual question answering. Pattern Recognit 108:107563

Zhang Y, Hare J, Prügel-Bennett A (2018a) Learning to count objects in natural images for visual question answering. In: International conference on learning representations.

Zhang Y, Qi P, Manning CD (2018b) Graph convolution over pruned dependency trees improves relation extraction. In: Proceedings of the 2018b conference on empirical methods in natural language processing, pp 2205–2215

Zhang J, Shi X, Zhao S, King I (2019a) STAR-GCN: stacked and reconstructed graph convolutional networks for recommender systems. In IJCAI

Zhang S, Tong H, Xu J, Maciejewski R (2019b) Graph convolutional networks: a comprehensive review. Comput Soc Netw 6(1):1–23

Zhou X, Shen F, Liu L, Liu W, Nie L, Yang Y, Shen HT (2020) Graph convolutional network hashing. IEEE Trans Cybern 50(4):1460–1472

Zhu Y, Groth O, Bernstein M, Fei-Fei L (2016) Visual7w: grounded question answering in images. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4995–5004

Zhu X, Mao Z, Chen Z, Li Y, Wang Z, Wang B (2021) Object-difference drived graph convolutional networks for visual question answering. Multimed Tools Appl 80(11):16247–16265

Zitnik M, Agrawal M, Leskovec J (2018) Modeling polypharmacy side effects with convolutional networks. Bioinformatics 34(13):i457–i466

Acknowledgements

We thank the anonymous reviewers for their valuable comments and suggestions. This work is supported by the National Key R&D Program of China No. 2017YFB1002101 and the Joint Advanced Research Foundation of China Electronics Technology Group Corporation No. 6141B08010102.


Appendices

Appendix 1

| Dataset | Link |
|---|---|
| VISUAL7W | |
| CLEVR | |
| VISUAL GENOME | |
| TDIUC | |
| TQA | |
| VQA 2.0 | |
| FIGUREQA | |
| FVQA | www.metatext.io/datasets/fact-based-visual-question-answering-(fvqa) |
| VIZWIZ | |
| DVQA | |
| VQA-MED | |
| OCR-VQA | |
| OK-VQA | |
| ST-VQA | |
| GQA | |
| TEXTVQA | |
| VQA 360° | |
| DOCVQA | |

Appendix 2


About this article

Cite this article

Yusuf, A.A., Chong, F. & Xianling, M. An analysis of graph convolutional networks and recent datasets for visual question answering. Artif Intell Rev 55, 6277–6300 (2022). https://doi.org/10.1007/s10462-022-10151-2


DOI: https://doi.org/10.1007/s10462-022-10151-2