Abstract

Several deep learning algorithms have emerged for the automatic classification of environmental sounds. However, the scarcity of labeled training data limits their performance. Data augmentation is one way to address this problem. Generative Adversarial Networks (GANs) have been used successfully to generate synthetic speech and musical instrument sounds for classification applications. In this paper, we present a method for GAN-based augmentation in the context of environmental sound classification (ESC). We introduce an architecture named EnvGAN for the adversarial generation of environmental sounds. To validate the quality of the generated sounds, we conducted subjective and objective evaluations. The results indicate that EnvGAN can produce samples from various domains with acceptable quality. We applied this augmentation technique to three benchmark ESC datasets (ESC-10, UrbanSound8K, and the TUT Urban Acoustic Scenes development dataset) and used the augmented data to train a CNN-based classifier. Experimental results show that the new augmentation method outperforms a baseline with no augmentation by a relatively wide margin (10–12% on ESC-10, 5–7% on UrbanSound8K, and 4–5% on TUT). In particular, the GAN-based approach reduces the confusion between all pairs of classes on UrbanSound8K, which suggests that the proposed method is especially suitable for handling class-imbalanced datasets.
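
As a rough illustration of how GAN-based augmentation might feed a classifier's training set, the sketch below tops up under-represented classes with synthetic clips until every class matches the largest one. This balancing policy is an assumption made for illustration, not the procedure reported in the paper. The names `synthetic_quota`, `placeholder_generator`, and `augment` are hypothetical, and the generator is a white-noise stand-in, not EnvGAN.

```python
import numpy as np


def synthetic_quota(labels, num_classes):
    """Synthetic clips needed per class to match the largest class."""
    counts = np.bincount(labels, minlength=num_classes)
    return counts.max() - counts


def placeholder_generator(class_id, n, clip_len, rng):
    """Hypothetical stand-in for a trained class-conditional generator.

    Returns white noise in [-1, 1); a real generator would return
    synthetic audio for the requested class.
    """
    return rng.uniform(-1.0, 1.0, size=(n, clip_len)).astype(np.float32)


def augment(clips, labels, num_classes, generate=placeholder_generator, seed=0):
    """Append generated clips so that all classes have equal counts."""
    rng = np.random.default_rng(seed)
    quota = synthetic_quota(labels, num_classes)
    all_clips, all_labels = [clips], [labels]
    for class_id, n in enumerate(quota):
        if n > 0:
            all_clips.append(generate(class_id, int(n), clips.shape[1], rng))
            all_labels.append(np.full(int(n), class_id, dtype=labels.dtype))
    return np.concatenate(all_clips), np.concatenate(all_labels)


# Toy imbalanced set: 5, 2, and 1 one-second clips at an assumed 16 kHz rate.
toy_rng = np.random.default_rng(1)
labels = np.array([0] * 5 + [1] * 2 + [2] * 1)
clips = toy_rng.uniform(-1.0, 1.0, size=(len(labels), 16000)).astype(np.float32)

aug_clips, aug_labels = augment(clips, labels, num_classes=3)
print(np.bincount(aug_labels))  # per-class counts after augmentation
```

In practice the augmented arrays would be converted to the classifier's input features before training; the amount of synthetic data per class is a tunable choice rather than something fixed by this sketch.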

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.References

Ali-Gombe A, Elyan E (2019) MFC-GAN: class-imbalanced dataset classification using Multiple Fake Class Generative Adversarial Network. Neurocomputing 361:212–221. https://doi.org/10.1016/j.neucom.2019.06.043

Arjovsky M, Chintala S, Bottou L (2017) Wasserstein generative adversarial networks. In: Precup D, Teh YW (eds) Proceedings of the 34th international conference on machine learning, PMLR, proceedings of machine learning research, vol 70, pp 214–223. https://proceedings.mlr.press/v70/arjovsky17a.html

Aytar Y, Vondrick C, Torralba A (2016) Soundnet: learning sound representations from unlabeled video. Advances in neural information processing systems. pp 892–900

Berthelot D, Schumm T, Metz L (2017) BEGAN: boundary equilibrium generative adversarial networks. ArXiv:1703.10717

Boddapati V, Petef A, Rasmusson J, Lundberg L (2017) Classifying environmental sounds using image recognition networks. Procedia Comput Sci 112:2048–2056

Bousmalis K, Silberman N, Dohan D, Erhan D, Krishnan D (2016) Unsupervised pixel-level domain adaptation with generative adversarial networks. IEEE conference on computer vision and pattern recognition

Chandna P, Blaauw M, Bonada J, Gmez E (2019) Wgansing: a multi-voice singing voice synthesizer based on the wasserstein-gan. In: 2019 27th European signal processing conference (EUSIPCO), pp 1–5. https://doi.org/10.23919/EUSIPCO.2019.8903099

Chen H et al (2019) Integrating the data augmentation scheme with various classifiers for acoustic scene modeling. 1907.06639

Chollet F (2015) Keras. https://github.com/fchollet/keras

Chu SM, Narayanan SS, Kuo CCJ (2009) Environmental sound recognition with time-frequency audio features. IEEE Trans Audio Speech Lang Process 17(6):1142–1158

Cristani M, Bicego M, Murino V (2007) Audio-visual event recognition in surveillance video sequences. IEEE Trans Multimed 9(2):257–267

Cui X, Goel V, Kingsbury B (2015) Data augmentation for deep neural network acoustic modeling. IEEE/ACM Trans Audio Speech Lang Process 23(9):1469–1477. https://doi.org/10.1109/TASLP.2015.2438544

Demir F, Abdullah D, Sengur A (2020) A new deep CNN model for environmental sound classification. IEEE Access. https://doi.org/10.1109/ACCESS.2020.2984903

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: Computer vision and pattern recognition, 2009. IEEE conference on CVPR 2009. IEEE, pp 248–255. https://ieeexplore.ieee.org/abstract/document/5206848/

Devries T, Taylor GW (2017) Dataset augmentation in feature space. CoRR abs/1702.05538. http://arxiv.org/abs/1702.05538, 1702.05538

Donahue C, McAuley J, Puckette M (2019) Adversarial Audio Synthesis. In: ICLR. arXiv:1802.04208

Doom 9’s Forum (2020) https://forum.doom9.org/showthread.php?t=165807

Duan S, Zhang J, Roe P, Towsey M (2014) A survey of tagging techniques for music, speech and environmental sound. Artif Intell Rev 42(4):637–661

Engel JH, Agrawal KK, Chen S, Gulrajani I, Donahue C, Roberts A (2019) Gansynth: Adversarial neural audio synthesis. In: 7th international conference on learning representations, ICLR 2019, New Orleans, LA, USA, May 6–9, 2019, OpenReview.net. https://openreview.net/forum?id=H1xQVn09FX

Font F, Roma G, Serra X (2013) Freesound technical demo. In: Proceedings of the ACM international conference on multimedia, pp 411–412

Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. Adv Neural Inf Process Syst 27:2672–2680

Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville A (2017) Improved training of wasserstein gans. In: Proceedings of the 31st international conference on neural information processing systems, Curran Associates Inc., Red Hook, NY, USA, NIPS’17, pp 5769–5779

Gygi B et al (2007) Similarity and categorization of environmental sounds. Percept Psychophys 69(6):839–855. https://doi.org/10.3758/BF03193921

Han Y, Lee K (2016) Convolutional neural network and multiple-width frequency-delta data augmentation for acoustic scene classification DCASE 2016 Challenge. TechRep

Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S (2017) Gans trained by a two time-scale update rule converge to a local nash equilibrium. In: Proceedings of the 31st international conference on neural information processing systems, Curran Associates Inc., Red Hook, NY, USA, NIPS’17, pp 6629–6640

Humphrey EJ, Bello JP, LeCun Y (2012) Moving beyond feature design: deep architectures and automatic feature learning in music informatics. In: International society for music information retrieval conference, pp 403–408

Jaitley N, Hinton GE (2013) Vocal tract length perturbation (VTLP) improves speech recognition. In: Proceedings ICML workshop on deep learning for audio, speech and language

Kim HY, Yoon JW, Cheon SJ, Kang WH, Kim NS (2021) A multi-resolution approach to gan-based speech enhancement. Appl Sci. https://doi.org/10.3390/app11020721

Ko T, Peddinti V, Povey D, Khudanpur S (2015) Audio augmentation for speech recognition. In: Proceedings of the annual conference of the international speech communication association, INTERSPEECH pp 3586–3589

Koenig M (2019) Background sounds | free sound effects | sound clips | sound bites. [online] soundbible.com. https://soundbible.com/tags-background.html. Accessed 7, 2019

Kong Q et al (2019) Acoustic scene generation with conditional samplernn. In: IEEE international conference on acoustics, speech and signal processing, ICASSP 2019, Brighton, United Kingdom, May 12-17, 2019, IEEE, pp 925–929. https://doi.org/10.1109/ICASSP.2019.8683727

Lecun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Lee CY, Toffy A, Jung GJ, Han WJ (2018) Conditional WaveGAN. arXiv:1809.10636

Li S, Sung Y (2021) Inco-gan: variable-length music generation method based on inception model-based conditional gan. Mathematics. https://doi.org/10.3390/math9040387

Li TLH, Chan AB (2011) Genre classification and the invariance of MFCC features to key and tempo”. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and. Lecture Notes in Bioinformatics) 6523: 317–327

Liu JY, Chen YH, Yeh YC, Yang YH (2020) Unconditional audio generation with generative adversarial networks and cycle regularization. In: Proceeding of the Interspeech 2020, pp 1997–2001. https://doi.org/10.21437/Interspeech.2020-1137

Madhu A, Kumaraswamy S (2019) Data augmentation using generative adversarial network for environmental sound classification. In: 2019 27th European signal processing conference (EUSIPCO) pp 1–5

Mao X, Li Q, Xie H, Lau RY, Wang Z (2016) Multi-class generative adversarial networks with the L2 loss function. CoRR abs/1611.04076. http://arxiv.org/abs/1611.04076

McFee B, Raffel C, Liang D, Ellis DP, McVicar M, Battenberg E, Nieto O (2015) librosa: Audio and music signal analysis in python. In: Proceedings of the 14th python in science conference

McHugh ML (2012) Interrater reliability: the kappa statistic. Biochem Med 22(3):276–282. https://doi.org/10.11613/bm.2012.031

Mesaros A, Heittola T, Virtanen T (2018) A multi-device dataset for urban acoustic scene classification. DCASE2018 Workshop

Mirza M, Osindero S (2014) Conditional generative adversarial nets. CoRR abs/1411.1784. http://arxiv.org/abs/1411.1784

Mun S, Park S, Han DK, Ko H (2017a) Generative adversarial network based acoustic scene training set augmentation and selection using SVM hyper-plane. In: Proceedings of the detection and classification of acoustic scenes and events 2017 workshop (DCASE2017), pp 93–102

Mun S, Shon S, Kim W, Han DK (2017b) Deep neural network based learning and transferring mid-level audio features for acoustic scene classification. In: 2017 IEEE international conference on acoustics, speech, and signal processing, ICASSP 2017—proceedings, Institute of Electrical and Electronics Engineers Inc., pp 796–800, 10.1109/ICASSP.2017.7952265, 2017 IEEE international conference on acoustics, speech, and signal processing, ICASSP 2017 conference date: 05-03-2017 Through 09-03-2017

Mushtaq Z, Su SF (2020) Environmental sound classification using a regularized deep convolutional neural network with data augmentation. Appl Acoust 167:107389–107389

Mushtaq Z, Su SF, Tran QV (2021) Spectral images based environmental sound classification using CNN with meaningful data augmentation. Appl Acoust 172:107581

Odena A, Olah C, Shlens J (2017) Conditional image synthesis with auxiliary classifier gans. http://arxiv.org/abs/1610.09585

Pan Z et al (2019) Recent progress on generative adversarial networks (gans): a survey. IEEE Access 7:36322–36333. https://doi.org/10.1109/ACCESS.2019.2905015

Parascandolo G, Huttunen H, Virtanen T (2016) Recurrent neural networks for polyphonic sound event detection in real life recordings. In: International conference on acoustics, speech and signal processing (ICASSP), pp 6440–6444

Piczak KJ (2015a) Environmental sound classification with convolutional neural networks. In: 25th international workshop on machine learning for signal processing, pp 1–6

Piczak KJ (2015b) ESC: dataset for environmental sound classification. ACM international conference on multimedia, pp 1015–1018

Radford A, Metz L, Chintala S (2016) Unsupervised representation learning with deep convolutional generative adversarial networks. In: Bengio Y, LeCun Y (eds) 4th International conference on learning representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, conference track proceedings. http://arxiv.org/abs/1511.06434

Ragab MG, Abdulkadir SJ, Aziz N, Alhussian H, Bala A, Alqushaibi A (2021) An ensemble one dimensional convolutional neural network with Bayesian optimization for environmental sound classification. Appl Sci. https://doi.org/10.3390/app11104660

Salamon J, Bello JP (2017) Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process Lett 24(3):279–283

Salamon J, Jacoby C, Bello JP (2014) A dataset and taxonomy for urban sound research. In: 22nd ACM international conference on multimedia (ACM-MM14)

Salimans T, Goodfellow I, Zaremba W, Cheung V, Radford A, Chen X (2016) Improved techniques for training gans. In: Lee D, Sugiyama M, Luxburg U, Guyon I, Garnett R (eds) Advances in neural information processing systems, Curran Associates, Inc., vol 29. https://proceedings.neurips.cc/paper/2016/file/8a3363abe792db2d8761d6403605aeb7-Paper.pdf

Sharma J, Granmo OC, Goodwin M (2019) Environment sound classification using multiple feature channels and attention based deep convolutional neural network. arXiv:1908.11219

SoX (2020) http://sox.sourceforge.net/sox.html

Stowell D, Plumbley MD (2014) Automatic large-scale classification of bird sounds is strongly improved by unsupervised feature learning. PeerJ 2:e488

Streijl RC, Winkler S, Hands DS (2014) Mean opinion score (MOS) revisited: methods and applications, limitations and alternatives. Multimed Syst 22:213–227

Szegedy C et al (2016) Rethinking the inception architecture for computer vision. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), IEEE, pp 2818–2826. https://doi.org/10.1109/CVPR.2016.308. http://ieeexplore.ieee.org/document/7780677/

Tang B, Li Y, Li X, Xu L, Yan Y, Yang Q (2019) Deep CNN framework for environmental sound classification using weighting filters. In: 2019 IEEE international conference on mechatronics and automation (ICMA), pp 2297–2302. https://doi.org/10.1109/ICMA.2019.8816567

Thanh-Tung H, Tran T, Venkatesh S (2019) Improving generalization and stability of generative adversarial networks. In: 7th international conference on learning representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019, OpenReview.net. https://openreview.net/forum?id=ByxPYjC5KQ

Tharwat A (2020) Classification assessment methods. Applied computing and informatics ahead-of-print(ahead-of-print):1–13. https://dx.doi.org/10.1016/j.aci.2018.08.003

Tokozume Y, Ushiku Y, Harada T (2018) Learning from between-class examples for deep sound recognition. 1711.10282

Virtanen T, Helen M (2007) Probabilistic model based similarity measures for audio query-by-example. IEEE workshop on applications of signal processing to audio and acoustics, pp 82–85

Xia X et al (2019) Auxiliary classifier generative adversarial network with soft labels in imbalanced acoustic event detection. IEEE Trans Multimed 21(6):1359–1371. https://doi.org/10.1109/TMM.2018.2879750

Yang F, Wang Z, Li J, Xia R, Yan Y (2020) Improving generative adversarial networks for speech enhancement through regularization of latent representations. Speech Commun 118(C):1–9. https://doi.org/10.1016/j.specom.2020.02.001

Zhang X, Zou Y, Shi W (2017) Dilated convolution neural network with leakyrelu for environmental sound classification. In: 2017 22nd international conference on digital signal processing (DSP), pp 1–5. https://doi.org/10.1109/ICDSP.2017.8096153

Zhang Z, Xu S, Cao S, Zhang S (2018) Deep convolutional neural network with mixup for environmental sound classification. arXiv:1808.08405

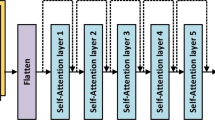

Zhang Z, Xu S, Zhang S, Qiao T, Cao S (2019) Learning attentive representations for environmental sound classification. IEEE Access 7:130327–130339

Zhang C, Zhu L, Zhang S, Yu W (2020) PAC-GAN: an effective pose augmentation scheme for unsupervised cross-view person re-identification. Neurocomputing 387:22–39. https://doi.org/10.1016/j.neucom.2019.12.094


Ethics declarations

Conflict of Interest

The authors declare that they have no known competing financial, general and institutional interests or personal relationships that could have appeared to influence the work reported in this paper.


About this article

Cite this article

Madhu, A., K., S. EnvGAN: a GAN-based augmentation to improve environmental sound classification. Artif Intell Rev 55, 6301–6320 (2022). https://doi.org/10.1007/s10462-022-10153-0


DOI: https://doi.org/10.1007/s10462-022-10153-0