Abstract

With the growth of real-time and latency-sensitive applications in the Internet of Everything (IoE), service placement cannot rely on cloud computing alone. In response to this need, several computing paradigms, such as Mobile Edge Computing (MEC), Ultra-dense Edge Computing (UDEC), and Fog Computing (FC), have emerged. These paradigms aim to bring computing resources closer to the end user, reducing delay and wasted backhaul bandwidth. One of the major challenges of these new paradigms is the limitation of edge resources and the dependencies between different service parts. Some solutions, such as microservice architecture, allow different parts of an application to be processed simultaneously. However, due to the ever-increasing number of devices and incoming tasks, the problem of service placement cannot be solved today by relying on rule-based deterministic solutions. In such a dynamic and complex environment, many factors can influence the solution. Optimization and Machine Learning (ML) are two well-known tools that have been used most for service placement. Both methods typically use a cost function. Optimization is usually a way to define the difference between the predicted and actual value, while ML aims to minimize the cost function. In simpler terms, ML aims to minimize the gap between prediction and reality based on historical data. Instead of relying on explicit rules, ML uses prediction based on historical data. Due to the NP-hard nature of the service placement problem, classical optimization methods are not sufficient. Instead, metaheuristic and heuristic methods are widely used. In addition, the ever-changing big data in IoE environments requires the use of specific ML methods. In this systematic review, we present a taxonomy of ML methods for the service placement problem. Our findings show that 96% of applications use a distributed microservice architecture. 
Also, 51% of the studies are based on on-demand resource estimation methods and 81% are multi-objective. This article also outlines open questions and future research trends. Our literature review shows that one of the most important trends in ML is reinforcement learning, with a 56% share of research.

1 Introduction

Service placement is the selection of an appropriate execution zone for service instances. In this regard, the service instances are mounted on the underlying computing resources of the network (Taheri-abed et al. 2023). This is done according to various performance criteria such as Quality of Service (QoS), energy consumption, latency, and availability. Today, with the advent of the Internet of Everything (IoE), most services include latency-sensitive and computation-sensitive components (Zabihi et al. 2023). Some of these important services include virtual reality, augmented reality, healthcare, museum monitoring, smart transportation, weather monitoring, e-health, and the Internet of Vehicles (IoV) (Gasmi et al. 2022). A huge amount of data is expected to be collected by sensors and connected objects; traditionally, such data has been processed centrally in large data centers. These new services cause more latency and energy consumption than before (Alenazi et al. 2022). The Mobile Cloud Computing (MCC) paradigm that emerged nearly two decades ago is not sufficient on its own to serve these applications. The most important problem with MCC is the long delay caused by the remoteness of the cloud data centers from the end devices. In response to this need, several computing paradigms have emerged, such as Mobile Edge Computing (MEC), Ultra-dense Edge Computing (UDEC), and Fog Computing (FC). The purpose of these paradigms is to bring computing resources closer to the end user and thus reduce delay and wasted backhaul bandwidth.

Service placement is based on various parameters such as functional layout, service type, workload type, and application model. There are also other restrictions on resource management. The most important constraints are interaction method, resource type, resource orientation, and resource estimation. In addition, since IoT services experience workload fluctuations over time, it is important to automatically provision a sufficient number of resources. In this regard, overprovisioning/underprovisioning should be avoided to meet QoS (Etemadi et al. 2020). Also, if the edge resources are not enough for processing, we need to offload the service to fog devices. This, in turn, raises new issues. Among the most important issues of offloading, we can mention offloading approaches, placement strategy, and workload prediction (Shahraki et al. 2023). In addition to the limitation of edge resources, some services may have interdependencies with each other. This makes service placement more complicated. On the other hand, new applications have a distributed microservice architecture (Shahraki et al. 2023). As an advantage, the microservice allows the simultaneous processing of several parts of an application.

1.1 Motivation

A network service can consist of multiple functions that are placed in a specific order as a Service Function Chain (SFC). Service placement is the selection of appropriate execution zones for SFCs. One of the key challenges in service placement is when an application service is to be delivered in partnership with edge and cloud servers. Today, one of the most important technologies used for SFC is the use of containers. This is a well-known way to virtualize application services. Some well-known container technologies are Docker, Kubernetes, and Rocket (John 2023). The main purpose of service placement is to maintain “service chaining,” where multiple services follow a hierarchical order depending on their predefined goals. For example, Netflix may distribute key elements of its service, such as parental controls, traffic encryption, and acceleration, in a particular order (Mohan et al. 2016).

Figure 1 shows a service chain with two separate services in the edge-fog-cloud infrastructure consisting of two Edge Providers (EPs). Suppose one of the EPs has three servers and the other has two servers. Presumably, because EP1 is closer to the user, it can provide better QoS than EP2. Suppose the price of EP1 is higher than that of EP2. This chain ends at the fog platform, which consists of two Fog Servers (FSs). There are several combinations of service placement. Figure 1 shows two of the possible chains, SFC1 and SFC2. Because SFC1 provides both services on the EP1 platform, it has lower latency but higher cost. In contrast, because SFC2 distributes the services over both providers, it has higher experienced latency but lower cost. The reason for the lower cost of SFC2 is that it allows the program owner to limit its placement costs. However, SFC2 suffers from a lack of flexibility to adapt to changing user requirements due to the high management costs of combining two different providers.
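The trade-off between SFC1 and SFC2 can be made concrete with a small sketch. The latency and price figures below, the inter-provider hop penalty, and the function name `evaluate_chain` are all invented for illustration; only the qualitative relationship (EP1 is closer and more expensive than EP2) comes from the description above.

```python
# Hypothetical per-service latency (ms) and price (cost units) for each provider.
PROVIDERS = {
    "EP1": {"latency_ms": 5.0, "price": 10.0},  # closer to the user, more expensive
    "EP2": {"latency_ms": 12.0, "price": 4.0},  # farther away, cheaper
}
# Assumed extra delay when two consecutive services sit on different providers.
INTER_PROVIDER_HOP_MS = 3.0


def evaluate_chain(placement):
    """Return (total latency, total cost) for a chain given as a tuple of provider names."""
    latency = sum(PROVIDERS[p]["latency_ms"] for p in placement)
    cost = sum(PROVIDERS[p]["price"] for p in placement)
    hops = sum(1 for a, b in zip(placement, placement[1:]) if a != b)
    return latency + hops * INTER_PROVIDER_HOP_MS, cost


sfc1 = ("EP1", "EP1")  # both services on EP1
sfc2 = ("EP1", "EP2")  # services split across both providers
for name, chain in (("SFC1", sfc1), ("SFC2", sfc2)):
    latency, cost = evaluate_chain(chain)
    print(f"{name}: latency={latency} ms, cost={cost}")
```

With these assumed numbers, SFC1 has the lower latency and SFC2 the lower cost, which mirrors the qualitative comparison in the text.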

Fig. 1 An example of service placement at the edge-fog-cloud (Mohan et al. 2016)

There are two categories of distributed microservice programs for service placement. Some of them include parts with low interdependencies (loosely-coupled microservices), while others have high interdependencies (tightly-coupled microservices) (Mahmud et al. 2020). For tightly-coupled services, static methods are inefficient. To place the service, requirements such as the mobility of nodes and the limitation of energy and capacity of the underlying resources should be considered. Nowadays, due to the ever-increasing number of devices and input tasks, it is not possible to solve the service placement problem by relying on deterministic, rule-based solutions. In such a dynamic and complex environment, many factors can influence the solution.

So far, many optimization methods have been used to handle service placement. Due to the NP-hard nature of the problem, classical optimization methods are not sufficient. It cannot be solved on a large scale by methods such as Mixed Integer Programming (MIP) (Taka et al. 2022) or game theory (Shakarami et al. 2020). Instead, meta-heuristic and heuristic methods are widely used (Zabihi et al. 2023; Taka et al. 2022). These methods define a state space for the problem. The search starts from an initial state and continues until the closest solution is found (Tavakoli-Someh and Rezvani 2019). In heuristic algorithms, the search domain is limited by using a specific heuristic to reach the solution faster. A heuristic is an approximation to the problem. The main problem with heuristic methods is that they get stuck in local optima (Maia et al. 2021). Again, getting rid of local optima in metaheuristic algorithms is one of the major concerns (Sarrafzade et al. 2022). Unlike heuristic methods, which are usually tailored to a specific problem, metaheuristic methods do not depend on a specific problem. They define a general strategy according to which all problems should be solved (Lu et al. 2022). For example, the authors in Canali and Lancellotti (2019) use the genetic algorithm to reduce the convergence time to the near-optimal solution. In another study, Sarrafzade et al. (2022) propose a penalty-based approach to reduce response time in the cloud. Embedding a penalty mechanism helps to explore a larger space initially. The penalty effect is gradually increased in the next iterations. This is done through a chromosome selection process using a priority value. The closer the dependent modules are to the user, the higher their selection probability. Despite the advantages, metaheuristic methods have very sensitive hyperparameters that are not easy to tune.
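The gap between a fast heuristic and an exact search can be illustrated on a toy instance. The sketch below is not taken from any of the cited works: the services, nodes, traffic weights, and the latency-weighted cost function are hypothetical. It compares a greedy "nearest node first" heuristic with exhaustive enumeration, whose search space grows as \(N^{M}\) and is therefore only usable at very small scale.

```python
from itertools import product

# Hypothetical instance: two services, two nodes.
SERVICES = {"A": {"demand": 1, "traffic": 1}, "B": {"demand": 1, "traffic": 10}}
NODES = {"edge": {"capacity": 1, "latency": 1}, "cloud": {"capacity": 2, "latency": 10}}


def cost(placement):
    """Traffic-weighted latency of a placement {service: node}."""
    return sum(SERVICES[s]["traffic"] * NODES[n]["latency"] for s, n in placement.items())


def feasible(placement):
    """Check that no node hosts more demand than its capacity."""
    used = {n: 0 for n in NODES}
    for s, n in placement.items():
        used[n] += SERVICES[s]["demand"]
    return all(used[n] <= NODES[n]["capacity"] for n in NODES)


def greedy():
    """Heuristic: place each service, in arrival order, on the lowest-latency node with room."""
    free = {n: NODES[n]["capacity"] for n in NODES}
    placement = {}
    for s, spec in SERVICES.items():
        candidates = [n for n in NODES if free[n] >= spec["demand"]]
        best = min(candidates, key=lambda n: NODES[n]["latency"])
        placement[s] = best
        free[best] -= spec["demand"]
    return placement


def exhaustive():
    """Exact search: enumerate every assignment and keep the cheapest feasible one."""
    names = list(SERVICES)
    options = (dict(zip(names, combo)) for combo in product(NODES, repeat=len(names)))
    return min((p for p in options if feasible(p)), key=cost)


print("greedy:", greedy(), cost(greedy()))
print("exhaustive:", exhaustive(), cost(exhaustive()))
```

In this instance the greedy rule gives the scarce edge slot to the first service it sees, so the traffic-heavy service ends up in the cloud and the result is far from the optimum that enumeration finds. Metaheuristics try to escape this kind of trap without paying the full enumeration cost.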

Recently, the use of Machine Learning (ML) methods to predict service placement has attracted the attention of researchers. Both optimization and ML often use a cost function. Optimization is usually a way to define the difference between predicted and actual value, while ML aims to minimize the cost function. Simply put, the goal of ML is to minimize the gap between prediction and reality based on historical data. Instead of relying on explicit rules, ML uses predictions based on historical patterns (Shahraki et al. 2023). One of the reasons for the popularity of ML is its ability to handle increasingly large data volumes in IoE environments.

ML is divided into four important categories: supervised learning, unsupervised learning, semi-supervised learning, and Reinforcement Learning (RL). RL methods can exploit environmental experiences to enable the agent to make the best decision for short-term future predictions (Sutton and Barto 2018). These methods have the characteristics of self-learning and self-adaptation, which reduce the sensitivity to hyperparameters. RL methods generally perform global searches effectively. This makes RL well suited to modeling high-dimensional problems in real-world scenarios, and its diverse set of techniques makes it a natural fit for service placement. Unlike Markov Decision Processes (MDPs), where the exact state of the system is always fully observable by the agent, it may sometimes be difficult to detect the exact state of the edge/fog system. Processes in which the decision-maker may have incomplete knowledge of the system state are called Partially Observable Markov Decision Processes (POMDPs). Here, keeping the information of the previous states in memory helps the agent to understand more about the nature of the environment and find the optimal policy. Observability affects the computational complexity of finding an optimal policy. For example, in MDPs, dynamic programming can be used to calculate an optimal policy for a finite horizon in polynomial time. One of the methods used in POMDP environments is the Deep Recurrent Q-Network (DRQN). Here, recurrent neural networks are used to understand and retain information about past states.
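The remark about finite-horizon MDPs can be sketched with backward induction, the standard dynamic-programming scheme for this case. Its running time is proportional to the horizon times \(|S|^{2}|A|\), which is polynomial in the size of the model. The two-state placement model below (states, actions, rewards, transitions) is a hypothetical toy, not a model from the surveyed literature.

```python
def backward_induction(states, actions, transition, reward, horizon):
    """Finite-horizon dynamic programming for a fully observable MDP.

    transition[s][a] is a list of (probability, next_state) pairs and
    reward[s][a] is the immediate reward. Returns (values, policy), where
    policy[t][s] is the best action in state s at decision step t.
    """
    values = {s: 0.0 for s in states}  # nothing left to earn after the last step
    policy = []
    for _ in range(horizon):  # sweep from the last decision step back to the first
        new_values, step_policy = {}, {}
        for s in states:
            best_action, best_value = None, float("-inf")
            for a in actions:
                q = reward[s][a] + sum(p * values[s2] for p, s2 in transition[s][a])
                if q > best_value:
                    best_action, best_value = a, q
            new_values[s], step_policy[s] = best_value, best_action
        values = new_values
        policy.insert(0, step_policy)
    return values, policy


# Hypothetical toy model: rewards are negative latencies.
STATES = ["edge_free", "edge_busy"]
ACTIONS = ["place_edge", "place_cloud"]
REWARD = {
    "edge_free": {"place_edge": -1.0, "place_cloud": -5.0},
    "edge_busy": {"place_edge": -8.0, "place_cloud": -4.0},
}
TRANSITION = {
    "edge_free": {"place_edge": [(1.0, "edge_busy")], "place_cloud": [(1.0, "edge_free")]},
    "edge_busy": {"place_edge": [(1.0, "edge_busy")], "place_cloud": [(1.0, "edge_free")]},
}

values, policy = backward_induction(STATES, ACTIONS, TRANSITION, REWARD, horizon=2)
print(values, policy[0])
```

In a POMDP the agent cannot index the table by the true state, which is why memory-based approaches such as recurrent networks are used instead.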

Despite many advantages, ML methods also have limitations. For example, in supervised learning methods, the selection of training data is a challenge (Sutton and Barto 2018). Similarly, in unsupervised learning methods, the learning rate is a challenge. Usually, the biggest challenge of RL methods is establishing a tradeoff between exploration and exploitation. The agent cannot practically explore in an infinite state/action space. The agent should try to exploit the potential of current points by limiting exploration to new points (Sutton and Barto 2018). Note that ML methods may have some optimization operations at their core. For example, one of these operations may be to compute the minimum value of the cost function. In such cases, ML methods may use metaheuristic/heuristic techniques to find the optimal solution. For example, the authors in Shen et al. (2023) use an evolutionary algorithm to reduce the search overhead during the construction of the neural network model. Recently, the combination of evolutionary methods with ML, especially neural networks, has received much attention from researchers. For example, Shen et al. (2023) use evolutionary methods to prune Sparse Neural Networks (SNNs) to have no additional connections after training. The pruning and regeneration of synaptic connections in SNNs evolve dynamically during learning, but the structure dispersion is maintained at a certain level. Since our main focus in this survey is on ML methods in service placement, we refrain from further explanation of evolutionary research. Interested readers can refer to Shen et al. (2021), Natesha and Guddeti (2022), Hu et al. (2023).

In this systematic review, we present a taxonomy for the service placement problem using ML. This review includes a description of the most important performance criteria for each method. We will also outline open issues and future research trends.

1.2 Comparison with previous studies

A literature review reveals that researchers have studied the problem of service placement with different objectives. Some have overlooked issues that matter in real-world deployments, such as mobility (Gasmi et al. 2022; Donyagard Vahed et al. 2019), heterogeneity and dynamism (Torabi et al. 2022; Santos et al. 2022a), the amount of resources, and the environment (Haibeh et al. 2022). None of these surveys is situated in the IoE context, and the paradigms related to this field, i.e., UDEC (Eyckerman et al. 2022; Fang et al. 2022) and FRAN (Xiao et al. 2020; Yu et al. 2020), have not been studied.

To increase the efficiency of the methods, it is necessary to be able to analyze their applications at the software level, and this requires understanding the architecture of the programs. Unfortunately, only a few surveys (Mahmud et al. 2020; Salaht et al. 2020) have addressed the types of program architecture. As we know, service placement can be used for various purposes, such as offloading (Mahmud et al. 2020; Salaht et al. 2020), resource management (Gallego-Madrid et al. 2022; Nayeri et al. 2021), and scheduling (Malazi et al. 2022; Shuja et al. 2021). Some studies have investigated service placement from the perspective of optimization theories (Wang et al. 2022a; Shao et al. 2021).

In this paper, we examine service placement from an ML perspective. As will be explained in Sect. 3, we present 8 key requirements for service placement. These include ML technique, resource estimation, objective, application architecture, paradigm, and simulator used. The tables in the Appendix explain all these requirements in detail. All major MCC complementary computing paradigms, including FC, MEC, EC, UDEC, and FRAN, are covered in the context of IoT. Table 1 shows a comprehensive comparison of our survey with the most important previous articles in terms of paradigms and key features. As can be seen, the focus of previous studies is mainly on static and dynamic methods. Some of them focus exclusively on heuristic/metaheuristic optimization methods. Among the 19 surveys, only 10 are about ML methods. None of them has comprehensively addressed all computing paradigms. In addition, as shown in Table 1, none of them provides a comprehensive overview of the type of application and mobility.

1.3 Contributions

The most important contributions of this survey are:

- We make a comprehensive classification of the research conducted on service placement using ML methods. It includes the four well-known categories of supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning. Descriptive statistics of the use of each method in each ML category are presented along with the research trends.

- Based on a systematic review, we propose seven basic requirements for service placement. These requirements address important issues such as resource estimation techniques, performance metrics, application architecture, interface paradigm, simulation tools, and objective algorithms.

- In describing each technique, we have paid special attention to the application architecture and resource estimation method. Solving the problem of service placement in environments where resources are constantly changing requires short-term and medium-term resource estimation. In addition, distributed microservice architecture can be effective in choosing a problem-solving algorithm. We have highlighted these requirements in the attached tables.

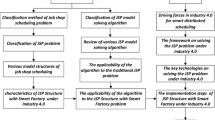

The organization of this paper is shown in Fig. 2. Section 2 provides an overview of service placement and ML fundamentals; Sect. 3 describes the research methodology and basic questions; Sect. 4 describes critical ML techniques used for service placement; Sects. 5 and 6 are devoted to the discussion of results and future directions; finally, Sect. 7 concludes the survey. Table 2 gives a summary of the abbreviations.

2 Background

This section first explains the basics of service placement. It then discusses service placement based on machine learning.

2.1 Service placement

In this survey, we look for machine learning methods that have been used to solve the problem of service placement. Figure 3 shows the main computing paradigms related to service placement. End devices do not have enough processing power to handle an independent task, so there is a need to offload the processes and place the service in the fog or cloud. Usually, two metrics of delay and energy consumption are present in the service placement. A service delivery method should consider both user deadlines and minimize the energy consumption of the entire network.

Fig. 3 The main computing paradigms related to service placement (Zabihi et al. 2023)

Typically, end devices in Fog Computing (FC) and Mobile Edge Computing (MEC) are mobile (Taheri-abed et al. 2023). They also differ in terms of the operating system, hardware, and processing power. In addition, the interface connections for offloading user requests are heterogeneous. All these factors turn service placement into a multi-objective optimization problem in which a balance must be struck between multiple objectives and metrics. The general goal is to determine which request should be placed on which underlying physical node to achieve the best performance. For example, if we want to optimize delay, we cannot simply look for the physically closest nodes; network availability must also be taken into account (Eyckerman et al. 2022).

Service placement methods are provided according to different factors. Some important factors are node mobility, resource availability, network dynamics, application architecture, network resource arrangement, and placement technique. Available resources can be measured by profiling, predictive, on-demand, and dynamic strategies and can be static or dynamic. Existing application architectures may be monolithic or interdependent. They can be distributed or centralized, depending on how network resources are arranged, the type of online/offline placement, and how service placement is controlled (Salaht et al. 2020).

Some of the most important methods for solving the service placement problem are integer programming, constraint function optimization, game theory, and machine learning. Typically, each of these methods can be integrated with other optimization methods, such as metaheuristics, as needed (Salaht et al. 2020). As an example, we consider one of the most common problems of typical service provisioning. In the service placement problem based on the pigeonhole principle, we want to understand how to place \(M\) different service components on \(N\) underlying nodes. An important constraint is that the capacity of the underlying nodes is not less than the total number of services. The optimization problem (e.g., minimization) of the objective function \(F(i,j),\,\,i \in M,\,\,\,j \in N\) is defined as follows
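A generic multi-objective formulation consistent with the description that follows can be sketched as below. This is a standard textbook form, not necessarily the exact expression used by the authors. Here \(x\) denotes a placement, i.e., an assignment of each component \(i \in M\) to a node \(j \in N\); the split of the \(k\) constraints into \(p\) inequalities and \(k-p\) equalities is an assumption made for illustration.

\[
\begin{aligned}
\min_{x} \quad & F(x) = \big(f_{1}(x),\, f_{2}(x),\, \ldots,\, f_{o}(x)\big) \\
\text{s.t.} \quad & g_{r}(x) \le 0, \quad r = 1, \ldots, p, \\
& h_{s}(x) = 0, \quad s = 1, \ldots, k - p .
\end{aligned}
\]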

In the above formula, the goal is to minimize an objective function \(F\) composed of \(o\) different objectives, subject to \(k\) different constraints expressed by the functions \(g\) and \(h\). Each of the functions \(f_{1} (x)\), \(f_{2} (x)\), … and \(f_{o} (x)\) describes one of the important metrics, such as energy or delay. The constraints \(g\) and \(h\) can represent computing capacity, available memory, available bandwidth, and application limits such as the maximum possible delay between two services (Eyckerman et al. 2022).

2.2 Metrics

The main challenges in communications in the Internet of Things (IoT) include the heterogeneity of devices in terms of the amount of resources, operating system, and mobility (Oliveira et al. 2023). This network is used for purposes such as real-time applications (streaming video, gaming, virtual reality, and augmented reality), processing-based applications, and storage-based applications (Ghobaei-Arani et al. 2018). To overcome the limitations between the end-device layer and the cloud layer, improved intermediate layers have emerged, including the edge layer and the fog layer. In general, the goal of these intermediate paradigms is to improve Quality of Service (QoS) and Quality of Experience (QoE) based on a predefined scenario. We have identified eight important metrics: energy consumption, processing time, I/O operation time, resource utilization, mobility support, cost, QoS and QoE improvement, and scalability. Important categories such as component placement, system integration, application elasticity, and remediation are also included in these topics (Duc et al. 2019). Table 3 shows important metrics and sub-metrics in service placement.

Service placement can be combined with other independent goals such as offloading and caching. Our literature review shows that among the above eight areas, four are more important and well known. For service placement purposes, the following abbreviated list can be provided:

- Service placement for latency reduction

- Service placement for (optimal) resource utilization

- Service placement to reduce energy consumption

- Service placement to reduce cost

Two general methods for service placement can be imagined: (a) on a virtual machine in response to a user request and (b) on different instances of physical machines (Sami et al. 2020). For example, in Sami et al. (2020), service switching is used to optimize the use of resources of each fog layer cluster. In previous research, it has been used for dynamic management through orchestration and containerization technology. On the contrary, in this research, a proactive method is used for horizontal scaling of resources according to workload fluctuations in the fog layer. The authors also consider the cost metric for scalable placement of the service in an instance. According to Table 3, they optimize the response time by preventing instability, detecting failures, and inspecting maintenance. They model the processing of user requests and available resources using a Markov Decision Process (MDP). The technique used in this research is SARSA.
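SARSA is an on-policy temporal-difference method whose update is \(Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma Q(s',a') - Q(s,a)]\), where \(a'\) is the action actually chosen in the next state. The sketch below applies it to a toy scaling problem. The environment (`step`), its reward weights, the fixed load, and all hyperparameters are hypothetical and are not the model of Sami et al. (2020).

```python
import random
from collections import defaultdict

ACTIONS = ("scale_in", "hold", "scale_out")
DELTA = {"scale_in": -1, "hold": 0, "scale_out": 1}


def step(instances, action, load):
    """Hypothetical environment: penalise unmet load and the cost of running instances."""
    instances = min(5, max(1, instances + DELTA[action]))
    unmet = max(0, load - instances)
    reward = -(3.0 * unmet + 1.0 * instances)
    return instances, reward


def choose(q, state, epsilon, rng):
    """Epsilon-greedy: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def sarsa_update(q, s, a, r, s2, a2, alpha, gamma):
    """On-policy TD update using the action a2 actually selected in s2."""
    q[(s, a)] += alpha * (r + gamma * q[(s2, a2)] - q[(s, a)])


def train(episodes=300, steps=20, alpha=0.1, gamma=0.9, epsilon=0.1, load=3, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # state = number of running instances
    for _ in range(episodes):
        state = rng.randint(1, 5)
        action = choose(q, state, epsilon, rng)
        for _ in range(steps):
            next_state, reward = step(state, action, load)
            next_action = choose(q, next_state, epsilon, rng)
            sarsa_update(q, state, action, reward, next_state, next_action, alpha, gamma)
            state, action = next_state, next_action
    return q


q_table = train()
```

The `epsilon` parameter controls how often the agent tries a random scaling action instead of the one it currently believes to be best.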

Another classification of service placement is based on the dynamics of fog computing. In general, there are two main methods: static and dynamic. The fog layer is created to overcome the limitations of the distant cloud layer. Here, computing and storage resources are closer to the end user. A flexible solution is to use virtual machines to facilitate service hosting, which is supported by many operating systems. Unfortunately, as the number of user requests increases, the cost of resources, especially in the fog layer, increases dramatically. This creates the need for dynamic service provisioning. In such situations, containers are now being used instead of virtual machines. A container is much lighter than a virtual machine because, unlike a virtual machine, it shares the host’s operating system instead of running its own copy. Therefore, a large number of containers and resources in fog clusters can be managed easily using an orchestrator. To manage the large number of requests in the dynamic management style, horizontal scalability is used. Horizontal scalability means adding container instances to achieve the response rate desired by the user, or removing them to free up resources that are no longer needed. Unfortunately, a potential challenge with horizontal scalability is the heterogeneity of fog resources. In such an environment, automated methods may adapt better to real-world requirements than other methods.
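A reactive form of horizontal scaling can be sketched with a proportional rule, similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the use of response time as the scaling metric, the replica bounds, and the assumption that response time falls roughly in proportion to the number of instances are illustrative choices, not taken from the surveyed works.

```python
import math


def desired_replicas(current_replicas, observed_ms, target_ms, min_replicas=1, max_replicas=10):
    """Scale the replica count by the ratio of observed to target response time."""
    if current_replicas < 1 or target_ms <= 0:
        raise ValueError("current_replicas must be >= 1 and target_ms must be positive")
    wanted = math.ceil(current_replicas * observed_ms / target_ms)
    # Clamp to the allowed range so a spike cannot exhaust the fog cluster.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(2, observed_ms=300, target_ms=100))  # overloaded: scale out
print(desired_replicas(4, observed_ms=50, target_ms=100))   # underused: scale in
```

A rule like this reacts only after the metric has drifted, which is one reason proactive, learning-based scaling is studied for fog environments.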

Sami et al. (2020) propose a container-based service placement. They use reinforcement learning to proactively monitor horizontal resources. Their goal is to simultaneously minimize response time and cost due to the heterogeneity of resources. In such a heterogeneous environment, the network can become unstable. In the fog environment, not only do user requests change randomly, but the workload also changes randomly. The placement of the service is highly dependent on the availability of resources. When a device in the fog layer receives a request from a user, it first sends performance-related information to a central controller. Then, taking into account the information from all devices, the controller determines which device is best suited to meet the response time and cost requirements. In addition to response time and cost, the system also ensures that the workload of the fog devices is not overloaded by the addition of a new service (Sami et al. 2020).
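The controller's decision step described above can be sketched as a filter followed by a ranking. The report fields, the thresholds, and the tie-breaking order (response time first, then cost) are assumptions for illustration; the cited work may rank candidates differently.

```python
def select_device(reports, new_load, max_response_ms, max_cost):
    """Pick the fog device that meets the limits without being overloaded, or None."""
    feasible = [
        d for d in reports
        if d["load"] + new_load <= d["capacity"]  # adding the service must not overload it
        and d["response_ms"] <= max_response_ms
        and d["cost"] <= max_cost
    ]
    if not feasible:
        return None
    # Prefer the fastest device; break ties by the lower cost.
    return min(feasible, key=lambda d: (d["response_ms"], d["cost"]))["name"]


# Hypothetical performance reports sent by fog devices (load and capacity in percent).
REPORTS = [
    {"name": "fog-1", "load": 70, "capacity": 100, "response_ms": 12.0, "cost": 3.0},
    {"name": "fog-2", "load": 90, "capacity": 100, "response_ms": 8.0, "cost": 2.0},
    {"name": "fog-3", "load": 20, "capacity": 100, "response_ms": 15.0, "cost": 1.0},
]

print(select_device(REPORTS, new_load=20, max_response_ms=20.0, max_cost=5.0))
```

Here the fastest device is skipped because the new service would push it past its capacity, which reflects the overload check mentioned in the text.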

Consider another example of the importance of latency and energy in healthcare applications (Eyckerman et al. 2022). Here, the patient’s vital signs need to be monitored in real-time and continuously. The cloud network is not conducive to low-latency applications, and end devices suffer losses due to missed deadlines. This challenge can be solved with the advent of the 5G network. The 5G network is ultra-reliable, with low latency and high throughput. One of the problems with 5G is the heterogeneity of the environment, which in turn causes a lack of bandwidth and increasing latency. To solve this problem and bring end devices closer to network resources, the 5G network is used in combination with fog computing. Fog computing is an intermediate paradigm that is compute-intensive, latency-aware, and even energy-aware. However, to achieve acceptable performance, the best placement of the service should be done according to the desired strategy and important parameters.

2.3 Service placement and machine learning

The most important reason for the success of machine learning methods is the dynamism in solving prediction problems (Rodrigues et al. 2019). Machine learning methods do not guarantee optimality, but they have relatively low complexity in solving problems with many variables. Figure 4 shows a taxonomy of machine learning methods for service placement. In a general classification, machine learning methods fall into four categories: supervised, semi-supervised, unsupervised, and reinforcement learning. In supervised learning, an optimal training set is used to learn how to interact with the environment. In semi-supervised learning, the machine’s response is based on a combination of an optimal training set and previous experience with the environment. In unsupervised learning, the machine learns how to interact with the environment based on previous experience. Reinforcement learning has two main phases: exploration and exploitation. Here, the agent first explores the dimensions of the problem in the environment and then exploits its findings (Rodrigues et al. 2019).

3 Research methodology

The goal of this study is to systematically review machine learning algorithms for service placement. The methodology of this Systematic Literature Review (SLR) is based on the guidelines of Zabihi et al. (2023). This section includes research questions, the search process, inclusion/exclusion criteria, and quality assessment.

- Research questions

To plan the review process, it is first necessary to extract the research questions. These questions determine the motivation for the research. We address eight research questions, which are:

- RQ1: Which area of machine learning do most of the algorithms used in service placement belong to?
- Motivation: The answer to this question indicates which machine learning techniques are commonly used among the algorithms used in service placement.

- RQ2: What methods are used to estimate the amount of current network resources, and which resource estimation method is more popular?
- Motivation: The answer to this question determines which of the resource estimation methods (dynamic, profiling, or on-demand) are used in service placement and which of these methods is used more often.

- RQ3: Between which parameters and metrics does service placement seek an optimization or trade-off?
- Motivation: The answer to this question determines what percentage of the algorithms seek to optimize one metric and what percentage seek to find a compromise between multiple metrics.

- RQ4: Can the type of application architecture influence the choice of method and its efficiency?
- Motivation: Architecture refers to the type of distribution of the application and its components. Depending on whether it is monolithic or distributed, it can be useful in the resource estimation algorithm.

- RQ5: What is the dominant paradigm used in the interface layer?
- Motivation: The answer to this question determines what percentage of interface paradigms can be useful in increasing the functionality of the cloud network in different technologies, including IoT and IoV.

- RQ6: What simulation tools have been used in previous research to evaluate efficiency?
- Motivation: The answer to this question indicates which tools researchers have used most for simulation. This can guide young researchers and save them from confusion.

- RQ7: What were the main objectives of previous research?
- Motivation: The answer to this question indicates what the purpose of the previous research was and which algorithms the researchers used to achieve which goal.

- RQ8: What are the most important open issues for future research?
- Motivation: Answering this question is key for young researchers. It allows them to save valuable time in identifying hot research topics.

We will answer the above questions in detail in the following sections.

- Search process

The scientific databases used in this survey are Wiley (www.interscience.wiley.com), Springer (www.springerlink.com), ACM Digital Library (www.acm.org/dl), ScienceDirect (Elsevier) (www.sciencedirect.com), IEEE Xplore (ieeexplore.ieee.org), and MDPI (www.mdpi.com). Keywords used to describe the search string include terms such as “machine learning,” “reinforcement learning,” “service delivery,” etc. By using keywords and combining them with Boolean “AND” and “OR” operators, the search strings were ultimately defined as follows: (“machine learning” OR “ML”) (“reinforcement learning” OR “RL”) (“supervised learning” OR “unsupervised learning”) (“semi-supervised learning”).

-

Inclusion and exclusion criteria

Our literature review shows that after 2018, research on service placement and related techniques such as offloading, service scheduling, resource management, and resource provisioning has gained momentum. For this reason, we have chosen to focus on the period from the beginning of 2018 to the end of 2023. The article selection process is shown in Fig. 5. Note that we removed the non-English articles, even though their number was very small. Also, in the quality assessment phase, conference articles that were not indexed or were not highly relevant were removed. Table 4 in the Appendix shows the characteristics that were finally extracted from the articles.

-

Distribution of articles

Figures 5b and 6a show the distribution of articles by year and by publisher, respectively. As shown in the figure, IEEE has the largest share over other publishers with more than 50%. Other publishers follow with almost the same percentage. Figure 7 shows the number of publications per year. This figure shows a significant increase in the research done since 2018. In addition, our literature review shows 99 journal articles and 26 conference papers. To answer the research questions, the text of each article was carefully analyzed.

4 Service placement using machine learning

Middle-layer computing paradigms such as FC and MEC can solve the computational and network bottlenecks in large-scale IoE applications. These technologies complement cloud computing by providing processing and storage power at the edge of the network. Recently, there has been a growing trend to use ML to resolve middle-layer application problems such as resource management, security, latency, and power consumption. In this section, we examine the types of ML algorithms and the technologies that use them. Figure 8 shows the different aspects according to the literature.

4.1 Unsupervised learning algorithms

Unsupervised learning is used to analyze and cluster unlabeled datasets (Taheri-abed et al. 2023). It can automatically discover hidden patterns or groupings of data. Items within a cluster should be similar and simultaneously dissimilar to other clusters’ items.

Definition 1

Suppose we represent a dataset containing N records with X = {X1, X2, …, XN}. We also represent attributes of this dataset with \({\mathbf{A}} = \left[ {\begin{array}{*{20}c} {a_{1} } & {a_{2} } & {...} & {a_{D} } \\ \end{array} } \right]\). Therefore, each record \({\mathbf{X}}_{i}\) is in the form \({\mathbf{X}}_{i} = \left[ {\begin{array}{*{20}c} {a_{i,1} } & {a_{i,2} } & {...} & {a_{i,D} } \\ \end{array} } \right]\). The purpose of clustering is to classify records into K clusters so that each cluster Cj meets the following conditions:

-

(a)

\(\bigcup\limits_{j = 1}^{K} {C_{j} } = {\text{X}}\)

-

(b)

\(C_{j} \ne \emptyset ,\quad \quad j = 1, \, 2, \, \ldots , \, K\)

-

(c)

\(C_{j} \bigcap {C_{k} } = \emptyset ,\quad \quad j \ne k\)

4.1.1 K-means

Traditionally, K-means has been used for clustering (Memon 2019). The K-means pseudo-code is shown in Algorithm 1. In line 1, the number of initial centers of the clusters is given to the algorithm as a predefined parameter \(K\). In line 3, each record \({\mathbf{X}}_{i}\) is assigned to a cluster with the closest center. For this purpose, the distance between \({\mathbf{X}}_{i}\) and each cluster center \({\mathbf{m}}_{j}\) is measured. Then, the nearest cluster center to the record \({\mathbf{X}}_{i}\) is found as follows:

In line 4, the center of each cluster is updated as follows:

where Nj denotes the number of records in the cluster Cj. The above operation is repeated until the centers M = {m1, m2, …, mK} do not change.
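Written out in a standard form, the assignment and update steps described above are as follows. This is a sketch that assumes squared Euclidean distance, since the text does not fix the distance measure; the index \(c_i\) is a name introduced here for illustration.

```latex
% Assignment step: index of the nearest center for record X_i
c_i = \arg\min_{j \in \{1,\dots,K\}} \left\| \mathbf{X}_i - \mathbf{m}_j \right\|^{2}

% Update step: each center becomes the mean of the records in its cluster
\mathbf{m}_j = \frac{1}{N_j} \sum_{\mathbf{X}_i \in C_j} \mathbf{X}_i
```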

Pseudo-code of K-means clustering algorithm
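The steps described for K-means can be sketched in Python as follows. This is an illustrative sketch, not the authors' Algorithm 1: the function names, the random choice of initial centers, the squared Euclidean distance, and the `max_iter` safeguard are all assumptions made here.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length records."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(records, k, max_iter=100, seed=0):
    """Cluster `records` into `k` groups; returns (centers, clusters)."""
    rng = random.Random(seed)
    # Assumed initialisation: pick k distinct records as the initial centers.
    centers = [list(r) for r in rng.sample(records, k)]
    clusters = []
    for _ in range(max_iter):
        # Assignment step: each record joins the cluster with the closest center.
        clusters = [[] for _ in range(k)]
        for record in records:
            nearest = min(range(k), key=lambda j: squared_distance(record, centers[j]))
            clusters[nearest].append(record)
        # Update step: each center becomes the mean of its members.
        new_centers = []
        for j, members in enumerate(clusters):
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(centers[j])  # keep the center of an empty cluster
        # Stop when the centers no longer change.
        if new_centers == centers:
            break
        centers = new_centers
    return centers, clusters
```

For example, calling `k_means(points, 2)` on two well-separated groups of two-dimensional points returns one center near each group.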

In some research (Li et al. 2019a), clustering is used to create several clusters of fog nodes. Each cluster is managed by a cluster head, which is selected based on various metrics such as residual energy. Table 5 in the Appendix shows clustering research using k-means. Farhat et al. (2022) propose an online mechanism for dynamic service chain deployment to optimize operational costs in a limited time frame. In some studies (Raghavendra et al. 2021; Yuan et al. 2020), a node is assumed to be a member of more than one cluster due to energy consumption and response time considerations.

4.1.2 G-means

In G-means, the number of clusters is determined iteratively using geometric evaluation and arithmetic analysis. de Oliveira et al. (2023) use G-means to reduce the load of parallel position-based query processing. The position of the source node in the wireless search zones is automatically determined by G-means. This work is formulated as a single-objective optimization problem.

4.1.3 Federated learning

In the centralized form of this method, a central server coordinates the participating nodes during the learning process (Qian et al. 2019). It aggregates model updates after node selection. The weakness of this form is that the links leading to the server may become a bottleneck. Also, some complex topologies may affect the performance of the learning process. By contrast, a decentralized variant has the advantage of avoiding a single point of failure, since model updates are exchanged only between connected nodes, without the intervention of a central server.

In federated learning, the local models are assumed to have the same architecture as the global model. Recently, a new federated learning framework called HeteroFL (Mei et al. 2022) has been developed to handle heterogeneous clients with very different computing and communication capabilities. It can train heterogeneous local models with a global inference model. Federated learning provides the ability to analyze the social behavior of users based on their preferences on an hourly, daily, or weekly basis (Qian et al. 2019). Balasubramanian et al. (2021) use a federated learning algorithm to manage the placement of content files in EDC and MDC sites. Federated learning has new applications, especially with the help of blockchain (Kim et al. 2018).
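The server-side aggregation step can be illustrated with a minimal sketch. It assumes a sample-weighted average of client weight vectors (in the style of federated averaging), which the text above does not prescribe; the function name and the flat-list model representation are hypothetical.

```python
def federated_average(client_updates):
    """Aggregate client models into a global model.

    `client_updates` is a list of (weights, num_samples) pairs, where
    `weights` is a flat list of floats with the same length for every client
    (the same-architecture assumption stated in the text).
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all client models must share one architecture")
        # Clients with more local data contribute proportionally more.
        for d in range(dim):
            global_weights[d] += weights[d] * n / total_samples
    return global_weights
```

A client holding three times as many samples as another pulls the global model three times as strongly toward its own weights.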

4.1.4 Unsupervised neural network

Neural networks are used to predict the reputation of nodes in combination with clustering (Hallappanavar et al. 2021). The reputation value of a new service is determined based on the correlation of various features and the reputation value of previous services. The features used to estimate service reputation are very diverse and depend on the application used. Neural networks consist of three layers: input, hidden, and output layers. Each neuron represents one service parameter. The output of the network is the prediction value of the service reputation. If the number of neurons in the hidden layer is small, the convergence of the neural network will be disturbed. On the contrary, if the number of neurons is large, overfitting may occur. Table 6 in the Appendix shows unsupervised neural network research.

4.2 Semi-supervised learning

Semi-supervised learning is a type of machine learning that can make predictions about other unlabeled samples based on a small number of labeled samples (Salaht et al. 2020).

4.2.1 Fuzzy systems

In fuzzy clustering, each sample can belong to more than one cluster. Three types of systems are mostly used in service placement: neural fuzzy systems, fuzzy neural networks, and neuro-fuzzy hybrid systems. In neural fuzzy systems, neural networks are used to determine fuzzy rules. They change the weights during training to minimize the cost function. In other words, the fuzzy functions and rules act as the weights of the neural network. Conversely, in a fuzzy neural network, the inputs to the neural network are non-fuzzy. Finally, in hybrid neuro-fuzzy systems, each technique is used independently to perform a task (Alli and Alam 2019). Neuro-fuzzy systems combine features of neural networks and fuzzy techniques. They are used to manage the parameters of a fuzzy system and to solve prediction and classification problems. Here, fuzzy models work with if-then-else rules. In Son and Huh (2019), a fuzzy semi-supervised algorithm is used to meet the user’s needs.

4.2.2 Semi-supervised classifier

Suppose a dataset contains \(N\) samples, where \(N = N_{1} + N_{2}\). Of these, \(N_{1}\) samples have a label and the rest (\(N_{2}\) samples) are unlabeled (Mohammed et al. 2022). To build a semi-supervised classifier, first, the training phase is performed on a small set of labeled samples. Then, a semi-supervised learning process is performed iteratively. In the test phase, probability theory is used to identify unlabeled samples. Labels with the highest probability prediction values are added to the dataset as new labels. Also, in some research (Mohammadi et al. 2017), deep semi-supervised learning is used to improve the performance and accuracy of the learning agent. The details of the semi-supervised learning algorithm and its characteristics are shown in Table 7 in the Appendix.
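The iterative procedure above resembles self-training with pseudo-labels, which can be sketched as follows. This is an illustration under stated assumptions: the base classifier is a nearest-centroid model, the "probability" is a softmax over negative squared distances, and the `threshold` parameter is hypothetical; none of these choices come from the surveyed papers.

```python
import math


def fit_centroids(labeled):
    """Train the base model: one mean vector per label."""
    groups = {}
    for x, label in labeled:
        groups.setdefault(label, []).append(x)
    return {label: [sum(col) / len(pts) for col in zip(*pts)]
            for label, pts in groups.items()}


def predict_proba(x, centroids):
    """Pseudo-probabilities: softmax over negative squared distances."""
    scores = {label: -sum((a - b) ** 2 for a, b in zip(x, c))
              for label, c in centroids.items()}
    top = max(scores.values())
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    norm = sum(exps.values())
    return {label: e / norm for label, e in exps.items()}


def self_training(labeled, unlabeled, threshold=0.9, max_rounds=10):
    """Iteratively move confidently predicted samples into the labeled set."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled)
        confident, remaining = [], []
        for x in pool:
            proba = predict_proba(x, centroids)
            best = max(proba, key=proba.get)
            if proba[best] >= threshold:
                confident.append((x, best))   # accept the pseudo-label
            else:
                remaining.append(x)           # keep for a later round
        if not confident:
            break
        labeled.extend(confident)
        pool = remaining
    return labeled, pool
```

Samples whose highest predicted probability stays below the threshold remain unlabeled rather than receiving an unreliable label.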

4.3 Supervised learning

Generally, supervised learning is divided into classification and regression (Taheri-abed et al. 2023). Our literature review shows that classification has been used more than regression for service placement. Supervised learning is used when the records of a dataset are labeled. Each sample \(i\) has a feature vector \({\mathbf{X}}_{i}\) and a judgment label \(y_{i}\). Let us denote the number of samples by \(N\) and the number of attributes of each sample by \(D\). For example, in binary classification, the judgment label of each sample \(i\) takes one of two possible values.

Definition 2

Suppose we represent a dataset containing N records with X = {X1, X2, …, XN}. We also represent attributes of this dataset with \({\mathbf{A}} = \left[ {\begin{array}{*{20}c} {a_{1} } & {a_{2} } & {...} & {a_{D} } \\ \end{array} } \right]\). Therefore, each record \({\mathbf{X}}_{i}\) is in the form \({\mathbf{X}}_{i} = \left[ {\begin{array}{*{20}c} {a_{i,1} } & {a_{i,2} } & {...} & {a_{i,D} } \\ \end{array} } \right]\). Each sample \(i\) has a label \(y_{i}\). Our goal in the classification is to build a model that can predict the label \(y_{j}\).

There are various classification methods, each of which has advantages and disadvantages. Interested readers can refer to Tan et al. (2016) for further study. Some of the most important classification methods are K-nearest Neighbors (KNN), Support Vector Machine (SVM), Decision Tree, Random Forest, naïve Bayes, Bayesian belief network, Adaptive Boosting (AdaBoost), Extreme Gradient Boosting (XGBoost), and ensembles of classifiers.

According to the above explanations, supervised learning has two phases: training and predicting. As shown in Fig. 9, based on the labeled data X, a model, also known as a classifier, is built in the training phase. In the second phase, the model’s accuracy is evaluated by injecting new labeled data. For each sample, if the label predicted by the model is the same as the actual label, it indicates that the model is working correctly.

4.3.1 Linear regression

As an example, we describe linear regression, which is the most straightforward regression method (Kochovski et al. 2019). Here we define a hypothesis weight vector \({{\varvec{\uptheta}}} = \left[ {\begin{array}{*{20}c} {\theta_{1} } & {\theta_{2} } & {...} & {\theta_{D} } \\ \end{array} } \right]\). Now, for a data record \({\mathbf{X}}_{i} = \left[ {\begin{array}{*{20}c} {a_{i,1} } & {a_{i,2} } & {...} & {a_{i,D} } \\ \end{array} } \right]\), we describe the hypothesis function \(h_{\theta } ({\mathbf{X}}_{i} )\) as follows:

Our goal is to minimize the cost function \(J({{\varvec{\uptheta}}})\) (Baek et al. 2019). There are many ways to define a cost function. One of the most well-known cost functions is the Sum of Squared Error (SSE), which is defined as follows:

As seen from Eq. (7), it calculates the squared difference of the hypothesis \(h_{\theta } ({\mathbf{X}}_{i} )\) with the actual label \(y_{i}\) for all records (Yan et al. 2020). The ultimate goal of all regression methods is to minimize the cost function \(J({{\varvec{\uptheta}}})\). There are many methods for this, the most famous of which is gradient descent. In this method, we must first obtain the derivative of the cost function, \(\partial J({{\varvec{\uptheta}}})/\partial \theta_{j}\), with respect to each weight \(\theta_{j}\). If we assume that the cost function is in the SSE form of Eq. (7), the derivative is obtained as follows (Mahmud et al. 2020):

Then we update each weight \(\theta_{j}\) based on the following equation:

where \(\alpha\) is called the learning rate and is a hyperparameter. Many methods in ML use gradient descent, the best known of which are logistic regression and neural networks. Algorithm 2 shows the linear regression pseudo-code using gradient descent.
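For reference, the four expressions used in this subsection (hypothesis, SSE cost, derivative, and weight update) can be written in a standard form with the symbols defined above. This is a sketch under one assumption: the SSE is taken literally as an unscaled sum. Some texts scale it by 1/2 or 1/N, which only rescales the effective learning rate.

```latex
% Hypothesis for record X_i
h_{\theta}(\mathbf{X}_i) = \sum_{j=1}^{D} \theta_j \, a_{i,j}

% Sum of Squared Error cost over all N records
J(\boldsymbol{\theta}) = \sum_{i=1}^{N} \left( h_{\theta}(\mathbf{X}_i) - y_i \right)^{2}

% Derivative with respect to each weight
\frac{\partial J(\boldsymbol{\theta})}{\partial \theta_j}
  = 2 \sum_{i=1}^{N} \left( h_{\theta}(\mathbf{X}_i) - y_i \right) a_{i,j}

% Gradient descent update with learning rate alpha
\theta_j \leftarrow \theta_j - \alpha \, \frac{\partial J(\boldsymbol{\theta})}{\partial \theta_j}
```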

So far, a lot of research has been done with regression methods, including logistic regression for service placement. For example, in Bashir et al. (2022), using logistic regression, a request is sent to a suitable fog node that can fulfill it. The designed system can take into account the maximum utilization of resources of fog nodes. Table 8 in the Appendix shows the supervised algorithms for service placement. Since 2012, the growth in the use of neural networks has accelerated. This has led to less use of traditional methods. Interested readers can refer to relevant references to study the details of methods such as decision trees (Manikandan et al. 2020), Support Vector Machines (SVMs) (Adege et al. 2018), and K-nearest Neighbor (KNN) (Kim et al. 2018). For example, in Manikandan et al. (2020), the decision tree is used to place the service in e-health. Different parties (e.g. patient, hospital, etc.) register at the key generation center. It issues a pair of public–private keys to the users. The service placement operation is then performed. In Kim et al. (2018), KNN is also used to place the service in the IoT. Here, the KNN algorithm only returns the number of the nearest k providers. If the provider is not able to provide the service, the user has to find another provider. One of the most well-known types of neural networks is the Recurrent Neural Network (RNN). In Memon and Maheswaran (2019), it is used to optimize delivery in vehicular fog computing. The output of the RNN is fed into a feed-forward neural network with only one hidden layer containing 20 neurons with a sigmoid activation function. In recent years, the use of deep neural networks has become very popular (Ran et al. 2019). Table 9 in the Appendix shows the major neural network research in service placement. In addition, Table 10 shows the most important research on deep supervised learning.

Pseudo-code of linear regression using gradient descent
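A minimal Python sketch of linear regression trained by batch gradient descent is given below. It is not the authors' Algorithm 2: the function names, the zero initialisation, the fixed iteration count, and the unscaled SSE cost are assumptions made for illustration. An intercept is obtained by adding a constant attribute equal to 1 to every record.

```python
def predict(theta, record):
    """Hypothesis: weighted sum of the record's attributes."""
    return sum(t * a for t, a in zip(theta, record))


def sse(theta, records, labels):
    """Sum of squared errors between predictions and actual labels."""
    return sum((predict(theta, x) - y) ** 2 for x, y in zip(records, labels))


def gradient_descent(records, labels, alpha=0.01, iterations=2000):
    """Minimise the SSE cost by batch gradient descent; returns the weights."""
    dim = len(records[0])
    theta = [0.0] * dim
    for _ in range(iterations):
        errors = [predict(theta, x) - y for x, y in zip(records, labels)]
        # Derivative of the (unscaled) SSE with respect to each weight.
        gradient = [2.0 * sum(e * x[j] for e, x in zip(errors, records))
                    for j in range(dim)]
        # Move every weight against its gradient, scaled by the learning rate.
        theta = [t - alpha * g for t, g in zip(theta, gradient)]
    return theta
```

If the learning rate is too large for the data scale, the iteration diverges; rescaling the attributes or lowering `alpha` addresses this.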

4.3.2 Decision tree

A decision tree is a supervised learning approach. In a tree structure, leaves represent class labels and branches represent combinations of features. A special type of decision tree in which the target variable can take continuous values is called a regression tree. In a decision tree, data comes in records as \(({\mathbf{X}}_{i} ,y_{i} ) = (x_{i,1} ,\,\,x_{i,2} ,...,\,\,x_{i,D} ,y_{i} )\). Here, \(y_{i}\) is called the dependent variable and is a label to be predicted. Also, \({\mathbf{X}}_{i}\) represents the feature vector for instance \(i\), which was previously explained in Definition 2.

In some research, the decision tree has been used for service placement. For example, Manikandan et al. (2020) address the integration of Smart Health Care (SHC) systems with the cloud environment. To reduce the response time to patients, they propose an IoT-based scheduling method using a decision tree. It consists of three stages: registration, data collection, and scheduling. Using entropy and information gain, they include the decision tree in the third stage for scheduling. The results of their experiments show that the use of decision trees reduces the computational overhead and improves the scheduling efficiency. Interested readers can refer to Alsaffar et al. (2016), Noulas et al. (2012), Sriraghavendra et al. (2022) to study other decision tree research. The details of these studies are described in Table 8 in the Appendix.
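The entropy and information gain measures mentioned above can be sketched as follows. This is a generic illustration of how a decision tree scores a candidate split on a categorical feature, not the scheduling method of Manikandan et al. (2020); the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((count / total) * math.log2(count / total)
                for count in Counter(labels).values())


def information_gain(records, labels, feature_index):
    """Entropy reduction obtained by splitting on one categorical feature."""
    groups = {}
    for record, label in zip(records, labels):
        groups.setdefault(record[feature_index], []).append(label)
    # Weighted average entropy of the subsets produced by the split.
    remainder = sum(len(subset) / len(labels) * entropy(subset)
                    for subset in groups.values())
    return entropy(labels) - remainder
```

At each node, the tree-building procedure would choose the feature with the highest information gain.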

4.3.3 Transfer learning

The Transfer Learning (TL) technique uses the knowledge gained during the construction of an initial model to create a new model for a secondary task. TL can dramatically reduce training time. It also reduces the amount of data needed to train the secondary model. As a result, it improves performance, especially in neural networks. In TL terminology, the primary (input) model is called the source domain and the secondary (output) model is called the target domain. Before proceeding, the reader is advised to review Definition 1 in Sect. 4.1 and Definition 2 in Sect. 4.3. First of all, let us provide the definitions of the “domain” and the “task”.

Definition 3 (Domain)

Let A and P(X) denote the feature space and the marginal distribution, respectively (Zhuang et al. 2020). Now we represent the domain by D = {A, P(X)}. Recall from Definition 1 that we represent a dataset containing N records with X = {X1, X2, … XN}. We also represent attributes of this dataset with \({\mathbf{A}} = \left[ {\begin{array}{*{20}c} {a_{1} } & {a_{2} } & {...} & {a_{D} } \\ \end{array} } \right]\). Therefore, each record Xi is in the form \({\mathbf{X}}_{i} = \left[ {\begin{array}{*{20}c} {a_{i,1} } & {a_{i,2} } & {...} & {a_{i,D} } \\ \end{array} } \right]\). Each instance i has a label yi.

Definition 4 (Task)

Let T and Y represent the task and label space, respectively (Zhuang et al. 2020). Also, f represents the decision function. The task space T is now represented by T = {Y, f}. In other words, each task T depends on a label and a decision function. As in other ML methods, f is an implicit function learned from the datasets in the training phase. The decision function f, like some ML models (e.g., Bayesian), outputs a predicted conditional distribution for each input instance Xi. This output is defined as

Suppose we have different sources of data. For example, let \({\mathbf{D}}_{S}\) and \({\text{T}}_{S}\) denote the domain and the task space of a source S, respectively. Now we represent an observation with instance-label pairs as follows

Note that these instances can be labeled or unlabeled.
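The two expressions referred to in Definition 4 and in the paragraph above can be written as follows. This is a reconstruction that assumes the notation of Zhuang et al. (2020), adapted to the symbols of this section; \(n^{S}\), the number of source instances, is a symbol introduced here.

```latex
% Output of the decision function: a conditional distribution over the label space
f(\mathbf{X}_i) = \left\{ P(y_k \mid \mathbf{X}_i) \;\middle|\; y_k \in \mathrm{Y},\ k = 1, \dots, |\mathrm{Y}| \right\}

% Observations of a source S as instance-label pairs
\mathrm{D}_S = \left\{ (\mathbf{X}_i, y_i) \;\middle|\; \mathbf{X}_i \in \mathrm{X}^{S},\ y_i \in \mathrm{Y}^{S},\ i = 1, \dots, n^{S} \right\}
```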

Definition 5 (Transfer Learning (TL))

Suppose we have some observations about \(m^{S} \in {\mathbb{N}}^{ + }\) source domains and tasks (Zhuang et al. 2020). These observations are represented by \(\left\{ {\left( {{\text{D}}_{{S_{i} }} ,{\text{T}}_{{S_{i} }} } \right)|\,\,\,i = 1,\,...,\,m^{S} } \right\}\). Also, suppose we have some observations about \(m^{T} \in {\mathbb{N}}^{ + }\) target domains and tasks. These observations are represented by \(\left\{ {\left( {{\text{D}}_{{T_{j} }} ,{\text{T}}_{{T_{j} }} } \right)|\,\,\,j = 1,\,...,\,m^{T} } \right\}\). Note that \(m^{T}\) denotes the number of TL tasks. The purpose of TL is to use the knowledge of the source domains to improve the performance of the decision functions in the target domain, i.e., \(f^{{T_{j} }} \left( {\,j = 1,\,...,\,m^{T} } \right)\).

In the special case where \(m^{S} = 1\), the scenario is called single-source transfer learning. Also, the literature review shows that most researchers currently focus on studying scenarios with \(m^{T} = 1\) (Liu et al. 2021; Chen et al. 2019; Girelli Consolaro et al. 2023; Anwar and Raychowdhury 2020). This assumes that many labeled instances are available and that the model is well-trained in the source domain. On the contrary, there are usually limited instances available in the target domain. In these scenarios, resources such as instances and models are observations. The goal of TL is to obtain a decision function f with maximum accuracy in the target domain.

Another important concept in TL is domain adaptation (Zhuang et al. 2020; Hou et al. 2018). Here, the goal is to reduce the differences between the source and target domains to maximize knowledge transfer and improve learner performance.

TL problems are divided into three categories: transductive, inductive, and unsupervised (Zhuang et al. 2020). If the instances of the target domain have labels, we have an inductive TL. If labels are available only for the source instances, we have a transductive TL. If neither the source nor the target instances have labels, we have an unsupervised TL. If \({\text{X}}^{S} = {\text{X}}^{T}\) and \({\text{Y}}^{S} = {\text{Y}}^{T}\), this scenario is a homogeneous TL. Otherwise, if \({\text{X}}^{S} \ne {\text{X}}^{T}\) and/or \({\text{Y}}^{S} \ne {\text{Y}}^{T}\), this scenario is a heterogeneous TL. As shown in Fig. 10, TL approaches are also divided into four groups: instance-based, feature-based, parameter-based, and relation-based. In instance-based approaches, instance-weighting techniques are used. In feature-based approaches, transformations of the original features are used to obtain new features. These approaches are of two types, asymmetric and symmetric. In the asymmetric approach, the features of the source are transformed to match the features of the target. By contrast, in symmetric approaches, the commonality between the source and the target is calculated and new features are created based on it. Parameter-based approaches try to transfer knowledge at the level of the model. Relation-based approaches try to extend the rules learned in the source domain to the target domain.

TL has been used in some important research. Hou et al. (2018) propose a proactive caching mechanism using TL. Their goal is to minimize the transmission cost while improving the QoE. They use TL to estimate the popularity of the content. Due to the NP-hardness of the problem, they devise a greedy algorithm to solve the cache content placement problem.

Typically, the training of the “generic” model is done offline in the cloud data center because it is computationally intensive. The resulting model is then deployed on the local nodes to make corrections through incremental training. For example, for real-time vision applications (such as face recognition), a “generic” model is first trained on millions of faces. This model is then copied to the edge nodes so that users can customize the model according to their preferences (Yu et al. 2020). The “generic” model may be retrained from time to time to improve the initial accuracy. In such cases, updates should be sent periodically to the edge servers (Nisha 2018).

4.4 Reinforcement learning

Reinforcement learning (RL) mainly relies on the Markov Decision Process (MDP). An MDP is a stochastic process in which the transition between states depends only on the current state of the process, not on the previous sequence of events. So far, a lot of research has applied MDPs to computer networks. Some examples are the estimation of service prices (Naghdehforoushha et al. 2022) and the allocation of network resources (Besharati et al. 2023; Rawashdeh et al. 2021). An MDP allows for a description of the potential system behavior with an automatic model. In its most general form, an MDP is a tuple \(\left( {{\text{S}},\,\,{\text{A}},\,{\text{P}},\,{\text{R}},\,\,\gamma } \right)\) where \({\text{S}} = \left\{ {s_{0} ,\,\,s_{1} ,\,...,\,\,s_{N} } \right\}\) and \({\text{A}} = \left\{ {a_{0} ,\,\,a_{1} ,\,...,\,\,a_{N} } \right\}\) represent a finite set of states and actions, respectively. Also, \({\text{P}} = \Pr \left\{ {s_{t + 1} = s^{\prime}\,|\,s_{t} = s,\,\,a_{t} = a} \right\}\) is the probability of transition from state \(s\) in stage \(t\) to state \(s^{\prime}\) in the next stage when action \(a\) is taken. Finally, the expected reward received after the transfer is represented by \(R\left( {s,\,\,s^{\prime}} \right)\). Here, to show the difference in importance between current and future rewards, the discount factor \(\gamma\) is used, which is a value between 0 and 1. An example of a state is the benefit a certain QoS may bring to the network (Kochovski et al. 2019). Usually, the use of RL for service placement leads to a significant improvement in performance (Baek et al. 2019). For example, in Sefati and Navimipour (2021), MDP is used to increase reliability in combination with Ant Colony Optimization (ACO). The authors prove that it is more efficient than previous studies (Liu et al. 2022).

Recently, Multi-objective Reinforcement Learning (MORL) has gained much attention in research (Eyckerman et al. 2022). In addition to the elements of a normal MDP, it has an additional parameter called the preference space. It takes a preference as input and returns a utility scalar for each action in a given state. The goal is to maximize utility.

4.4.1 Q-learning

Q-learning is a model-free RL algorithm in the sense that it does not require a model of the environment. It finds an optimal policy by maximizing the expected value of the total reward in all successive steps, where “Q” refers to the function computed by the algorithm (Gasmi et al. 2022). The agent tries to add the weighted reward of future states to the reward of the current state to maximize the total reward. The weight of a step that lies \(\Delta t\) steps in the future is \(\gamma^{\Delta t}\), where \(\gamma\) is the discount factor. The algorithm has a function to compute the quality of a state-action pair, which is defined as \({\text{Q:S}} \times {\text{A}} \to {\mathbb{R}}\). At any time \(t\), the agent chooses an action \(a_{t}\), observes a reward \(r_{t}\), and then enters a new state \(s_{t + 1}\). This updates the value of Q. Based on the Bellman equation, Q-learning updates the Q-value as follows:
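In its standard textbook form, which is assumed here to be the form intended, the update reads:

```latex
% Tabular Q-learning update: alpha is the learning rate, gamma the discount factor
Q(s_t, a_t) \leftarrow (1 - \alpha)\, Q(s_t, a_t)
  + \alpha \left( r_t + \gamma \max_{a} Q(s_{t+1}, a) \right)
```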

In the above equation, α is the learning rate and is a value between 0 and 1. The Q-learning algorithm continues until it reaches a terminal state.
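A tabular Q-learning loop can be sketched as follows. This is a minimal illustration, not an implementation from the surveyed papers: the epsilon-greedy exploration, the random tie-breaking, the `step` callback interface, and the toy corridor environment `chain_step` are all assumptions introduced here.

```python
import random
from collections import defaultdict


def chain_step(state, action):
    """Hypothetical toy corridor with states 0..3; reaching state 3 pays 1 and ends."""
    nxt = min(state + 1, 3) if action == "right" else max(state - 1, 0)
    return nxt, (1.0 if nxt == 3 else 0.0), nxt == 3


def q_learning(step, actions, start_state, episodes=500, max_steps=100,
               alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a Q-table; `step(state, action)` returns (next_state, reward, done)."""
    rng = random.Random(seed)
    q = defaultdict(float)  # unseen state-action pairs start at 0
    for _ in range(episodes):
        state = start_state
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                best = max(q[(state, b)] for b in actions)
                # exploit, breaking ties at random
                action = rng.choice([b for b in actions if q[(state, b)] == best])
            next_state, reward, done = step(state, action)
            # A terminal state has no future value.
            best_next = 0.0 if done else max(q[(next_state, b)] for b in actions)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break  # the episode ends at a terminal state
    return q
```

For instance, `q_learning(chain_step, ["left", "right"], 0)` learns to prefer moving right in every non-terminal state of the corridor.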

Appendix A-8 shows the Q-learning algorithms used for service placement. For example, Wang et al. (2019) use Q-learning to solve the online service tree placement problem. They show that for an edge network of size n and a service tree with m nodes, the action space has an exponential time complexity of \(O\left( {n^{m} } \right)\). To resolve this problem, they propose an efficient hierarchical placement strategy to significantly reduce the action space. In another research (Yan et al. 2020), Q-learning is used for content placement (cache) to maximize the efficiency of the F-RAN. Also, Nsouli et al. (2022) address the accessibility of cars in VEC. In another study (Ghobaei-Arani et al. 2018), hybrid resource provisioning for cloud networks is addressed.

4.4.2 R-learning

R-learning is similar in concept to Q-learning. Both use Q-tables, states, actions, rewards, and losses. However, the main difference is that R-learning uses the concept of average reward rather than discounted reward. It calculates the average total transfer reward between all states and actions. R-learning occasionally grants rewards, but applies penalties whenever the model fails to meet expectations. Farhat et al. (2020) use R-learning to maximize voluntary resources. The predictions of this algorithm help to increase QoS and also keep the pressure on cloud resources low. This is done by studying user behavior during service requests.
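The difference from Q-learning can be made concrete with a sketch of a single R-learning update. It assumes the classical average-reward formulation, in which a running estimate of the average reward (called `rho` here) replaces the discount factor; the names `rho`, `alpha`, and `beta` are introduced for illustration and do not come from Farhat et al. (2020).

```python
from collections import defaultdict


def r_learning_update(r_table, rho, state, action, reward, next_state,
                      actions, alpha=0.1, beta=0.01):
    """Apply one R-learning step in place and return the updated average reward."""
    best_next = max(r_table[(next_state, b)] for b in actions)
    # The value update uses the reward relative to the average reward rho,
    # with no discount factor.
    r_table[(state, action)] += alpha * (
        reward - rho + best_next - r_table[(state, action)])
    best_here = max(r_table[(state, b)] for b in actions)
    best_next = max(r_table[(next_state, b)] for b in actions)
    # The average reward is adjusted only when the action taken is greedy.
    if r_table[(state, action)] == best_here:
        rho += beta * (reward - rho + best_next - best_here)
    return rho
```

Starting from an empty table, `r_table = defaultdict(float)` and `rho = 0.0`, repeated calls along a trajectory refine both the relative values and the average-reward estimate.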

4.4.3 Meta-learning

The data of a meta-learning system usually comes from different domains. It is based on learning biases. Here, bias refers to the assumptions that influence the choice of explanatory hypotheses. Meta-learning is concerned with two aspects of learning biases, which are

- Declarative bias::

-

It determines how the hypothesis space is represented and has a major impact on the search space (e.g., representing hypotheses using only linear functions).

- Procedural bias::

-

It imposes restrictions on the ordering of inductive hypotheses (e.g., favoring smaller hypotheses).

Recently, meta-learning systems have been equipped with the power of neural networks. This has a strong effect on accuracy when a large amount of training data is injected. However, since it involves second-order gradient computation, it can increase the execution time, especially for large-scale problems. Therefore, in problems such as service placement, which inherently have a large search space, meta-learning can greatly affect the portability of the model and the level of decision-making. To overcome this problem, some research (Chen et al. 2022a) uses heuristics. One example is the elimination of the second-order gradient calculations. For further reading, readers can refer to Chen et al. (2020), Zhang et al. (2021), Arif et al. (2020).

4.4.4 K-armed Bandit

In this classic problem, you repeatedly choose among \(K\) levers (arms), as on a slot machine. Each lever triggers an action. After each choice, you receive a numerical reward drawn from a probability distribution that depends on the chosen action. The goal of this method, like other RL methods, is to maximize the total expected reward in a given period. For each action \(a\), we denote its value by \(q(a)\). The action value is calculated as follows
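In the usual formulation, assumed here, the value of an action is the expected reward given that the action is selected. The symbols \(A_t\) and \(R_t\), the action chosen and the reward received at step \(t\), are introduced for this sketch.

```latex
% Value of action a: expected reward when a is chosen at step t
q(a) = \mathbb{E}\left[ R_t \mid A_t = a \right]
```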

The most important point in this process is that you do not know the value of an action in advance. Instead, you try to greedily choose the action that you think is likely to be worth more than the others (Borelli et al. 2022). For this, you may have estimates of the value of an action \(a\) at step \(t\), which we denote by \(Q_{t} (a)\). Let us denote the true value by \(q^{*} (a)\). Therefore, we want the value of \(Q_{t} (a)\) to be as close as possible to the value of \(q^{*} (a)\).

Some research has used the K-Armed Bandit for service placement. For example, in Huang et al. (2022), service storage at the edge is solved by considering edge servers as agents. Here, services are considered as arms, service placement decisions are considered as actions, and system tools are considered as rewards.
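The estimation of action values can be sketched with an epsilon-greedy agent that keeps a running sample average per arm. This is a generic illustration, not the method of Huang et al. (2022); the `pull` callback, the exploration rate, and the function name are assumptions.

```python
import random


def epsilon_greedy_bandit(pull, k, steps=1000, epsilon=0.1, seed=0):
    """Estimate the value of k arms; `pull(arm)` returns a numerical reward."""
    rng = random.Random(seed)
    estimates = [0.0] * k   # current estimate of each arm's value
    counts = [0] * k        # number of times each arm was pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(k)                             # explore
        else:
            arm = max(range(k), key=lambda a: estimates[a])    # exploit
        reward = pull(arm)
        counts[arm] += 1
        # Incremental sample average: moves the estimate toward the new reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts
```

With a small exploration rate, most pulls go to the arm that currently looks best, while occasional random pulls keep the other estimates from going stale.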

4.4.5 Bayesian optimization

In Bayesian Optimization (BO), operations are performed based on previous observations and the posterior distribution of the solution vector (Eyckerman et al. 2022). We assume that the utility function \(T_{resp}^{\max } (A)\) follows a normal distribution. After \(d\) iterations, BO modifies the prior belief function based on the posterior distributions. It uses an “acquisition function”, such as Expected Improvement (EI), to select the settings of the iterations. Let \(D_{d} = \{ (a_{1} ,\,\,T_{resp}^{\max } (a_{1} )),\,...,\,\,(a_{d} ,\,\,T_{resp}^{\max } (a_{d} ))\}\) be the set of prior observations after \(d\) iterations. We assume that it follows a normal distribution with mean \(\mu (0)\) and covariance kernel function \(K(0,0)\), i.e., \(p(T_{resp}^{\max } (A)) = N(\mu ,K)\). We also formulate the mean and variance as follows:
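A standard form of these two quantities is the Gaussian-process posterior, sketched below under the assumption of noise-free observations. The symbols \(\mathbf{k}_d(a)\) (covariances between a candidate \(a\) and the observed settings), \(\mathbf{K}_d\) (covariance matrix of the observed settings), \(\mathbf{y}_d\) (observed utilities), and \(\boldsymbol{\mu}_d\) (prior means at the observed settings) are introduced here and may differ from the notation of the cited work.

```latex
% Posterior mean at a candidate setting a after d observations
\mu_d(a) = \mu(a) + \mathbf{k}_d(a)^{\top} \mathbf{K}_d^{-1} \left( \mathbf{y}_d - \boldsymbol{\mu}_d \right)

% Posterior variance at a
\sigma_d^{2}(a) = K(a, a) - \mathbf{k}_d(a)^{\top} \mathbf{K}_d^{-1} \mathbf{k}_d(a)
```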

Roberts et al. (2018) use BO to automate the placement of time series models for IoT healthcare applications. For details of other research, see Table 11 in the Appendix.

4.4.6 SARSA

Like Q-learning, SARSA is one of the well-known reinforcement learning methods. Here, the agent tries to find the optimal policy \(\pi^{*} (s):{\text{S}} \to {\text{A}}\) by minimizing a cost function (Sami et al. 2020). After checking the previous rewards, the next action \(a_{t + 1}\) is selected using the state value function \(V^{\pi } (s)\). Since the SARSA method assumes that the agent does not know the environment, the action value function \(Q(s,a)\) is used. Action selection is greedy based on \(Q(s,a)\). In SARSA, the optimal policy \(\pi^{*}\) is defined as follows:

Sami et al. (2020) perform optimal scaling and placement in each cluster of fog nodes by finding \(\pi^{*}\). For the details of other research, see Table 11 in the Appendix.
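The core of SARSA can be sketched as a single on-policy update together with greedy action selection. This sketch uses the common reward-maximizing convention; under the cost-minimizing framing used in the text, costs can be passed as negative rewards. The function names and default parameters are assumptions and do not come from Sami et al. (2020).

```python
from collections import defaultdict


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9, done=False):
    """On-policy update: the target uses the action actually chosen next."""
    target = reward if done else reward + gamma * q[(next_state, next_action)]
    q[(state, action)] += alpha * (target - q[(state, action)])


def greedy_policy(q, state, actions):
    """Select the action with the highest estimated value in `state`."""
    return max(actions, key=lambda b: q[(state, b)])
```

Unlike Q-learning, the target depends on `next_action`, so the learned values reflect the behavior the agent actually follows, including its exploratory moves.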

4.4.7 Deep RL

One of the drawbacks of Q-learning is low scalability due to the large number of state-action pairs. This can mislead the agent in choosing the optimal policy (Zabihi et al. 2023). A deep Q-network (DQN) is used to overcome this problem. Previous research has used several variants, which we do not review here (Liu et al. 2020; Qi et al. 2020). DQN uses a neural network to generate a value function vector. Despite its many advantages, DQN may suffer from overfitting due to the high correlation between inputs. To overcome this problem, a replay buffer is typically used (Taheri-abed et al. 2023). The loss function in DQN is defined as follows:
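In its commonly used form, assumed here, the loss at training iteration \(i\) is the expected squared error between the target \(y\) and the network's prediction, with the expectation taken over transitions \((s, a, r, s')\) sampled from the replay buffer:

```latex
% DQN loss for network parameters theta_i
L_i(\theta_i) = \mathbb{E}_{(s,\,a,\,r,\,s')} \left[ \left( y - Q(s, a \mid \theta_i) \right)^{2} \right]
```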

In the above equation, the target value \(y = r + \gamma \mathop {\max }\limits_{{a^{\prime}}} Q(s^{\prime},\,\,a^{\prime}|\theta^{\prime}_{i} )\) is obtained from the Bellman equation. Training minimizes the error between the target Q-value and the predicted Q-value. Table 12 shows the major studies on service placement using deep RL. For example, Memon and Maheswaran (2019) use DDPG for SFC-DOP in dynamic and complex IoT. DDPG can handle high-dimensional inputs and continuous output actions. Therefore, it is suitable for more complex IoT network scenarios with a large and continuous action space. Qi et al. (2020) use deep RL for the policy and value function. Here, the input to the neural network is a temporal state. The parameters of the shared layers can capture the resource differences between multiple tasks and learn to minimize the total task execution time in each application.
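The target value and the replay buffer described in this subsection can be sketched as follows. This is a minimal example under stated assumptions: the online and target networks are represented by plain callables that return a list of Q-values per action, the loss is taken to be the mean squared error between the target \(y\) and the predicted Q-value, and all names (`ReplayBuffer`, `td_target`, `dqn_loss`) are hypothetical.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size buffer; uniform sampling breaks the correlation between samples."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        return rng.sample(list(self.buffer), batch_size)


def td_target(reward, next_state, done, q_target, gamma):
    """Bellman target y = r + gamma * max_a' Q(s', a' | theta')."""
    if done:
        return reward  # no bootstrapping from a terminal state
    return reward + gamma * max(q_target(next_state))


def dqn_loss(batch, q_online, q_target, gamma=0.99):
    """Mean squared error between the target y and the predicted Q(s, a | theta)."""
    errors = []
    for state, action, reward, next_state, done in batch:
        y = td_target(reward, next_state, done, q_target, gamma)
        errors.append((y - q_online(state)[action]) ** 2)
    return sum(errors) / len(errors)
```

In a full implementation, the two callables would be neural networks, and the parameters of the target network would be copied from the online network only periodically to keep the target stable.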

5 Discussion and analysis of results

This section analyzes research questions RQ1 to RQ7 based on the reviewed studies and highlights directions for future research.

- RQ1: Most of the algorithms used in service placement are related to which area of machine learning?

- Analysis result:

As can be seen in Fig. 11, the use of reinforcement learning methods rises sharply from 2020 onward. Over almost the same period, the number of clustering studies decreases slightly. Due to the emergence of new reinforcement learning methods combined with deep learning, this area is expected to expand further in the coming years. The emergence of new methods such as adversarial RL, actor-critic, and Soft Actor-Critic (SAC) may also accelerate this growth. Interested readers are advised to refer to Zabihi et al. (2023) for a more in-depth study.

Figure 12 shows that deep RL methods are used in almost 30% of service placement studies. The details of the deep RL methods are shown in Table 12 in the Appendix. After deep RL, about 27% of the studies use pure RL methods, so RL-based approaches together account for more than half of the reviewed work. Since 2012, when deep neural networks began to be combined with these methods, they have become more popular. The main reason for this popularity is the compatibility of these methods with model-free environments. Among the other machine learning methods, unsupervised methods are more popular (11%) than supervised and semi-supervised methods (8% and 3%, respectively). The combination of RL with deep neural networks has thus created a new trend in solving the service placement problem, and these algorithms are expected to be combined with newer technologies in the coming years.

- RQ2: What methods are used to estimate the amount of current network resources, and which resource estimation method is more popular?

- Analysis result:

As we can see in Fig. 13a, 51% of the reviewed studies use the on-demand method to estimate system resources. About 28% use the dynamic method to estimate the resources available in the system, 17% use the predictive method, and about 4% use the profiling method. Profiling methods are mostly used to estimate resources in static environments.

- RQ3: The purpose of service placement is optimization/trade-off between which parameters and metrics?

- Analysis result:

The answer to this question determines what percentage of the algorithms optimize a single metric and what percentage seek a compromise among several metrics. As we can see in Fig. 13b, about 81% of the methods seek a compromise among several parameters, and about 19% optimize a single parameter. A compromise among multiple parameters is more in line with real-world requirements.

- RQ4: Can the type of application architecture influence the choice of method and its efficiency?

- Analysis result:

In the real world, most applications today are developed in a distributed manner. Managing separate parts of programs that have interdependencies requires methods that can perform resource management dynamically. According to our findings in Fig. 14a, about 96% of applications and related programs are developed in a distributed fashion, and only about 4% are developed in a monolithic fashion. Managing service placement for monolithic applications is much simpler, but it may not achieve adequate performance.

- RQ5: What is the dominant paradigm used in the interface layer?

- Analysis result:

The answer to this question shows how often each interface paradigm, which can play a major role in increasing network efficiency, appears in the studies. As shown in Fig. 14b, IoE is an emerging technology that will gradually affect networked applications. Complementary technologies such as Fog Radio Access Network (FRAN), FC, and MEC are expected to be integrated soon. Currently, IoT and FC are the most popular paradigms in the studies. Fog computing was introduced by Cisco in 2012. However, with the increase of mobile wireless devices with high processing power, it seems that technologies such as FRAN and UDEC will become more popular in the near future.

- RQ6: What simulation tools have been used in previous research to evaluate efficiency?

- Analysis result:

As can be seen in Fig. 15, 20% of the studies perform simulations in Python, and about 30% use MATLAB to analyze the results. Well-known tools such as CloudSim and iFogSim are each used in 11% of the studies. Reinforcement learning methods are often simulated with MATLAB and Python.

- RQ7: What were the main objectives of previous research?

- Analysis result:

Fig. 16 shows the main metrics used in previous research. As can be seen from the figure, delay, energy, QoS, and response time are the most important metrics used in the research.

6 Future directions and research challenges

In general, the fundamental challenges in service placement are task correlation, dynamic environment, server heterogeneity, resource management, mobility, scheduling handling, destination node selection for offloading, energy consumption, delay, and efficient resource allocation. The eighth research question addresses the challenges, open issues, and future research directions of service placement.

- RQ8: What are the most important open issues in the future?

- Analysis result:

Based on the literature review, we have identified several open issues, which we describe below:

7 IoE

Recently, in addition to IoT, a new paradigm called the Internet of Everything (IoE) has emerged. IoT emerged to meet the needs of communication and connectivity between things, while IoE emerged as a more general paradigm. In addition to communication between things, it seeks to address human-to-human communication, processes, and data to create a digital world. In simpler terms, IoT deals with issues such as collecting, sending, and receiving data, while IoE addresses the collaboration of intelligent systems and advanced analytics for processing big data. Therefore, service placement in heterogeneous and dynamic environments is more closely related to IoE. In new network applications, the heterogeneity of nodes can cause many inconsistencies in inter-node communication.

7.1 Mobility management

One example of node heterogeneity in IoT and IoE is mobility. The network topology may change rapidly as nodes move, so a service placement decision can quickly become obsolete. Mobility management is closely related to issues such as power consumption, latency, and security. Next Generation Critical Communications (NGCC), which provides emergency services to the community, has a strong relationship with IoT and IoE. The main application areas of NGCC are disaster management, smart cities, citizen security, protection of human life, and e-health. It relies heavily on cellular communication, MEC, and FC, and an important part of IoE research is expected to flow into this area. Suppose a resource was detected at a given position a few seconds ago. The algorithm must work intelligently and quickly enough to use the resource before it becomes unavailable due to mobility. The problem becomes more difficult when several, often conflicting, objectives must be optimized while accounting for mobility. Therefore, it may be necessary to run the service placement algorithm almost continuously. Simply put, service placement algorithms must be intelligent enough to perform offloading based on a snapshot of the state of available resources.

7.2 Multi-objective trade-offs

Service placement in the real world is a multi-objective issue. This may impose more processing load on the system. In such a decision, it is important to pay attention to the limitations of resources and the environment. The trade-off between multiple metrics in a heterogeneous and dynamic network is likely to be much more complicated than in single-objective problems.
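One common way to reason about such trade-offs is Pareto dominance: a placement is kept only if no other placement is at least as good in every metric and strictly better in at least one. The sketch below filters hypothetical candidate placements described by (delay, energy) pairs, both to be minimized. The metric pairing and the sample values are illustrative assumptions and do not come from the reviewed studies.

```python
def dominates(a, b):
    """True if a is no worse than b in every metric and strictly better in one.

    All metrics are assumed to be minimized (for example delay and energy).
    """
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points):
    """Return the non-dominated points, preserving the input order."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical placements as (delay in ms, energy in J).
candidates = [(10, 5), (8, 7), (12, 4), (9, 8), (11, 6)]
front = pareto_front(candidates)
```

The pairwise comparison grows quadratically with the number of candidate placements, which illustrates why multi-objective placement can add processing load in large and dynamic networks.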

7.3 Processing overhead

Applications that generate large volumes of data are proliferating in the IoE. As a result, the consumption of processing resources becomes an issue in service placement. On the other hand, advanced ML methods such as deep neural networks themselves require huge processing resources. Handling such large volumes of data, especially for multi-objective problems, adds to the difficulties. Performing such tasks, even if they are not continuously iterated, requires a centralized entity. Currently, among the computing paradigms, only cloud data centers can handle such a volume of computations for training ML models. Moreover, cloud data centers have higher availability because they are constantly powered, unlike edge/fog servers.

Some ML methods, such as RL, require interaction with the environment. This can make service placement more difficult. Remember that in RL, the environment may be constantly changing. In addition, the existence of heterogeneity in resources can complicate the situation. For this reason, the execution frequency of RL algorithms can be much higher than other ML methods, which increases the cost. Currently, a large part of RL research is focused on finding a trade-off between exploration and exploitation (Xiao et al. 2022).

7.4 Environmental heterogeneity

One of the major limitations that computing paradigms impose on ML is environmental heterogeneity. For example, one of the emerging ML methods for service placement is Federated Learning (FL) (Brecko et al. 2022). It is a distributed technique for building a global model by learning from multiple decentralized edge clients. The main advantages of FL are scalability and user privacy (Qian et al. 2019). However, the inherently heterogeneous environment of the IoE can affect the computational complexity of FL (Dworzak et al. 2023). End devices and edge/fog servers may have very different hardware/software infrastructures. For example, they may have significant differences in CPU, RAM, link quality, and operating system. As mentioned earlier, one idea may be to perform the ML training phase using engines such as Spark and TensorFlow on the central cloud (Xu et al. 2023).
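To illustrate how FL builds a global model from decentralized clients, the sketch below shows federated averaging (FedAvg), a widely used aggregation rule in which client parameters are averaged with weights proportional to the local data size. The text above does not state which aggregation rule the cited works use, so FedAvg is an assumption here, and the function and parameter names are hypothetical.

```python
def fedavg(client_weights, client_sizes):
    """Aggregate client parameter vectors into a global parameter vector.

    client_weights: list of equally long lists of floats, one per client.
    client_sizes: number of local training samples held by each client.
    """
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("at least one client must hold training data")
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, value in enumerate(weights):
            # Clients with more data contribute proportionally more.
            global_weights[i] += (size / total) * value
    return global_weights
```

Because only model parameters leave the device, raw user data stays local. However, heterogeneous clients finish their local training at different times, which is one way in which the heterogeneity discussed above complicates aggregation.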

As a rule of thumb, the training phase can be run with large data on cloud data centers, and then the test/validation phase can be run with small data on edge/fog servers (Zhou et al. 2019). Recently, the use of pre-trained models to reduce the burden of the training phase has received a lot of attention from researchers (Sufian et al. 2020; Hsu et al. 2022). First, the model is trained with a lot of data in the cloud data center. Then, the pre-trained model is copied to the edge nodes and used many times. However, some entities/users may want to incrementally change the built model from time to time. Therefore, there is a need to formulate standards for integrated deployment of code and models based on different deployment policies. Also, debugging the models created by ML in a decentralized way may be another future challenge (Nisha 2018).

8 Conclusion and future trends

This study reviewed machine learning-based service placement research. The literature shows that the use of reinforcement learning methods has increased significantly since 2020. The main reason for this popularity is the compatibility of these methods with model-free environments. Due to the emergence of new reinforcement learning methods combined with deep learning, this field is expected to expand further in the coming years. The emergence of new methods such as adversarial RL, Actor-Critic, and Soft Actor-Critic (SAC) may also accelerate this growth. In addition, deep RL methods are used in nearly 30% of service placement studies. Among other machine learning methods, unsupervised methods are more popular (11%) than supervised and semi-supervised methods (8% and 3%, respectively).

Among the reviewed studies, 51% use the on-demand method to estimate system resources. About 28% use the dynamic method to estimate the resources available in the system, 17% use the predictive method, and about 4% use the profiling method. About 81% of the methods seek a compromise among several parameters, and about 19% optimize a single parameter. About 20% of the studies perform simulations in Python, and about 30% use MATLAB to analyze the results. Well-known tools such as CloudSim and iFogSim are each used in 11% of the studies. The literature review also revealed that delay, energy, QoS, and response time are the most important metrics used in service placement research.

Based on the literature review, we identified several open issues, including IoE, mobility, multi-objective trade-offs, processing overhead, and environmental heterogeneity.