Abstract

Machine learning and medical diagnostic studies often struggle with the issue of class imbalance in medical datasets, complicating accurate disease prediction and undermining diagnostic tools. Despite ongoing research efforts, specific characteristics of medical data frequently remain overlooked. This article comprehensively reviews advances in addressing imbalanced medical datasets over the past decade, offering a novel classification of approaches into preprocessing, learning levels, and combined techniques. We present a detailed evaluation of the medical datasets and metrics used, synthesizing the outcomes of previous research to reflect on the effectiveness of the methodologies despite methodological constraints. Our review identifies key research trends and offers speculative insights and research trajectories to enhance diagnostic performance. Additionally, we establish a consensus on best practices to mitigate persistent methodological issues, assisting the development of generalizable, reliable, and consistent results in medical diagnostics.


1 Introduction

Class imbalance remains one of the main challenges in data mining. It arises when one class is underrepresented in a dataset while the other(s) prevail, giving an uneven class distribution. The prevalent class is called the majority class, and the class containing the rare cases is called the minority class. Conventional machine learning algorithms are biased towards the majority class and tend to neglect the minority class (Sun et al. 2009), mainly because they are constructed under the assumption of balanced datasets (Krawczyk 2016). Real-world datasets are rarely balanced, so ill-prepared machine learning algorithms fail to detect the rare cases of interest, which is a major concern in research.

Medical diagnosis data are attracting growing use and interest with the progress in big data and medicine (Haixiang et al. 2017). They are therefore used to improve medical care and to build medical diagnosis aid systems. Machine learning is useful in designing such systems (Huda et al. 2016; Xiao et al. 2021; Woźniak et al. 2023); however, imbalanced medical data hinder the performance of machine learning algorithms and, consequently, of medical diagnosis systems. Medical diagnosis data can be represented in two classes: non-diseased (healthy) individuals and diseased (unhealthy) individuals. Predicting diseased patients accurately and in time allows early access to medical treatment and saves patients’ lives, which cannot be achieved without appropriate handling of class imbalance in medical datasets.

Extensive research in the literature has addressed class imbalance in general. Consequently, several methods for learning from imbalanced data have been proposed, grouped mainly into two approaches: data level and algorithmic level. The former handles class imbalance by modifying the data distribution, whether through undersampling, which eliminates instances from the majority class; oversampling, which adds duplicated or synthetic minority instances; or a hybrid of the two. The latter modifies the learning algorithms so that they account for the minority class. In addition, researchers have proposed several basic and advanced class imbalance handling methods that are applied across various domains.

Many literature reviews have been carried out on class imbalance, focusing on class imbalance handling methods only (Galar et al. 2011; Abd Elrahman and Abraham 2013; Spelmen and Porkodi 2018; Ali et al. 2019), on both methods and applications (Haixiang et al. 2017; Kumar et al. 2021), or on methods for a specific application field (Patel et al. 2020). However, class imbalance in medical diagnosis has received little dedicated attention, and the specificities of imbalanced medical data are rarely considered. Such specificities pose a unique challenge for working with medical data and require specialized techniques and methodologies to ensure the validity and generalizability of the findings. Improving existing medical diagnosis systems, and thereby human well-being, relies on medical diagnosis research. Hence, researchers and practitioners in healthcare in general, and in medical diagnosis in particular, need to be aware of these factors and keep abreast of recent advances in the field to identify starting points for their research. In this work, we analyze the literature on handling imbalanced medical datasets and formulate the following research questions to cover the knowledge gaps.

- RQ1 How can we develop a comprehensive framework for categorizing and evaluating imbalanced learning techniques tailored specifically to the complexities of medical datasets?

- RQ2 What emerging trends and future trajectories are envisaged for tackling imbalanced medical data?

- RQ3 What methodological techniques and procedural recommendations can mitigate class imbalance in research studies, with a focus on enhancing the validity and reliability of results?

We aim to emphasize research at the intersection of class imbalance in structured data and medical diagnosis through a well-designed research methodology. This paper comprehensively reviews the last decade’s research and clusters the reviewed literature on imbalanced medical datasets into three main approaches, building on the existing classification of class imbalance methods (Krawczyk 2016): the preprocessing level, which comprises data-level and feature-level methods; the learning level, which comprises algorithmic methods; and combined techniques, which hybridize the two. Related research is classified into subgroups within each approach to present the state of the art and to facilitate detailed tracking of advancements and areas for continued development. The review systematically extracts and presents detailed statistics on the medical datasets and evaluation metrics employed in the existing literature, delineating the most and least commonly used resources to offer insights into prevailing research methodologies. It synthesizes prior research outcomes concerning class imbalance in medical datasets and discusses observations from the contextual analysis. This exploration offers speculative insights into methodological concerns and practical aspects, critically evaluating the high performance of specific methodologies across diverse medical datasets. We then acknowledge the limitations of our study, which stem from non-reproducible experimental outcomes and other significant constraints encountered in the analysis of imbalanced medical data. In addition, this review identifies research trends in imbalanced medical datasets and highlights promising directions for future research that could enhance medical diagnosis performance. It also establishes best practices in this field, aiming to mitigate prevalent issues and proposing a consensus among researchers to guide future studies.

The structure of the review paper is as follows: Sect. 2 introduces the problem of class imbalance in medical datasets. Section 3 details the search methodology and describes the findings regarding the medical datasets and evaluation metrics used. Section 4 presents the preprocessing-level approaches proposed for imbalanced medical datasets, Sect. 5 presents the learning-level solutions, and Sect. 6 covers the combined techniques proposed in the literature. Section 7 synthesizes the outcomes of research works on several imbalanced medical datasets. Section 8 discusses reflections on the synthesis, highlighting speculative insights, whereas the value and limitations of the observational synthesis are pointed out in Sect. 9. Section 10 summarizes the research trends and future directions in imbalanced medical datasets research. In Sect. 11, we highlight best practices for researchers working with imbalanced medical data. Section 12 concludes the paper.

2 The problem of class imbalance in medical data

With the advancement of technology, medical data are increasingly stored as electronic medical records, in which an individual’s medical history is saved and shared with authorized users (Fujiwara et al. 2020). Medical information includes demographic data, clinical tests, X-ray images, MRI, fMRI, EEG, and other data types. Access to voluminous medical data, along with progress in the application of machine learning, has been helpful for medical care specialists and clinicians. The effectiveness of machine learning in multiple domains encourages the construction of medical diagnosis aid systems that automate diagnosis, compensate for the scarcity of medical experts in specific domains and places, and meet the vast demand for diagnosis of specific diseases. These systems are trained on historical medical data about a particular disease so that they perform well on new, unseen medical data and predict the disease. They are constructed through well-designed processes that depend on the disease and its data availability, with the help of expert knowledge. Nonetheless, class imbalance in medical data complicates the task of machine learning algorithms and diagnostic systems.

Although unhealthy people are naturally fewer than healthy people, class imbalance arises whenever the classes are unequally distributed in the dataset used to train machine learning algorithms. There are numerous sources of imbalance in medical data; they can be grouped into four patterns:

- Bias in data collection: resulting from the fact that certain groups, such as non-diabetics, are underrepresented in research because they are underdiagnosed.

- The prevalence of rare classes: in this case, the imbalance is inherent to the disease because certain conditions occur in 1 per 100,000 in the population, making the positive class rare.

- Longitudinal studies: medical studies conducted over time can result in an imbalanced dataset due to the dropout of certain patients (lost to follow-up) or a change of class over time (such as the progression from one stage to another in the case of cancer).

- Data privacy and ethics: the sensitivity of certain diseases, such as HIV, can limit access to positive classes, resulting in imbalanced datasets.

An imbalanced dataset is defined by a disproportionate distribution between classes. The Imbalance Ratio (IR), calculated as \(IR = N_{maj} / N_{min}\), indicates the extent of this disproportion, where \(N_{maj}\) and \(N_{min}\) represent the number of instances in the majority and minority classes, respectively. In binary datasets, the degree of imbalance is usually expressed as IR : 1; the further IR exceeds 1, the more severe the imbalance.
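As a minimal sketch of the IR definition above, the ratio can be computed directly from a list of class labels. The function name `imbalance_ratio` and the toy cohort are illustrative assumptions, not taken from any reviewed study.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Return IR = N_maj / N_min for a sequence of class labels."""
    counts = Counter(labels)
    if len(counts) < 2:
        raise ValueError("need at least two classes to compute an imbalance ratio")
    n_maj = max(counts.values())  # size of the largest class
    n_min = min(counts.values())  # size of the smallest class
    return n_maj / n_min


# Hypothetical toy cohort: 950 healthy (0) and 50 diseased (1) patients.
labels = [0] * 950 + [1] * 50
print(f"IR = {imbalance_ratio(labels):.0f}:1")  # prints "IR = 19:1"
```

For multi-class data, this sketch compares only the largest and smallest classes, which is one common convention among several.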

Many existing classifiers exhibit an inductive bias that favors the majority class when trained on imbalanced datasets, often at the expense of the minority class. This results in suboptimal performance in less-represented classes. For instance, in diagnoses such as cancer risk or Alzheimer’s disease, patients are typically outnumbered by healthy individuals. Unfortunately, conventional classifiers tend to prioritize high overall accuracy, potentially leading to the misclassification of at-risk patients as healthy. Such errors in classification can have grave consequences, including the inappropriate discharge of patients in need of critical care. Additionally, this predisposition can lead to unfair treatment and ethical dilemmas, as it systematically disadvantages those requiring the most medical attention, raising significant concerns about equity in healthcare diagnostics.
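To illustrate how high overall accuracy can mask the misclassification of patients, the following sketch scores a trivial model that labels everyone as healthy. The cohort sizes and helper names are hypothetical and chosen only for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of all predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of truly positive (diseased) cases that were detected."""
    n_pos = sum(t == positive for t in y_true)
    hits = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    return hits / n_pos if n_pos else 0.0


# Hypothetical cohort: 950 healthy (0) and 50 diseased (1) patients.
y_true = [0] * 950 + [1] * 50
# A degenerate classifier that always predicts the majority class.
y_pred = [0] * 1000

print(f"accuracy = {accuracy(y_true, y_pred):.2f}")  # prints "accuracy = 0.95"
print(f"recall   = {recall(y_true, y_pred):.2f}")    # prints "recall   = 0.00"
```

The model reaches 95% accuracy while detecting no diseased patient at all, which is the failure mode described above.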

Class imbalance handling methods are designed for general purposes rather than for medical diagnosis data. Applying such methods without considering the context of the disease in question or the data at hand may lead to uninterpretable and inaccurate results (Han et al. 2019). For example, synthetic minority data are generated to balance the medical data so that machine learning can learn equally from both classes (diseased and non-diseased patients); however, the synthetic data need to conform to the characteristics of the original medical data. Besides, machine learning algorithms applied to medical diagnosis need to be evaluated adequately when the data are imbalanced. Misclassifying a diseased patient is more costly than misclassifying a non-diseased patient: the former can have dangerous consequences for the patient’s life, whereas the latter may lead to further clinical investigation (Fotouhi et al. 2019). Therefore, the evaluation of machine learning models for medical diagnosis relies mainly on measuring their predictive power for minority cases (diseased patients) (Han et al. 2019). Nevertheless, a well-performing medical diagnosis system is expected to provide the best compromise in predicting diseased and non-diseased patients and to limit all kinds of misclassification costs.

As noted above, synthetic data must adhere to the characteristics of the original medical data. Otherwise, the automatic application of generic methods such as SMOTE (Chawla et al. 2002) may introduce biases and patterns not present in the original data, as well as irrelevant or biologically impossible information. This may degrade overall model performance for several reasons: inaccurate representation of rare-case characteristics, leading to unreliable model predictions; creation of synthetic data only in the neighborhood of the rare cases, causing overfitting and poor generalization; and worse feature representation, in which a variable’s impact on the target is increased, decreased, or reversed. Researchers have thus worked over the last decade on solutions that avoid these drawbacks, such as creating synthetic instances that are more representative of the underlying distribution, reducing the risk of introducing noise, and ensuring better generalization. Misdiagnosis occurs because rare cases are difficult to learn, and researchers need to stay up to date with the latest advances so that they can incorporate improvements into their work and maximize the utility of the available data. Our motivation in this paper is therefore to classify pertinent techniques into several strata and to provide a critical review of the relevant literature, together with a synthesis of research outcomes on reference class imbalance datasets based on several metrics. This enriches the classification by highlighting the advantages and disadvantages of each stratum, thus opening up new research directions in the field.

3 Research methodology and basic statistics

This section details the search methodology used for data collection and the statistics describing the extracted data from the reviewed literature. The proposed review process follows most of the common guidelines proposed by Kitchenham (2004) for performing systematic literature reviews in software engineering research.

3.1 Data collection

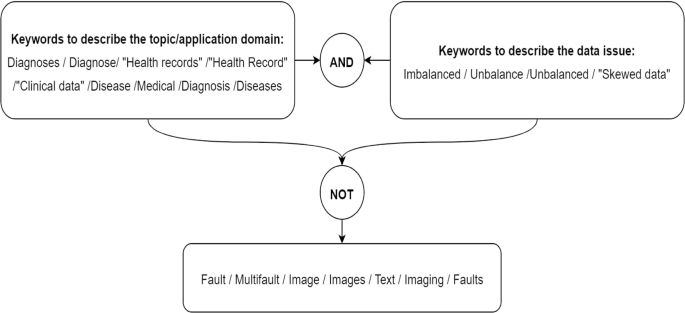

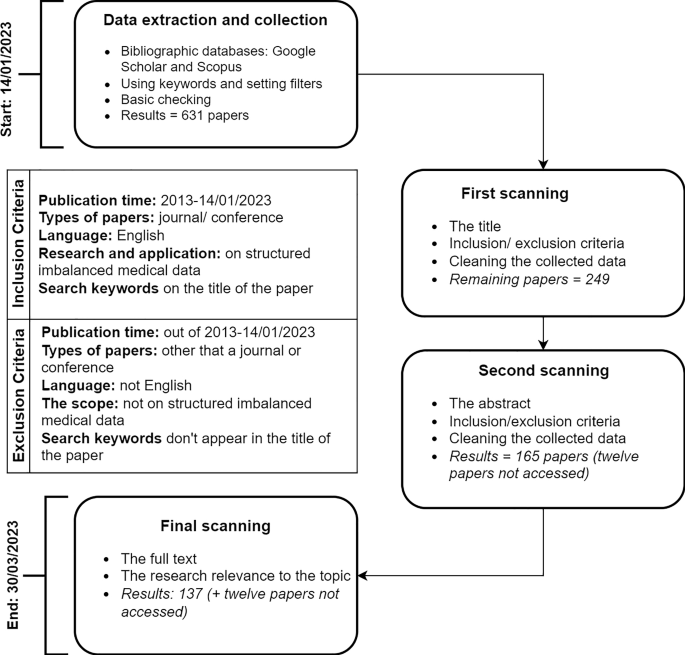

Our search methodology defined the bibliographic databases, search keywords, inclusion and exclusion criteria, and time range for our literature review. We selected Google Scholar and Scopus as the bibliographic databases for collecting papers. The search keywords are shown in Fig. 1, and the inclusion and exclusion criteria used for paper selection, together with the search process, are illustrated in Fig. 2.

We used advanced search in the Google Scholar and Scopus databases, restricting keyword matches to the title and setting the time range from 2013 to 14/01/2023. We used keywords that capture class imbalance as a topic, like “imbalanced”, and keywords that capture medical data in general, like “medical”, as depicted in Fig. 1. In addition, based on some trials, we eliminated some search terms because of their widespread occurrence with search keywords like “diagnosis”, to ensure the relevance of the results. The preliminary results were 409 papers in Google Scholar and 222 in Scopus, which we added to our reference manager. A first cleaning of the collected set, removing duplicates and some unrelated results, left 249 papers. Afterward, we screened the collected papers by title, and by abstract if needed, to retain only papers relevant to our review scope and selection criteria. This second screening yielded 165 papers pertinent to our review topic. A final full-text screening of the remaining results left 150 papers, twelve of which were not accessible. As a result, 137 papers are reviewed in this article. The diagram in Fig. 2 illustrates the data collection methodology.

3.2 Analyses of used datasets and classification-based metrics

3.2.1 Medical datasets

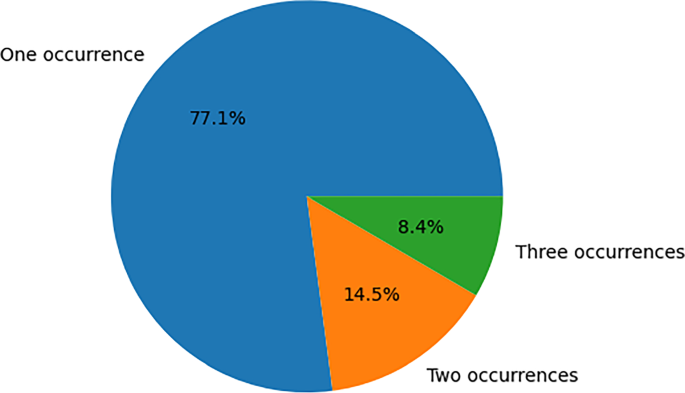

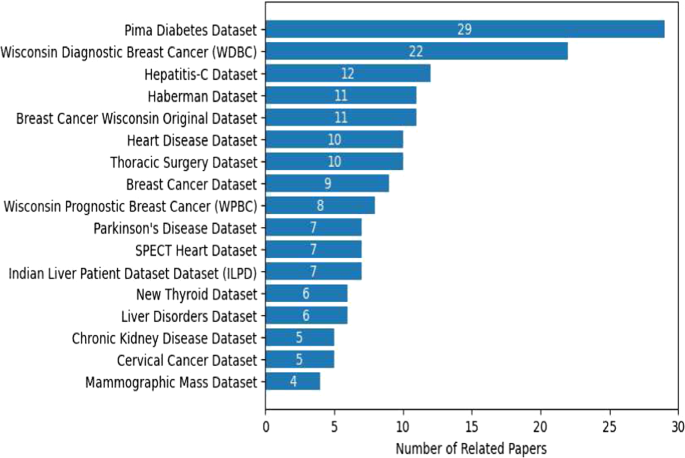

We extracted all the datasets used in the reviewed research articles and grouped them by availability: public or private. We found that 95 papers (69%) used publicly available medical datasets and 44 papers used private ones. Some articles used both public and private datasets, and three papers did not clearly specify the datasets they employed. We investigated the public datasets by extracting their usage frequency and partitioned them into two groups: reference class imbalance medical datasets, which are frequently used (see Fig. 4) and are listed in Table 1, and non-reference class imbalance medical datasets, which are used less often. Figure 3 illustrates the non-reference datasets by how commonly they appear in research.

Table 1 displays the main characteristics of the medical datasets as used in the reviewed research, including the dataset size, the number of features, and the number of instances in the minority and majority classes. All the medical datasets are originally binary except for the “New Thyroid Disease Dataset,” which consists of three classes. For deeper insights into the procedural and contextual specifics of each dataset, readers should refer to the referenced data sources and the foundational studies. The degree of imbalance varies from one dataset to another, and its characterization varies between studies: a dataset regarded as highly imbalanced in one work is treated as moderately imbalanced in another, and a dataset whose imbalance is slight compared to another may nonetheless be more challenging. Although this points to the lack of a universally accepted quantification of imbalance severity (discussed later in Sect. 8), the datasets in Table 1 are consistently regarded as imbalanced across the literature. While reviewing the reference medical datasets, we identified an underrepresentation of certain medical domains, such as psychiatry and psychology. This absence may be linked to data scarcity, as stated by Kumar et al. (2023), or to the nature of these fields, which are often explored through unstructured, text-based data (Awon et al. 2022) and thus fall outside the primary scope of our structured data analysis.

3.2.2 Evaluation metrics and statistical tests

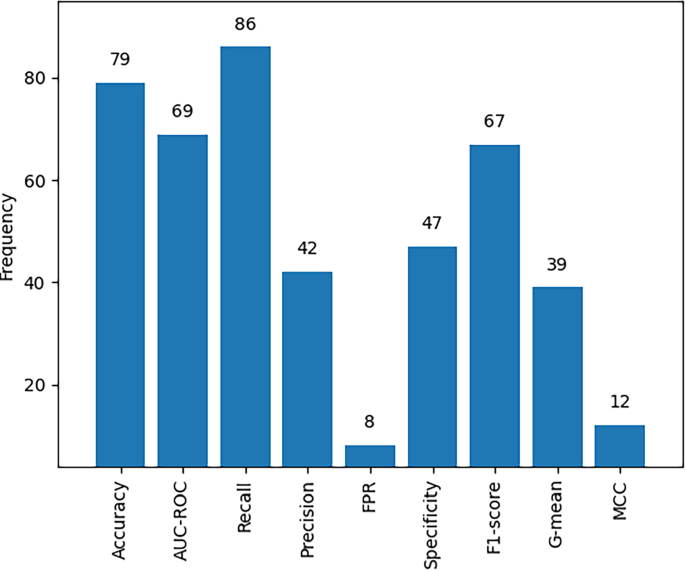

The reviewed papers drew on a variety of evaluation metrics and statistical tests to assess the performance of their proposed approaches for medical diagnosis with imbalanced datasets. We extracted all the metrics and statistical tests used in the reviewed literature and present the findings in Fig. 5 and Table 2. They are split into two groups: the first contains frequently used metrics, used at least eight times in the literature; the second contains infrequently used metrics and statistical tests, used at most seven times.

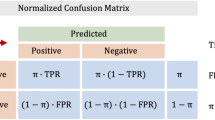

As seen in Fig. 5, nine classification-based metrics are primarily used: AUC-ROC, Recall (also known as sensitivity), Precision, Specificity, F1-score, G-Mean, FPR (false positive rate), Matthews Correlation Coefficient (MCC), and Accuracy. Recall is the most used metric: 62.8% of papers selected it to assess their proposed approaches. With imbalanced data, and especially in medical diagnosis, the focus is on correctly classifying the minority class, which makes sensitivity essential in class imbalance research. Accuracy, AUC-ROC, and F1-score are also widely used to evaluate medical diagnosis systems. Accuracy is used in 57% of the reviewed literature, although it reflects only the overall performance of a model and hides the misclassification of minority examples. Research emphasizes recall (sensitivity) to measure a model’s performance in identifying minority samples, which in our case are the unhealthy or diseased patients. Nevertheless, accuracy ranks second after sensitivity and is the sole metric used to evaluate the imbalanced classification model in several studies (Sajana and Narasingarao 2018b; Mohd et al. 2019; Babar 2021; Lan et al. 2022). The area under the ROC curve, also known as AUC-ROC or AUC, is used in 50.4% of the explored literature. It indicates the ability of the proposed approach to discriminate between the minority class and the majority class: the higher the AUC, the more powerful the model is at discriminating between classes. We therefore notice the importance attributed to the AUC in research on medical diagnosis with imbalanced data, where some researchers rely on it alone in their experimental analysis (Çinaroğlu 2017; Hassan and Amiri 2019). Another commonly used metric is the F-value (F1-score), used in 49% of the reviewed literature. The F-value informs about balanced classification; the higher its value, the better the trade-off between precision and recall. Because it accounts for both misclassified minority examples and misclassified majority examples, the F-value evaluates the model’s performance on both classes in binary classification. Its high frequency of use indicates attention to both minority and majority classes, and hence to the general performance of the proposed approaches in handling imbalanced medical datasets.
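The discriminative reading of the AUC-ROC discussed above can be made concrete with a small sketch: the AUC equals the probability that a randomly chosen minority (diseased) case receives a higher score than a randomly chosen majority case, with ties counted as one half. The function name and the scores below are illustrative assumptions, and the quadratic pairwise loop is chosen for clarity rather than efficiency.

```python
def auc_roc(y_true, scores, positive=1):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("both classes must be present to compute AUC-ROC")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive case ranked above the negative case
            elif p == n:
                wins += 0.5   # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Hypothetical model scores for 2 diseased (1) and 4 healthy (0) patients.
y_true = [1, 1, 0, 0, 0, 0]
scores = [0.9, 0.4, 0.5, 0.3, 0.2, 0.1]
print(auc_roc(y_true, scores))  # 7 of 8 pairs ranked correctly -> 0.875
```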

A second cluster of moderately used metrics contains specificity, precision, and geometric mean (G-mean). Specificity was used in 34% of papers, while 30.7% and 28% of the included literature used precision and G-mean, respectively. Precision focuses on minimizing false minority predictions, so it matters for the sound performance of medical diagnosis models; however, it is not as important as recall. Focusing on recall minimizes false negatives, a type II error in classification when the diseased class is taken as positive: we avoid predicting a diseased patient as non-diseased, and an accurate diagnosis saves lives by allowing patients to access treatment as early as possible. However, if false minority predictions are ignored, non-diseased patients are diagnosed as diseased, a type I error, which may impose extra costs on all parts of society (such as medical care providers and patients). The different degree of attention paid to each classification error may explain the difference in the use of recall and precision in medical diagnosis research. Specificity and G-mean are used frequently, but less so than metrics like recall and accuracy. Some research works (Naghavi et al. 2019; Liu et al. 2020; Ibrahim 2022) select specificity, G-mean, or both alongside recall to evaluate the proposed approaches. The G-mean of sensitivity and specificity shows the compromise between both metrics; when reported with sensitivity, it also reveals the specificity score. Besides, specificity quantifies the model’s ability to identify the majority class, so knowing the specificity alongside the sensitivity illustrates the balance between them. These relationships mainly explain the relatively lower use of specificity and G-mean compared to recall. However, other research works pay considerable attention to recall without referring to specificity or G-mean (Sun et al. 2021; Mienye and Sun 2021; Shi et al. 2022), which ignores the balance between correctly identifying diseased patients and correctly identifying non-diseased patients.

Moreover, the Matthews correlation coefficient (MCC), which may reflect classification performance even better than the F-value and accuracy (Xu et al. 2020), is less frequently used. The false positive rate (FPR), which measures the proportion of non-diseased individuals misdiagnosed as diseased, is also considered, although less than other metrics: 9% of papers used it. Thus, researchers largely converge on other metrics, such as accuracy and the F-value, rather than MCC and FPR, to quantify the performance of their proposed disease diagnosis models. Some research works used MCC and FPR together with standard metrics to better analyze the model’s effectiveness (Shilaskar et al. 2017; Sadrawi et al. 2018; Cheng and Wang 2020).
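The frequently used metrics discussed in this section all derive from the four cells of the binary confusion matrix. The sketch below uses their standard definitions with the diseased (minority) class as positive; the helper names and the example counts are hypothetical, and returning 0.0 for an undefined ratio is a convention assumed here for simplicity.

```python
import math


def _ratio(num, den):
    """Safe division: return 0.0 when the denominator is zero (assumed convention)."""
    return num / den if den else 0.0


def diagnostic_metrics(tp, fp, tn, fn):
    """Standard metrics from confusion-matrix counts (positive = diseased)."""
    recall = _ratio(tp, tp + fn)          # sensitivity
    specificity = _ratio(tn, tn + fp)
    precision = _ratio(tp, tp + fp)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "g_mean": math.sqrt(recall * specificity),
        "fpr": _ratio(fp, fp + tn),       # equals 1 - specificity
        "mcc": _ratio(tp * tn - fp * fn, mcc_den),
    }


# Hypothetical results on 50 diseased and 950 healthy patients.
for name, value in diagnostic_metrics(tp=30, fp=45, tn=905, fn=20).items():
    print(f"{name:12s} {value:.3f}")
```

In this hypothetical example the accuracy is 0.935 while recall is only 0.6 and precision 0.4, which shows why accuracy alone is a weak summary on imbalanced data.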

Table 2 groups the uncommonly used metrics and statistical tests with their frequency of use in the reviewed research. Statistical tests such as the Friedman test, the Wilcoxon signed-rank test, and the Holm test are used, albeit occasionally, which means researchers turn to tools other than evaluation metrics to compare proposed approaches with existing ones. The area under the precision-recall curve, also known as AUC-PR, is used only six times, although it is known as an appropriate metric for imbalanced classification (Huo et al. 2022; Albuquerque et al. 2022). A high AUC-PR means high precision and recall; therefore, it summarizes the model’s predictive power in minority and majority classes. Other evaluation metrics are used in only a few studies; the need for metrics adapted to the proposed models may explain this variety and can change their interpretation.

4 Pre-processing level

4.1 Feature level

The feature level covers all methods that operate on the feature space to treat class imbalance in the data. One such method is feature selection, a widely used preprocessing procedure in different machine learning tasks that employs various techniques to retain discriminating features. Another is feature extraction, which creates new features from the initial feature space to keep most of the information in a smaller set of features. Both methods are generally used to deal with high-dimensional data, where the selected or extracted features are expected to be informative and to facilitate the learning process and model generalization. Alternatively, feature weighting is found in the literature to improve recognition of the class of interest, which is usually rare in medical applications such as medical diagnosis and risk prediction. Recently, these methods have been proposed to handle imbalanced learning, whether as stand-alone approaches (Zhang and Chen 2019a; Li et al. 2022; Shakhgeldyan et al. 2020), discussed in this section, or combined with other class imbalance techniques (Wang et al. 2020; Tang et al. 2021; Lijun et al. 2018).

Feature-level methods are used to tackle class imbalance and reduce dimensionality (Zhang and Chen 2019a; El-Baz 2015; Sridevi and Murugan 2014; Li et al. 2022). Zhang and Chen (2019a) selected the optimal breast tumor features using an improved Laplacian score (LS), which offered a better compromise between computational effort and classification performance and surpassed rough set-EKNN (El-Baz 2015) and feature selection-multiple layer perceptron (FSMLP) (Sridevi and Murugan 2014). Similarly, in Li et al. (2022), the selection of interpretable features using functional principal component analysis on longitudinal data achieved more accurate data categorization and reduced computing complexity. Filters and wrappers have been used in disease and mortality prediction, respectively (Venkatanagendra and Ussenaiah 2019; Shakhgeldyan et al. 2020). Feature selection using filters improved the classification performance of a feed-forward neural network, SVM, XGBoost, random forest, and LDA in Venkatanagendra and Ussenaiah (2019), while a four-stage feature selection based on filter and wrapper methods outperformed random forest and logistic regression in Shakhgeldyan et al. (2020). Feature weighting also yielded high discrimination between majority and minority data (Polat 2018; Baniasadi et al. 2020). Polat used similarity and clustering, taking the class label into account, to weight each attribute’s data points, making them more linearly separable and achieving better results than random subsampling (Polat 2018). Baniasadi et al. applied linear interpolation for missing-value imputation and sample weighting (Baniasadi et al. 2020). Feature-level methods are mostly proposed when the imbalanced data are high-dimensional. Feature weighting nonetheless provides promising results, and its efficiency in dealing with class imbalance regardless of dimensionality needs to be investigated. Table 3 briefly describes the feature-level methods.

4.2 Data level

This approach deals with class imbalance at the data level by modifying the data distribution to balance the dataset through oversampling, undersampling, or a combination. Oversampling augments the number of minority samples (rare cases) in the dataset using different techniques, and undersampling decreases the number of majority samples. Hybrid methods combine oversampling and undersampling to obtain evenly distributed data. Researchers commonly use data-level methods to address class imbalances due to their simple implementation in the preprocessing phase, which is independent of the learning process. In general, the versatility of resampling in imbalanced learning has been noticed earlier (Abd Elrahman and Abraham 2013; Haixiang et al. 2017); however, this section reviews their application in medical data.
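The two basic resampling operations described above can be sketched in a few lines. This is a minimal illustration for binary data only, assuming the minority class really is the smaller one; the function names, the `minority` argument, and the toy data are hypothetical and do not correspond to any specific reviewed method.

```python
import random


def random_oversample(X, y, minority, seed=0):
    """Duplicate randomly chosen minority samples until both classes are equal in size."""
    rng = random.Random(seed)
    min_idx = [i for i, label in enumerate(y) if label == minority]
    n_majority = len(y) - len(min_idx)
    # Draw (with replacement) as many extra minority samples as are missing.
    extra = [rng.choice(min_idx) for _ in range(n_majority - len(min_idx))]
    idx = list(range(len(y))) + extra
    return [X[i] for i in idx], [y[i] for i in idx]


def random_undersample(X, y, minority, seed=0):
    """Keep all minority samples and an equally sized random subset of the majority."""
    rng = random.Random(seed)
    min_idx = [i for i, label in enumerate(y) if label == minority]
    maj_idx = [i for i, label in enumerate(y) if label != minority]
    kept = rng.sample(maj_idx, len(min_idx))  # sampled without replacement
    idx = sorted(min_idx + kept)
    return [X[i] for i in idx], [y[i] for i in idx]


# Toy data: 8 healthy (0) and 2 diseased (1) records with one feature each.
X = [[float(i)] for i in range(10)]
y = [0] * 8 + [1] * 2

X_over, y_over = random_oversample(X, y, minority=1)
X_under, y_under = random_undersample(X, y, minority=1)
print(len(y_over), y_over.count(1))    # prints "16 8"
print(len(y_under), y_under.count(1))  # prints "4 2"
```

In practice, resampling is usually applied to the training split only, so that the test data keep the original class distribution.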

4.2.1 Oversampling

Oversampling prevails in imbalanced medical data classification and frequently serves as a reference when assessing proposed class imbalance methods. The studies below use oversampling on its own to combat the imbalance issue.

Random oversampling with random forests showed optimal performance in identifying the severity of the Hepatitis C virus (Orooji and Kermani 2021). However, randomly duplicating original minority samples may lead to overfitting, which motivates more advanced techniques. The popular SMOTE (Synthetic Minority Oversampling Technique), created by Chawla et al. (2002), achieved superior performance with a KNN classifier (Hassan and Amiri 2019); with logistic regression, however, it gave results similar to threshold adjustment based on the Youden index (YI) (Albuquerque et al. 2022). Recently, SMOTE-based oversampling has emphasized the data distribution of disease samples (Xu et al. 2021; Sun et al. 2021). Xu et al. used SMOTE based on filtered k-means clustering (KNSMOTE) to overcome noise generation, overlapping, and borderline issues, which outperformed traditional and cluster-based oversampling (Xu et al. 2021). Sun et al. integrated a multi-dimensional Gaussian probability hypothesis test when adding SMOTE-synthesized samples to the original minority samples (MDGPH-SMOTE), achieving better classification accuracy and recall (Sun et al. 2021).
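The core idea of SMOTE, interpolating between a minority sample and one of its nearest minority neighbours, can be sketched as follows. This is a simplified illustration under stated assumptions (Euclidean distance, numeric features only, a hypothetical function name `smote_sketch`), not the reference implementation of Chawla et al. (2002) nor any of the variants reviewed here.

```python
import math
import random


def smote_sketch(minority, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbours than other samples
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Rank the other minority samples by Euclidean distance to the base sample.
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: math.dist(base, minority[j]))
        neighbour = minority[rng.choice(others[:k])]
        # Place the new point at a random position on the segment base -> neighbour.
        gap = rng.random()
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Hypothetical minority class with two numeric features.
minority = [[1.0, 1.0], [2.0, 1.5], [1.5, 2.0], [2.5, 2.5]]
for point in smote_sketch(minority, n_new=3, k=2):
    print([round(v, 3) for v in point])
```

Because every synthetic point lies on a segment between two existing minority samples, the sketch also makes the limitation discussed in Sect. 2 visible: interpolated values are not guaranteed to be clinically plausible, particularly for categorical or bounded features.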

SMOTE has been adapted to various data contexts and combined with machine learning algorithms (Mustafa et al. 2017; Wang et al. 2013; Mohd et al. 2019). Farther Distance-based SMOTE was used along with PCA to handle high-dimensional imbalanced biomedical data, revealing superiority over correlation and information gain (Mustafa et al. 2017). Differently, Wang et al. built a Minimum Spanning Tree based on the KNN graph of the minority data, and SMOTE then synthesized samples along the paths between two randomly selected samples (Wang et al. 2013). In multi-class medical data, SMOTE with an MLP model attained the highest accuracy (Mohd et al. 2019). In Sajana and Narasingarao (2018a), the authors intentionally balanced the initial data with SMOTE and then split it for training and testing a Naive Bayes classifier. Researchers also investigated the real class of artificial minority instances created by SMOTE (Sug 2016; Naseriparsa et al. 2020). Sug checked the class of synthetic data using an MLP and trained tree classifiers accordingly; however, the results revealed insignificant differences (Sug 2016). Generating synthetic samples within regions with a high density of minority samples reduced the class mixture and exceeded SMOTE variants (Naseriparsa et al. 2020).

Alternatively, diverse oversampling-based methods yielded positive results. Oversampling based on causal relationships between features exceeded CCR (combined cleaning and resampling algorithm), k-means SMOTE, GAN (Generative Adversarial Networks), and CUSBoost (cluster-based undersampling with boosting) (Luo et al. 2021). Oversampling using an improved ant colony approach to diagnose outpatients of TCM (Traditional Chinese Medicine) exceeded traditional ML methods such as C4.5 and SMOTE (Bi and Ma 2021).

The decomposition of minority data was extensively studied as a prior step to sampling (López et al. 2013; Napierala and Stefanowski 2016, 2012), yet no universal method emerged. In Han et al. (2019), the authors applied different sampling strategies based on minority data selection using a self-adaptive algorithm and enhanced the recognition of the minority class. Very recent research investigated how well synthetic samples fit the minority data (Rodriguez-Almeida et al. 2022); unexpectedly, experiments revealed that higher similarity between synthetic and real data did not necessarily improve the classification performance. Deep learning approaches based on data generation are emerging for structured data (Xiao et al. 2021; Lan et al. 2022). While GAN and SMOTE highly increased the classification accuracy in Lan et al. (2022), combining SMOTE variants with conditional tabular generative adversarial networks (CTGAN) yielded unstable results (Rodriguez-Almeida et al. 2022). In contrast, a Wasserstein generative adversarial network (WGAN) applied to gene expression data outperformed popular sampling methods (Xiao et al. 2021).

Oversampling is relatively often used on its own to treat class imbalance in disease prediction. Besides using existing oversampling techniques and combining or improving them, we see two recent lines of research. The first considers the data distribution and its specificities in medical diagnosis while sampling minority examples. The second adopts generative adversarial networks for structured medical data, a nascent research topic; hybridizations of both lines have also been observed. However, both research topics remain largely unexplored and open for investigation. Table 4 briefly describes the proposed oversampling techniques with their key ideas.

4.2.2 Undersampling

Undersampling decreases the number of majority class examples by removing noisy or uninformative duplicate data, either through basic techniques such as random undersampling or through advanced techniques such as clustering-based ones. Although undersampling is used less than oversampling, inventive undersampling methods have been proposed in medical diagnosis research.

Random undersampling with Random Forest delivered superior performance in Covid-19 mortality prediction (Iori et al. 2022), Hereditary Angioedema disease diagnosis (Dai and Hua 2016), and melanoma prediction (Richter and Khoshgoftaar 2018). K-means clustering was integrated into undersampling and boosted the prediction of diseased patients (Augustine and Jereesh 2022; Neocleous et al. 2016; Babar and Ade 2016). Augustine and Jereesh balanced the data using random undersampling at the level of the generated clusters (Augustine and Jereesh 2022), while Neocleous et al. (2016) used k-nearest neighbours after clustering. Similarly, the authors in Babar and Ade (2016) designed a multilayer perceptron-based undersampling method (MLPUS) using k-means clustering that outperformed SMOTE: samples close to the cluster centroids were iteratively used to train the MLP, and only samples with the highest SM (Stochastic measure) values were added to the training data, which retains hard-to-learn samples. More simply, clustering the majority class into subsets equal in size to the minority class and combining each with the minority class for training modestly improved the results in Li et al. (2018) and sometimes outperformed SMOTE in Rahman and Davis (2013). However, ensembling base classifiers built on balanced subsets exceeded the BalanceCascade and EasyEnsemble undersampling techniques (Parvin et al. 2013). Salman and Vomlel further weighted instances using mutual information at each cluster, and their trained Tree-Augmented Naive Bayes (TAN) surpassed TAN with SMOTE (Salman and Vomlel 2017). Recently, Ibrahim used Salp swarm optimization to efficiently determine the clusters’ centres, which sometimes exceeded cluster-based sampling techniques (Ibrahim 2022).
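The idea of splitting the majority class into subsets of minority size and pairing each subset with the minority class can be sketched as follows. This is a minimal illustration only: a random partition is swapped in for the clustering step used in the cited studies, and the function name `balanced_subsets` and the toy data are hypothetical.

```python
import random

def balanced_subsets(majority, minority, seed=0):
    """Partition the majority class into chunks of minority size and
    pair each chunk with the full minority class.
    Leftover majority samples that do not fill a chunk are dropped."""
    rng = random.Random(seed)
    shuffled = majority[:]
    rng.shuffle(shuffled)
    size = len(minority)
    subsets = []
    for start in range(0, len(shuffled) - size + 1, size):
        chunk = shuffled[start:start + size]
        features = chunk + minority
        labels = [0] * size + [1] * size  # 0 = majority, 1 = minority
        subsets.append((features, labels))
    return subsets

# Toy data (assumed): 9 majority samples and 3 minority samples.
majority = [[v / 10] for v in range(9)]
minority = [[2.0], [2.1], [2.2]]
subsets = balanced_subsets(majority, minority)
print(len(subsets))  # 3 balanced training sets
```

Each balanced subset can then be used to train one base classifier, which is how this kind of partition is typically combined with ensemble learning.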

Adding high-quality majority samples to the minority class has been suggested in various forms (Zhang et al. 2020; Wang et al. 2020). After randomly selecting a subsample of the majority samples, only those with high entropy were kept, based on the Gaussian Naive Bayes estimator, which sped up the undersampling process (Zhang et al. 2020). The results in Wang et al. (2020), obtained using Imbalanced Self-Paced Learning (ISPL) with logistic regression, significantly outpaced SMOTE and random undersampling. The authors in Al-Shamaa et al. (2020) separated majority class instances and minority class instances based on the Hellinger distance, and the majority instances most similar to their neighbouring minority instances were added to the original minority class. Investigations showed higher performance of the method than Tomek links, random undersampling, and edited nearest neighbours.

The data distribution has been integrated into undersampling in distinct ways (Vuttipittayamongkol and Elyan 2020b; Kamaladevi and Venkatraman 2021). Vuttipittayamongkol and Elyan (2020b) identified overlapped instances using a recursive neighbourhood search and then discarded the majority class instances. In Kamaladevi and Venkatraman (2021), the authors imputed noise samples using the mean and relabeled borderline samples based on Tversky similarity Indexive regression. Investigations illustrated promising results and better performance than Tomek links, random undersampling, and the edited nearest neighbours technique. Jain and his colleagues (Jain et al. 2017, 2020) applied genetic algorithms to improve the recognition rate of diseased patients while maintaining high correct prediction of healthy patients. Their undersampling-based evolutionary optimization reduced the majority class samples by maximizing the geometric mean, significantly improving the classification performance. Table 5 summarizes the main ideas of the undersampling techniques proposed in the reviewed literature, along with other information.
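The geometric mean mentioned above is commonly defined as the square root of the product of sensitivity and specificity. The sketch below assumes this standard binary definition; the cited studies may use a variant, and the function name and toy labels are illustrative.

```python
import math

def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of sensitivity (recall on the positive class)
    and specificity (recall on the negative class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return math.sqrt(sensitivity * specificity)

# Toy labels (assumed): 2 diseased patients (1) and 8 healthy patients (0).
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_healthy = [0] * 10                        # accuracy 0.8, misses every patient
some_detection = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]  # finds one patient, two false alarms

print(g_mean(y_true, always_healthy))  # 0.0
print(round(g_mean(y_true, some_detection), 3))
```

A classifier that ignores the minority class scores zero regardless of its accuracy, which is why this metric is a natural optimization target for undersampling methods.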

4.2.3 Hybrid methods and comparative studies of resampling techniques

Hybrid techniques, which combine undersampling of the majority class with oversampling of the minority class, are uncommonly used to deal with imbalanced medical data. In addition, several studies compared various sampling techniques for reducing class discrepancy.

Resampling boosted the accuracy of liver disease detection (Arbain and Balakrishnan 2019). Fahmi et al. applied random resampling after weighting samples using the inverse proportions of the class distribution, which outperformed SMOTE (Fahmi et al. 2022). A hybridization of ROSE for the majority and minority classes and K-means to select boundary samples, with an SVM classifier, improved the prediction of all diseases in Zhang and Chen (2019b).

SMOTE is commonly combined with various undersampling techniques (Shi et al. 2022; Xu et al. 2020; Wosiak and Karbowiak 2017). SMOTE-ENN with logistic regression remarkably identified chronic kidney patients at risk of end-stage and exceeded the Cox proportional hazard model (Shi et al. 2022). The authors in Xu et al. (2020) repeatedly changed the oversampling ratio of SMOTE by the misclassification rate of trained RF on a subset of data and combined it with ENN. This hybrid method minimized the MCC (Matthews Correlation Coefficient) and statistically demonstrated significant performance compared to different data-level methods. The classification performance based on the Hybridisation of SMOTE with random undersampling fluctuated in Wosiak and Karbowiak (2017). However, SMOTE with Tomek Links showed superior performance (Zeng et al. 2016).

Few novel hybrid sampling methods were designed for imbalanced medical data (Babar 2021; Vuttipittayamongkol and Elyan 2020a). In Babar and Ade (2016), the authors combined MLPUS with the Majority Weighted Minority Oversampling Technique (MWMOTE), which assigns selection weights to important and borderline minority samples and then synthesizes new samples using clustering. Investigation illustrated the better performance of the combination compared to MLPUS and MWMOTE separately. The authors in Vuttipittayamongkol and Elyan (2020a) eliminated majority instances based on the overlapping degree and oversampled minority instances in borderline regions using Borderline SMOTE; they attained high performance based on boosting.

Numerous studies compared sampling techniques in cancer diagnosis (Fotouhi et al. 2019), no-show case detection (Krishnan and Sangar 2021), stroke diagnosis (Alamsyah et al. 2021), pediatric acute-condition detection (Wilk et al. 2016), chronic kidney disease prediction (Yildirim 2017), heart disease prediction (Fernando et al. 2022), lymph node metastasis prediction in stage T1 lung adenocarcinoma (Lv et al. 2022), osteoporosis detection (Werner et al. 2016), prediction of the risk of chronic kidney disease in cardiovascular disease patients (Vinothini and Baghavathi Priya 2020), and multi-minority medical data (Shilaskar and Ghatol 2019). However, results varied depending on the data used and the experiment configurations.

The hybrid approach for imbalanced medical data seems to have received less attention than advances in individual sampling techniques. Moreover, comparative studies aim to select the best sampling technique, yet the outcome of a balancing technique can vary with many factors, including the medical data used. Table 6 describes the hybrid techniques in a nutshell.

5 Learning level

Modifications concerning the learning algorithms are grouped under this section and further classified into subgroups depending on the similarities in the used algorithm as described in the following.

5.1 Cost-sensitive learning

Cost-sensitive learning assigns specific costs to misclassifying minority and majority samples. The true misclassification costs are usually unknown; in practice, the cost matrix is commonly set inversely proportional to the class distribution in the original data, so that more attention is given to the minority class.
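One common way to derive costs from the class distribution is the inverse-frequency heuristic sketched below, where each class receives the weight n / (k · n_c) for n samples, k classes, and n_c samples in class c. This particular formula is an assumption chosen for illustration; the reviewed studies may define their cost matrices differently.

```python
from collections import Counter

def inverse_frequency_costs(y):
    """Misclassification cost per class, inversely proportional to class frequency."""
    counts = Counter(y)
    n, k = len(y), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}

def weighted_error(y_true, y_pred, costs):
    """Cost-weighted error rate: each mistake counts as much as the cost of its true class."""
    total = sum(costs[t] for t in y_true)
    wrong = sum(costs[t] for t, p in zip(y_true, y_pred) if t != p)
    return wrong / total

# Toy labels (assumed): 90 healthy (0) and 10 diseased (1) patients.
y_true = [0] * 90 + [1] * 10
costs = inverse_frequency_costs(y_true)
y_pred = [0] * 100  # a classifier that always predicts the majority class

plain_error = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
print(costs)
print(plain_error, weighted_error(y_true, y_pred, costs))
```

Under the plain error rate the majority-only classifier looks acceptable (10% error), whereas the cost-weighted error exposes that it fails on half of the total weighted mass.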

Normally, cost-sensitive learning in medical data outperforms cost-insensitive learning (Wu et al. 2020; He et al. 2016; Phankokkruad 2020; Nguyen et al. 2020). A radial basis neural network (RBF-NN) based on a sample distribution adaptive cost function in Wu et al. (2020) exceeded different forms of RBF-NN, ensemble methods based on RBF, and single classifiers. He et al. used cost-sensitive neural networks and incorporated the cost into gradient descent (He et al. 2016); investigation showed minimal costs and significant accuracy. A cost-sensitive XGBoost model with tuned class weights effectively diagnosed breast cancer (Phankokkruad 2020). Likewise, cost-sensitive versions of the Multilayer Perceptron and convolutional neural networks achieved superior performance in detecting Inflammatory Bowel Disease (IBD) (Nguyen et al. 2020). However, some traditional ML algorithms yielded results comparable to the developed cost-sensitive models: the decision rules algorithm and the ensemble of cost-sensitive SVM performed indistinguishably (Zięba 2014), while Decision Tree and Logistic regression achieved better accuracy than their corresponding cost-sensitive models (Mienye and Sun 2021).

Some research defined the cost matrix in new ways (Huo et al. 2022; Zhu et al. 2018; Belarouci et al. 2016; Wan et al. 2014). The authors in Belarouci et al. (2016) introduced a version of the least mean square algorithm to associate weights with different samples according to the errors, and investigations illustrated its superiority over SMOTE in breast cancer detection. Recently, Huo et al. used neural networks and set the misclassification costs as learnable parameters, which delivered high performance in risk prediction for binary and multi-class classification (Huo et al. 2022). A class-weights random forest, based on class weighting for each classifier with threshold voting, gave very optimistic results in Zhu et al. (2018), while attributing weights based on a scoring function (RankCost) in Wan et al. (2014) outperformed cost-sensitive decision trees and AdaBoost.

5.2 Optimization techniques

Recent methods applied genetic algorithms to handle imbalanced medical data (Jain et al. 2020; Nalluri et al. 2020). Jain et al. (2020) optimized the specificity and sensitivity: two models were constructed by employing the NSGA-II algorithm and combined for the prediction of minority and majority samples. Meanwhile, a hybrid multiobjective evolutionary learning approach exceeded other optimization methods (Nalluri et al. 2020).

5.3 Simple classifier

This category consists of using conventional machine learning algorithms to classify imbalanced medical data, possibly with postprocessing or preprocessing procedures to tackle the imbalance issue and boost the classification performance.

Hyperparameter tuning with SVM models sometimes improved patient detection (Ksiaa et al. 2021), while it performed similarly to cost-sensitive learning in Alzheimer’s prediction (Zhang et al. 2022). Feature elimination based on a contrast classification strategy demonstrated superior results compared to decision trees and SVM (Dhanusha et al. 2022). Modifications of the classifiers used also produced good results (Alves et al. 2023). Alves et al. developed a generalization of complementary log-log link functions for binary regression that fitted the data better than binomial models (Alves et al. 2023). Differently, Kumar and Thakur proposed a fuzzy learning approach hybridizing adaptive and neighbor-weighted KNN for liver disease detection that outpaced Fuzzy Adaptive KNN (Kumar and Thakur 2019).

5.4 Ensemble learning

This approach combines a set of single classifiers to perform classification tasks. There are three types of ensembles: bagging, boosting, and stacking. Bagging consists of building multiple single classifiers on different samples of the primary dataset and then combining their prediction with some basic statistics. Boosting is an iterative approach combining weak learners where each focuses on the misclassified instances by the previous one and generates predictions using a weighted average of constructed models. Finally, stacking is based on stacking different classifier types built on the same dataset and aggregating their predictions using another model (combiner).
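To make the bagging idea concrete, the following sketch trains simple one-feature threshold classifiers (decision stumps) on bootstrap samples and combines them by majority vote. It is a minimal illustration under assumed toy data; the stump learner and all names are hypothetical, and boosting and stacking are not shown.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Pick the threshold with the lowest training error; predict 1 if x > threshold."""
    best_t, best_err = None, float("inf")
    for t in sorted(set(xs)):
        err = sum((x > t) != bool(label) for x, label in zip(xs, ys))
        if err < best_err:
            best_t, best_err = t, err
    return best_t

def bagging_fit(xs, ys, n_models=11, seed=0):
    """Train one stump per bootstrap sample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    thresholds = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        thresholds.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return thresholds

def bagging_predict(thresholds, x):
    """Combine the individual predictions by majority vote."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

# Toy one-feature data (assumed): 8 majority samples (0) and 2 minority samples (1).
xs = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 2.0, 2.2]
ys = [0] * 8 + [1] * 2
model = bagging_fit(xs, ys)
print(bagging_predict(model, 0.05), bagging_predict(model, 3.0))  # prints: 0 1
```

An odd number of models avoids ties in the binary vote. With imbalanced data, plain bootstrap samples may contain few or no minority examples, which is one reason the reviewed studies often balance each subset before training.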

Various ensemble learning classifiers effectively diagnosed disease in imbalanced data (Zhao et al. 2022; Wei et al. 2017; Bhattacharya et al. 2017; Potharaju and Sreedevi 2016). A weighted ensemble based on the KNN algorithm with feature extraction delivered remarkable results in identifying the stage of Parkinson’s disease (Zhao et al. 2022). Similarly, a KNN-based ensemble with the relief-F method for feature selection accurately predicted the responses of breast cancer patients to neoadjuvant chemotherapy (Gao et al. 2018). The authors in Wei et al. (2017) used XGBoost based on EasyEnsemble, and investigations demonstrated its high results in large-scale imbalanced diabetes data. In Bhattacharya et al. (2017), the authors balanced the training subsets and employed a hierarchical meta-classification method; experiments showed the high performance of the random forest hierarchical meta-classifier in detecting later stages of chronic kidney disease, exceeding random oversampling and SMOTE. The majority voting ensemble of AdaBoost and logistic regression outperformed AdaBoost and logistic regression individually in heart disease detection (Rath et al. 2022), while an ensemble built by bootstrapping the majority class with replacement and majority voting detected different types of Parkinson’s disease considerably well (Roy et al. 2023). In contrast, Zhao et al. (2021) ensembled various machine learning algorithms, where AdaBoost and XGBoost comparably outpaced other ensemble models. Mathew and Obradovic (2013) used homomorphic encryption to secure multi-party computation with a distribution voting ensemble when the collected encrypted data was imbalanced, illustrating the superiority of ensemble models over baseline models.

Random forest revealed significant results compared to boosting and bagging techniques in the prediction of malaria disease (Sajana and Narasingarao 2018b) and thyroid (Çinaroğlu 2017). Differently, the authors in Guo et al. (2018) used an ensemble of rotation trees (ERT) including undersampling and feature extraction, and investigations showed, statistically, the excellent performance of ERT compared to EasyEnsemble, Random Undersampling Random Forest (RURF), BalanceCascade, and bagging. While in Potharaju and Sreedevi (2016) the authors developed ensembles of rule-based algorithms on SMOTE-balanced data, the experiments showed the optimal accuracy of AdaBoost.

5.5 Deep learning algorithms

Modifying the structure and parameters of neural networks and deep learning algorithms is another approach to tackling class imbalance in medical data and improving classification performance (Ghorbani et al. 2022; Izonin et al. 2022; Liu et al. 2019; Sribhashyam et al. 2022). The authors in Ghorbani et al. (2022) combined a graph convolutional network (GCN) algorithm with weighting networks and employed an iterative adversarial training process, demonstrating stability and superior performance compared to other GCN methods. An improved imbalanced probability neural network (IPNN) by Izonin et al. (2022) yielded high performance. In Liu et al. (2019), the authors automated the hyperparameter optimization (AutoHPO) of a deep neural network (DNN), including dimensionality reduction using PCA K-means and majority instance selection with batch reweighting using online learning; investigation demonstrated the excellence of AutoHPO based on DNN compared to DNN, XGB, etc. ResNet and GRU with a weighted focal loss function exceeded ResNet in multi-class heart disease detection (Rong et al. 2020). A stacked denoising autoencoder (SDA) for anomaly detection outperformed LSTM, SVM, MLP with Borderline SMOTE, and SVM with SMOTE (Alhassan et al. 2018). Recently, Sribhashyam et al. used a multi-instance neural network architecture that exceeded state-of-the-art methods for disease diagnosis (Sribhashyam et al. 2022).
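For readers unfamiliar with the weighted focal loss mentioned above, the sketch below shows the widely used binary form, FL = -α_t (1 - p_t)^γ log(p_t). This is an assumption for illustration: the cited study addresses a multi-class problem and its exact formulation may differ, and the default values of `alpha` and `gamma` are illustrative only.

```python
import math

def weighted_focal_loss(p, y, alpha=0.75, gamma=2.0):
    """Binary focal loss for one sample.
    p: predicted probability of the positive (minority) class.
    y: true label, 1 for positive and 0 for negative.
    alpha: weight of the positive class (1 - alpha for the negative class).
    gamma: focusing parameter; larger values down-weight easy samples more."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy = weighted_focal_loss(0.9, 1)  # confident and correct: small loss
hard = weighted_focal_loss(0.1, 1)  # confident and wrong: large loss
print(easy, hard)
```

With `gamma=0` the expression reduces to class-weighted cross-entropy, so the focusing term is what shifts training effort toward hard, typically minority, samples.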

5.6 Unsupervised learning

Unsupervised learning approaches showed high performance and interpretability; however, they are uncommonly used (Zhou and Wong 2021; Chan et al. 2017). In Chan et al. (2017), the authors used pattern discovery and heuristic optimization of the geometric mean, which performed significantly well and bettered logistic regression. Lately, the authors in Zhou and Wong (2021) identified relevant patterns as follows. They first established a matrix representing the frequency of co-occurrence of value pairs (as in association rules), then built another matrix representing the deviation of these frequencies from the default frequencies (the parallel of the covariance matrix in PCA). They decomposed this matrix into several principal components (PCs), projected the value pairs into the resulting sub-space, and selected clusters (patterns). Experiments demonstrated that the proposed algorithm outperformed CART, Naive Bayes, and logistic regression.

Regarding structured medical data, deep learning is yet to be fully explored as a potential solution for class imbalance, possibly because of the insufficiency of medical data or the model complexity. Another emerging research line is pattern recognition. Table 7 provides descriptive information about the learning-level techniques, such as the year, the title, and the main idea.

6 Combined techniques and comparison of different approaches

Combining learning-level and data-level approaches is also considered for treating imbalanced medical data. Studies contrasting different approaches or suggesting combined techniques are about as frequent as learning-level approaches in the last decade’s literature.

Recently, studies combined deep learning approaches with sampling techniques and exceeded the state-of-the-art techniques (Feng and Li 2021; Woźniak et al. 2023). In Feng and Li (2021), the authors optimized a combination of Borderline SMOTE and ADASYN, \(\alpha\)DBASMOTE, in which only minority samples in the danger set are synthesized, and used a DenseNet convolutional neural network. Investigation illustrated the higher performance of \(\alpha\)DBASMOTE over Borderline SMOTE and ADASYN. The authors in Woźniak et al. (2023) combined oversampling by ADASYN and SMOTE with undersampling by Tomek links and used a Bidirectional Long Short-Term Memory deep learning model, which produced promising results. Rath et al. built an ensemble of LSTM and GAN, using the GAN for data generation, and the investigations showed excellent results in heart disease detection (Rath et al. 2021). An SVM based on the active learning approach relied on the degree of each instance’s importance and yielded superior performance (Lee et al. 2015). Likewise, Suresh et al. (2022) used Radius SMOTE for balancing and a convolutional generative adversarial network for data generation with a modified CNN model; experimentation illustrated its optimal performance and lower computational time.

Preprocessing was integrated into class imbalance approaches (Cheng et al. 2022; Hallaji et al. 2021). Cheng et al. (2022) denoised signals and combined multi-scale features along with ADASYN for balancing different electrocardiogram (ECG) categories, while Britto and Ali (2021) proposed balancing and augmenting the data together with a deep learning model with adaptive weighting for minority classes. Hallaji et al. (2021) compared an adversarial imputation classification network (AICN) with hybrid models encompassing sampling with data imputation techniques. Miss-Forest was the best-performing imputation technique and SMOTE the best balancing technique, while AICN outperformed them and showed stability across different missing value ratios. Ensemble learning combined with different approaches handled class imbalance in medical data better (Gan et al. 2020; Gupta and Gupta 2022). AdaCost with a tree-augmented naive Bayes network outpaced AdaCost variants (Gan et al. 2020), whereas experiments in Gupta and Gupta (2022) demonstrated the high performance of boosted ensemble stacking. Oversampling with an ensemble of PNN and weighted voting significantly outperformed PNN, biased random forest, and random undersampling boosting (Yuan et al. 2021). Liu et al. used hybrid sampling by SMOTE and a cross-validated committee filter, then an ensemble of SVM with weighted voting optimized using a simulated annealing genetic algorithm (SAGA) (Liu et al. 2020); investigation illustrated its optimal performance compared to the state-of-the-art classification models.

Sampling with ensemble learning, combined in different manners, effectively handled class imbalance in disease diagnosis (Naghavi et al. 2019; Kinal and Woźniak 2020; Li et al. 2021; Lamari et al. 2021). ADASYN for oversampling with a cost-sensitive ensemble classifier constructed on SVM, KNN, and MLP outperformed deep learning-based models in freezing of gait (FoG) prediction (Naghavi et al. 2019). Dynamic ensemble selection, in particular DES-KNN coupled with SMOTE, handled non-severely unbalanced data significantly well (Kinal and Woźniak 2020). Likewise, SMOTE-ENN sampling with dynamic classifier selection using META-DES exceeded META-DES on imbalanced data (Lamari et al. 2021). Li et al. designed a harmonized-centred ensemble (HCE) approach that iteratively undersampled the majority class samples based on their classification hardness level (Li et al. 2021). Investigations demonstrated that HCE outperformed the Under-Bagging method, the RUSBoost method, and the self-paced ensemble learning framework (SPE). A SMOTE-based stacked ensemble with Bayesian optimization for hyperparameter tuning delivered excellent results in breast cancer diagnosis (Cai et al. 2018). The combination of SMOTE with SVM and AdaBoost surpassed stacking and voting strategies (Wang et al. 2020). Undersampling using different techniques with AdaBoost for learning and prediction attained optimal results (Shaw et al. 2021). Feature extraction, along with random undersampling and XGBoost, effectively predicted acute kidney injury in intensive care unit patients and outperformed random oversampling, random forest, AdaBoost, KNN, and Naïve Bayes (Wang et al. 2020). Similarly, Liu et al. (2014) used random undersampling to train SVM classifiers and validated them on data synthesized by SMOTE, attributing specific weights to the SVMs accordingly; investigation illustrated the effectiveness of the SVM ensemble in predicting cardiac complications of patients with chest pain in the hospital emergency department.

Modifications of the random forest algorithm produced considerable results (Meher et al. 2014; Lyra et al. 2019). Meher et al. (2014) developed a combined random forest where each random forest was trained on a balanced subset of data clustered from the original data. According to experiments, the combined random forest outperformed weighted and biased random forests. A “nested forest” was developed by Lyra et al. (2019) using feature selection and reduction with random undersampling to create balanced subsets for decision tree training, and the best forests were used for sepsis prediction. In Fujiwara et al. (2020), the authors used boosting weights to iteratively select misclassified majority samples for the next CART classifier and oversampled the minority samples based on their distribution. Experiments demonstrated the superior performance of the approach in severely imbalanced medical data compared to random undersampling with boosting and SMOTE. In contrast, the scholars in Silveira et al. (2022) combined manual oversampling by a nephrologist and automated oversampling by SMOTE and its variants, where the decision tree achieved superior and stable performance in the early detection of chronic kidney disease.

Research comparing class imbalance strategies in disease diagnosis (Drosou et al. 2014; Gupta et al. 2021; Wang et al. 2023) reported different outcomes. In a comparison of resampling and cost-sensitive learning approaches with SVM as the classifier (Drosou et al. 2014), the best performance was achieved by hybrid sampling (SMOTE and random undersampling) with SVM. The authors in Gupta et al. (2021) examined various class imbalance techniques, and extensive experiments illustrated that weighted XGBoost and a stacking ensemble of weighted classifiers performed best in breast cancer diagnosis. Additionally, feature selection, SMOTE, and cost-sensitive learning were employed with a variety of machine learning classifiers (Wang et al. 2023); three strategies achieved the best results in identifying patients with chronic obstructive pulmonary disease: cost-sensitive logistic regression, cost-sensitive SVM, and logistic regression with SMOTE.

Feature selection noticeably improved the classification performance in imbalanced medical data (Porwik et al. 2016; Špečkauskienė 2016; Lijun et al. 2018; Razzaghi et al. 2019). Wrappers for feature selection with a parallel ensemble based on weighted KNN achieved better and more stable accuracy than C4.5 and naïve Bayes in multi-class imbalanced and incomplete HCV data (Porwik et al. 2016). Feature selection outperformed oversampling with SMOTE in multi-class Parkinson’s disease detection (Špečkauskienė 2016), where the Clinical Decision Support system in Špečkauskienė (2011) identified the best feature subset. Lijun et al. (2018) combined elastic net for feature selection with hybrid sampling using SMOTE and random undersampling and used a multi-class SVM; investigations showed the superior overall accuracy achieved. Differently, ensemble learning methods with SMOTE and feature selection outperformed single classifiers; in particular, random forest and bagging yielded the highest results (Razzaghi et al. 2019). In Tang et al. (2021), the authors combined feature selection and dimensionality reduction for biological data in breast cancer diagnosis and designed a twice-competitional ensemble method (TCEM) to select the optimal model, where results were promising. Cheng and Wang applied Particle Swarm Optimization (PSO) for feature selection with SMOTE and random forest and achieved considerable results in breast cancer diagnosis (Cheng and Wang 2020).

Optimization techniques were integrated into different approaches and largely improved medical diagnosis (Shilaskar et al. 2017; Sadrawi et al. 2018; Desuky et al. 2021). Shilaskar et al. (2017) combined hybrid sampling with a modified particle swarm optimization to optimize the kernel function of SVM. The authors in Sadrawi et al. (2018) used Fuzzy C-means clustering to undersample the majority class and genetic algorithms to optimize the activation combination of the ensemble of activated ANN models. Including diversity within the ensemble and GA optimization yielded better results than single classifiers. Sampling using a crossover genetic operator with adaptive boosting, proposed by Desuky et al. (2021), improved classification performance more than SMOTE and safe-level SMOTE (SLSMOTE). Feature selection and Principal Component Analysis with random oversampling and ensemble voting exceeded SMOTE, SMOTE-ENN, and SMOTE-Tomek links (Alashban and Abubacker 2020). Srinivas et al. used rough set theory based on fuzzy c-means clustering, which exceeded the rough fuzzy classifier in heart disease detection (Srinivas et al. 2014). Table 8 is a descriptive table of all the combined techniques proposed for imbalanced medical data.

7 Synthesis of research outcomes on imbalanced medical datasets

Several benchmark imbalanced datasets appear in the reviewed medical diagnosis research. We overview results on the most frequently studied ones, namely: “Pima Diabetes Dataset”, “Wisconsin Diagnostic Breast Cancer (WDBC)”, “Wisconsin Prognostic Breast Cancer (WPBC)”, “Haberman Dataset”, “SPECT Heart Dataset”, “Breast Cancer Dataset”, “Indian Liver Patient Dataset (ILPD)”, “Hepatitis-C Dataset”, “Cervical Cancer Dataset”, “Heart Disease Dataset”, “Breast Cancer Wisconsin Original Dataset”, “Parkinson’s Disease Dataset”, “New Thyroid Dataset”, “Chronic Kidney Disease Dataset”, “Thoracic Surgery Dataset”, “Liver Disorder Dataset”, and “Mammographic Mass Dataset”. This synthesis consolidates the findings from research that utilized key imbalanced medical datasets, providing a cohesive understanding of how these datasets are analyzed within the framework of class imbalance.

This analysis is contextual: it relies on the class imbalance methodology employed by each study’s authors and on its performance as quantified by the evaluation metrics they selected. These experimental details were the ones most explicitly reported across the literature; clarifications on the underlying methodological procedures would have made the observations more informative. We therefore attempt to bridge the theoretical frameworks of machine learning with their practical applications in medical diagnostics, taking an observational approach that offers a detailed overview of current practices and performance metrics and highlights the utilization and effectiveness of these methods in different medical contexts, without drawing new conclusions or conducting experimental analysis. It is important to note that this synthesis cannot be classified as experimental or deeply analytical due to several constraints. Consequently, our reflections on the setup and context of the synthesis are mentioned where relevant.

Eleven research papers on medical diagnosis in imbalanced data have employed the “Breast Cancer Wisconsin Original Dataset” in experimentation. Table 9 summarizes the results of each work and the approach used to tackle the class imbalance issue. This dataset presents an imbalance ratio of 1.90, and various class imbalance methods have been used to address it. The learning approach is the most prevalent and yields excellent performance in classifying breast cancer, with combined techniques being the most implemented (Yuan et al. 2021; Kinal and Woźniak 2020; Suresh et al. 2022; Cai et al. 2018) compared to cost-sensitive methods (Wu et al. 2020), ensemble methods (Guo et al. 2018), and optimization techniques (Nalluri et al. 2020). Data-level approaches have been used less frequently, yet their outcomes show considerable performance across different metrics; they include a feature-level method (Zhang and Chen 2019a), an oversampling method (Mustafa et al. 2017), an undersampling method (Vuttipittayamongkol and Elyan 2020b), and a hybrid method (Zhang and Chen 2019b). Only subtle variations in performance metrics are observed among the methodologies employed, although the effectiveness of a method can be influenced by numerous factors, including the specific characteristics of the data, the complexity of the model, and the research goals. Despite these variations, the overall classification performance remains considerable, suggesting that the class imbalance in this dataset is handled robustly.
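As an illustration of how the imbalance ratios quoted in this synthesis can be obtained, the following minimal sketch assumes the common convention that the ratio is the majority-class count divided by the minority-class count. The function name `imbalance_ratio` is hypothetical, and the class counts (458 and 241) are an assumption matching the commonly distributed UCI version of the dataset, not values taken from Table 1.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Return the majority-class count divided by the minority-class count.

    Assumes the common convention IR = n_majority / n_minority.
    """
    counts = Counter(labels)
    if len(counts) < 2:
        raise ValueError("at least two classes are required")
    return max(counts.values()) / min(counts.values())


# Assumed class counts for illustration only (not taken from Table 1).
labels = ["benign"] * 458 + ["malignant"] * 241
print(f"{imbalance_ratio(labels):.2f}")
```

Under these assumed counts, the computed value rounds to the ratio of 1.90 reported above. For datasets with more than two classes, this sketch compares only the largest and smallest classes.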

Table 10 summarizes the findings from eleven distinct studies on the “Heart Disease Dataset,” each employing different strategies to tackle the challenges of class imbalance in medical diagnostics. This dataset exhibits an imbalance ratio of 1.20; other versions of the dataset exist that could be differently imbalanced. The studies are taken to refer to the version presented in Table 1 unless other details are reported. This dataset has seen a variety of approaches, with combined techniques being particularly prevalent, as demonstrated in the works by Gan et al. (2020), Kinal and Woźniak (2020), Shilaskar et al. (2017), Desuky et al. (2021), and Srinivas et al. (2014), which display a range of outcomes across key metrics such as accuracy, sensitivity, and specificity. Other approaches include undersampling (Jain et al. 2020), which yielded high accuracy and sensitivity, and oversampling (Rodriguez-Almeida et al. 2022), for which specific performance metrics are not reported. The optimization techniques employed by Nalluri et al. (2020) showed superior performance with nearly perfect metrics, indicating potential advantages depending on the specific methodological implementations and study goals. The hybrid approach by Shilaskar and Ghatol (2019) and the optimization efforts by Chan et al. (2017) also added to the diversity of results, though with mixed effectiveness. This analysis reveals variations in how different methods perform on the same dataset, reflecting a spectrum of effectiveness in the tools and strategies deployed. Despite these differences, the collective outcomes contribute significantly to advancing the diagnostic capabilities for heart disease, illustrating the value of diverse methodological approaches in enhancing overall classification performance.

Table 11 synthesizes the outcomes from five research studies on the “Cervical Cancer Dataset,” focusing on various methodologies used for cervical cancer diagnosis. This dataset has the highest class imbalance among the reference medical datasets, as seen in Table 1. We observe a predominant reliance on combined techniques, as employed by Gan et al. (2020), Gupta and Gupta (2022), Kinal and Woźniak (2020), and Woźniak et al. (2023). Each study shows differing levels of effectiveness across metrics such as accuracy, AUC, precision, sensitivity, F-value, geometric mean, and specificity. Mienye and Sun (2021) utilized a cost-sensitive approach, which stands out with exceptional results, achieving perfect scores in accuracy, AUC, precision, and sensitivity. In contrast, the combined techniques exhibit a range of performances, with Woźniak et al. (2023) demonstrating notably high efficacy, almost reaching optimal scores across all evaluated metrics. This array of studies reflects the effectiveness of different learning strategies in diagnosing cervical cancer. It highlights the diversity in methodological success and underlines the particular strengths of more nuanced approaches, like the cost-sensitive method of Mienye and Sun (2021). Overall, two main learning methods are observed, and the aggregated findings from these studies highlight their contribution to advancements in cervical cancer diagnostics on the studied data.
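For readers less familiar with the metrics listed above, the following minimal sketch shows how several of them are conventionally derived from a binary confusion matrix, with the minority (disease) class treated as positive. The function name `binary_metrics` and the counts in the example are hypothetical and do not come from any of the reviewed studies; the sketch also assumes every denominator is nonzero.

```python
import math


def binary_metrics(tp, fn, tn, fp):
    """Compute common imbalance-aware metrics from confusion-matrix counts.

    tp/fn refer to the positive (minority) class, tn/fp to the negative class.
    Assumes all denominators are nonzero.
    """
    sensitivity = tp / (tp + fn)            # recall on the positive class
    specificity = tn / (tn + fp)            # recall on the negative class
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    f_value = 2 * precision * sensitivity / (precision + sensitivity)
    g_mean = math.sqrt(sensitivity * specificity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f_value": f_value,
        "g_mean": g_mean,
    }


# Hypothetical counts: 20 positive and 100 negative cases.
m = binary_metrics(tp=5, fn=15, tn=95, fp=5)
for name, value in m.items():
    print(f"{name}: {value:.2f}")
```

With these hypothetical counts, accuracy is about 0.83 while sensitivity is only 0.25, which illustrates why the reviewed studies tend to report sensitivity, specificity, F-value, or geometric mean alongside accuracy.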

Table 12 assembles findings from multiple research studies that have applied various approaches to the “Hepatitis Dataset,” characterized by an imbalance ratio of 3.84. This summary highlights how the twelve research papers addressed the challenges inherent in the imbalanced data using ensemble, cost-sensitive, hybrid, undersampling, oversampling, feature-level, combined, and optimization strategies. Among the methodologies, the feature-level approach by Polat (2018) stands out with perfect scores across all metrics, showcasing the potential of finely tuned feature engineering in such contexts. Similarly, the optimization techniques used by Nalluri et al. (2020) and the combined techniques by Gupta and Gupta (2022) demonstrated high effectiveness, with near-perfect accuracy and other metrics. Conversely, approaches like the ensemble by Guo et al. (2018) and the hybrid technique by Wosiak and Karbowiak (2017) yielded more modest results, accentuating the variability in the efficacy of different methodologies within the same imbalanced dataset. The undersampling methods, particularly those implemented by Babar and Ade (2016) and Jain et al. (2020), showed remarkable improvements in handling class imbalance, reflected in their high accuracy and specificity. This aggregation of studies illustrates a broad range of success in managing the class imbalance of the dataset, with some methods showing considerable effectiveness while others highlight areas for potential improvement.

Table 13 gathers the performance metrics from several studies that utilized the “Indian Liver Patient Dataset (ILPD)” and addressed its imbalance ratio of 2.49. The table provides a broad overview of the effectiveness of different class imbalance approaches, including simple classifiers, undersampling, combined techniques, and optimization strategies. The results demonstrate a range of effectiveness across methodologies. The combined techniques employed by Gan et al. (2020), Yuan et al. (2021), and Kinal and Woźniak (2020) yielded mixed results. Gan et al. and Yuan et al. reported relatively lower specificities and sensitivities, while Kinal and Woźniak achieved a high specificity of 0.95, indicating that the success of combined techniques can vary significantly based on their specific configurations and the aspects of the data they prioritize. On the other hand, the simple classifier approach by Kumar and Thakur (2019) showed a high F-value and precision, suggesting that even straightforward models can perform effectively on this dataset. Undersampling, proposed by Jain et al. (2017, 2020), showed improvements in specificity and sensitivity, indicating its utility in enhancing model accuracy by addressing data imbalance. Meanwhile, Nalluri et al. (2020) applied optimization techniques, which resulted in balanced performance across all metrics. These findings illustrate the varied effectiveness of each methodology in handling the dataset’s imbalance: each demonstrates high values in some metrics and lower values in others. This underlines the necessity of selecting an appropriate method based on the specific dataset characteristics and the desired outcomes in diagnostic accuracy.

Table 14 assembles the results from diverse research methodologies to diagnose breast cancer using the “Breast Cancer Dataset.” This dataset’s imbalance ratio of 2.38 has prompted researchers to employ a mix of techniques, including undersampling, cost-sensitive methods, ensemble approaches, hybrid strategies, and combined techniques. Undersampling is the most used, with varied results: Al-Shamaa et al. (2020) reported modest outcomes in specificity and sensitivity, contrasting significantly with Ibrahim (2022), who achieved high values across these metrics. Babar and Ade (2016) and Jain et al. (2020) also utilized undersampling, with a particularly strong performance from the former. Wan et al. (2014) and Zięba (2014) applied cost-sensitive methods, showing lower performance metrics. Guo et al. (2018) employed an ensemble approach, yielding middling results, which suggests the difficulty of achieving higher predictive accuracy through this method. In other studies, specific performance metrics are not fully detailed, highlighting a need for more comprehensive reporting of results. Babar (2021) implemented a hybrid method, achieving considerable accuracy, and Yuan et al. (2021) explored combined techniques and achieved an average trade-off between sensitivity and specificity. Significant variability in the literature outcomes is observed, suggesting the ongoing challenges and complexities in diagnosing breast cancer on this particular imbalanced dataset.

Table 15 showcases the results from seven distinct studies that have applied various methodologies to the “SPECT Heart Dataset,” which has an imbalance ratio of 3.85. These methodologies encompass a variety of methods to improve diagnostic accuracy and address the dataset’s imbalance. The study by Polat (2018) indicates the efficacy of feature-level adjustments, yielding excellent performance metrics. Jain et al. (2017, 2020) both employed undersampling techniques. The later study reports specificity, sensitivity, and accuracy, all at 0.88, while Jain et al. (2017) attained a geometric mean of 0.91, suggesting effective handling of class imbalance. Babar (2021) utilized a hybrid approach and achieved an accuracy of 0.84. Liu et al. (2020) and Kinal and Woźniak (2020) both opted for combined techniques, with varying levels of success across specific and general performance metrics. Nalluri et al. (2020) implemented optimization techniques, resulting in impressive specificity, sensitivity, and accuracy scores. The synthesis in Table 15 reflects the diverse strategies researchers can employ to tackle diagnostic challenges and underscores the complexity of achieving high accuracy under class imbalance.

Table 16 groups the results of research studies exploring various techniques to address the challenges presented by the “Haberman Dataset,” which exhibits an imbalance ratio of 2.78. This imbalance influences the choice of methodological approaches, including sampling strategies, learning techniques, and combined techniques. The outcomes of sampling methods vary: the oversampling method in Xu et al. (2021) effectively mitigates class disparity, achieving optimal results in sensitivity and specificity; the results of Wang et al. (2013) show a modest sensitivity; and the undersampling technique proposed in Jain et al. (2020) indicates relatively considerable performance. Other studies report their results in a single metric: Jain et al. (2017), proposing an undersampling method, reported a high precision value, and Xu et al. (2020) used hybrid sampling and reported a high F-value, suggesting an effective balance between recall and precision. Mienye and Sun (2021) adopt a cost-sensitive technique, achieving notable sensitivity and precision. Deep learning models, leveraged by Ghorbani et al. (2022) and Izonin et al. (2022), excel in discerning complex patterns, with the findings of Izonin et al. excelling in sensitivity and precision. Liu et al. (2020) and Desuky et al. (2021) employ combined techniques, achieving balanced values across various metrics. Nalluri et al. (2020) explore optimization techniques for class imbalance, leading to average metric values. This synthesis highlights the diverse approaches to enhancing model accuracy against the Haberman Dataset’s imbalance. We observe better sensitivity in recent studies and significant differences between the findings of the literature on this dataset: while a few studies achieved excellent performance, others potentially need to tackle class imbalance more effectively in particular and to better understand the medical data in general.