Abstract

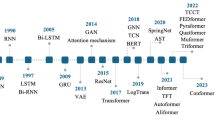

With the advancement of deep learning algorithms and the growing availability of computational power, deep learning-based forecasting methods have gained significant importance in the domain of time series forecasting. In the past decade, there has been a rapid rise in time series forecasting approaches. This paper comprehensively reviews the advancements in deep learning-based forecasting models spanning 2014 to 2024. We provide a comprehensive examination of the capabilities of these models in capturing correlations among time steps and time series variables. Additionally, we explore methods to enhance the efficiency of long-term time series forecasting and summarize the diverse loss functions employed in these models. Moreover, this study systematically evaluates the effectiveness of these approaches in both univariate and multivariate time series forecasting tasks across diverse domains. We comprehensively discuss the strengths and limitations of various algorithms from multiple perspectives, analyze their capacity to capture different types of time series information, including trend and season patterns, and compare methods for enhancing the computational efficiency of these models. Finally, we summarize the experimental results and discuss the future directions in time series forecasting. Codes and datasets are available at https://github.com/TCCofWANG/Deep-Learning-based-Time-Series-Forecasting.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

1.1 Objective

Time series forecasting plays a crucial role in numerous applications, such as energy consumption (Wu et al. 2021; Guo et al. 2023; Son and Van Cuong 2023; Dinh et al. 2023), transportation planning (Venkateshwari et al. 2023; Hu and Xiong 2023; Chen et al. 2023) and weather forecasting (Ma et al. 2023; Mung and Phyu 2023; Chen et al. 2023). In these practical application scenarios, forecasting future time series with the help of historical data is of great significance for long-term planning and early warning in related fields (Wang et al. 2023; Miller et al. 2024). This process can be shown in Fig. 1. Therefore, this paper aims to explore deep learning-based time series forecasting models from multiple perspectives, offering a comprehensive evaluation of current mainstream models and encouraging readers to consider future directions for development in this field.

1.2 Review of existing approaches

Next, we will summarize the existing methods from four main perspectives: time-step dependencies, correlations between temporal variables, the trade-off between expanding the model’s receptive field and reducing computational costs, and loss functions.

Time series information usually consists of time-step dependencies and correlations between temporal variables (Parzen 1961; Lacasa et al. 2015; Hsieh 2004; Orang et al. 2023). Fully exploiting these two types of information plays a crucial role in improving the model’s capability. Traditional models like the autoregressive integrated moving average (ARIMA) (Zhang 2003; Ariyo et al. 2014; Contreras et al. 2003) rely on statistical properties to extract information. However, they often fall short in fully capturing the time-step dependencies within complex time series. This is because traditional models primarily focus on linear features, while real-time series data usually contains intricate nonlinear correlations. As a result, traditional models struggle to adequately leverage these dependencies. In recent years, with the development of algorithms related to deep lCR121earning and the improvement of computational power, deep learning-based methods have become increasingly crucial in time series forecasting. Autoencoder and Stacked Autoencoders (SAE) (Lv et al. 2014) are utilized to extract the time-step features of the time series and obtain the prediction results directly. (CNNs) (Gudelek et al. 2017; Markova 2022; Lu et al. 2020; Zhao et al. 2017; Liu et al. 2018; Hatami et al. 2018) are often used to extract time-series features in the short-term range because of their ability to aggregate time-step data in the receptive field. To capture a broader range of temporal dependencies, the Temporal Convolutional Network (TCN) (Bai et al. 2018) expands the receptive field of its convolutional kernel. Specifically, TCN introduces dilated causal convolutions to time-series forecasting tasks. In contrast, Recurrent Neural Networks (RNNs) (Shi et al. 2015; Dey and Salem 2017; Salinas et al. 2020; Hajirahimi and Khashei 2023; Lai et al. 2018) are specialized for temporal sequences and, theoretically, do not suffer from the limited receptive fields characteristic of CNNs. However, related studies have shown that the recurrent structure of RNNs can lead to issues such as vanishing gradient, limiting their ability to leverage long-term dependencies in time series. To address this, researchers have developed models like Long Short-Term Memory (LSTM) (Shi et al. 2015; Fischer and Krauss 2018; Zheng et al. 2017) and Gated Recurrent Units (GRU) (Dey and Salem 2017), which employ gating mechanisms to better capture long-term temporal correlations. Despite these advancements, LSTM and GRU models still face challenges, such as vanishing gradient (Pascanu et al. 2013; Noh 2021; Le and Zuidema 2016) and error accumulation during training (Tang et al. 2021; Fan et al. 2019; Liao et al. 20185), which are common in RNN architectures. The effectiveness (Hewamalage et al. 2021; Pavlov-Kagadejev et al. 2024) of RNN-based forecasting models declines with longer forecasting time steps.

Given the exceptional performance of Transformer models in both natural language processing (Vaswani et al. 2017; Devlin et al. 2018) and image processing (Dosovitskiy et al. 2020) domains, these models are now being introduced to time series forecasting (Wu et al. 2021; Li et al. 2019; Zhou et al. 2021). Compared with RNN-based models, Transformer based models adopt an encoder-decoder structure (Wang et al. 2022; Woo et al. 2022; Lee et al. 2024) and the attention mechanism (Liu et al. 2023; Niu et al. 2021; Young et al. 2022). It can dramatically alleviate the error accumulation (Li et al. 2019; Zhou et al. 2021) in long-term time-series forecasting tasks. However, the authors of DLinear (Yun et al. 2019) point out that the attention mechanism is permutation invariance. The Transformer model does not make good use of time series order information. To solve this problem, DLinear uses a linear layer to implement time series forecasting.

Generally speaking, the correlation between time steps in a time series comprises multiple patterns (Cleveland et al. 1990; Hyndman and Athanasopoulos 2018; Dagum 2010), including trend (Verbesselt et al. 2010) [62] (Qi and Zhang 2008; Woodward and Gray 1993), seasonal patterns (Bell and Hillmer 1984; De Livera et al. 2011), etc. To reduce the complexity of time series forecasting and capture these temporal patterns, some models have introduced time series decomposition techniques (Wu et al. 2021; Zhou et al. 2022; Oreshkin et al. 2019; Woo et al. 2022). These approaches initially decompose the time series into several components, usually containing trend information, seasonal information, time-scale information (Taylor and Letham 2018; Jiang et al. 2021; Murray et al. 2000), and other time-series information. Subsequently, the model analyzes these distinct elements using specialized modules. For instance, Autoformer (Wu et al. 2021) utilizes a mean filter to convolve the input sequence, extracting trend terms that represent the time series’ trend patterns. Similarly, Fedformer (Zhou et al. 2022) employs multiple mean filters of varying sizes to derive trend terms, effectively addressing the limited receptive field issue. LSTnet (Lai et al. 2018) employs linear mapping to extract trend information from sequences and incorporates a predefined window to minimize the impact of distant information on trend forecasting. N-BEATS (Oreshkin et al. 2019) adopts a polynomial fitting method to model the time series’ trend terms. Similarly, both DLinear (Yun et al. 2019) and TDformer (Zhang et al. 2022) utilize shallow linear layers for this purpose. For seasonal information, LSTnet introduces a skip-connection architecture based on LSTM, enabling the model to account for information from time steps preceding a fixed interval, thereby capturing seasonal information in the data. Autoformer enhances the ability of the attention mechanism to discern seasonal patterns by using time-delayed similarities of the input. Fedformer, on the other hand, transforms the input time series into the frequency domain and employs an attention mechanism to analyze similarities between frequency components, effectively capturing the periodicity of the time series. ETSformer (Woo et al. 2022) filters frequency components based on their magnitude, reducing noise interference in seasonal information capturing. Time-scale information (Zhai et al. 2023), referring to the correlation of time steps at different scales, is addressed by models like Scaleformer (Shabani et al. 2022) and Pyraformer (Liu et al. 2021). The former applies downsampling to acquire inputs at various temporal scales and uses a forecasting model for multi-scale predictions. The latter processes time series at different scales and employs an attention mechanism to derive cross-scale attention correlations.

In addition to time-step dependencies, the essence of time series information also lies in the cross-time-step correlations among variables (Chakraborty et al. 1992; Yin et al. 2019). Thus, feature extraction of time series variables and exploring the inter-variable correlations are crucial for accurate forecasting. The TFT (Lim et al. 2021) employs RNN for feature extraction from time series variables and incorporates a feature filtering module to identify and utilize valuable information within these features. Aliformer (Qi et al. 2021) categorizes input time-series variables and incorporates future information about these variables, enhancing the model’s forecasting capabilities. Crossformer (Zhang and Yan 2022) leverages the attention mechanism to analyze attention-based correlations between variables, offering a nuanced approach to understanding their interplay. It is worth noting that in recent years, with the development of large language models(LLMS) (Zhao et al. 2023) like ChatGPT (Achiam et al. 2023), LLMs can directly or indirectly generate prediction sequences through prompt engineering (Liang et al. 2024; Zhang et al. 2024).

CNN models are constrained by their limited receptive field, hindering their ability to effectively capture long-term time series data (Tang et al. 2020; Gál et al. 2004; Luo et al. 2016). The RNN model, due to the problems of error accumulation, vanishing gradient, and exploding gradient, also cannot handle the long-term time series prediction task well. While Transformer models circumvent these issues, the attention mechanism’s computational cost grows quadratically with the series length, rendering the traditional Transformer impractical for long-term forecasting (Li et al. 2019). Therefore, some research is devoted to reducing the computational cost of the attention mechanism, primarily through sparse attention mechanisms or shortening time series length. Related studies based on the sparse attention mechanism include: LogTrans (Li et al. 2019) filters the target of attention computation based on the distance between time steps, reducing computation. Reformer (Kitaev et al. 2020) maps the data into a hash space and filters the target of attention computation based on the distance among time steps in the hash space. Informer (Zhou et al. 2021) filters the target of attention computation based on computational output distribution. Related studies on reducing series length include: Informer utilizes convolutional aggregation of time steps in the receptive field. Convolutional aggregation reduces the input series length of the attention mechanism. PatchTST (Nie et al. 2022) slices long sequences into multiple fixed-size patches, reducing the attention mechanism’s computational cost.

Regarding loss function, the above deep learning-based models commonly use Mean Absolute Error (MAE) or Mean Squared Error (MSE) to evaluate the gap between predictions and actual outcomes (Li et al. 2019; Zhou et al. 2021; Kitaev et al. 2020; Zhou et al. 2022; Oreshkin et al. 2019). However, using MAE or MSE as the sole optimization objective has some limitations (Goodfellow et al. 2014; Mogren 2016; Lyu et al. 2019). In response, several models have innovated upon the traditional loss function to address this issue. For instance, DeepAR (Salinas et al. 2020) converts the model output from specific values to probability distributions, using negative log-likelihood as its loss function. Similarly, SSDNet (Lin et al. 2021) integrates negative log-likelihood with MAE to form a hybrid loss function, allowing for the assessment of discrepancies in both value and probability terms. AST (Wu et al. 2020) employs a strategy inspired by the training mechanism of generative adversarial networks (GANs) (Goodfellow et al. 2014), comprising both discriminant loss and generative loss components. This approach facilitates the alignment of the predicted distribution with the actual data distribution.

Deep learning architectures usually require large-scale labeled datasets for achieving good performance on forecasting time series. Recent techniques of self-supervised learning (Pöppelbaum et al. 2022; Jaiswal et al. 2020) have opened up new a research frontier where deep learning architectures can learn general features from unlabeled time series. The task of self-supervised learning is usually accomplished with some sort of time-series augmentation such as flipping, random noise, time warping, and random smoothing. Based on these data augmentation methods (Wen et al. 2020; Cui 2016) and the strategy of contrastive training (Pöppelbaum et al. 2022; Al-Tahan and Mohsenzadeh 2021), deep learning-based time-series prediction models can capture temporal features during the pre-training phase. Subsequently, with a small amount of labeled data, they train the predictor to carry out predictions. At the same time, recent technology has also leveraged Few-Shot Learning to address the challenge of limited large-scale labeled datasets. This approach involves training the model on various public datasets, enabling it to extract time-series features effectively and exhibit strong generalization capabilities. Such a strategy leads to enhanced performance when applied to new datasets. Methods employing Few-Shot Learning can be categorized into two distinct directions. In Direction 1, the Memory network (Weston et al. 2014) approach is utilized. This involves encoding historical time-series data during training and retrieving these encodings when presented with new time-series datasets. This allows the model to identify similar patterns and make accurate predictions (Iwata and Kumagai 2020). Direction 2 involves using pre-trained LLMs known for their robust generalization abilities to generate precise predictions for time series data (Jin et al. 2023).

1.3 Highlights

The contributions of this paper are as follows:

-

This paper provides a systematic review of the evolution of deep learning-based time series forecasting models from multiple perspectives. It thoroughly examines the ability of various models to capture correlations both between time steps and among variables, explores the trade-offs between expanding the model’s receptive field and reducing computational costs, and analyzes commonly used loss functions.

-

This paper conducts a comprehensive evaluation of the effectiveness of various deep learning-based time series forecasting models in both univariate and multivariate tasks across different domains. Through experiments, we uncover the strengths and weaknesses of different algorithms, assess their ability to capture various types of time series patterns (including trends and seasonal variations), and discuss strategies to enhance the computational efficiency of these models.

-

Finally, this paper discusses future directions in the field of time series forecasting based on the analysis results.

The organization of this paper is as follows. Section 2 introduces the background knowledge used in this survey. Section 3 traces the progress of such models from 2014 to 2024, focusing on the logic behind time series information mining, including correlation among the time step and correlation among variables. Section 4 discusses the strategies for reducing computational costs in long-term forecasting. Section 5 summarizes the loss functions utilized in deep learning-based time series forecasting. In Sect. 6, we conduct experiments on the discussed models and methods across several datasets. We demonstrate the advantages and disadvantages of various algorithms from different perspectives, such as prediction accuracy, information extraction capabilities, the ability to identify trend and seasonal patterns, and the impact of different attention mechanisms. In Sect. 7, we summarize the experimental results and discuss the prospective directions for the future development of time series forecasting.

2 Background

In this section, we will briefly introduce the background information essential for this survey.

2.1 Time series forecasting

Given a historical time series \(\varvec{X}_{t-L:t}=\left[ \varvec{x}_{t-L},\cdots ,\varvec{x}_{t-1} \right] \in \mathbb {R}^{D \times L}\), where D is the number of dimensions and L is the length of the historical time series. The future time series to be predicted is \(\varvec{X}_{t:t+O}=\left[ \varvec{x}_{t},\cdots ,\varvec{x}_{t+O-1} \right] \in \mathbb {R}^{D \times O}\), with O being the forecast horizon. A time series forecasting model can predict the future sequence \(\varvec{X}_{t:t+O}\) from the historical sequence \(\varvec{X}_{t-L:t}\). Assuming that the predicted sequence is denoted as \(\tilde{\varvec{X}}_{t:t+O}\in \mathbb {R}^{D \times O}\) and the prediction model is denoted as \(\varphi (\cdot )\), the task of the time series forecasting can be expressed as:

Note that \(\pmb {x}_t \in \mathbb {R}^{D}\) denotes the time step at time t, and the time step correlation refers to the interrelations among these vectors. The time series can also be represented as \(\pmb {X} = [\pmb {v}_{1}^{\top }; \cdots ; \pmb {v}_{D}^{\top }]\), where \(\pmb {v}_{i}^{\top } \in \mathbb {R}^{L}\) denotes the i-th time-series variable. The correlation of the time-series variable is the vectors among different \(\pmb {v}\) vectors.

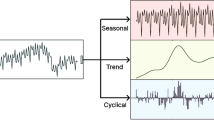

2.2 Time series decomposition

Time-step dependencies usually consist of various correlations, such as trend and season correlations. To more thoroughly explore these dependencies and simplify the forecasting process, some forecasting models incorporate the concept of time series decomposition, an approach rooted in traditional time series analysis (West 1997; Soltani 2002; Dagum 2010). This analysis posits that any given time series \(\varvec{X}\) is composed of distinct components: a trend term (\(\varvec{T}\)), indicative of the series’ long-term progression; a seasonal term (\(\varvec{S}\)), representing systematic and predictable fluctuations tied to seasonal effects; a recurrent fluctuation term (\(\varvec{C}\)), denoting periodic variations within a specific period of time; and an irregular fluctuation term (\(\varvec{N}\)), which accounts for random variability in the data. Decomposition models fall into two primary categories: additive and multiplicative. The additive model represents the time series as the sum of the four components, i.e., \(\varvec{X} = \varvec{T}+\varvec{S}+\varvec{C}+\varvec{N}\). While, for the multiplicative model, an arbitrary time series can be expressed as \(\varvec{X} = \varvec{T}*\varvec{S}*\varvec{C}*\varvec{N}\).

3 The logic of time series information mining

In this section, we will review the development of models over the past decade, with the processing logic of time series as the main storyline. Generally, the processing logic of models for time series can be categorized into two types: mining correlations among time steps and mining correlations among variables.

3.1 Mining correlations among time steps

In this subsection, we introduce the models and methods used to extract correlations among time steps. Specifically, these approaches can be categorized into two main types: holistic mining and targeted information mining, such as extracting trend or seasonal patterns.

3.1.1 Holistic mining

Autoencoders (Lv et al. 2014) are effective in uncovering time-step dependencies in time series. They typically consist of three components: an encoder, a decoder, and a prediction module, with the workflow divided into pretraining and prediction phases. During the pretraining phase, the encoder extracts features that are fed into the decoder, which then reconstructs the input sequence to train the model parameters. In the prediction phase, the extracted features are input into the predictor, generating the forecasted sequence. In contrast, DLinear (Yun et al. 2019) uses a single linear layer to map the input vector’s dimension directly to the desired output dimension. Due to the simplicity of autoencoders and linear layers, they are generally considered inadequate for fully capturing time-step dependencies in time series.

Given the success of CNNs in the image processing domain (He et al. 2016; Krizhevsky et al. 2017; Szegedy et al. 2015), they are also frequently utilized to identify temporal features in time series (Markova 2022; Lu et al. 2020; Zhao et al. 2017). Through convolution and pooling operations, CNNs aggregate temporal information within their receptive field to uncover internal time-step dependencies. However, due to the inherently limited receptive field, CNNs primarily extract short-term and discontinuous dependencies. To broaden the receptive field of CNNs and uncover dependencies across a wider range, Temporal Convolutional Networks (TCN) incorporate Dilated Causal Convolution (DCC) into the CNN architecture. Dilated convolution extends the receptive field without altering the convolution kernel’s size (Yu and Koltun 2015; Yazdanbakhsh and Dick 2019), allowing CNNs to capture more extensive time-step relationships. Furthermore, TCN enhances dilated convolution with temporal causality, ensuring that each time step’s output is only influenced by preceding inputs. The operational intricacies of DCC, TCN’s central component, are depicted in Fig. 2, showcasing how dilated causal convolution effectively satisfies the demands of temporal sequence analysis.

Meanwhile, in order to capture time-step dependencies, researchers have proposed Recurrent Neural Networks (RNNs) for time series forecasting, drawing upon the distinctive characteristics of time-series data (Shelatkar et al. 2020; Amalou et al. 2022; Tang et al. 2021). RNNs, utilizing their recurrent connections, enable the features of a preceding time step to act as inputs for the current step, thereby encapsulating the temporal attributes of each step with information from its antecedents. Unconstrained by the receptive field, RNNs theoretically can capture more extensive time-step correlations. However, the increased span of these recurrent connections may lead to problems like gradient explosion and vanishing. To counter these issues, Long Short-Term Memory (LSTM) (Shi et al. 2015; Fischer and Krauss 2018) units introduce a gate mechanism and cell states within the RNN framework. Cell states hold crucial information for long durations, while the gate mechanism-comprising input, output, and forget gates-regulates the flow of information. The input gate controls the incorporation of current input into the cell state, the forget gate determines the retention of cell memory, and the output gate dictates the utilization of cell information for current output, as depicted in Fig. 3. Thus, for a given input time step \(\pmb {x}_{t}\), LSTM’s output can be formulated as follows:

where \(\pmb {i_{t}}\), \(\pmb {f_{t}}\), \(\pmb {o}_{t}\) respectively denote the input gate, the forget gate, and the output gate. \(\widetilde{\pmb {c}}_t\) is the cell state candidates, \(\pmb {c}_{t}\) is the cell state at the t-th time step, and \(\pmb {h}_{t}\) is the time-step feature extracted at the t-th time step. \(\pmb {W}_{xi}\), \(\pmb {W}_{hi}\), and \(\pmb {b}_{i}\) denote the weight matrices and bias vectors for the input gates. Similarly, \(\pmb {W}_{xf}\), \(\pmb {W}_{hf}\), and \(\pmb {b}_{f}\) correspond to the weight matrices and bias vectors for the forget gates. For computing the cell states, \(\pmb {W}_{xc}\), \(\pmb {W}_{hc}\), and \(\pmb {b}_{c}\) are used. Lastly, \(\pmb {W}_{xo}\), \(\pmb {W}_{ho}\), and \(\pmb {b}_{o}\) represent the weight matrices and bias vectors for the output gates.

The gated structure allows models to selectively retain information from past time steps along with current features (Weerakody et al. 2021; Lin et al. 2022). This helps mitigate issues like gradient explosion and vanishing gradients and also reduces the impact of noise in historical data. This capability allows LSTM units to extract long-term temporal information more efficiently than traditional RNN models. Gated Recurrent Unit (GRU) simplifies LSTM’s gate mechanism, which can be regarded as a simplified version of LSTM and will not be introduced in detail here. For multi-step time series forecasting, RNN-based models typically rely on autoregression for sequential prediction (Maggiolo and Spanakis 2019; Binkowski et al. 2018), using outputs from one step as inputs for the next. However, this autoregressive approach may lead to error accumulation. Furthermore, as the sequence length processed by the model increases, RNNs face amplified risks of gradient explosion and error accumulation, challenges that gate mechanisms do not fully resolve.

Given its exceptional performance in both natural language and image processing domains (Devlin et al. 2018; Vaswani et al. 2017), the Transformer model is frequently employed to analyze general time-series data (Wen et al. 2022; Cai et al. 2020). As depicted in Fig. 4, the Transformer mitigates error accumulation in long-term forecasting by utilizing an encoder-decoder architecture. It encodes the temporal information of historical time series via the encoder and then decodes this information with the decoder to produce predictions. At the heart of the Transformer is the self-attention mechanism, which initially maps the input time series \(\pmb {X}\) into query \(\pmb {Q}\), key \(\pmb {K}\), and value \(\pmb {V}\) matrices. Subsequently, it computes a similarity matrix between \(\pmb {Q}\) and \(\pmb {K}\) and adjusts \(\pmb {V}\) accordingly. Therefore, the Attention mechanism’s output, \(\pmb {O} = \text {Atten}(\pmb {X},\pmb {X},\pmb {X})\), is determined by the following process:

where \(\pmb {Q},\pmb {K},\pmb {V} \in \mathbb {R}^{D_k \times L}\). The \(\pmb {A} \in \mathbb {R}^{L \times L}\) represents the similarity between the L time steps of the input time series, and \(\text {Softmax}(\cdot )\) transforms the similarity into an attention weight distribution. The value matrix \(\pmb {V}\) is then weighted and summed based on the attention weight distribution to obtain the time-series feature \(\pmb {O}\). In this way, \(\pmb {O}\) incorporates the similarity correlations between time steps.

In Fig. 4, the encoder layer of the Transformer encodes the temporal dependencies within the input time series, subsequently feeding the encoded data to the corresponding decoder layer. This decoder layer then generates the forecasted sequence, leveraging the identified temporal dependencies. Besides, the feed-forward module within both the encoder and decoder consists of several fully connected layers. With multiple layers of encoders and decoders stacked, the Transformer architecture can capture extensive long-term temporal correlations. The core of Transformer in time series forecasting lies in storing the similarity relationships between historical time steps and predicting future time steps based on these relationships. It is worth noting that existing large language models(LLM) (Zhao et al. 2023) like ChatGPT (Achiam et al. 2023) can be directly used for time series forecasting. Currently, large language models are commonly involved in time series forecasting in two ways: (1) as a predictive model (Liang et al. 2024): directly achieving sequential prediction based on prompt engineering (Mao et al. 2023; Shin et al. 2020), and (2) not as a predictive model (Zhang et al. 2024): generating embeddings by taking in relevant text information and using these embeddings as auxiliary variables input into the predictive model.

3.1.2 Targeted information mining

To further explore the correlations among time steps, some models leverage the concept of time series decomposition by developing custom modules designed to extract targeted information, such as trend or seasonal information. The final predictive output is then obtained by integrating the forecasting results of various types of target information. Current deep learning models that employ time series decomposition are listed in Table 1.

Trend Information Trend information represents the trend correlations exhibited by the series across time steps, where the term “trend correlations” denotes the discernible persistence and regularity of fluctuations between consecutive time steps within a defined scope (Taylor and Letham 2018; Cleveland et al. 1990; Asadi and Regan 2020). In addressing the attributes of trend information, Autoformer (Wu et al. 2021) employs a mean filter to extract the trend features from the time series. This involves convolving the input time series with a mean filter using a sliding window approach. However, due to the limited receptive field of the mean filter, the trend information acquired through the sliding window method tends to be discontinuous and localized. To mitigate this limitation, Fedformer (Zhou et al. 2022) devises a set of mean filters with varying receptive fields, yielding trend terms corresponding to different forecasting horizons. Subsequently, the model assigns learnable weights to aggregate the trend terms through weighted averaging. This approach enables Fedformer to incorporate trend information spanning diverse forecasting horizons.

Since trend information is usually more stable on a local scale, the time step to be predicted is more correlated with trend information from closer historical time steps, while data from distant steps are deemed less favorable for trend prediction (Li et al. 2019; Lai et al. 2018). To mitigate the influence of distant data on trend predictions, LSTnet (Lai et al. 2018) incorporates a predefined window, confining trend predictions to temporal information within the window nearest to the target prediction. Within this preset window, which includes k time steps, LSTnet employs linear mapping to capture the linear relationships within the sequence, subsequently leveraging autoregression to forecast the trend term. Thus, LSTnet’s prediction of the trend term at time t is expressed as:

where \(\pmb y_{t} \in \mathbb {R}^{D}\) denotes the prediction of the trend term at time t, \(\pmb x_{t-k+i} \in \mathbb {R}^{D}\) denotes historical value of the time series at the \((t-k+i)\)th time step, \(\pmb {W}_{i} \in \mathbb {R}^{D \times D }\) is the weight matrix and \(\pmb b\in \mathbb {R}^{D}\) is the bias. LSTnet employs autoregression to generate trend term predictions for all target locations. Similarly, both TDformer (Zhang et al. 2022) and DLinear (Yun et al. 2019) adopt linear mapping techniques for trend term prediction.

However, linear relationships inadequately characterize the trend information of time steps, leading to weak interpretability of trend terms obtained through linear mapping. To address these issues, N-BEATS (Oreshkin et al. 2019) introduces the Trend Stack module, aimed at capturing the trend information within time series data. This module primarily employs polynomial fitting techniques to model the trend relationships between time steps. For the input sequence \(\textbf{X}\), the Trend Stack module initially employs a fully connected layer to extract time-series features, obtaining parameters for polynomial fitting. Subsequently, it introduces the time vector \(\pmb {t} =\frac{[0,1,2,\cdots ,(O-1)]}{O} \in \mathbb {R}^O\) as the independent variable for the fitting process. The resulting trend term generated by the Trend Stack can be represented as:

where \(\pmb \theta \in \mathbb {R}^{D \times h}\) is the fit factor for polynomial fitting, \(\pmb {W} \in \mathbb {R}^{L \times h}\) is the weight matrix, and \(\pmb {B} \in \mathbb {R}^{D \times h}\) is the bias. \(\pmb {X}_{T} \in \mathbb {R}^{D \times O}\) is the trend term generated by the Trend Stack. h is the highest power in the polynomial fit, which is usually set to a small value (\(0< h< 5\)) by N-BEATS to prevent overfitting.

While LSTnet mitigates the influence of distant information on trend term prediction by implementing a preset window, it maintains uniform attention across time steps within this window. In contrast, ETSformer (Woo et al. 2022) posits that data proximate to the prediction position holds greater significance in encoding trend terms, prioritizing time steps in close proximity to the prediction location.

Exponentially weighted average enables the prediction of trend terms to pay more attention to those closer historical time steps (Hyndman et al. 2008; Ensafi et al. 2022), strengthening correlations among short-term time steps. ETSformer introduces the Exponential Smoothing Attention (ESA) module, integrating the concept of exponential weighted averaging into the attention mechanism. For the output \(\pmb {O}\) of the attention mechanism derived from Equation (5), the operation of ESA can be expressed as:

where \(0<\alpha <1\) is the learnable smoothing parameter and \(v_0\) denotes the learnable initial state.

The aforementioned models primarily capture the short-term trends within the time series, inadvertently overlooking the influence of long-term trend information. To address this limitation, PatchTST (Nie et al. 2022) draws inspiration from patch processing methodologies prevalent in computer vision (Dosovitskiy et al. 2020; Liu et al. 2021), integrating the concept of patches into the attention mechanism. As depicted in Fig. 5, PatchTST initially segments the input time series into fixed-length patches using a sliding window approach. Subsequently, these patches serve as inputs to the attention mechanism. Unlike traditional attention mechanisms, PatchTST does not focus on correlations among time steps within the individual patch; instead, it treats each patch as a unit and employs the attention mechanism to assess the similarity between patches. These patches contain aggregated information from short-term time steps, facilitating the exploration of long-term dependencies within the time series. For the input sequence \(\pmb {X}\), this process can be represented as:

where \(\pmb {X}_{p} \in \mathbb {R}^{D \times n \times p}\) is the set of sliced patches, n denotes the number of sliced patches, and p denotes the length of each individual patch. \(\pmb {W}_{Q},\pmb {W}_{K},\pmb {W}_{V} \in \mathbb {R}^{D \times p \times h}\) is the weight matrix, and h is the dimension of hidden layer. \(\pmb {A}_{p}\in \mathbb {R}^{D \times n \times n}\) is the attention matrix, which represents the similarity relationship between n patches.

Seasonal Information: Seasonal information is very important in time series analysis (Edition et al. 2002; Rawat et al. 2019), as it indicates the periodic behavior within the data, elucidating correlations between time steps separated by fixed intervals. However, extracting seasonal information is often more challenging than trend information due to the intricate periodicity inherent in time series data. Approaches for predicting seasonal terms can be broadly categorized into two groups: time domain-based methods and frequency domain-based methods. Time domain-based methods directly model the complex periodicity within the time domain (Lai et al. 2018; Wu et al. 2021). In contrast, frequency domain-based methods first transform the input time series into the frequency domain using a Fourier transform (Zhou et al. 2022; Woo et al. 2022; Zhang et al. 2022), then identify complex periodicities based on frequency components. In the following, we will delve into the seasonal terms mining approaches in both the time and frequency domains.

To enable RNNs to capture seasonal information within time series data, LSTnet (Lai et al. 2018) introduces a recurrent-skip network that contains skip connections. Incorporating skip connections facilitates direct interaction between time steps separated by a predefined interval. As depicted in Fig. 6, LSTnet initially assumes a potential period p for the input sequence. Subsequently, connections are established between the tth time step and the \((t-p)\)th time step within the RNN. The update of the hidden layer state at the tth time step encompasses information from the \((t-p)\)th time step, the current input, and the hidden layer state from the previous time step. The skip-connected RNN allows the state update at each time step to fully consider the information of the time steps before a fixed time interval.

The skip-connected RNN necessitates preset timing periods p and can only be utilized to extract a single periodic relation, rendering LSTnet inadequate for analyzing time series exhibiting intricate periodic patterns. To address this limitation and capture the complex periodic relations inherent in time series data, Autoformer (Wu et al. 2021) introduces the Auto-Correction module. This module aims to assess the time-delay similarity among input sequences to characterize the periodicity of the original sequences, where time-delay similarity denotes the likeness between time-delayed sequences and the original ones. Time-delayed sequences are shifted by \(\tau\) time steps. Autoformer posits that if a time-delayed sequence with a \(\tau\)-step delay exhibits significant similarity to the original sequence, \(\tau\) can be considered a period of the original sequence. To capture these complex period relations, as depicted in Fig. 7, the Auto-Correction module initially employs \(\text {Roll}(\cdot ,\tau )\) to shift the input sequence by a length of \(\tau\), subsequently evaluating the similarity between the delayed sequence and the original one. This similarity is then utilized as a weighting factor to average and aggregate the time-delayed sequence, thereby generating the seasonal terms of the sequence. Thus, for the input time series \(\textbf{X}\), the Auto-Correction process can be delineated as:

where \(\pmb {x}_{t}\) denotes the tth time step of the input time series, \(R_{\pmb {X}}(\tau )\) denotes the similarity between the original sequence \(\textbf{X}\) and its delayed sequence by a time delay of \(\tau\) time steps. \(\tau _1,\cdots ,\tau _k\) represent k time delays of the delayed sequence that has the highest similarity to the original sequence. Autoformer leverages the similarity of time delays to extract the periodicity inherent in the input time series. It approximates the complexity of the time series’ periods by utilizing multiple time delays of different degrees.

According to the Fourier series (Mathieu et al. 2013; Celeghini et al. 2021), any periodic signal can be expressed as a linear combination of sinusoids with different frequencies. Consequently, frequency domain-based processing involves transforming the time series into the frequency domain via Fourier transform and subsequently analyzing the series in this domain. Frequency domain-based seasonal term mining algorithms are able to analyze the frequency components directly, thereby extracting complex period information from the time series. Subsequently, we will delve into the seasonal term mining algorithm in the frequency domain.

Fedformer (Zhou et al. 2022) adopts an approach by directly assessing the similarity between frequency components within the frequency domain space. Fedformer introduces the FEA (Frequency Enhanced Attention) module, which employs an attention mechanism to scrutinize frequency components in the frequency domain, thereby delving into the periodic characteristics of the input time series.

In Fig. 8, the FEA module first transforms the input time series from the time domain to the frequency domain using the Fourier transform \(F(\cdot )\). To mitigate computational costs in the subsequent Attention mechanism and counteract the effects of noise, FEA employs \(\text {Select}(\cdot )\) to randomly sample frequency components in the frequency domain. Subsequently, FEA applies the Attention mechanism to these selected frequency components, deriving similarity relations among them and processing the components accordingly. Finally, FEA returns the processed results to the time domain using the inverse Fourier transform \(F^{-1}(\cdot )\). Consequently, the FEA processing, based on the provided description and Equation (5), can be expressed as:

where \(\tilde{\pmb {O}} \in \mathbb {C}^{I \times D_k}\), I denotes the number of frequency components in the frequency domain. To fulfill the forecasting task, FEA requires padding the processing results in the frequency domain using \(\text {Padding}(\cdot )\) to ensure that the sequence meets length requirements.

Since FEA performs a random selection of frequency components in the frequency domain, there’s a risk of losing crucial season information. To address this, ETSformer (Woo et al. 2022) filters frequency components based on their magnitudes, retaining the k components with the highest magnitude. For seasonal term processing, ETSformer introduces the FA (Frequency Attention) module. This module first transforms the input time series into the frequency domain, preserving the k frequency components with the greatest magnitude. Subsequently, the FA module returns the retained frequency components to the time domain via Fourier inverse transform and outputs the predicted seasonal terms.

In contrast to Fedformer’s FEA module, ETSformer operates under the premise that noise in time series data typically resides in frequency components with lower energy. Hence, it prioritizes frequency components with higher magnitudes. Conversely, Fedformer assumes noise may be present across all frequency components and thus employs random selection for screening. TDformer’s (Zhang et al. 2022) approach to seasonal term processing is the same as that of Fedformer. Meanwhile, DLinear (Yun et al. 2019) utilizes a single linear layer to handle the seasonal terms.

To streamline the module for extracting seasonal terms, N-BEATS (Oreshkin et al. 2019) simplifies its approach by exclusively processing specific frequencies within the frequency domain. Specifically, N-BEATS introduces a Season Stack, which utilizes Fourier period coding to analyze the seasonality of the time series. As depicted in Fig. 9, N-BEATS feeds the input time series into the Season Stack. The stack utilizes a fully connected network (FC) to derive Fourier period coding, representing the amplitudes of specific frequencies. Subsequently, leveraging the Fourier period coding alongside the input time vector \(\pmb {t} =\frac{[0,1,2,\cdots ,(O-1)]}{O} \in \mathbb {R}^O\), the Season Stack employs Fourier series to predict the seasonal terms. Thus, the processing of seasonal terms can be expressed as:

where \(\pmb {W} \in \mathbb {R}^{L \times h}\) denotes the weight matrix, \(\pmb \theta \in \mathbb {R}^{D \times h}\) denotes the Fourier coefficients predicted by an FC network, and h denotes the number of frequency components processed by the season module in the frequency domain. \(\pmb {Y}_{S}\in \mathbb {R}^{D \times O}\) denotes the seasonal terms predicted by the season module. From the equation provided, it’s evident that, unlike Fedformer, N-BEATS chooses to process specific frequency components, aiming to simplify the module’s task of extracting seasonal information.

Multi Scale Information: In addition to trend and seasonal information, models have begun to target other time-series features such as multi-scale and non-stationary information. Multi-scale information pertains to the temporal dependencies of a time series at different scales (Shabani et al. 2022; Challu et al. 2023). For instance, considering hourly observations as the finest scale, coarser scales could represent daily, weekly, or even monthly patterns within the time series. Integrating multi-scale temporal information enables models to capture dependencies across various durations, crucial for accurate time series modeling and forecasting.

To facilitate the capture of information across diverse time scales, Scaleformer (Shabani et al. 2022) introduces a multi-scale iterative framework for time series forecasting. This framework employs existing models (e.g., Autoformer, Fedformer) as the primary forecasting model and applies filtering operations to transform the input time series into different time scales. As illustrated in Fig. 10, Scaleformer comprises multiple processing layers, with each layer’s inputs derived from downsampling the original inputs and upsampling the outputs of the preceding layer. At the ith processing layer, Scaleformer first upsamples the output of the preceding layer \(\pmb {X}_{out,i-1}\) to obtain \(\pmb {U}_{i}\). Simultaneously, it downsamples the original input \(\pmb {X}\) using a scale factor \(s_{i}\) to obtain \(\pmb {P}_{i}\). These \(\pmb {U}_{i}\) and \(\pmb {P}_{i}\) are then normalized across scales and fed into the forecasting model. The primary forecasting model produces the processing result \(\pmb {X}_{out,i}\) for the current layer, and the output of the final processing layer yields the ultimate prediction \(\pmb {Y}\).

Since Scaleformer needs to get the prediction in different time scale iterations, the distribution of the model’s intermediate variables can change dramatically, which may lead to the accumulation and propagation of erroneous distributional information. In order to eliminate the effect of time scale changes, Scaleformer will perform cross-scale normalization operations on inputs \(\pmb {U}_{i}\) and \(\pmb {P}_{i}\) at each processing layer. For \(\pmb {U}_{i} \in \mathbb {R}^{D \times L_{n}}\) and \(\pmb {P}_{i} \in \mathbb {R}^{D \times L_{m}}\), the cross-scale normalization operation can be expressed as:

where \(\pmb {\mu }_{i}\) is the cross-scale mean coefficient of the ith layer, which is the mean of \(\pmb {U}_{i}\) and \(\pmb {P}_{i}\). The cross-scale normalization operation is essentially a "zero-mean" operation on \(\pmb {U}_{i}\) and \(\pmb {P}_{i}\). Scaleformer enhances the primary prediction model’s ability to learn multi-scale time-series information by feeding time series of different time scales into the primary prediction model.

To enable the attention mechanism to focus on multi-scale time-series information, Pyraformer (Liu et al. 2021) constructs a pyramid diagram comprising layers representing different time scales. Initially, Pyraformer processes the input time series through convolution, aggregating time steps within a receptive field to generate coarser time scales. As illustrated in Fig. 11, Pyraformer utilizes the original input time series as the bottom layer of the pyramid diagram to represent the finest time scales. Subsequently, it iteratively aggregates the bottom time steps via convolution, using the resultant coarse-scale time series as the top layer of the pyramid graph. Pyraformer employs the Pyramidal Attention Module (PAM) for nodes within the pyramid graph, enabling extraction of cross-time scale features. Finally, depending on specific prediction task requirements, Pyraformer feeds the features of the top nodes into different networks to obtain the final output. Hereafter, we delve into the principles of PAM.

In PAM, each node is only concerned with its adjacent nodes. Considering the pyramid structure depicted in Fig. 11, let \(n_l^{(s)} \in \mathbb {R}^{D}\) denote the lth node in the sth layer of the pyramid graph. The node \(n_l^{(s)}\) can obtain the set \(\mathbb {N}_l^{\left( s\right) }\) of its neighboring nodes in adjacent layers. This set encompasses three components: (1) neighboring A nodes within the same layer, including the \(n_l^{(s)}\) node itself, denoted as \(\mathbb {A}_l^{\left( s\right) }\); (2) the lower layer nodes, representing finer time scale nodes, denoted as \(\mathbb {C}_l^{\left( s\right) }\); and (3) the upper layer nodes, representing the coarser time scale node, denoted as \(\mathbb {P}_l^{\left( s\right) }\). This set is expressed as follows:

where L is the sequence length of the original input to the model, while A denotes a hyperparameter indicating the number of nodes selected at the same time scale. C serves as another hyperparameter designed to regulate the number of ensemble nodes. To ensure the model focuses on multi-scale time-series information, PAM lets nodes \(n_l^{(s)}\) compute attention correlations within the set \(\mathbb {N}_l^{\left( s\right) }\):

where \(\varvec{q}\) is the query vector generated by node \(n_l^{(s)}\), and \(\varvec{k}_{i}\), \(\varvec{v}_{i}\) are key-value pairs generated by nodes in the set \(\mathbb {N}_l^{\left( s\right) }\). Pyraformer aggregates time steps of fine time scales into coarse time scales through convolutional operations and captures similarity correlations across different time scales using the Attention mechanism.

Unlike Pyraformer, Triformer (Cirstea et al. 2022) generates coarse time-scale time steps through the Attention mechanism and takes fine time-scale sequences as inputs when processing coarse time-scale sequences. As shown in Fig. 12, the Triformer consists of multiple processing layers stacked on top of each other, each of which corresponds to a different time scale. The results from the fine time-scale processing in the lower layers are fed as inputs to the coarser time-scale processing layers. The outputs from all layers are integrated into Triformer’s prediction process. This mechanism enables Triformer to effectively explore multi-scale time series information. Next, we will delve into the underlying principles of each processing layer in Triformer. Triformer initially divides the input sequence into multiple local short sequences and applies the Attention mechanism within these segments. For a local short sequence \(\pmb {X}_{p} \in \mathbb {R}^{D \times p}\) in this layer, where p represents the length of the local short sequence. The output of the Attention mechanism in Triformer can be expressed as:

where \(\pmb {o}_{p} \in \mathbb {R}^{D}\) is the temporal feature vector at a short-term interval, corresponding to the coarser time scale. Meanwhile, \(\pmb {h} \in \mathbb {R}^{D}\) serves as the query vector, capturing the temporal information at the current scale. So \(\pmb {h}\) necessitates re-initialization for each processing layer accommodating distinct time scales. It is worth noting that \(\pmb {o}_{p}\) will be used as the time step in the input sequence of the next processing layer.

Non-stationary Information: Non-stationary information refers to variations in the statistical characteristics of a time series over time (Ulyanov et al. 2016; Du et al. 2021; Muandet et al. 2013; Zhong and Cambria 2023). The presence of non-stationary time series poses challenges for prediction tasks in deep learning models, as they complicate the modeling of sequences of statistically changing data during inference, typically manifested through shifts in mean and standard deviation. RevIN (Kim et al. 2021) addresses this issue by standardizing input sequences within the model, ensuring each processed sequence adheres to a consistent distribution, and subsequently reverting the model’s predicted sequences to the original distribution during the output stage. Hereafter, we delve into a detailed exposition of RevIN.

RevIN is a flexible end-to-end method that can be applied to arbitrary models (Li et al. 2023; Liu et al. 2024, 2022). To mitigate non-stationary effects in input time series, it initially normalizes each sequence within a defined lookback window. Unlike conventional normalization techniques applied during time series preprocessing, RevIN normalizes input sequences within the lookback window utilizing learnable affine parameters. The normalized sequences are then fed into the forecasting model to generate predictions. During the output stage, RevIN reverses the normalization process to restore the non-stationary characteristics of the time series. Formally, for an input time series \(\pmb {X}\), RevIN’s processing can be represented as:

where \(\pmb {m},\pmb {v} \in \mathbb {R}^{D}\) denotes the mean and variance of the time series in the lookback window, and \(\pmb {\gamma },\pmb {\beta }\in \mathbb {R}^{D}\) denotes the affine coefficients. Then \(\hat{\pmb {X}}\) is fed into an arbitrary model to get the prediction \(\pmb {Y}\). To restore the non-stationary information of the time series, RevIN denormalizes the prediction:

Non-stationary information within time series poses a significant challenge to accurate forecasting, it is widely acknowledged that preprocessing methods such as those discussed in (Kim et al. 2021; Liu et al. 2022; Passalis et al. 2019) can help mitigate the non-stationary nature of the original input series. However, removing the inherent non-stationarity of sequences may lead to the problem of over-stationarization, which in turn can subject forecasting models to severe overfitting. To tackle this issue, NS-Transformer (Liu et al. 2022) integrates non-stationary information into the Attention mechanism, compensating for information lost during the normalization of the sequence.

NS-Transformer adopts the standard Transformer architecture but incorporates De-stationary Attention in place of conventional Attention mechanisms. De-stationary Attention leverages statistical information, specifically mean and variance derived from instance normalization, enhancing the model’s ability to capture non-stationary patterns within the original time series. Since NS-Transformer assumes that the time series have the same variance in all variables, \(\pmb {v}\) is simplified to the scalar v. Given the stationarized input sequence \(\hat{\pmb {X}}\), linear mappings yield \(\hat{\pmb {Q}}\), \(\hat{\pmb {K}}\), \(\hat{\pmb {V}}\), alongside statistical parameters \(\pmb {m}\) and v from the original sequence. The computation process of De-stationary Attention is as follows:

where \(\pmb {Q},\pmb {K},\pmb {V}\) are the query matrix, key matrix, and value matrix obtained by linear mapping of the original input sequence. \(\pmb {m}\) denotes the mean vector statistically obtained in the normalization, and v denotes the variance statistically obtained in the normalization.

In order to directly use deep learning implementation Equation(27), NS-Transformer uses a multi-layer perceptron \(\text {MLP}(\cdot )\) to learn non-stationary information from the original input time series:

where \(\tau \in \mathbb {R}\) denotes the variance information learned from the non-stationary information and \(\pmb {\Delta } \in \mathbb {R}^{L}\) denotes the mean information learned from the non-stationary information. For the prediction of the Transformer model, NS-Transformer similarly chooses to denormalize to restore the prediction’s non-stationary information.

3.2 Mining correlations among variables

In the context of multivariate time series forecasting, each time series dimension represents a distinct univariate time series. In addition to considering time-step dependencies, correlations among variables also play a crucial role in predicting multivariate time series (Cheng et al. 2022; Jin et al. 2022; Cao et al. 2020; Zhang et al. 2023). For example, when predicting future temperature for a given region, relying solely on historical temperature records from the region is insufficient. Instead, including historical wind speed data from the region and historical temperature data from neighboring regions can significantly enhance the accuracy of the prediction. Therefore, in addition to capturing time-step dependencies, an alternative approach to temporal processing is to capture the correlations among variables. In section 3.1, the previously discussed forecasting models mainly concentrate on exploring dependencies among time steps, often overlooking the exploration of correlations among variables. Particularly, PatchTST (Nie et al. 2022), a model incorporating the Channel Independence (CI) strategy, entirely disregards correlations among variables. In the following, we will introduce models that explicitly extract correlations among variables.

To address variables at a more detailed level, TFT (Temporal Fusion Transformers) (Lim et al. 2021) classifies the variables of the input time series into three categories:

-

1.

Static variables: variables remain constant over time.

-

2.

Time-dependent variables: variables exhibit temporal changes and require prediction.

-

3.

Future-known variables: These variables include known future information (e.g., upcoming holiday dates), other exogenous time series (e.g., historical customer foot traffic), and static metadata (e.g., store location).

To handle these categorized multiple variables, TFT incorporates a Variable Selection Network (VSN) module for filtering. The structure of VSN is schematically represented in Fig. 13. For different types of input variables, the VSN guides them individually through the Gated Residual Network (GRN) module. Furthermore, the VSN plays a crucial role in regulating the representation for each variable by utilizing weighted averaging, serving as an effective mechanism for variable filtering. Specifically, the formulations of GRN are given by:

where \(\pmb {x} \in \mathbb {R}^{L}\) and \(\pmb {c} \in \mathbb {R}^{L}\) are univariate time series respectively from the primary input and the external context, \(\textrm{ELU}(\cdot )\) is the ELU activation function, \(\textrm{LayerNorm}(\cdot )\) represents layer normalization, and \(\pmb {W}_x, \pmb {W}_c \in \mathbb {R}^{h \times L}\), \(\pmb {W}_d \in \mathbb {R}^{h \times h}\) are learnable weight matrices. Additionally, \(\pmb b_e, \pmb b_d \in \mathbb {R}^{h}\) are learnable biases, and h denotes the dimension of the preset hidden layer. \(\textrm{GLU}(\cdot )\) refers to the gated linear unit. For the input \(\pmb d\), the computation of \(\textrm{GLU}(\cdot )\) is as follows:

where \(\pmb {W}_{1}, \pmb {W}_{2} \in \mathbb {R}^{h \times h}\) are learnable weight matrices, and \(\pmb {b}_{1}, \pmb {b}_{2} \in \mathbb {R}^{h}\) are learnable biases. The symbol \(\otimes\) represents the Hadamard product, and \(\sigma (\cdot )\) denotes the sigmoid activation function.

In TFT, the VSN module assigns weights to different variables, which helps suppress certain variables’ representation and remove noisy inputs. The VSN only accepts an univariate sequence as input, thus its output weights cannot consider the correlation between different variables. To address this issue, Aliformer (Qi et al. 2021) leverages the Attention mechanism to capture the similarity relationships among variables, which is called AliAttention. This innovative approach enables the fusion of attention graphs generated from different variables, allowing the Attention mechanism to consider the similarity relations among diverse variables simultaneously.

As depicted in Fig. 14, for the input variables \(\pmb {X}_{1} \in \mathbb {R}^{D_{1} \times L}\) and \(\pmb {X}_{2} \in \mathbb {R}^{D_{2} \times L}\), AliAttention performs separate computations of attention graphs for each variable. These individual attention graphs are then combined to create a unified attention graph. The resulting attention graph is subsequently used to generate the outputs of the Attention mechanism. This process can be summarized as follows:

In the process, \(\pmb {Q}_{1}, \pmb {K}_{1}, \pmb {V}_{1}\) are derived from variable \(\pmb {X}_{1}\), while \(\pmb {Q}_{2}, \pmb {K}_{2}\) are derived from variable \(\pmb {X}_{2}\). The resulting attention graph, denoted as \(\pmb {A}^{*} \in \mathbb {R}^{L \times L}\), is a newly obtained graph that integrates the similarity matrices of different variables. By incorporating AliAttention, the Attention mechanism can explore the interdependencies among the variables by fusing the attention graphs.

To facilitate a comprehensive exploration of correlations among variables, Crossformer (Zhang and Yan 2022) introduces the concept of directly applying the Attention mechanism to the variables instead of fusing attention graphs. For this purpose, Crossformer proposes DAttention. For the input time series \(\pmb {X} \in \mathbb {R}^{D \times L}\), Crossformer first transposes the dimension of input series to obtain transposed \(\pmb {X}^{'} \in \mathbb {R}^{L \times D}\), and then feeds \(\pmb {X}^{'}\) into the Attention mechanism. DAttention, based on Equation (4) and (5), can be mathematically expressed as:

where \(\pmb {Q}, \pmb {K}, \pmb {V} \in \mathbb {R}^{D_k \times D}\) are all computed based on the transposed input \(\pmb {X}^{'}\). Here, \(\pmb {Q}\) represents the query matrix, \(\pmb {K}\) represents the key matrix and \(\pmb {V}\) represents the value matrix. Importantly, the attention graph \(\pmb {A} \in \mathbb {R}^{D \times D}\) in DAttention captures the similarity relationships between D variables.

Indeed, the distinction between AliAttention and DAttention lies in how they utilize the Attention mechanism. AliAttention focuses on capturing similar relationships of variables by fusing attention graphs derived from each variable. On the other hand, DAttention applies the Attention mechanism directly to the variables, allowing it to uncover the cross-time-step dependence of variables.

4 Long-term time series forecasting optimization

In the field of long-term time series forecasting, Transformer model (Wu et al. 2021; Zhou et al. 2021, 2022) is widely used. This is because Convolutional Neural Networks (CNNs) have limitations regarding their receptive field, and Recurrent Neural Networks (RNNs) suffer from issues like error accumulation and gradient explosion. However, the attention mechanism’s computational cost grows quadratically with the series length. To address these challenges, researchers have developed various methods to optimize the attention mechanism for long-term time series prediction, as shown in Table 2.

The computational cost of the attention mechanism mainly arises from computing the similarity relationships between time steps. To optimize the Attention mechanism, two main approaches have been proposed:

-

(1)

Shortening the length of the input sequence of the Attention mechanism: This approach aims to reduce the computational complexity by processing shorter subsequences instead of the entire input sequence. Various techniques, such as window-based (Zhou et al. 2021; Cirstea et al. 2022), segment-based (Nie et al. 2022; Liu et al. 2021), and hierarchical methods (Zhang and Yan 2022), have been proposed to divide the input sequence into smaller parts before applying the attention mechanism.

-

(2)

Sparsifying the attention mechanism: This approach selectively computes and sparsifies the similarity relationships, reducing the required computations. Different methods like sparse Attention (Li et al. 2019), kernelized Attention (Kitaev et al. 2020), and low-rank Attention (Zhou et al. 2021) have been developed to exploit the sparsity or low-rank structure in the similarity matrix, resulting in computational savings.

In the following sections, we will explore these two approaches as a guiding framework to introduce optimization methods for the Attention mechanism in long-term time series prediction.

Shorten the Length of Time Series Processed by Attention Mechanism: Informer (Zhou et al. 2021) utilizes the Transformer framework, which integrates convolution and pooling operations to shorten the length of time series gradually. This sequential aggregation of time steps occurs at each layer. As a result, the computational burden of the Transformer is significantly diminished. Therefore, the input time series of the jth layer in Informer can be expressed as follows:

where \(\hat{\pmb {X}}_{j} \in \mathbb {R}^{D \times L_{j}}\) represents the output time series of the jth layer, and \(L_{j}\) denotes its sequence length. The input of the jth layer, denoted as \(\hat{\pmb {X}}_{j-1} \in \mathbb {R}^{D \times L_{j-1}}\), is worth noting that \(L_{j} < L_{j-1}\).

Unlike Informer, PatchTST (Nie et al. 2022) takes a different approach by dividing the original input sequence into multiple fixed-length short sequences, as depicted in Fig. 5. This decision allows the model to transition from processing a single, lengthy sequence to managing multiple, short sequences. Additionally, PatchTST treats these short sequences as cohesive units and employs its attention mechanism to compute their similarity relationships. As a result, the space complexity of the attention mechanism in PatchTST is reduced from the original \(O(L^2)\) to \(O(N^2)\), where L represents the length of the input sequence and N denotes the number of units, with \(N\ll L\). Consequently, PatchTST achieves significant reductions in space complexity. Other models, such as Pyraformer (Liu et al. 2021) and Triformer (Cirstea et al. 2022), employ a similar strategy to PatchTST in optimizing the space complexity of the attention mechanism. Compared to Informer, PatchTST demonstrates a greater advantage in optimizing space complexity due to its treatment of sliced short sequences during processing. Notably, this advantage becomes more pronounced when handling long-term time series, as the growth rate of N remains relatively slow compared to the progressive increase of L.

Sparse the Attention Mechanism: Sparsing the attention mechanism involves selectively computing the similarity between time steps within the mechanism while preserving the original length of the input sequence. These approaches can also effectively address the space complexity associated with the attention mechanism. Building upon this concept, LogTrans (Li et al. 2019) introduces the LogSparse Self-Attention mechanism. Illustrated in Fig. 15, for the tth time step of the input time sequence, LogSparse Self-Attention determines the index of the time step with which the similarity relationship needs to be computed as follows:

where \(\pmb {I}_t\) represents the index of the time step in the tth time step where similarity computation is required. LogSparse Self-Attention selectively filters similarity relationships based on their temporal proximity to the current time step, prioritizing relationships among nearby time steps. Additionally, LogSparse Self-Attention is causal, meaning that the current time step disregards similar information from future time steps.

Unlike LogTrans, which utilizes distance between time steps as a filtering criterion, Reformer (Kitaev et al. 2020) aims to group time steps in the feature space and compute attention within the groups, thereby achieving sparsity in the attention mechanism. The challenge of rapidly finding nearest neighbors in high-dimensional spaces can be addressed by Locality-Sensitive Hashing(LSH). A hashing scheme that assigns each time step to a hash space is called locality-sensitive if nearby vectors get the same hash with high probability and distant ones do not. Based on the LSH, Reformer proposes the LSH Attention mechanism. LSH Attention maps the input time series into a hash space, allowing attention computations only between time steps close to each other within this space.

To map time steps into the hash space, Reformer establishes the hash function as follows: \(h\left( \pmb {x} \right) =\arg \max \left( \left[ \pmb {R}^{\top }\pmb {x},-\pmb {R}^{\top }\pmb {x}\right] \right)\), where \(\pmb {R} \in \mathbb {R}^{D\times b/2}\), and b represents the number of hashes, which corresponds to the dimension of the hash space. As depicted in Fig. 16, the time steps within the input time series are mapped to the hash space using this hash function. Based on the positional relationship of the time steps in the hash space, Reformer organizes all time steps into distinct groups. Within each group, each time step computes the similarity relationship exclusively with other time steps belonging to the same group. This selective calculation significantly reduces the computation cost of the attention mechanism.

Informer (Zhou et al. 2021) also incorporates sparsity within its attention mechanism. However, unlike LogTrans and Reformer, Informer adopts a unique filtering criterion for similarity relationships based on the following assumption: within the attention mechanism, if the query vector \(\pmb {q}\) possesses intricate temporal information, its attention distribution with respect to all key vectors should deviate significantly from a uniform distribution. Based on this concept, Informer introduces ProbSparse Self-Attention, which selectively retains the computation results of the query according to the score of each query. The scoring function for query vector \(\pmb {q}\) can be expressed as follows:

where \(\pmb {K} = \left[ \pmb {k}_1,\cdots ,\pmb {k}_L \right]\) represents the key matrix containing all the key vectors in the time series, and \(\pmb {k}_j \in \mathbb {R}^{D_k}\) denotes the key vector corresponding to the jth time step in the time series. The scoring function primarily evaluates the query vectors \(\pmb {q}\) by analyzing their similarity distribution relative to the other key vectors \(\pmb {k}\). Ultimately, ProbSparse Self-Attention selectively retains the Top-U query vectors.

LogTrans, Reformer, and Informer employ Sparse Attention mechanisms that rely on specific filtering criteria for computing similarity relationships. For example, LogTrans filters the target of attention computation based on the distance between time steps. Reformer maps the data into a hash space and filters the target of attention computation based on the distance among time steps in the hash space. Informer filters query vectors based on the distribution of similarities between the query vector and all key vectors. In contrast, Crossformer (Zhang and Yan 2022) adopts a different approach by not employing a specific filtering criterion to sparsify the attention mechanism. Instead, it introduces transition units to avoid direct similarity computations between time steps. This strategy effectively reduces the space complexity of the Attention mechanism.

In the Crossformer model, when processing the input time serok with a length of L using the attention mechanism, c transition units are introduced, where \(c\ll L\). These transition units act as intermediate components for performing similarity computations between query vectors and key vectors within the attention mechanism. This approach helps avoid the space complexity of \(O(L^2)\) associated with direct similarity computations between query vectors and key vectors. For a key matrix \(\textbf{K} \in \mathbb {R}^{D_k \times L}\) and a value matrix \(\textbf{V} \in \mathbb {R}^{D_k \times L}\), the Attention mechanism with transition units can be expressed as follows:

where each column of \(\textbf{R} \in \mathbb {R}^{D_k \times c}\) represents the transition unit, which are learnable parameter vectors. As evident from the provided equations, the inclusion of these transition units allows for a reduction in the space complexity of the attention mechanism from \(O(L^2)\) to a linear complexity of \(O(2cL) = O(L)\).

5 Loss function

According to the optimization objective, the loss function commonly used in time series prediction models can be classified into two main types:

-

(1)

Loss function with a single optimization objective. This type of loss function primarily focuses on optimizing the gap in value between the prediction and the ground truth. The simplicity of this category of loss functions makes it widely employed. However, it has the limitation of solely aiming to minimize the gap in value between the prediction and the ground truth. Consequently, this category of loss functions faces challenges when dealing with time series that contain intricate temporal patterns and exhibit strong fluctuations.

-

(2)

Hybrid loss function that optimizes the multiple objectives. To address the aforementioned issue, an alternative group of models utilizes hybrid loss functions that optimize the multiple objectives. This category of loss functions aims to combine multiple loss functions to optimize different modules of the model, enabling collaborative predictions. In the following sections, we will discuss these two types of loss functions separately.

5.1 Single-objective loss function

MAE, MSE: Mean Absolute Error (MAE) and Mean Square Error (MSE) are commonly utilized loss functions in time series prediction tasks. They are employed to optimize the gap in value between the predicted values and the ground truth. MAE calculates the average of the absolute value of the prediction errors, while MSE calculates the average of the squared prediction errors. The specific formulas are provided below:

where \(\pmb {Y} \in \mathbb {R}^{O \times D}\) represents the ground truth, \(\pmb {\hat{Y}} \in \mathbb {R}^{O \times D}\) represents the prediction, and O is the length of the prediction and D is the number of variables. From this equation, it becomes evident that when dealing with challenging-to-predict data points, MSE exacerbates the gap in value between the prediction and the ground truth, imposing a more substantial penalty. On the other hand, MAE lacks this characteristic and treats all prediction points uniformly. In time series forecasting tasks, using MAE as the loss function can effectively mitigate the impact of outliers during model training. This approach is particularly beneficial for datasets like electricity and wind power data. Conversely, when the challenging prediction points in the time series dataset containning significant information, as observed in Traffic datasets (Wu et al. 2021) and Exchange Rate datasets (Lai et al. 2018), the model can opt for MSE as the loss function to emphasize their importance during the training process.

In essence, employing MSE or MAE implies a disregard for the direction of the error (Wang and Bovik 2009), meaning we are indifferent to whether the prediction surpasses or falls short of the actual value. However, this characteristic becomes crucial in specific time prediction scenarios. For instance, when forecasting the remaining lifespan of an engine, our prediction must underestimate the true value to prevent potential accidents.

Quantile Loss: Quantile loss is commonly used as a loss function in time series prediction tasks. Unlike MSE and MAE, quantile loss penalizes positive and negative deviations between predicted and true values differently. Given the prediction \(\pmb {\hat{Y}}\) and the ground truth \(\pmb {Y}\), the quantile loss between them can be expressed as:

where the quantile coefficient \(q\in \left[ 0,1 \right]\) can be adjusted to meet the specific demands of the prediction task. For example, in aircraft remaining useful life prediction (Zhang et al. 2023, 2023; Wang et al. 2023), it is often desired for the model’s predictions to be lower than the actual lifespan of the aircraft to minimize potential risks. Therefore, a quantile coefficient \(q<0.5\) can be used, which increases the penalty imposed by the loss function on larger predictions. This setting encourages the model to generate smaller predictions. However, a drawback of quantile loss is the requirement to specify a target quantile, which is often impractical in many prediction scenarios.

In summary, MAE, MSE, and quantile loss are commonly used loss functions for point forecasts in deep learning models for time series prediction. These loss functions are effective for both univariate and multivariate predictions, as well as for single-step and multi-step forecasting. Among the three, MSE is suitable when prediction errors are expected to follow a Gaussian distribution. However, when a dataset contains numerous outliers, MSE can be heavily affected by these, potentially leading to model failure. In such cases, MAE might be a better choice as the loss function. In specific scenarios, such as Remaining Useful Life (RUL) prediction (Zhang et al. 2023, 2023; Wang et al. 2023), where biased predictions are necessary, quantile loss is often employed.

Beyond point forecasts, interval forecasts (Armstrong 2001) represent another approach in time series prediction. As the name suggests, this method provides a range of possible outcomes rather than a single point, offering insights into the model’s confidence or prediction uncertainty. For interval forecasts, deep learning models typically use quantile loss as the loss function. Unlike in point forecasts, when quantile loss is applied to interval forecasts, the quantile parameter q in (43) represents a range rather than a single value. Due to space limitations, this paper does not explore interval forecasting in detail.

5.2 Hybrid loss function

The shortcomings of the aforementioned loss functions lie in their optimization objectives, which solely focus on the numerical distance between predictions and ground truth. Additionally, models based on a single optimization target are often structurally simple and cannot incorporate other structured predictive mechanisms such as SSM (State Space Model) (Salinas et al. 2020; Lin et al. 2021) or GAN (Generative Adversarial Network) (Wu et al. 2020; Mogren 2016; Goodfellow et al. 2014). Therefore, in order to leverage these specialized structures for collaborative predictions, some models utilize a joint loss function to optimize multiple objectives introduced by different structures within the model.