Abstract

This paper proposes a new meta-heuristic algorithm, named the tornado optimizer with Coriolis force (TOC), for solving global optimization and constrained engineering problems in continuous search spaces. The fundamental concepts behind TOC are inspired by nature: specifically, by observations of the tornado life cycle and of how thunderstorms and windstorms evolve into tornadoes under the influence of the Coriolis force. In the developed method, the Coriolis force is applied to windstorms that evolve directly into tornadoes. TOC mathematically models and implements the behavioral steps of tornado formation from windstorms and thunderstorms and the subsequent dissipation of tornadoes on the ground. When applied to optimization problems, these steps ultimately lead to feasible solutions. The steps are represented mathematically together with the Coriolis force to provide a proper balance between exploration and exploitation during the optimization process and to allow search agents to explore and exploit every region of the search space. The performance of TOC was thoroughly examined on a basic benchmark set of 23 test functions and on 29 well-known benchmark functions from the CEC-2017 test suite at a variety of dimensions. A comparative study of the computational and convergence results was carried out to clarify the efficacy and stability of TOC relative to other well-known optimizers. Based on the mean ranks of Friedman’s test, TOC outperformed the competing algorithms by 20.75%, 27.248%, and 25.85% on the 10-, 30-, and 50-dimensional CEC-2017 test sets, respectively. The reliability and suitability of TOC were further examined by solving real-world problems, including eight engineering design problems and one industrial process. The proposed optimizer demonstrated satisfactory performance over the competing optimizers in terms of solution quality and global optimality, as confirmed by statistical tests.

1 Introduction

Optimization techniques have been investigated extensively in recent years across a wide variety of real-world problems (Panagant et al. 2023; Kumar et al. 2024). Optimization is the process of determining the best combination of decision variables for a given problem, and such problems are common in everyday life and work (Rezk et al. 2024). The search for effective and efficient ways to address optimization problems is therefore becoming increasingly important (Tejani et al. 2016). Many optimization problems are nonlinear and non-convex, with many decision variables and, in some cases, intricate objective functions subject to a variety of constraints. Furthermore, such problems may have several local optima and variable or abrupt peaks (Sowmya et al. 2024). Solving optimization problems is important in all areas of science and engineering (Nonut et al. 2022), where ever more robust solutions are sought. Hence, there is a need for effective algorithms that can cope with the complexity of modern engineering and scientific problems (Gundogdu et al. 2024).

As optimization problems become increasingly complicated and multifaceted, the demand for effective and precise optimization techniques is rising (Cao et al. 2020; Aye et al. 2023). Consequently, researchers have examined optimization techniques such as machine learning, dynamic programming, and linear programming over the past decade. Accordingly, there is a need for optimization algorithms that can greatly improve problem-solving efficacy, reduce the computational load, and conserve computational and financial resources (Tejani et al. 2017). A thorough review of the literature reveals a diverse range of optimization techniques (Braik 2021; Zhu et al. 2024), from classical linear or nonlinear mathematical methods (Vagaská and Miroslav 2021) to nature-inspired methods (Braik et al. 2021; Zhu et al. 2024), each with its own advantages and disadvantages. Mathematical methods comprise a large range of optimization techniques that start from a given initial condition and use a well-defined mathematical model to iteratively locate the optimal solution of an optimization problem. Newton’s method (Bertsekas 2022) and the Nelder–Mead algorithm (Shirgir et al. 2024) are examples of such techniques.

Traditional methods have proven reasonably successful in solving large-scale optimization problems (Alavi et al. 2021). However, many of them depend inherently on gradient information and require a good initial starting point within the search space. They often need an in-depth understanding of the problem and may not be the most effective choice for contemporary large-scale and multimodal optimization problems (Rezk et al. 2024). When traditional methods are applied to complex problems with nonlinear search spaces or large numbers of constraints or decision variables, they may find only locally optimal solutions: there is no guarantee that they will reach the global optimum, and they can easily become trapped in local optima.

Nature-inspired methods, referred to as meta-heuristic algorithms, can be defined as algorithmic frameworks that use heuristics and stochastic operators inspired by nature (Comert and Harun 2023). Meta-heuristic techniques offset the limitations of mathematical methods through their stochastic nature and ease of implementation. Meta-heuristics have gained popularity in academia and are commonly used to solve complex engineering and scientific problems (Rezk et al. 2024). Although nature-inspired meta-heuristics can be highly effective on many real-world optimization problems, they may fail to provide adequate solutions to others, partly because these approaches tend to become trapped in local or sub-optimal solutions (Kumar et al. 2023; Ghasemi et al. 2024).

Meta-heuristic algorithms that draw inspiration from artificial, natural, and occasionally supernatural phenomena have become ubiquitous in the literature (Sharma and Raju 2024). Everything from simulated annealing to swarm intelligence, evolutionary theory, human behavior, musicians, and even the COVID-19 epidemic appear to be potential sources of inspiration for creating “novel” optimization methods. The history of meta-heuristics has been heavily influenced by natural processes (Gendreau et al. 2010), yet in the past two decades, too many self-described “novel” metaphor-based algorithms have been presented in the literature. It is regrettably unclear in the vast majority of instances why the presented metaphors are employed and what new insights they offer to the meta-heuristics’ community.

In studies that propose so-called “novel” metaphor-based techniques, the following elements are among the most problematic. First, they redefine concepts already recognized in the optimization field by introducing new terminology based on a metaphor. Second, they use the metaphor to construct mathematical models that are trivial and only loosely based on the metaphor itself, so the models do not accurately reflect it; moreover, the proposed algorithms frequently do not correspond to the mathematical models derived from the metaphor. Third, they justify the use of a new metaphor with reasons such as “it has never been used before” or “the mathematical models are different from those used in the past”, instead of defending it on a solid scientific foundation, describing the optimization process the metaphor represents, and explaining how it was used to make effective design decisions in the proposed algorithm. Lastly, they offer biased assessments and comparisons with alternative approaches, such as experimental evaluations based on a small number of low-complexity problems and/or comparisons with outdated methods whose performance is far from the state of the art (Camacho-Villalón et al. 2023).

In light of the above, any new optimization technique should improve on existing algorithms and provide unique advantages not found in algorithms already reported in the literature. In this way, a new optimization technique offers an opportunity to share knowledge for addressing challenging real-world problems. New optimization algorithms frequently contribute search mechanisms, or engines, that can be integrated into existing methods to enhance their optimization effectiveness. Thus, the optimization community benefits from new methods, which remain useful for experimenting with different search strategies on particular real-world problems (Abdollahzadeh et al. 2024). These fundamental insights are the primary motivation for the current work. The literature suggests three basic approaches to creating meta-heuristic algorithms: proposing new optimization algorithms, combining existing algorithms, and creating hyper heuristics. Developing novel optimization algorithms and integrating them with existing ones are complementary rather than competing activities. On the one hand, new optimization approaches can offer better solutions to difficult real-world problems while compensating for the shortcomings of existing algorithms in certain scenarios or on specific difficulties. On the other hand, many modern optimization algorithms introduce operators or strategies with novel search features, and these components offer a variety of ways to improve the performance of existing optimization algorithms.

A hyper heuristic is a search methodology for selecting or generating heuristics (Burke et al. 2010). Hyper heuristics provide a high-level strategy (HLS) that manipulates or controls a set of low-level heuristics (LLHs). Since meta-heuristics can be applied to a wide range of real-world optimization problems, they are considered general-purpose techniques (Blocho 2020). Hyper heuristics can improve the agility and performance of a search by combining and altering several meta-heuristics. In this sense, meta-heuristics are an essential component of hyper heuristics, and the development of new meta-heuristics enlarges the pool of techniques from which hyper heuristics can select; adding better and more efficient meta-heuristics to this pool may increase the effectiveness of hyper heuristics. New meta-heuristics also often introduce cutting-edge ideas and concepts, which hyper heuristics can incorporate into their decision-making process to improve their flexibility across problem domains. Novel meta-heuristics can likewise inspire new hyper heuristics, as researchers adapt concepts from novel techniques to develop more advanced hyper heuristics.
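To make the HLS/LLH split concrete, the following minimal Python sketch shows a selection hyper heuristic under stated assumptions: the three low-level heuristics (`perturb_one`, `perturb_all`, `shrink`), the credit-based roulette-wheel choice, and the greedy acceptance rule are hypothetical illustrations chosen for brevity, not a method taken from the cited works.

```python
import random
from typing import Callable, List, Tuple

Solution = List[float]
# A low-level heuristic (LLH) maps a solution and an RNG to a new candidate.
LowLevelHeuristic = Callable[[Solution, random.Random], Solution]


def perturb_one(x: Solution, rng: random.Random) -> Solution:
    """Hypothetical LLH: Gaussian change to a single random coordinate."""
    y = list(x)
    i = rng.randrange(len(y))
    y[i] += rng.gauss(0.0, 0.5)
    return y


def perturb_all(x: Solution, rng: random.Random) -> Solution:
    """Hypothetical LLH: small Gaussian change to every coordinate."""
    return [xi + rng.gauss(0.0, 0.1) for xi in x]


def shrink(x: Solution, rng: random.Random) -> Solution:
    """Hypothetical LLH: scale the solution towards the origin."""
    factor = rng.uniform(0.5, 1.0)
    return [factor * xi for xi in x]


def selection_hyper_heuristic(
    f: Callable[[Solution], float],
    x0: Solution,
    llhs: List[LowLevelHeuristic],
    iters: int = 500,
    seed: int = 0,
) -> Tuple[Solution, float, List[float]]:
    """High-level strategy (HLS): pick an LLH by roulette wheel over credits."""
    rng = random.Random(seed)
    credit = [1.0] * len(llhs)
    x, fx = list(x0), f(x0)
    for _ in range(iters):
        k = rng.choices(range(len(llhs)), weights=credit)[0]
        y = llhs[k](x, rng)
        fy = f(y)
        if fy < fx:
            # Greedy acceptance; reward the LLH that produced the improvement.
            x, fx = y, fy
            credit[k] += 1.0
        else:
            # Decay the credit of an unsuccessful LLH (floor keeps it selectable).
            credit[k] = max(0.1, 0.99 * credit[k])
    return x, fx, credit
```

The HLS never inspects the problem directly; it only observes which LLH produced improvements, which is what lets a hyper heuristic reuse the same selection logic across problem domains. Adding a new meta-heuristic amounts to appending one more callable to `llhs`.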

For example, the promising cuckoo search (CS) algorithm (Yang and Deb 2014) introduces a Lévy flight mechanism with strong exploration capability. This mechanism has been widely adopted by contemporary algorithms to improve their ability to avoid local optima. Hybrid algorithms are also produced by combining new algorithms with existing ones, so that they can exploit the strengths of each algorithm to increase optimization efficiency. As another example, the ant colony optimization (ACO) algorithm (Dorigo et al. 1996) mimics the behavior of foraging ants and makes decisions in path planning problems based on how ants search for food. While foraging, ants deposit pheromones on the ground, and the amount of pheromone determines where other ants will travel. As more ants follow and reinforce a path, the pheromone accumulates and attracts still more ants, until the colony settles on the best route to the food source. Because the ACO model is based on the real-world foraging strategies of ants, path planning-related problems such as vehicle routing, shop scheduling, and computational optimization can be handled effectively by ACO (Comert and Harun 2023). On the other hand, ACO struggles with other problems, such as continuous and high-dimensional optimization problems. Different meta-heuristics have different origins and exhibit different search behaviors; as a result, each approach is suited to a limited set of problems, which may leave current optimization algorithms unable to tackle some newly emerging or extremely intricate real-world problems (Zhong et al. 2022).
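The Lévy flight mechanism popularized by CS can be sketched in Python with Mantegna’s algorithm, a standard way to draw heavy-tailed step lengths. This is a minimal illustration, not the CS authors’ code: the exponent `beta = 1.5`, the scale factor `alpha = 0.01`, and the move `x + alpha * step * (x - best)` follow a form commonly seen in CS implementations and should be treated as assumptions; the function names are hypothetical.

```python
import math
import random
from typing import List, Optional


def mantegna_sigma(beta: float) -> float:
    """Standard deviation of the numerator u in Mantegna's algorithm."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(dim: int, beta: float = 1.5,
              rng: Optional[random.Random] = None) -> List[float]:
    """Draw a dim-dimensional heavy-tailed step: u / |v|^(1/beta)."""
    rng = rng or random.Random()
    sigma = mantegna_sigma(beta)
    step = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma)  # u ~ N(0, sigma^2)
        v = rng.gauss(0.0, 1.0)    # v ~ N(0, 1)
        step.append(u / abs(v) ** (1.0 / beta))
    return step


def levy_move(x: List[float], best: List[float], alpha: float = 0.01,
              beta: float = 1.5,
              rng: Optional[random.Random] = None) -> List[float]:
    """Cuckoo-style move: mostly small steps with occasional long jumps."""
    step = levy_step(len(x), beta, rng)
    return [xi + alpha * si * (xi - bi) for xi, si, bi in zip(x, step, best)]
```

Because `|v|` is occasionally close to zero, a few draws are very large; these rare long jumps are what give Lévy flights their ability to leave a basin of attraction, while most steps stay small.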

The two most important aspects of meta-heuristic algorithms for solving optimization problems are exploration and exploitation (Daliri et al. 2024).

1.1 Exploration and exploitation

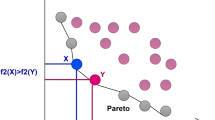

Regardless of their differences, meta-heuristic algorithms follow the same general path to estimate or find globally optimal solutions. Optimization starts from an initial collection of random solutions, which should change abruptly and randomly so that they spread globally across the search space. This phase is referred to as “exploration” of the search space, during which sudden changes drive the solutions towards different parts of the search space (Askarzadeh 2016). The primary goals of this stage are to identify the most promising regions of the search space and to escape from local optima. Once the search space has been sufficiently explored, the solutions begin to change gradually and move locally towards the most promising solutions, which can improve their quality. Improving the best solutions obtained during exploration is the main goal of this step, which is called “exploitation” (Xue and Shen 2020). Although local optima may still be avoided during the exploitation phase, the region covered by the search is smaller than during exploration; in this situation, solutions can only escape local optima that lie near the global optimum. Thus, the exploration and exploitation phases pursue opposing goals.
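The shift from exploration to exploitation described above is often driven by a control coefficient that shrinks over the iterations. The Python sketch below is a generic, hypothetical illustration and not the TOC update rule: a linearly decreasing coefficient `step_scale` controls the size of random global jumps, while a random pull towards the best solution found so far supplies exploitation. The scalar bounds `lb`/`ub` and the linear schedule are assumptions.

```python
import random
from typing import List


def step_scale(t: int, T: int, a_max: float = 1.0) -> float:
    """Linearly decreasing coefficient: a_max at t = 0 and 0 at t = T."""
    return a_max * (1.0 - t / T)


def move(x: List[float], best: List[float], t: int, T: int,
         lb: float, ub: float, rng: random.Random) -> List[float]:
    """Move one agent: large random jumps early, local moves near best later."""
    a = step_scale(t, T)
    new = []
    for xi, bi in zip(x, best):
        attract = rng.random() * (bi - xi)             # exploitation: pull towards best
        jump = a * (ub - lb) * rng.uniform(-1.0, 1.0)  # exploration: random global jump
        new.append(min(ub, max(lb, xi + attract + jump)))  # keep inside the bounds
    return new
```

At `t = 0` a jump can span the whole search range; at `t = T` the jump term vanishes and each coordinate lands between the agent’s current position and the best solution, which is the purely exploitative regime.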

The most common way to evaluate a new meta-heuristic’s exploration, exploitation, and the balance between them is to show how competitive it is on optimization problems compared with existing meta-heuristics and mathematical programming techniques. Although current meta-heuristics have proven their value in consistently identifying globally optimal solutions for numerous real-world problems, no existing meta-heuristic can reliably identify the global optimum for all types of problems (Youfa et al. 2024). According to the no-free-lunch (NFL) theorem (Wolpert and Macready 1997), no optimization algorithm can find the best solutions for all kinds of problems. In other words, when averaged over all possible problems, all algorithms perform equally well, so if an algorithm is fine-tuned to achieve high performance on a particular class of problems, this gain is offset by poorer performance on other classes. The NFL theorem therefore keeps this area of study open and encourages researchers to devise new ways to improve accuracy and strengthen optimization, in order to tackle the complicated real-world problems that continually arise with technological advances (Zhao et al. 2024). Accordingly, it is important for researchers to search for, devise, or propose new meta-heuristics that offer significant improvements over current techniques in solving optimization problems.

1.2 Outlines and motives of the proposed study

Constructing new, efficient meta-heuristic algorithms is essential but challenging. To the best of our knowledge, no existing meta-heuristic specifically models and mathematically implements the life cycle of tornadoes and the way thunderstorms and windstorms evolve to form tornadoes in nature. This gap is the main motivation behind this work. This paper therefore proposes and develops a new meta-heuristic, named the tornado optimizer with Coriolis force (TOC), which is inspired by a simulation of the life cycle of tornado formation and dissipation in nature. Another motivation for developing this algorithm is the expectation that it can solve both unconstrained and constrained optimization problems that are difficult to solve with current optimization algorithms. A further expectation is that the proposed optimizer will find feasible optimal solutions, superior to those found by existing meta-heuristics, for well-known unconstrained optimization functions as well as for nonlinear constrained engineering design and industrial problems. Other aims include finding the global minimum among several local minima in multimodal functions and finding all global minima of test functions that have more than one. Finally, since exploration and exploitation are essential to the success of any meta-heuristic, TOC aims to orchestrate them so as to achieve a suitable balance between the two.

1.3 Contributions of the work

The main novelties and contributions of this work can be succinctly summarized by the following points:

1. A new optimizer, referred to as TOC, is presented to simulate the life cycle of tornado formation and dissipation; it is analyzed thoroughly and expressed mathematically in detail.

2. The Coriolis force and the cyclostrophic wind speed are two vital novel concepts introduced in TOC to improve its competitiveness. These concepts were modeled mathematically to achieve better exploration and stronger exploitation.

3. The performance of the proposed optimizer was verified on 23 baseline benchmark functions and 29 well-known benchmark problems from the CEC-2017 test suite at various dimensions, and a comprehensive comparison with several strong meta-heuristics was conducted to demonstrate the advantages of the proposed optimizer.

4. The relevance and reliability of the proposed optimizer were further investigated by applying it to 8 classical engineering problems and one complex industrial problem, and its results were compared with those of several meta-heuristics.

The remainder of this paper is structured as follows: Sect. 2 reviews meta-heuristics and their classes as reported in the literature. Section 3 describes the concepts that inspired the optimizer developed in this work. The mathematical formulation of the proposed optimizer is presented in full in Sect. 4. Section 6 presents the computational evaluation results, convergence behavior, and statistical results of the competing algorithms. Section 7 demonstrates the efficiency and practicality of TOC in tackling 8 engineering test cases. The experimental results of applying TOC to an industrial problem are presented and discussed in Sect. 8. Finally, Sect. 10 draws the main findings and outlines future directions of this work.

2 Literature review

Meta-heuristic algorithms, which incorporate heuristics derived from natural phenomena, biological processes, the natural life of creatures, human behavior, and even mathematics, are problem-independent algorithmic frameworks. Owing to their advantages over mathematical approaches, such as stochasticity, ease of understanding, and the ability to treat problems as black boxes, meta-heuristics are a viable alternative. They are widely used to tackle a broad range of challenging optimization problems and have recently attracted considerable interest in the literature. Meta-heuristics can broadly be classified into the following classes: evolutionary-based algorithms (EAs), swarm-based algorithms (SAs), human-based algorithms (HAs), physics-based algorithms (PAs), mathematics-based algorithms (MAs), sport-based algorithms (SBAs), music-based algorithms (MBAs), and chemistry-based algorithms (CBAs). These classes reflect the processes that inspired the algorithms belonging to each of them (Zhao et al. 2024). Each class is described below, along with some well-known algorithms that belong to it:

2.1 Evolutionary-based algorithms (EAs)

EAs are among the earliest meta-heuristic techniques; they are inspired by biological evolutionary phenomena such as natural selection, inheritance, and other processes resulting from biological evolution (Back 1996). Genetic algorithms (GAs), which are based on Darwin’s theory of evolution, are among the most popular and long-standing EAs, and indeed meta-heuristics, available today. In a GA, a set of individuals is chosen at random as starting points in the search space and evolves iteratively through evolutionary operators such as selection, mutation, and reproduction (crossover). At the end of the iterations, the best individual found so far is taken as the optimal solution. Differential evolution (DE) (Price 1996) is a classical EA that employs some of the same evolutionary operators as GAs. The main difference between them is that DE places more emphasis on the mutation operator, whereas GA places more emphasis on the crossover operator (Holland et al. 1992). Table 1 lists a few additional well-known EAs.
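To illustrate DE’s mutation-driven search, here is a minimal Python sketch of one generation of the classic DE/rand/1/bin scheme. The control parameters `F = 0.5` and `CR = 0.9` are common textbook defaults assumed for illustration, and the function name is hypothetical.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def de_rand_1_bin(f: Callable[[Vector], float], pop: List[Vector],
                  F: float = 0.5, CR: float = 0.9,
                  rng: Optional[random.Random] = None) -> List[Vector]:
    """One DE/rand/1/bin generation (needs at least 4 individuals)."""
    rng = rng or random.Random()
    n, dim = len(pop), len(pop[0])
    next_pop = []
    for i, target in enumerate(pop):
        # Mutation: a random base vector plus a scaled difference of two others.
        r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)
        mutant = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d]) for d in range(dim)]
        # Binomial crossover; j_rand guarantees at least one gene from the mutant.
        j_rand = rng.randrange(dim)
        trial = [mutant[d] if (rng.random() < CR or d == j_rand) else target[d]
                 for d in range(dim)]
        # Greedy one-to-one selection between trial and target.
        next_pop.append(trial if f(trial) <= f(target) else target)
    return next_pop
```

Because the difference vector `pop[r2] - pop[r3]` shrinks as the population converges, the mutation step size adapts automatically, which is one reason the mutation operator carries so much of DE’s search.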

2.2 Swarm-based algorithms

SAs are among the fastest-growing classes of meta-heuristics; they draw inspiration from the collective behavior of biological populations found in nature, such as microorganisms and animals. Several classical SAs have strongly influenced the optimization field. Ant colony optimization models the foraging strategy of an ant colony (Dorigo et al. 1996): while foraging, ants leave behind chemical substances called pheromones along their path, and other ants follow the pheromone trail to the food, sensing its strength. The particle swarm optimization (PSO) algorithm (Kennedy and Eberhart 1995) mimics the social interactions within bird flocks and fish schools. The artificial bee colony (ABC) algorithm mimics the cooperation and division of labor that bees use to find nectar in their surroundings (Karaboga and Basturk 2007). The whale optimization algorithm (WOA) (Mirjalili and Lewis 2016) mimics the behavior of humpback whales searching for prey, encircling prey, and attacking with bubble nets. The capuchin search algorithm (CapSA) (Braik et al. 2021) models the collective hunting behavior of capuchin monkeys in nature, whereas the chameleon swarm algorithm (CSA) (Braik 2021) mimics the hunting and foraging behavior of chameleons in the wild. Another recent, well-known SA is the white shark optimizer (WSO) (Braik et al. 2022), which mimics the foraging behavior of white sharks in the ocean. Table 2 lists a variety of widely used meta-heuristics in this class.
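The PSO update can be sketched in a few lines of Python. This is a minimal global-best variant that uses the inertia-weight form of the velocity update (a later refinement of the original 1995 rule); the values `w = 0.7` and `c1 = c2 = 1.5`, the scalar bounds, and the clipping of positions to those bounds are illustrative assumptions.

```python
import random
from typing import Callable, List, Tuple


def pso(f: Callable[[List[float]], float], dim: int, lb: float, ub: float,
        n: int = 20, iters: int = 200, w: float = 0.7,
        c1: float = 1.5, c2: float = 1.5,
        seed: int = 0) -> Tuple[List[float], float]:
    """Minimal global-best PSO with an inertia weight."""
    rng = random.Random(seed)
    x = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    v = [[0.0] * dim for _ in range(n)]
    pbest = [xi[:] for xi in x]              # personal best positions
    pbest_f = [f(xi) for xi in x]
    g = min(range(n), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]  # swarm (global) best
    for _ in range(iters):
        for i in range(n):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive (own memory) + social (swarm memory) terms.
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])
                           + c2 * r2 * (gbest[d] - x[i][d]))
                x[i][d] = min(ub, max(lb, x[i][d] + v[i][d]))
            fi = f(x[i])
            if fi < pbest_f[i]:
                pbest[i], pbest_f[i] = x[i][:], fi
                if fi < gbest_f:
                    gbest, gbest_f = x[i][:], fi
    return gbest, gbest_f
```

The cognitive term pulls each particle back to its own best position and the social term pulls it towards the swarm’s best, which is how PSO models the shared information of a flock or school.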

2.3 Human-based algorithms

HAs are recently developed meta-heuristics that have received growing attention in the literature. They are inspired mainly by social relationships between humans and by non-physical human activities. The imperialist competitive algorithm (ICA) was derived from interpersonal human behavior and mimics the processes of imperialistic competition and colonial assimilation (Esmaeil and Caro 2007). Teaching-learning-based optimization (TLBO) (Venkata Rao et al. 2011) is inspired by the guidance of a teacher and the cooperation among students. The method consists of two distinct phases: in the teacher phase, learners improve by learning from the teacher, and in the learner phase, they improve by interacting with one another. Table 3 lists some notable examples of HAs.
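The two TLBO phases can be summarized by a minimal Python sketch of one generation. It assumes the usual teacher-phase update `x + r * (teacher - TF * mean)` with a teaching factor `TF` drawn from {1, 2}, greedy acceptance in both phases, and, as a simplification, learner-phase partners taken from the population as it stood at the start of the generation; the function name is hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]


def tlbo_generation(f: Callable[[Vector], float], pop: List[Vector],
                    rng: random.Random) -> List[Vector]:
    """One TLBO generation: teacher phase then learner phase for each learner."""
    n, dim = len(pop), len(pop[0])
    fit = [f(x) for x in pop]
    teacher = pop[min(range(n), key=lambda i: fit[i])]  # the best learner teaches
    mean = [sum(x[d] for x in pop) / n for d in range(dim)]
    new_pop = []
    for i, x in enumerate(pop):
        fx = fit[i]
        # Teacher phase: shift the learner by the teacher-vs-class-mean difference.
        tf = rng.randint(1, 2)
        cand = [x[d] + rng.random() * (teacher[d] - tf * mean[d]) for d in range(dim)]
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
        # Learner phase: interact with a random classmate j != i.
        j = rng.choice([k for k in range(n) if k != i])
        # Move away from a worse partner, or towards a better one.
        sign = 1.0 if fx < fit[j] else -1.0
        cand = [x[d] + rng.random() * sign * (x[d] - pop[j][d]) for d in range(dim)]
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
        new_pop.append(x)
    return new_pop
```

Because every candidate is accepted only if it improves the learner, each learner’s fitness is non-increasing from one generation to the next, and TLBO needs no algorithm-specific tuning parameters beyond population size and iteration count.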

2.4 Physics-based algorithms

PAs are a fundamental subset of meta-heuristic algorithms. They draw on physical laws, processes, phenomena, concepts, and motions, covering aspects of atomic physics, heat, electricity, and mechanics. The law of gravitation motivates a well-known PA, the gravitational search algorithm (GSA) (Rashedi et al. 2009). In GSA, search agents attract one another through gravity, and heavier agents (better solutions) attract the others more strongly. Another popular PA is atom search optimization (ASO) (Zhao et al. 2019), which simulates atomic motion driven by the forces between atoms. In this case, the interaction forces derive from the Lennard–Jones potential, while the bond-length potential provides the constraint forces. Table 4 includes additional typical PAs.

2.5 Mathematics-based algorithms

MAs are a newer but important class of meta-heuristics that has achieved considerable advances in the field of optimization. MAs are based on mathematical operations, rules, formulas, and theories. Two well-known examples of this class are the arithmetic optimization algorithm (AOA) (Abualigah et al. 2021) and the sine-cosine algorithm (SCA) (Mirjalili 2016). AOA is based on the distribution behavior of the four basic arithmetic operators: addition, subtraction, multiplication, and division. SCA exploits the periodicity and oscillation of the sine and cosine functions. Although MAs are currently less competitive than other classes of meta-heuristics, this class appears promising. Other algorithms in this class include the Lévy flight distribution (LFD) method (Houssein et al. 2020), which models a Lévy flight random walk; the circle search algorithm (CSA) (Qais et al. 2022), which models the geometric properties of circles; and the golden sine algorithm (GSA) (Tanyildizi and Demir 2017), which exploits the properties of the sine function.
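The SCA position update can be sketched in Python as follows. The sketch assumes the commonly described form: `r1 = a - t * a / T` with `a = 2` decreases linearly over the run, `r2` is drawn from [0, 2π], `r3` from [0, 2], and `r4` from [0, 1] selects the sine or cosine branch; `dest` denotes the best solution found so far. The function name is hypothetical.

```python
import math
import random
from typing import List, Optional


def sca_update(x: List[float], dest: List[float], t: int, T: int,
               a: float = 2.0,
               rng: Optional[random.Random] = None) -> List[float]:
    """One sine-cosine position update for a single search agent."""
    rng = rng or random.Random()
    r1 = a - t * a / T  # amplitude: large early (exploration), zero at t = T
    new = []
    for xi, pi in zip(x, dest):
        r2 = rng.uniform(0.0, 2.0 * math.pi)  # phase of the sine/cosine wave
        r3 = rng.uniform(0.0, 2.0)            # random weight on the destination
        r4 = rng.random()                     # switch between sine and cosine
        wave = math.sin(r2) if r4 < 0.5 else math.cos(r2)
        new.append(xi + r1 * wave * abs(r3 * pi - xi))
    return new
```

The oscillating `wave` term lets an agent move either towards or past the destination, and the shrinking amplitude `r1` gradually turns those oscillations into local refinements.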

2.6 Sport-based algorithms

SBAs are inspired by sports and other physical activities. The classic SBA, the league championship algorithm (LCA) (Kashan 2009), is driven by the rivalry among sports teams in a league. An artificial league in LCA consists of several weeks of matches between teams. Teams play each other in pairs every week, and the outcome of each match is determined by the teams’ fitness values (playing strength) and win/loss records. During the recovery phase, teams adjust their lineups and playing styles in preparation for the next week’s match. The championship continues according to the league schedule for a number of seasons or until a termination condition is met. Three other SBAs frequently used to handle complex optimization problems are world cup optimization (WCO), which recreates the FIFA World Cup; tug of war optimization (TWO) (Kaveh and Zolghadr 2016), which simulates a tug-of-war contest; and soccer league competition (SLC) (Moosavian and Babak 2014), which simulates competition in a soccer league.

2.7 Music-based algorithms

MBAs are an inventive class of meta-heuristics inspired by music and musical processes. Harmony search (HS) (Geem et al. 2001) is a well-known MBA. It mimics musical improvisation, in which musicians adjust the pitches of their instruments to achieve the best harmony. Another well-known MBA is the method of musical composition (MMC) (Mora-Gutiérrez et al. 2014), which simulates a dynamic music composition system.
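The improvisation step of HS can be sketched in Python as follows. The parameter names follow the usual HS terminology (harmony memory size `hms`, harmony memory considering rate `hmcr`, pitch adjusting rate `par`, bandwidth `bw`), but the particular values and the choice to scale the bandwidth by the search range are assumptions made for this minimal illustration.

```python
import random
from typing import Callable, List, Tuple


def harmony_search(f: Callable[[List[float]], float], dim: int,
                   lb: float, ub: float, hms: int = 10, hmcr: float = 0.9,
                   par: float = 0.3, bw: float = 0.05, iters: int = 2000,
                   seed: int = 0) -> Tuple[List[float], float]:
    """Minimal harmony search: improvise, then replace the worst harmony."""
    rng = random.Random(seed)
    memory = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(hms)]
    scores = [f(h) for h in memory]
    for _ in range(iters):
        new = []
        for d in range(dim):
            if rng.random() < hmcr:
                # Memory consideration: reuse a "pitch" from a stored harmony.
                value = memory[rng.randrange(hms)][d]
                if rng.random() < par:
                    # Pitch adjustment: small random tweak within the bandwidth.
                    value += bw * (ub - lb) * rng.uniform(-1.0, 1.0)
            else:
                # Random selection: a completely new pitch.
                value = rng.uniform(lb, ub)
            new.append(min(ub, max(lb, value)))
        score = f(new)
        worst = max(range(hms), key=lambda i: scores[i])
        if score < scores[worst]:
            memory[worst], scores[worst] = new, score
    best = min(range(hms), key=lambda i: scores[i])
    return memory[best], scores[best]
```

Memory consideration corresponds to a musician replaying a known phrase, pitch adjustment to fine-tuning it, and random selection to trying something entirely new.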

2.8 Chemistry-based algorithms

CBAs are founded on the principles of chemical reactions and thermodynamics. One example is chemical reaction optimization (CRO) (Lam and Li 2012), which is based on the idea that molecular collisions drive a chemical reaction towards stable, low-energy states on the potential energy surface. Four elementary collision reactions are constructed in accordance with the principle of energy conservation. Another well-known CBA is the artificial chemical reaction optimization algorithm (ACROA), which was motivated by the various types of chemical reactions and their frequencies (Alatas 2011).

Notably, most of the algorithms listed above share the same two traits: exploration and exploitation (Braik et al. 2021). Exploration means that an algorithm searches the whole decision space globally and in depth, whereas exploitation means that it intensively searches a particular region, usually the neighborhood of existing solutions. Exploration increases the diversity of the candidate solutions by searching widely across the variable space and generating solutions that differ from the current ones. Exploitation drives the algorithm to investigate the local neighborhood of existing solutions in order to find better ones, which accelerates convergence and improves solution accuracy; however, exploitation alone cannot escape the trap of local optima. Excessive exploration can slow convergence and reduce solution accuracy, whereas excessive exploitation can speed up convergence at the cost of a higher risk of becoming trapped in a local optimum. Therefore, to avoid premature convergence and stagnation in local optima, an efficient optimization algorithm must strike a suitable balance between exploration and exploitation (Braik et al. 2023).
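One way to quantify the balance between exploration and exploitation discussed above is to track population diversity over the iterations. The Python sketch below assumes a dimension-wise diversity measure used in several empirical studies of swarm algorithms: the mean absolute deviation of each coordinate from its median, averaged over dimensions, with exploration and exploitation percentages computed relative to the maximum diversity observed during a run. The function names are hypothetical.

```python
from statistics import median
from typing import List, Tuple


def dimension_wise_diversity(pop: List[List[float]]) -> float:
    """Mean absolute deviation from the per-dimension median, averaged over dims."""
    n, dim = len(pop), len(pop[0])
    total = 0.0
    for d in range(dim):
        column = [x[d] for x in pop]
        m = median(column)
        total += sum(abs(m - v) for v in column) / n
    return total / dim


def exploration_exploitation_percent(
        div_history: List[float]) -> Tuple[List[float], List[float]]:
    """Per-iteration exploration and exploitation percentages (max diversity > 0)."""
    div_max = max(div_history)
    xpl = [100.0 * d / div_max for d in div_history]
    xpt = [100.0 * abs(d - div_max) / div_max for d in div_history]
    return xpl, xpt
```

Recording `dimension_wise_diversity(pop)` once per iteration and passing the history to `exploration_exploitation_percent` yields two curves that sum to 100%; a high exploration share early in the run that gives way to a high exploitation share later is usually read as a healthy transition.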

Although the algorithms above play an important role in optimization, they have drawbacks in some cases. Some suffer from a high computational burden, high complexity, or difficult parameter tuning. In addition, many algorithms use the same search approach for individuals as for the population as a whole, which strongly affects the balance between local exploitation and global exploration and makes them inefficient on continuous optimization problems, especially very complex ones. Given the number of optimization algorithms developed so far, the main question is whether further algorithms are needed. As mentioned before, the NFL theorem (Wolpert and Macready 1997) addresses this question: it shows that an algorithm may perform very well on some optimization problems but poorly on a different class of problems, because real-world problems differ both in their nature and in how they are represented mathematically. The NFL theorem also motivates this study’s development of a novel optimization algorithm capable of producing high-quality near-optimal or optimal solutions to optimization problems. Based on the above issues, a physics-based algorithm called the tornado optimizer with Coriolis force (TOC), which draws inspiration from the formation of tornadoes, is proposed to increase optimization efficiency. By comparing it with other optimizers that have shown promising performance in the literature, this work evaluates TOC on well-known constrained and unconstrained optimization problems.

3 Inspiration

The idea of the tornado optimizer with Coriolis force (TOC) presented in this paper is inspired by the observation of tornado formation and dissipation and of how windstorms and thunderstorms evolve to form tornadoes in nature (Cao and Liu 2023). To clarify, the basics of how tornadoes are created and how they follow a recognizable life cycle are described as follows. The cycle begins when the wind speed and direction change within a storm system. This creates a spinning effect, which is tipped vertically by an updraft through the thunderclouds, and a storm usually develops at this point. When a storm intensifies, it often turns into a supercell thunderstorm. In other words, a powerful thunderstorm develops a rotating system a few miles up in the atmosphere, which becomes a supercell or thundercloud cell. These supercell thunderstorms are distinct, isolated cells that are not part of a storm line; in essence, supercells are rotating storms. A storm cloud may produce a tornado when a rotating vertical column of air and a supercell thunderstorm come together (Zou and He 2023). The stages of the tornado formation process can be observed in Fig. 1.

Phases of tornado formation (SciJinks 2024a)

As observed in Fig. 1, tornadoes usually begin with a thunderstorm, though not just any thunderstorm: a specific kind of rotating thunderstorm called a supercell, as shown in Fig. 1k. As shown in Fig. 1a and b, supercells can bring damaging hail, strong winds, lightning, and flash floods. Supercells form when the air becomes very unstable and the wind speed and direction differ at different altitudes. This condition is called wind shear, as shown in Fig. 1c to e. When winds at ground level blow in one direction and winds higher up in the atmosphere blow in a different direction, a horizontal tube of rotating air can form, as shown in Fig. 1f to h. In a thunderstorm, warm air rises within the storm. This rising air, called an updraft, can turn the horizontal rotating tube of air into a vertical one, as shown in Fig. 1i. When this happens, the whole storm begins to spin, creating a supercell. Some supercells form a funnel cloud, as shown in Fig. 1j and k. If this funnel cloud extends to the ground, it is called a tornado, as shown in Fig. 1l.

A schematic diagram of a tornado (SciJinks 2024b)

In Fig. 2, the initial funnel, which hovers over the surface, grows from a thundercloud. Then, if conditions are favorable (temperature swings, winds, etc.), the tornado takes shape and reaches the ground. Finally, when the conditions start to change, the funnel narrows and gradually rises back toward the cloud. Occasionally, two or more tornadoes may occur from a single storm at the same time. Although tornadoes can vary in size, strength, and location, they all share certain traits (Hamideh and Sen 2022), which can be observed in Fig. 2 and can be described as follows:

In a nutshell, the Earth's rotation about its axis deflects winds to the right in the Northern Hemisphere and to the left in the Southern Hemisphere. This is known as the Coriolis force or Coriolis effect; however, it does not directly affect every air movement, particularly small-scale ones. In general, the Coriolis effect directly governs the direction of rotation only of the largest atmospheric and oceanographic circulation systems on Earth.

3.1 Tornadoes and the Coriolis force (CF)

As windstorms move relative to the Earth, they experience a compound centrifugal force based on the combined tangential velocities of the Earth's surface and the windstorms. When combined with the non-perpendicular gravitational component, the result is called the Coriolis force. This force acts at 90\(^\circ \) to the right of the direction of motion in the Northern Hemisphere and at 90\(^\circ \) to the left in the Southern Hemisphere. The magnitude of the Coriolis acceleration is linear in speed and can be given as follows:

where f is the Coriolis parameter defined as presented in Eq. 2.

where \(\varOmega \) is the angular speed of the Earth, and \(\phi \) is the latitude.
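As a reading aid, the standard textbook forms of these two relations are given below. They are not necessarily typeset exactly as in Eqs. 1 and 2; \(a_C\) is an assumed symbol for the magnitude of the Coriolis acceleration and V for the wind speed.

```latex
a_{C} = f\,V, \qquad f = 2\,\varOmega \sin \phi
```

Because \(\sin \phi \) is positive in the Northern Hemisphere and negative in the Southern Hemisphere, the sign of f reproduces the rightward and leftward deflections described above.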

3.1.1 Geostrophic wind and gradient wind

A gradient wind is defined as the wind that exists if a particle's path is circular and there is a balance between the pressure gradient force, the Coriolis force, and the centrifugal force. If the flow is curved to the left (cyclonic flow in the Northern Hemisphere), the pressure gradient force must be stronger than the Coriolis force. Conversely, if the flow is curved to the right (anticyclonic flow), the pressure gradient force must be weaker than the Coriolis force. Centripetal acceleration arises from the imbalance between the pressure gradient force (PGF) and the Coriolis force (CF). The tangential wind speed can be defined as follows:

where V is the tangential wind speed, R is the radius of the curvature of a trajectory, f is the Coriolis parameter, and \(-\frac{\partial \phi }{\partial n}\) is the component of the pressure gradient force normal to the direction of the wind.

The gradient wind is typically a closer approximation to the real wind than the geostrophic wind, in which the PGF and CF are exactly balanced (Brill 2014). Because the gradient wind equation is quadratic in the wind speed, it has two possible solutions, corresponding to cyclonic flow and anticyclonic flow.
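Assuming Eq. 3 is the usual natural-coordinate gradient wind balance, with the centrifugal and Coriolis accelerations on one side and the normal pressure gradient force on the other, the two roots follow directly from the quadratic formula. This is a sketch in the notation of the surrounding text, not a transcription of the paper's equation:

```latex
\frac{V^{2}}{R} + fV = -\frac{\partial \phi }{\partial n}
\quad \Longrightarrow \quad
V = -\frac{fR}{2} \pm \sqrt{\frac{f^{2}R^{2}}{4} - R\,\frac{\partial \phi }{\partial n}}
```

Only roots that are real and consistent with the sign convention chosen for R correspond to physically meaningful flows.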

3.1.2 Cyclonic flow (low pressure)

In this case, the Coriolis force and the centrifugal force (CeF) act in the same direction. For balance, the pressure gradient force must act in the opposite direction, so the pressure is lower at the center. Taking the effect of curvature into account, the horizontal momentum equation must be expanded to include the centrifugal term:

Equation 4 can be presented as:

Using the geostrophic balance \(fV_g =-\frac{1}{\rho } \frac{\partial p}{\partial n}\), we replace the left-hand side of Eq. 5 with \(fV_g\):

where \(V_g\) is the geostrophic wind, \(V_G\) is the gradient wind, and R is the radius of curvature.

The gradient wind speed is obtained by solving Eq. 6 for \(V_G\) to get:

Equation 7 tells us that \(V_G < V_g\) in all cases because the denominator is larger than one. The difference between \(V_G\) and \(V_g\) becomes larger at smaller R, and at smaller latitude angles.
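The inequality can be seen by rearranging the cyclonic balance. Assuming Eq. 6 reads \(fV_g = fV_G + V_G^2/R\) (the Coriolis and centrifugal terms acting together against the pressure gradient force, which has been replaced by \(fV_g\)), a sketch of the step is:

```latex
fV_g = fV_G + \frac{V_G^{2}}{R}
\;\Longrightarrow\;
V_g = V_G\left(1 + \frac{V_G}{fR}\right)
\;\Longrightarrow\;
V_G = \frac{V_g}{1 + \dfrac{V_G}{fR}}
```

Since \(V_G/(fR) > 0\) for cyclonic flow, the denominator exceeds one, and it grows as R or f (i.e., the latitude) decreases.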

3.1.3 Anticyclonic flow (high pressure)

In this case, the pressure gradient force and the centrifugal force are in the same direction. For there to be equilibrium, the Coriolis force must act in the opposite direction, resulting in a higher pressure at the center.

Equation 8 can be presented as:

Proceeding in the same manner as before,

It is shown that \(V_G > V_g\) in all cases.
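To check both inequalities numerically, the sketch below solves the two balances as quadratics in \(V_G\): \(V_G^2/R + fV_G - fV_g = 0\) for cyclonic flow and \(V_G^2/R - fV_G + fV_g = 0\) for anticyclonic flow. The anticyclonic form is the standard counterpart of the cyclonic one and is an assumption about Eqs. 8 to 10; the function names and the sample values (45\(^\circ \) latitude, \(V_g = 10\) m/s) are illustrative.

```python
import math

OMEGA = 7.292115e-5  # Earth's angular rotation rate, rad/s


def coriolis_parameter(lat_deg: float) -> float:
    """Coriolis parameter f = 2 * Omega * sin(latitude)."""
    return 2.0 * OMEGA * math.sin(math.radians(lat_deg))


def gradient_wind_cyclonic(vg: float, f: float, r: float) -> float:
    """Positive root of V^2/R + f*V - f*Vg = 0 (cyclonic balance, R > 0)."""
    fr = f * r
    return (-fr + math.sqrt(fr * fr + 4.0 * fr * vg)) / 2.0


def gradient_wind_anticyclonic(vg: float, f: float, r: float) -> float:
    """Smaller root of V^2/R - f*V + f*Vg = 0 (regular anticyclonic balance).

    A real solution requires vg <= f * r / 4, which is why anticyclones
    have weak pressure gradients near their centers.
    """
    fr = f * r
    disc = fr * fr - 4.0 * fr * vg
    if disc < 0.0:
        raise ValueError("no balanced solution: vg exceeds f * r / 4")
    return (fr - math.sqrt(disc)) / 2.0


f = coriolis_parameter(45.0)
vg = 10.0  # geostrophic wind speed, m/s
for r in (2.0e6, 1.0e6, 5.0e5):  # radius of curvature, m
    vc = gradient_wind_cyclonic(vg, f, r)
    va = gradient_wind_anticyclonic(vg, f, r)
    print(f"R = {r:.0e} m: cyclonic {vc:.2f} < {vg} < anticyclonic {va:.2f}")
```

Shrinking R widens the gap on both sides, in line with the remark after Eq. 7.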

3.1.4 Cyclostrophic flow

If the horizontal scale of an atmospheric disturbance is small enough, the Coriolis force may be neglected compared with the centrifugal force and the pressure gradient force. Cyclostrophic balance occurs when the pressure gradient force and the centrifugal force are equal in magnitude and opposite in direction, as presented in Fig. 3. This is also the situation near the equator, where the Coriolis parameter vanishes, and it can be formulated mathematically as shown in Eq. 11:

Equation 11 assumes that both the centrifugal term and the pressure gradient term dominate the Coriolis term, i.e., \(\frac{V^2}{R} \gg fV\) and \(\frac{\partial \phi }{\partial n} \gg fV\).

Solving Eq. 11 gives the cyclostrophic wind speed as follows:

There are four possible cases, as summarized in Table 5.

The mathematically positive roots of the speed of the cyclostrophic wind correspond to only two physically possible solutions described as shown in Eq. 13.

Since the Coriolis force is not a factor, the cyclostrophic winds can rotate either clockwise or counterclockwise.
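A quick way to judge when the Coriolis term may be neglected is the Rossby number \(Ro = V/(fR)\), which is exactly the ratio of the centrifugal term \(V^2/R\) to the Coriolis term \(fV\). The sketch below compares an assumed tornado-scale vortex (V = 50 m/s, R = 100 m) with an assumed synoptic-scale cyclone (V = 10 m/s, R = 1000 km) at 45\(^\circ \) latitude, and evaluates the cyclostrophic speed \(V = \sqrt{R\,a}\), assuming the standard form of Eq. 12 with an inward pressure gradient acceleration \(a = -\partial \phi /\partial n\). All numbers and names are illustrative.

```python
import math

OMEGA = 7.292115e-5  # Earth's angular rotation rate, rad/s


def rossby_number(speed: float, radius: float, lat_deg: float) -> float:
    """Ro = V / (f R): ratio of the centrifugal term V^2/R to the Coriolis term f V."""
    f = 2.0 * OMEGA * math.sin(math.radians(lat_deg))
    return speed / (abs(f) * radius)


def cyclostrophic_speed(radius: float, inward_pgf: float) -> float:
    """V = sqrt(R * a) from V^2 / R = a, where a = -d(phi)/dn > 0 points to the low."""
    return math.sqrt(radius * inward_pgf)


print(rossby_number(50.0, 100.0, 45.0))   # tornado scale: Ro >> 1, Coriolis negligible
print(rossby_number(10.0, 1.0e6, 45.0))   # synoptic scale: Ro < 1, Coriolis matters
print(cyclostrophic_speed(100.0, 25.0))   # 50.0 m/s
```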

3.1.5 The gradient wind approximation

A gradient wind is just the wind component parallel to the height contour that satisfies:

Solving Eq. 14 gives:

The geostrophic flow can be defined as:

Finally, Eq. 15 can be reformulated as shown below:

In Eq. 17, the Coriolis force is not neglected relative to the centrifugal force and the pressure gradient force.

In this work, the overall picture of tornadoes in nature has guided us to the mathematical models devised for a new optimization algorithm that simulates tornado formation and carries out optimization. A thorough characterization of these models and the proposed algorithm is given below.

4 Tornado optimizer-based Coriolis force

In the tornado optimizer with Coriolis force (TOC), it is assumed that there are many windstorms, some thunderstorms, and precipitation phenomena, where tornadoes are generated by windstorms and thunderstorms, and thunderstorms are generated by windstorms. The following are the detailed mathematical models of the proposed TOC optimizer.

4.1 Initialization of population

The proposed TOC optimizer is a population-based algorithm; accordingly, the first step of the optimization process is to randomly create an initial population of design variables (i.e., windstorms and thunderstorms) between the upper bounds (u) and lower bounds (l). The best individuals, ranked by lowest cost function value (or, in some cases, highest fitness), are selected to form tornadoes, or a single tornado if there is only one. A number of good individuals (i.e., those with cost function values close to the current best solution) are chosen as thunderstorms, while all other individuals are called windstorms, which eventually evolve into thunderstorms and tornadoes.

To commence TOC as an optimization algorithm, an initial population matrix of n individuals (i.e., the population size) in a d-dimensional search space (i.e., the dimension of the problem) is created as a first step. Here, the position of every windstorm, thunderstorm, and tornado represents a candidate solution to the optimization problem. Equation 18 states how to produce the initial population of windstorms, thunderstorms, and tornadoes in the search domain using a uniform random initialization process.

where rand is an arbitrary number generated in the range [0, 1], \(y_{i, j}\) is the starting value of the ith individual in the jth dimension, and \(u_{j}\) and \(l_{j}\) reflect the upper and lower limits of the search space, respectively.
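Assuming the initialization equation takes the usual form \(y_{i,j} = l_j + rand \cdot (u_j - l_j)\), which matches the variable definitions above, it can be sketched with NumPy as follows (the function name is hypothetical):

```python
import numpy as np


def init_population(n: int, lower, upper, rng: np.random.Generator) -> np.ndarray:
    """Return an (n, d) matrix with y[i, j] = l[j] + rand * (u[j] - l[j])."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # rng.random draws from [0, 1); broadcasting applies per-dimension bounds.
    return lower + rng.random((n, lower.size)) * (upper - lower)


rng = np.random.default_rng(0)
y = init_population(30, lower=[-5.0, 0.0, -1.0], upper=[5.0, 10.0, 1.0], rng=rng)
```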

After the n individuals are created, the \(n_{to}\) best candidates in the population are selected as thunderstorms and tornadoes. Among them, the individuals with the best values are considered tornadoes, and their number is denoted \(n_o\). Simply put, \(n_{to}\) is the sum of the numbers of thunderstorms and tornadoes, as given in Eq. 19.

where \(n_t\) refers to the number of thunderstorms, while \(n_o\) refers to the number of tornadoes, which is equal to one in this work.

The rest of the population forms windstorms, which may evolve into thunderstorms or directly into tornadoes. The number of windstorms can be calculated using Eq. 20.

where \(n_w\) stands for the number of windstorms and n denotes the total population size (i.e., \(n = n_w + n_t + n_o\)).
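A minimal sketch of this partition, assuming minimization and the setting \(n_o = 1\) used in this work: the population is sorted by cost, the first \(n_o\) rows become \(y_o\), the next \(n_t\) rows become \(y_t\), and the remaining \(n_w = n - n_{to}\) rows become \(y_w\). The helper name and the sphere cost are illustrative only.

```python
import numpy as np


def split_population(pop: np.ndarray, cost: np.ndarray, n_t: int, n_o: int = 1):
    """Sort by cost (ascending) and split into tornadoes, thunderstorms, windstorms."""
    order = np.argsort(cost)
    pop, cost = pop[order], cost[order]
    n_to = n_o + n_t          # thunderstorms + tornadoes
    y_o = pop[:n_o]           # best individual(s): tornado(es)
    y_t = pop[n_o:n_to]       # next best: thunderstorms
    y_w = pop[n_to:]          # the rest: windstorms, n_w = n - n_to
    return y_o, y_t, y_w, cost


rng = np.random.default_rng(1)
pop = rng.uniform(-5.0, 5.0, size=(20, 2))
cost = np.sum(pop**2, axis=1)  # sphere function as a stand-in cost
y_o, y_t, y_w, sorted_cost = split_population(pop, cost, n_t=4)
```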

The initial population of windstorms, thunderstorms, and tornadoes can be described by a matrix of individuals of size \(n \times d\). Therefore, the randomly generated matrix y (that is, the total population) can be shown as follows:

where \(y_{i, j}\) denotes the ith candidate individual at dimension j, which could be a windstorm, a thunderstorm, or a tornado, d denotes the number of design variables (i.e., the problem dimension), and the components \(y_w\), \(y_t\), and \(y_o\) stand for the populations of windstorms, thunderstorms, and tornadoes, which are defined in Eqs. 22, 23, and 24, respectively.

where \(y_{{w}_{i}}\) identifies the ith windstorm, \(y_{{t}_{i}}\) identifies the ith thunderstorm, and \(y_{{o}_i}\) represents the ith tornado.

As presented in Eq. 21, in a d dimensional optimization problem, windstorms, thunderstorms, and tornadoes can be combined together and described by a matrix of appropriate size.

4.2 Fitness evaluation

The fitness (cost) value of each windstorm and thunderstorm is computed by evaluating the cost function as shown below:

where \(fit_i\) denotes the cost value of the ith individual.

Each candidate position for a new windstorm, thunderstorm, or tornado is evaluated using a fitness criterion defined specifically for this purpose. If the newly generated position is better than the current one, the current position is replaced by it. Accordingly, substituting the candidate solutions into the decision variables yields a set of objective function values for the optimization problem of interest, which can be represented as given in Eq. 26.

where \(\vec {fit}\) is the vector of the acquired fitness function, and \(fit_i\) denotes the value of the acquired fitness function on the basis of the ith individual.

In meta-heuristic algorithms such as TOC, the value of the fitness function serves as a gauge of a candidate solution's quality. The population member that yields the best fitness value is referred to as the best member of the population. Because the candidate solutions are updated throughout the run, this member is updated in every iteration of the proposed optimizer. In the simulation of the proposed optimizer, individuals keep their current locations if these are better than the new locations.

4.3 Evolution of windstorms

Windstorms tend to move toward tornadoes and thunderstorms according to the volume and intensity of their evolution. Because the tornado is the strongest (best) individual, windstorms evolve into tornadoes more often than into thunderstorms.

4.3.1 Initialization of windstorms’ population

As described above, \(n_w\) windstorms are generated, such that this number of candidate individuals are selected from the entire population. Equation 27 shows \(y_{w}\) (i.e., population of windstorms) that evolve into tornadoes or thunderstorms. Indeed, Eq. 27 is part of Eq. 21 (i.e., all individuals in the population):

4.3.2 Formation of windstorms

Depending on the size and power of their evolution, windstorms are absorbed by the tornadoes and by each thunderstorm. One of the best ways to distribute windstorms among tornadoes and thunderstorms in a proportional manner is to use the cost (fitness) functions of the tornadoes and thunderstorms. Hence, the number of windstorms assigned to each thunderstorm or tornado varies. The windstorms designated for the tornadoes and for each thunderstorm are determined using the following mathematical formulas:

where \(k = 1, 2, 3, \ldots, n_{to}\), and \(f_k\) specifies the cost value of the kth thunderstorm associated with a tornado.

where \(\left\lfloor \cdot \right\rceil \) stands for the round operator, \(k = 1, 2, \ldots, n_{to}\), and \(n_{{\dot{w}}_k}\) is the number of windstorms that evolve into, or are assigned to, the kth thunderstorm or tornado.

In the implementation of the proposed optimizer, as can be seen in Eq. 28, the costs of the tornadoes and of each thunderstorm are offset by subtracting the cost of the \((n_{to} + 1)\)th individual, i.e., the first individual in the population of windstorms (see Eq. 27). Based on their strength and rate of growth, windstorms frequently develop into thunderstorms and tornadoes, with more windstorms evolving into tornadoes than into thunderstorms. This is why the cost (fitness) functions of tornadoes and thunderstorms are used to distribute windstorms among them proportionally.

With the use of Eqs. 28 and 29, the best solution (i.e., the tornado) is able to control and retain more windstorms. Note that windstorms are selected randomly from the population of windstorms. Each windstorm is controlled by exactly one of the best individuals (i.e., tornadoes or thunderstorms), so a windstorm cannot be assigned to more than one of them. However, in rare situations, because of rounding, the sum of \(n_{{\dot{w}}_k}\) in Eq. 29 may not equal \(n_{w}\). This case is handled in the TOC implementation: the number of windstorms assigned to a randomly chosen thunderstorm or tornado is decreased or increased by one (i.e., \(\pm 1\)) until the total number of windstorms assigned to thunderstorms and tornadoes equals \(n_{w}\) exactly.
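Equations 28 and 29 are not reproduced here, but the text pins down their ingredients: costs offset by the \((n_{to}+1)\)th individual, shares proportional to the offset costs, rounding, a \(\pm 1\) correction, and a random choice of windstorms. The sketch below assumes they take the proportional form used in the water cycle algorithm, \(n_{{\dot{w}}_k} = \left\lfloor \left| f_k / \sum_j f_j \right| n_w \right\rceil \); the function name, the equal-share fallback for a zero denominator, and the exact correction loop are assumptions.

```python
import numpy as np


def allocate_windstorms(sorted_cost: np.ndarray, n_to: int, rng: np.random.Generator):
    """Split the n_w windstorms among the n_to tornadoes/thunderstorms.

    sorted_cost: costs of the whole population in ascending order (minimization),
    so rows 0..n_to-1 are tornadoes/thunderstorms and the rest are windstorms.
    """
    n_w = sorted_cost.size - n_to
    # Offset each leader's cost by the cost of the (n_to + 1)th individual.
    f = sorted_cost[:n_to] - sorted_cost[n_to]
    total = f.sum()
    if total == 0.0:
        weights = np.full(n_to, 1.0 / n_to)  # degenerate case: equal shares
    else:
        weights = np.abs(f / total)
    # Round to nearest integer (stand-in for the round operator in Eq. 29).
    counts = np.rint(weights * n_w).astype(int)
    # Rounding may leave sum(counts) != n_w: adjust a random leader by +/-1.
    while counts.sum() != n_w:
        k = rng.integers(n_to)
        if counts.sum() < n_w:
            counts[k] += 1
        elif counts[k] > 0:
            counts[k] -= 1
    # Randomly decide which windstorms (indices 0..n_w-1 within y_w) go to whom.
    groups = np.split(rng.permutation(n_w), np.cumsum(counts)[:-1])
    return counts, groups


rng = np.random.default_rng(2)
cost = np.array([1.0, 2.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0])
counts, groups = allocate_windstorms(cost, n_to=3, rng=rng)
```

Because every offset cost is non-positive and the best leader has the most negative one, the tornado receives the largest share, which matches the behavior described above.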

The speed and direction of movement of windstorms may be affected by the Coriolis force, as shown below:

4.4 Windstorm velocity with the Coriolis force

For large-scale atmospheric disturbances in which windstorms do not move in a straight line, there is a three-way balance among the Coriolis force, the centrifugal force, and the pressure gradient force. Accordingly, the gradient windstorm speed that forms thunderstorms and tornadoes can be defined as shown in Eq. 30.

where \(i=1, 2, \ldots, n_w\) is the windstorm index for a population of size \(n_w\), \(\vec {v}_{i}^{t+1}\) denotes the new velocity vector of the ith windstorm, \(\vec {v}_{i}^{t}\) denotes the current velocity vector of the ith windstorm, rand refers to a uniformly distributed random number in the range [0, 1], \(\eta \) is a constriction factor introduced to simulate the convergence behavior of windstorms, as defined in Eq. 31, \(\mu \) implements the fuzzy adaptive kinetic energy of windstorms, as defined in Eq. 32, \(R_l\) is the radius of curvature of the trajectory of windstorms in the Northern Hemisphere, given by Eq. 33, \(R_r\) is the radius of curvature of the path of windstorms in the Southern Hemisphere, given by Eq. 34, c stands for a random number generated in different ranges, as defined in Eq. 35, and f, \(CF_l\), and \(CF_r\) are defined in Eqs. 38, 39, and 40, respectively.

In Eq. 30, \(rand \ge 0.5\) indicates that the windstorms move in the Northern Hemisphere, and \(rand < 0.5\) indicates that they move in the Southern Hemisphere. Thus, rand is used to simulate the motion of windstorms between the Northern and Southern Hemispheres.

where \(\chi \) denotes the acceleration rate of windstorms, which is set to 4.10; this value was obtained after careful investigation.

Equation 31 was introduced in the proposed optimizer as a constriction factor to improve its convergence behavior. With \(\chi = 4.10\), the constriction factor \(\eta \) in this equation takes the value 0.7298. Mathematically, the constriction factor is analogous to momentum energy, which can be important for providing windstorms with the power they need to reach their targets and form thunderstorms and tornadoes. This factor can be essential to the success of the proposed optimizer and to its promising performance.
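The value 0.7298 is exactly what the Clerc-Kennedy constriction coefficient from particle swarm optimization produces for \(\chi = 4.10\). Assuming Eq. 31 takes that form, \(\eta = 2 / |2 - \chi - \sqrt{\chi^2 - 4\chi}|\), the stated value can be checked as follows (the function name is illustrative):

```python
import math


def constriction_factor(chi: float) -> float:
    """Clerc-Kennedy constriction coefficient, defined for chi >= 4."""
    if chi < 4.0:
        raise ValueError("chi must be at least 4")
    return 2.0 / abs(2.0 - chi - math.sqrt(chi * chi - 4.0 * chi))


print(round(constriction_factor(4.10), 4))  # 0.7298
```

Larger values of \(\chi \) give smaller \(\eta \), i.e., stronger damping of the windstorm velocities.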

Besides the constriction factor \(\eta \) in Eq. 31, a fuzzy adaptive \(\mu \) is applied in the proposed optimizer in the randomized form defined in Eq. 32.

where rand denotes a generated random number with uniform distribution in the scope [0, 1].

Equation 32 provides a fuzzy adaptive random number for dynamic system optimization; this random \(\mu \) has an expected value of 0.75.
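An expected value of 0.75 is what the random inertia weight \(\mu = 0.5 + rand/2\) known from particle swarm optimization yields, since rand has mean 0.5. Assuming Eq. 32 has this form, a sampling sketch is (the function name is hypothetical):

```python
import numpy as np


def fuzzy_adaptive_mu(rng: np.random.Generator, size=None):
    """Random inertia-style weight mu = 0.5 + rand / 2, uniform on [0.5, 1.0)."""
    return 0.5 + rng.random(size) / 2.0


rng = np.random.default_rng(3)
samples = fuzzy_adaptive_mu(rng, 100_000)
print(samples.mean())  # close to the expectation 0.75
```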

where t and T stand for the current and maximum number of iteration indices, respectively.

In fact, windstorms can rotate either clockwise or counterclockwise. Most (but not all) tornadoes in the Northern Hemisphere rotate counterclockwise because they develop from large, rotating supercell thunderstorms.

where \(b_r\) is a constant equal to 100000, \(\delta _1\) stands for a change in the sign presented as shown in Eq. 36, and \(w_r\) identifies a random value generated with different ranges defined as given by Eq. 37.

where \(f_d\) is a function that takes the value 1 or -1 to represent the change in sign.

where rand stands for generated random values in the range ]0, 1], and \(w_{min}\) and \(w_{max}\) are fixed values equal to 1.0 and 4.0, respectively.

where \(\varOmega \) stands for the angular rotation rate of the Earth, which is equal to \(7.292115 \times 10^{-5}\) rad \(\hbox {s}^{-1}\) (Vallis 2017), rand stands for a random number generated with uniform distribution in the range [0, 1], and \(-1 + 2\cdot rand\) specifies a random value for the latitude.

where \(\phi _{i}^{t}\) is the component of the pressure gradient force (PGF) normal to the direction of the current ith windstorm at iteration t, as given in Eq. 41.

where \(y_{{o}_\zeta }^t\) is the current position vector of the tornado at a random index \(\zeta \) at the tth iteration, \(y_{{w}_{i}}^{t}\) identifies a position vector of a windstorm at the tth iteration, and \(\zeta \) is a random index of a tornado defined as shown in Eq. 42.

where \(rand(1, n_o)\) denotes a vector of \(n_o\) random values uniformly distributed in the interval \(\left[ 0, 1\right] \).
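Returning to the Coriolis parameter f of Eq. 38: reading it as \(f = 2\varOmega \sin \phi \) evaluated at the random latitude \(\phi = -1 + 2\cdot rand\) described above is an assumption (the latitude is then in radians, roughly between \(-57^\circ \) and \(57^\circ \)). Under that reading, one draw of f can be sketched as follows (names are illustrative):

```python
import numpy as np

OMEGA = 7.292115e-5  # Earth's angular rotation rate, rad/s


def random_coriolis_parameter(rng: np.random.Generator) -> float:
    """f = 2 * Omega * sin(phi) with a random latitude phi = -1 + 2 * rand (radians)."""
    phi = -1.0 + 2.0 * rng.random()
    return 2.0 * OMEGA * np.sin(phi)


rng = np.random.default_rng(4)
draws = np.array([random_coriolis_parameter(rng) for _ in range(10_000)])
```

Negative draws correspond to the Southern Hemisphere and positive draws to the Northern Hemisphere, and \(|f|\) never exceeds \(2\varOmega \sin 1\).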

Equation 30 is subject to the constraints given in Eqs. 43, 44, and 45.

where rand stands for a random number generated with uniform distribution in the range [0, 1].

4.4.1 Evolution of windstorms to tornadoes

The TOC optimizer simulates the evolution of windstorms into tornadoes. One or more tornadoes are formed when windstorms or thunderstorms evolve into them. This evolution process can be simulated mathematically as shown in Eq. 46.

where \(\vec {y}_{{w}_{i}}^{t+1}\) and \(\vec {y}_{{w}_{i}}^{t}\) define the next and current position vectors of the ith windstorm at iterations \((t+1)\) and t, respectively, \(\vec {y}_{{o}_i}^t\) defines the current position vector of the ith tornado at iteration t, \((\vec {y}_{{o}_i}^t - rand_w)\) denotes the difference between the evolution of windstorms into tornadoes and the random formation of windstorms, the components \(rand_w\) and \(\alpha \) stand for random values that can be defined as presented in Eqs. 47 and 48, respectively.

where \(rand_w\) is an index vector for randomly selected windstorms.

where rand denotes a random value created with a uniform distribution in the range [0, 1], and \(a_y\) represents an exponential parameter defined as shown in Eq. 49.

where \(a_0\) is a constant set to 2.0, a value found after extensive analysis.

Equation 46 can essentially be thought of as an update formula for new positions of windstorms that evolve into tornadoes.

4.4.2 Evolution of windstorms to thunderstorms

As noted above, there are n individuals, of which \(n_{t}\) are selected as thunderstorms and \(n_{o}\) as tornadoes. In this work, we assume that there is only one tornado. A schematic view of a windstorm evolving into a particular thunderstorm along their connecting line is shown in Fig. 4.

The distance \(\gamma \) that a windstorm moves toward a thunderstorm is chosen randomly as given in Eq. 50.

where x is the current distance between the windstorm and the thunderstorm, \(0.5< \rho < 2\), with values of \(\rho \) close to 2 possibly being the best choice, and \(\gamma \) is a random number between 0 and \(\rho \times x\), drawn from a uniform or another plausible distribution.

Setting \(\rho > 0.5\) allows windstorms to approach thunderstorms from several directions. The same idea can be used to explain how thunderstorms evolve into tornadoes. In essence, to carry out the exploration and exploitation phases in TOC, the evolution of windstorms into thunderstorms may be simulated as follows:

where \(\vec {y}_{{w}_{j+\sum _{1}^{n_{{\dot{w}}_k}}}}^{t+1}\) and \(\vec {y}_{{w}_{j+\sum _{1}^{n_{{\dot{w}}_k}}}}^{t}\) represent the next and current position vectors of windstorms developing into thunderstorms at iterations \((t+1)\) and t, respectively, \(\vec {y}_{{t}_i}^t\) represents the current position vector of the ith thunderstorm at iteration t, and rand stands for a random number produced between 0 and 1 with uniform distribution.

Equation 51 thus gives the new positions of windstorms that evolve into thunderstorms.
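Equation 50 amounts to drawing a step length \(\gamma \) uniformly from \([0, \rho x]\) and moving along the connecting line of Fig. 4. The sketch below makes that explicit. Moving strictly along the line (rather than using an independent random number per dimension, which would let windstorms approach from several directions) and the function name are simplifying assumptions, so it should not be read as Eq. 51 itself.

```python
import numpy as np


def step_toward(y_w: np.ndarray, y_t: np.ndarray, rho: float,
                rng: np.random.Generator) -> np.ndarray:
    """Move y_w a random distance gamma ~ U(0, rho * x) along the line to y_t."""
    direction = y_t - y_w
    x = np.linalg.norm(direction)          # current separation
    if x == 0.0:
        return y_w.copy()
    gamma = rng.uniform(0.0, rho * x)      # Eq. 50 read as a uniform draw
    return y_w + gamma * direction / x     # rho > 1 allows overshooting y_t


rng = np.random.default_rng(5)
y_w = np.array([0.0, 0.0])
y_t = np.array([3.0, 4.0])
y_new = step_toward(y_w, y_t, rho=2.0, rng=rng)
```

With \(\rho > 1\), the windstorm can land beyond the thunderstorm, which lets it probe the region on the far side of the current leader.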

4.5 Evolution of thunderstorms to tornadoes

In the exploration and exploitation phases of the proposed optimizer, the new position of thunderstorms during evolving into tornadoes can be simulated in the manner defined below:

where \(\vec {y}_{{t}_{i}}^{t+1}\) and \(\vec {y}_{{t}_{i}}^{t}\) represent the next and current position vectors of thunderstorms developing into tornadoes at iterations \((t+1)\) and t, respectively, \(y_{{o}_\zeta }^t\) identifies a position vector for a tornado at a random index \(\zeta \), and \(\vec {y}_{{t}_{\vec {p}}}^{t}\) identifies a position vector for a thunderstorm at a random index vector \(\vec {p}\) which is the index vector for randomly selected thunderstorms identified as shown in Eq. 53.

where rand is a uniformly distributed random number in the range of [0, 1].

Equation 52 is the position-update model for thunderstorms that evolve into tornadoes. Notation marked with a vector sign denotes vector quantities; all other notation and parameters are scalars.

The positions of a thunderstorm and a windstorm are exchanged if the windstorm's solution is better than that of its connected thunderstorm (i.e., the windstorm becomes a thunderstorm, and the thunderstorm becomes a windstorm). As a result, the former windstorm, being the better of the two in terms of the cost function value, serves as the new thunderstorm and takes charge of all the windstorms previously assigned to the old thunderstorm. The exchange between a thunderstorm and a tornado, as well as between windstorms and tornadoes, is performed similarly. In this scenario, the evolving thunderstorm behaves as a new tornado, and the outgoing tornado becomes a new thunderstorm with its own windstorms, which are pushed directly toward it. Therefore, the windstorms connected to the prior thunderstorm, which is now the new tornado, behave as windstorms developing directly into the new tornado. The interchange of windstorms and thunderstorms in the population of the proposed optimizer is illustrated in Fig. 5.
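The exchange rule can be sketched in a few lines, assuming minimization and that each row's role (tornado, thunderstorm, or windstorm) is determined by its index in the population array, so that swapping two rows swaps their roles. The function name is hypothetical.

```python
import numpy as np


def exchange_if_better(pop: np.ndarray, cost: np.ndarray, i_w: int, i_lead: int) -> bool:
    """Swap windstorm i_w with its leader i_lead (thunderstorm or tornado) if it is better.

    Roles are tied to row indices, so swapping rows swaps roles; the windstorms
    that followed the old leader now follow the row that holds the new leader.
    """
    if cost[i_w] < cost[i_lead]:
        pop[[i_w, i_lead]] = pop[[i_lead, i_w]]    # fancy indexing copies the RHS first
        cost[[i_w, i_lead]] = cost[[i_lead, i_w]]
        return True
    return False


pop = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]])
cost = np.array([0.0, 5.0, 2.0])  # row 0: tornado, row 1: thunderstorm, row 2: windstorm
swapped = exchange_if_better(pop, cost, i_w=2, i_lead=1)
```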

Figure 6 (which also incorporates the concept of Fig. 4) shows how the optimization process of the proposed optimizer evolves, where balls, asterisks, and the bullet represent windstorms, thunderstorms, and tornadoes, respectively. The white (empty) shapes show the positions to which the windstorms and thunderstorms have moved.

4.6 Random formation of windstorms

The stochastic formation of windstorms is defined in the proposed TOC optimizer to enhance its exploration capability. More precisely, the random formation of windstorms enables TOC to avoid falling into local solutions and premature convergence. Basically, windstorms form at random locations when they evolve into thunderstorms or tornadoes, resulting in mature tornadoes at different positions. This process is applied to both windstorms and thunderstorms, which must be checked to see whether they are close enough to a tornado for the process to occur. For this purpose, the following mathematical formula can be utilized to accomplish the random formation of windstorms into tornadoes:

where l and u refer to the lower and upper limits of the search space, respectively, rand is a random number uniformly distributed in the range [0, 1], \(\delta _2\) stands for a change in sign as given in Eq. 55, \(\left\| \cdot \right\| \) refers to a norm operator, and \(\nu \) is an exponential function, defined as shown in Eq. 56, that is capable of generating small numbers.

where \(f_d\) is a function that takes the value 1 or -1 to represent the change in sign.

where t represents the current iteration index and T represents the maximum iteration index.

Similarly, the following mathematical formula can be utilized to accomplish the random formation of windstorms into thunderstorms:

Accordingly, Eqs. 54 and 57 were introduced in this work to specify the locations of newly formed windstorms. As presented in Eq. 56, \(\nu \) regulates the search intensity close to the tornado. In view of this, large values of \(\nu \) may discourage further searches, whereas small values may promote search activity in the immediate vicinity of the tornado.

From a mathematical perspective, the parameter \(a_y\) creates an adaptive function over the iterations of TOC. Using this adaptive function, windstorms that satisfy the condition on \(a_y\) used in Eqs. 54 and 57 are scattered around the tornado. With this method, TOC may perform a better search around the tornado during its exploitation phase.

Additionally, as observed in nature, certain thunderstorms form slowly because only a few windstorms give rise to them. As a result, they cannot move closer to a tornado and may eventually shrink after making certain motions. To reflect this idea, the TOC optimizer adopts the parameter \(a_y\) to boost the random evolution of windstorms. Then, using Eqs. 54 and 57, new windstorms are produced at new locations, equal in number to the prior windstorms and thunderstorms. As can be seen from Eq. 52, thunderstorms are not regarded as fixed points in the proposed TOC and must evolve toward tornadoes (i.e., the optimal solution). This process (windstorms developing into thunderstorms and then thunderstorms into tornadoes) promotes indirect movement toward the best solution. In short, as the iterations of the TOC optimizer proceed, the likelihood that windstorms are randomly regenerated decreases.
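Equations 54 to 57 are not reproduced in this section, so the following is only a generic sketch of proximity-triggered random re-formation in the spirit of the evaporation-and-raining step of the water cycle algorithm: an individual lying within a shrinking distance threshold of the tornado is re-seeded uniformly in \([l, u]\). The exponential schedule, its constants, and the function names are hypothetical stand-ins for \(\nu \) and \(a_y\); they are not TOC's actual formulas.

```python
import numpy as np


def decaying_threshold(t: int, T: int, start: float = 1e-2, rate: float = 5.0) -> float:
    """Hypothetical stand-in for nu: a distance threshold that shrinks with t / T."""
    return start * np.exp(-rate * t / T)


def reform_if_close(y, y_o, lower, upper, threshold, rng):
    """Re-seed y uniformly in [lower, upper] if it lies within `threshold` of y_o.

    Returns the (possibly new) position and a flag telling whether it was re-seeded.
    """
    if np.linalg.norm(y_o - y) < threshold:
        return lower + rng.random(y.shape) * (upper - lower), True
    return y, False


rng = np.random.default_rng(6)
lower, upper = np.full(2, -5.0), np.full(2, 5.0)
y_o = np.zeros(2)
near, far = np.array([1e-4, 0.0]), np.array([3.0, 3.0])
thr = decaying_threshold(t=10, T=100)
```

Because the threshold shrinks as t approaches T, fewer individuals qualify for re-seeding late in the run, mirroring the decreasing likelihood of random regeneration described above.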

4.7 Complexity analysis

The computational complexity of an optimization algorithm may be quantified by a function that relates the dimension and input size of a given problem to the execution time of the algorithm. This is the approach used here to study the complexity of the proposed optimizer. The time and space complexities of the developed optimizer are described below using standard Big-O notation.

4.7.1 Time complexity

Time complexity of the proposed optimizer can be generally represented using Big-O notation as follows.

The computational complexity of the proposed TOC optimizer depends on the time complexities of several components related to the problem at hand and to the proposed method, as shown in Eq. 58. Each of these components has its own time complexity, as described below:

1. Defining the problem takes \({\mathcal {O}} (1)\) time.
2. Population initialization takes \({\mathcal {O}} (v \times n \times d)\) time.
3. Cost function evaluation takes \({\mathcal {O}}(v \times K \times c \times n)\) time, where c represents the cost of the criterion assessment.
4. Updating the solutions and evaluating them takes \({\mathcal {O}}(v \times K \times n \times d)\) time.

The time complexity of the optimization process depends on a number of variables, including v, n, d, T, and c, which stand for the total number of evaluations, the number of individuals, the dimension of the optimization problem, the number of iterations, and the cost of the fitness criterion, respectively. This is explained above in connection with Eq. 58. Considering the points above and Eq. 58, the total time complexity of TOC can be broken down into distinct components as shown in Eq. 59.

As \(1 \ll Kcn\), \(1 \ll 5Knd\), \(nd \ll Kcn\), \(nd \ll 5Knd\), and \(Knd < 5Knd\), Eq. 59 can be simplified to Eq. 60:

As it turns out, the time complexity of TOC in Big-O notation is polynomial. In this sense, the proposed TOC optimizer can be regarded as a computationally efficient optimization technique. The number of decision variables in the problem (d), the cost of the problem's objective criterion (c), the number of individuals (n), and the total number of iterations (T) are the main factors that determine the computational complexity of TOC when addressing an optimization problem.

4.7.2 Space complexity

The space complexity of TOC, that is, the amount of memory it requires, is influenced by the number of windstorms, thunderstorms, and tornadoes and by the size of the problem of interest. It indicates how much memory TOC occupies once the optimization process starts. Accordingly, the space complexity of TOC can be expressed as in Eq. 61.
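As a rough illustration of how memory scales with the population and the problem size, the sketch below counts the floating-point values needed to hold one population snapshot. It assumes, hypothetically, that every windstorm, thunderstorm, and tornado stores one d-dimensional position and one fitness value; the agent counts used are also hypothetical, and the actual bookkeeping of TOC may differ.

```python
def toc_memory_floats(n_windstorms, n_thunderstorms, n_tornadoes, d):
    """Count floats for one population snapshot (illustrative assumption).

    Each agent is assumed to store a d-dimensional position and one fitness value.
    """
    n = n_windstorms + n_thunderstorms + n_tornadoes
    positions = n * d  # one row of d coordinates per agent
    fitness = n        # one fitness value per agent
    return positions + fitness

floats = toc_memory_floats(n_windstorms=25, n_thunderstorms=4, n_tornadoes=1, d=50)
print(floats, floats * 8, "bytes as float64")
```

Under this assumption the memory grows linearly in both the population size and the dimension, i.e. on the order of \(n \times d\).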

4.8 Implementation steps of the proposed optimizer

The general picture of the evolution of tornadoes and of the stochastic formation of windstorms and thunderstorms led us to develop the mathematical models of the proposed TOC optimizer and its optimization procedure. When solving an optimization problem, TOC seeks to move towards the global optimal solution, because windstorms, thunderstorms, and tornadoes are likely to spiral around the surrounding region of the search space in search of better solutions. This capability depends on the locations of the best windstorms, thunderstorms, and tornadoes found so far. Consequently, windstorms and thunderstorms can keep circling promising regions of the search space as they evolve into tornadoes. Algorithm 1 summarizes the key procedural steps of the proposed optimizer in pseudo code.

Following the stages of the pseudo code in Algorithm 1, TOC starts the optimization process by randomly generating the locations of the population of windstorms, thunderstorms, and tornadoes in the search space. To update the positions of these individuals during each function evaluation, TOC uses Eqs. 30, 46, 51, 52, 54, and 57. Any entity (windstorm, thunderstorm, or tornado) that leaves the search space is brought back inside it. In each function evaluation, the solutions are evaluated using a predetermined fitness criterion, and the individuals with the best fitness values are identified and recorded. The best position found is reported as the solution. In each function evaluation, all algorithmic steps except initialization are repeated until the predetermined total number of function evaluations is reached. According to the design described above, the models of this optimizer allow the search agents to keep spiraling across all regions of the search space.
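The control flow described above (random initialization, position updates, bringing escaped agents back into the bounds, evaluation, tracking the best solution, and stopping at a function-evaluation budget) can be sketched as a generic population-based loop. This is not the TOC update itself: `placeholder_update` is a hypothetical stand-in for Eqs. 30, 46, 51, 52, 54, and 57, the per-agent greedy replacement is our assumption, and the sphere function is only a test objective.

```python
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def clip(x, lb, ub):
    # Bring an agent that left the search space back inside the bounds.
    return [min(max(xi, lb), ub) for xi in x]

def placeholder_update(x, best, lb, ub, rng):
    # HYPOTHETICAL stand-in for the TOC update rules (Eqs. 30, 46, 51, 52, 54, 57):
    # move a random fraction toward the best agent, then add small Gaussian noise.
    noise_sd = 0.01 * (ub - lb)
    return [xi + rng.random() * (bi - xi) + rng.gauss(0.0, noise_sd)
            for xi, bi in zip(x, best)]

def run(fitness, d, lb, ub, n=30, max_fes=3000, seed=0):
    rng = random.Random(seed)
    # Initialization: random positions for all agents (done once).
    pop = [[rng.uniform(lb, ub) for _ in range(d)] for _ in range(n)]
    fit = [fitness(x) for x in pop]
    fes = n
    best_i = min(range(n), key=fit.__getitem__)
    best, best_fit = pop[best_i][:], fit[best_i]
    # Repeat every step except initialization until the FE budget is used up.
    while fes < max_fes:
        for i in range(n):
            if fes >= max_fes:
                break
            cand = clip(placeholder_update(pop[i], best, lb, ub, rng), lb, ub)
            f = fitness(cand)
            fes += 1
            if f < fit[i]:      # keep the better position for this agent (assumption)
                pop[i], fit[i] = cand, f
            if f < best_fit:    # record the best solution found so far
                best, best_fit = cand[:], f
    return best, best_fit, fes

best, best_fit, fes = run(sphere, d=5, lb=-10.0, ub=10.0)
print(best_fit, fes)
```

Swapping `placeholder_update` for the actual TOC equations would leave the surrounding loop, boundary handling, and budget check unchanged.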

4.9 Characteristics of TOC

As a nature-inspired meta-heuristic, TOC has two capabilities when optimizing a particular problem inside the search space: exploration and exploitation. These capabilities drive the convergence of TOC towards the global optimal solution. Specifically, convergence occurs when most thunderstorms and windstorms congregate in the same area of the search space. TOC uses several crucial parameters that promote exploration and exploitation, including \(\mu \), \(\alpha \), \(a_y\), and \(\nu \), which are defined in Eqs. 32, 48, 49, and 56, respectively. Through these parameters, TOC can explore the search space more effectively and locate optimal or near-optimal solutions, so these control parameters help TOC achieve good convergence. As windstorms and thunderstorms develop into tornadoes, these search agents (windstorms, thunderstorms, and tornadoes) update their positions according to the mathematical models and tuning criteria of TOC, given in Eqs. 30, 46, 51, 52, 54, and 57. In each of these models, windstorms and thunderstorms are assumed to evolve efficiently into tornadoes within the search space. In addition, the random evolution of windstorms and thunderstorms produces random motions that move them to random locations. Thus, tornadoes, thunderstorms, and windstorms explore the search space in various directions and places, so that additional promising areas, which may hold better solutions, are visited.
In summary, TOC offers several characteristics that follow from its fundamental idea: (1) the position update models of TOC help the search agents explore and exploit each region of the search space efficiently; (2) the random motions of windstorms and their random formation into tornadoes using Eqs. 46, 54, and 57 boost the population diversity of TOC and help maintain a reasonable convergence rate, giving an effective trade-off between exploration and exploitation; (3) the random movements of windstorms and their evolution into thunderstorms using Eq. 51, and the random motions of thunderstorms and their evolution into tornadoes using Eq. 52, increase population diversity, support sensible convergence, and provide an efficient balance between exploration and exploitation; and (4) TOC has a reasonably small number of parameters, and its operators are promising for achieving a high level of performance.

5 Comparative analysis of TOC with other optimizers

This section compares the TOC method with various well-established meta-heuristic algorithms, such as particle swarm optimization (PSO), genetic algorithms (GAs), differential evolution (DE), and ant colony optimization (ACO) algorithm.

5.1 Particle swarm optimization

PSO imitates the collective, cooperative social behavior of living organisms such as flocks of birds, schools of fish, and many other species (Kennedy and Eberhart 1995). In PSO, optimization starts from artificial particles, that is, randomly generated solutions, and the velocity of every particle in the swarm is also initialized at random. Following Yan et al. (2017), if \(x_i\) is the current position of particle i and \(v_i\) its velocity, the position update can be expressed as in Eq. 62.

where w is the inertia weight, \(c_1\) and \(c_2\) are the cognitive and social parameters, respectively, \(r_{1}\) and \(r_{2}\) are random values uniformly distributed in the interval [0, 1], \(Pbest_i\) is the personal best solution found by particle i, and Gbest is the global best solution found by all particles in the swarm.
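For reference, the widely used inertia-weight form of the PSO update (velocity first, then position) can be sketched as below; we assume Eq. 62 follows this standard form. Drawing \(r_1\) and \(r_2\) afresh for every dimension is one common convention, and the defaults w = 0.7 and \(c_1 = c_2 = 1.5\) are illustrative values, not taken from the text.

```python
import random

def pso_step(x, v, pbest, gbest, rng, w=0.7, c1=1.5, c2=1.5):
    """One inertia-weight PSO update for a single particle.

    v_new = w*v + c1*r1*(pbest - x) + c2*r2*(gbest - x);  x_new = x + v_new
    """
    new_x, new_v = [], []
    for xj, vj, pj, gj in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()  # fresh U[0, 1) draws per dimension
        vj = w * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        new_v.append(vj)
        new_x.append(xj + vj)
    return new_x, new_v

rng = random.Random(1)
x_new, v_new = pso_step([3.0, -4.0], [0.0, 0.0], [1.0, -1.0], [0.0, 0.0], rng)
print(x_new, v_new)
```

A particle sitting at both its personal best and the global best with zero velocity stays put, which is a quick sanity check on the update.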

5.1.1 TOC versus PSO

Like PSO, TOC starts the optimization process by moving search agents around the search space in pursuit of their objective. However, the mathematical models of TOC and PSO use completely different update mechanisms. Some of the primary differences between these two optimizers are as follows:

1. In PSO, the position update of the ith particle is driven by \(Pbest_i\) and Gbest, as shown in Eq. 62; the influence of these two terms determines the particle's new position in the search space.
2. In TOC, four different design models are used to determine the new positions of the search agents (windstorms, thunderstorms, and tornadoes); these models are given in Eqs. 30, 46, 51, 52, 54, and 57. They describe windstorms turning into tornadoes and thunderstorms, thunderstorms turning into tornadoes, and windstorms forming at random. PSO, in contrast, uses a single strategy, shown in Eq. 63, to update the positions of all search agents in the search space.
3. The performance of PSO depends strongly on the initial values of the cognitive and social parameters and on the weighting strategy applied to the velocity vector when a particle moves to a new position. In TOC, by contrast, tornadoes, windstorms, and thunderstorms use different parameters and search strategies during the iterative loop to determine their new positions.
4. The behavior of tornadoes is influenced by the formation of thunderstorms and windstorms, which is modeled with several distinct strategies, and the speed of this formation is affected by the Coriolis force. Eq. 30 determines the speed of thunderstorms and tornadoes, which rotate clockwise or counterclockwise. With this velocity, thunderstorms move and evolve abruptly and are redirected to form tornadoes, which reinforces the exploration and exploitation of TOC. PSO does not model this kind of behavior.
5. The simulation of tornado behavior in Eqs. 54 and 57 introduces random tornado movements, which helps TOC avoid becoming trapped in local optima. PSO lacks this feature because of the nature of the swarm behavior it simulates.
6. Eq. 51, which models the evolution of windstorms into thunderstorms, enhances the exploration and exploitation of TOC; PSO has no such feature.
7. The parameters \(\mu \), f, \(CF_l\), and \(CF_r\), defined in Eqs. 32, 38, 39, and 40, respectively, allow TOC to explore the search space globally at some times and to search locally at others, achieving a suitable balance between exploration and exploitation. PSO has no such parameters.

5.2 Conventional differential evolution algorithm

The differential evolution (DE) method is a well-established population-based evolutionary technique developed for real-valued optimization problems (Storn and Price 1997). Like GAs, DE relies on evolutionary operators, namely crossover, mutation, and selection. The initialization of each individual in DE can be defined as shown in Eq. 64.

where \(X_i\) denotes the ith individual, with \(i \in \left\{ {1, 2, \ldots, NP}\right\} \); NP is the population size; \(d \in \left\{ {1, 2, \ldots, D}\right\} \) indexes the dimensions of the problem; and u and l are the upper and lower limits of \(X_i\) in the dth dimension, respectively.
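A minimal sketch of this initialization, assuming Eq. 64 has the standard DE form \(X_{i,d} = l_d + \text{rand}(0,1)\,(u_d - l_d)\) with a fresh uniform draw per component:

```python
import random

def de_init(NP, lower, upper, rng):
    """Uniform random initialization: X[i][d] = l_d + rand(0, 1) * (u_d - l_d)."""
    return [[l + rng.random() * (u - l) for l, u in zip(lower, upper)]
            for _ in range(NP)]

pop = de_init(NP=10, lower=[-5.0, 0.0], upper=[5.0, 1.0], rng=random.Random(42))
print(pop[0])
```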

The mutation operator of DE produces a mutant vector \(V_i\), which serves as an intermediate variable in the evolution, according to Eq. 65.

where \(r_1, r_2\), and \(r_3 \in \left\{ {1, 2, \dots, NP}\right\} \) are randomly chosen indexes that are mutually distinct and different from i, and F is a constant scaling factor that controls the amplification of the difference vector.
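Assuming Eq. 65 is the classic DE/rand/1 mutation \(V_i = X_{r_1} + F\,(X_{r_2} - X_{r_3})\), it can be sketched as follows; sampling the three indexes without replacement from all indexes except i enforces the distinctness condition, and the population must therefore hold at least four individuals.

```python
import random

def de_rand_1(pop, i, F, rng):
    """DE/rand/1 mutant: V_i = X_r1 + F * (X_r2 - X_r3), with i, r1, r2, r3 distinct."""
    candidates = [k for k in range(len(pop)) if k != i]
    r1, r2, r3 = rng.sample(candidates, 3)  # distinct and different from i
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]

pop = [[float(k), -float(k)] for k in range(6)]
mutant = de_rand_1(pop, i=0, F=0.5, rng=random.Random(3))
print(mutant)
```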

The crossover operator of DE, which combines the target solution \(X_i\) with the intermediate variable \(V_i\) to increase the diversity of the new solution \(U_i\), can be described as in Eq. 66.

where \(d_{rand} \in \left\{ {1, 2, \ldots, D}\right\} \) is a randomly chosen dimension index, and CR is the crossover control parameter (crossover rate).
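Assuming Eq. 66 is the usual binomial crossover, each component of \(U_i\) is taken from \(V_i\) when a uniform draw is at most CR or when the index equals \(d_{rand}\), and from \(X_i\) otherwise; the \(d_{rand}\) rule guarantees that at least one component comes from the mutant. Indexes here are 0-based, as is idiomatic in Python.

```python
import random

def binomial_crossover(x, v, CR, rng):
    """Trial vector: U[d] = V[d] if rand <= CR or d == d_rand, else X[d]."""
    D = len(x)
    d_rand = rng.randrange(D)  # at least one component is inherited from the mutant
    return [v[d] if (rng.random() <= CR or d == d_rand) else x[d] for d in range(D)]

trial = binomial_crossover([0.0] * 5, [1.0] * 5, CR=0.5, rng=random.Random(7))
print(trial)
```

With CR = 1 the trial vector equals the mutant; with CR = 0 only the \(d_{rand}\) component is taken from it.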

The selection mechanism of DE is applied at each iteration by comparing \(U_i\) with \(X_i\) using a greedy criterion, and the better of the two is kept in the population for the next iteration. Through these evolutionary operators, DE can converge quickly towards the global optimum solution.
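The greedy selection step can be sketched as below, assuming a minimization problem and letting the trial vector win ties (a common convention, not stated in the text):

```python
def greedy_selection(pop, fit, trials, trial_fit):
    """Keep U_i if it is at least as good as X_i (minimization), otherwise keep X_i."""
    new_pop, new_fit = [], []
    for x, fx, u, fu in zip(pop, fit, trials, trial_fit):
        if fu <= fx:
            new_pop.append(u)
            new_fit.append(fu)
        else:
            new_pop.append(x)
            new_fit.append(fx)
    return new_pop, new_fit

new_pop, new_fit = greedy_selection([[0.0], [1.0]], [5.0, 1.0], [[2.0], [3.0]], [4.0, 9.0])
print(new_pop, new_fit)
```

Because a trial vector only replaces its target when it is no worse, the best fitness in the population never deteriorates from one generation to the next.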

5.2.1 TOC versus DE

Since TOC is a physics-based algorithm, it does not need evolutionary operators such as crossover, mutation, and selection. The main differences between DE and TOC can be summarized as follows:

1. DE discards the knowledge of earlier generations when a new population is created, whereas TOC retains search-space information over successive iterations.
2. TOC requires fewer operators to run and tune than DE, which employs several procedures including crossover and selection. In addition, TOC uses a fuzzy adaptive parameter \(\mu \), whereas DE has no such parameter to help locate the optimal solutions.
3. In TOC, exploration is improved by allowing windstorms and thunderstorms to evolve into tornadoes that randomly explore the search space, whereas in DE, exploration is improved through the crossover and selection procedures.
4. In DE, mutation is typically intended to improve exploitation. In TOC, by contrast, better exploitation is achieved by the evolution of windstorms into thunderstorms driven by random values.

5.3 Genetic algorithm

GA was first proposed by Holland (Holland et al. 1992). It is regarded as a global optimization technique inspired by biological processes such as genetics and evolution. In GAs, every candidate solution is encoded as a chromosome (i.e., an individual) drawn from the search space. The optimization process of a GA starts with a population of randomly generated individuals. In every generation, the fitness score of each individual is re-calculated, and the solutions' variables are modified according to their fitness scores. Because the best individuals are given a greater probability of contributing to the improvement of other solutions, the random initial solutions are likely to improve. A fitness function is used to select chromosomes for the following generation, and genetic operators, including crossover and mutation, are then applied to the selected chromosomes to create new ones. In principle, the chromosomes keep evolving and producing better individuals until global optimal solutions are reached (Song et al. 2019).
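The generational loop described above can be sketched as a minimal real-coded GA. The specific operators are our own illustrative choices, since the text does not fix them: tournament selection, uniform crossover, Gaussian mutation clipped to the bounds, and elitism (copying the best chromosome unchanged). The sphere function is used only as a test objective, and all parameter values are hypothetical.

```python
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def tournament(pop, fit, rng, k=3):
    # Pick k random individuals and return the fittest (lowest cost) one.
    idx = rng.sample(range(len(pop)), k)
    return pop[min(idx, key=fit.__getitem__)]

def crossover(a, b, rng):
    # Uniform crossover: each gene comes from either parent with probability 0.5.
    return [ai if rng.random() < 0.5 else bi for ai, bi in zip(a, b)]

def mutate(x, lb, ub, rng, rate=0.1, sd=0.5):
    # Gaussian mutation on roughly `rate` of the genes, clipped to the bounds.
    return [min(max(xi + rng.gauss(0.0, sd), lb), ub) if rng.random() < rate else xi
            for xi in x]

def ga(fitness, d, lb, ub, pop_size=40, generations=200, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(d)] for _ in range(pop_size)]
    fit = [fitness(x) for x in pop]
    for _ in range(generations):
        elite = pop[min(range(pop_size), key=fit.__getitem__)]
        children = [elite[:]]  # elitism: carry the best chromosome over unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, fit, rng), tournament(pop, fit, rng)
            children.append(mutate(crossover(p1, p2, rng), lb, ub, rng))
        pop = children
        fit = [fitness(x) for x in pop]  # re-evaluate fitness every generation
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]

best, best_fit = ga(sphere, d=5, lb=-5.0, ub=5.0)
print(best_fit)
```

Fitter chromosomes win tournaments more often, so they contribute more genes to the next generation, which mirrors the selection pressure described above.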

5.3.1 TOC versus GAs

Although both GAs and TOC are population-based algorithms, GA is an evolutionary-based algorithm while TOC is a physics-based algorithm. The following is a quick summary of the main distinctions between them:

-

1.