Abstract

Several explainable AI methods are available, but a systematic comparison of such methods is still lacking. This paper contributes in this direction by providing a framework for comparing alternative explanations in terms of complexity and robustness. We exemplify our proposal on a real case study in the cybersecurity domain, namely, phishing website detection. In this domain, explainability is a compelling issue because of its potential benefits for the detection of fraudulent attacks and for the design of efficient security defense mechanisms. For this purpose, we apply our methodology to the machine learning models obtained by analyzing a publicly available dataset containing features extracted from malicious and legitimate web pages. The experiments show that our methodology is quite effective in selecting the explainability method that is, at the same time, the least complex and the most robust.

1 Introduction

Machine Learning (ML) methods are boosting the applications of Artificial Intelligence (AI) in all human activities. Despite their high predictive performance, most ML models lack an intuitive interpretation. In fact, their complex nonlinear structure makes the underlying rationale of their predictions difficult to explain. This is why authorities and regulators have begun to monitor the risks arising from the adoption of (unexplainable) AI methods (Bracke et al. 2019; Babaei, Giudici and Raffinetti 2025). In particular, the European Commission has introduced the AI Act, which sets out a number of key requirements for machine learning models in terms of accuracy, explainability, fairness and robustness (European Commission 2020). In parallel, standardization bodies such as ISO/IEC are discussing how to operationalize the requirements of AI trustworthiness, especially in high-risk fields such as automotive, finance and healthcare.

In all the above, explainability is defined in connection with the human oversight of the output of Artificial Intelligence, that is, the capability of a human (a programmer, a user or a consumer) to understand not only the output but also the reasons for that output (Bracke et al. 2019). From a regulatory viewpoint, explanations should be able to address a variety of questions about a model’s operations; it is therefore fundamental to clarify the notion of “explainability”. In the literature, some important institutional definitions have been provided (Miller 2019). The definition that best reflects the essence of this concept from a statistical perspective is the one introduced by Bracke et al. (2019), who state that “explainability means that an interested stakeholder can comprehend the main drivers of a model-driven decision”. It follows that, to be explainable, machine learning models must provide detailed reasons clarifying their functioning and address the questions raised by developers, managers, auditors and regulators.

The machine learning literature provides different metrics to measure explainability. For regression models, which are explainable by design, regression coefficients are the main tool: they measure the estimated impact of each predictor on the response. Furthermore, the frequent assumption of an underlying stochastic model allows the application of statistical tests to decide whether each predictor is relevant. High-dimensional regression models are often supplemented with regularization techniques, such as Lasso. In this case, the coefficients of the less influential variables are shrunk to exactly zero, and only the variables with the highest impact have non-zero coefficients in the final model.
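As a minimal sketch of how Lasso produces sparse explanations, the snippet below fits an L1-penalized logistic regression with scikit-learn on synthetic data (an assumption standing in for a real dataset) and lists the coefficients that remain non-zero. The regularization strength `C=0.05` is an arbitrary illustrative choice, not a value taken from this paper.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic data (illustrative): 20 features, of which only 4 are informative.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=4,
                           n_redundant=0, n_clusters_per_class=1,
                           shuffle=False, random_state=0)
X = StandardScaler().fit_transform(X)  # put coefficients on a comparable scale

# The L1 (Lasso) penalty shrinks weak coefficients to exactly zero;
# a smaller C means stronger regularization.
lasso_lr = LogisticRegression(penalty="l1", solver="liblinear", C=0.05)
lasso_lr.fit(X, y)

coef = lasso_lr.coef_.ravel()
selected = np.flatnonzero(coef)  # indices of the non-zero coefficients
print(f"{selected.size} of {coef.size} coefficients are non-zero:", selected)
```

Decreasing `C` typically zeroes out more coefficients, trading fit for parsimony.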

For ensemble models, such as Random Forests, bagging and boosting, feature importance is the main tool for explainability. Importance is usually derived by averaging the contribution of each predictor’s splits to the reduction of the heterogeneity or the variability of the response. In particular, a Random Forest model averages the classifications obtained from a set of tree models, each based on a sample of the training data and of the explanatory variables. In a tree, the explanatory variables control the splitting rules, with stopping decisions determined by the need to significantly reduce within-group variability (as measured, for example, by the Gini impurity).
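A possible illustration of impurity-based importance, assuming scikit-learn and synthetic data: `RandomForestClassifier.feature_importances_` averages, over the trees, the decrease in Gini impurity produced by the splits on each feature. The data set and the forest size are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data (illustrative): with shuffle=False, columns 0-2 are informative.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=3,
                           n_redundant=0, n_clusters_per_class=1,
                           shuffle=False, random_state=0)

# criterion="gini": splits are chosen to reduce the Gini impurity.
rf = RandomForestClassifier(n_estimators=200, criterion="gini", random_state=0)
rf.fit(X, y)

# Mean decrease in impurity, averaged over trees; scikit-learn normalizes it to sum to 1.
importance = rf.feature_importances_
ranking = np.argsort(importance)[::-1]  # most important feature first
for k in ranking[:3]:
    print(f"feature {k}: {importance[k]:.3f}")
```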

For Neural Networks and Support Vector Machines, permutation feature importance is the main tool: it measures the importance of a feature as the change in the model’s prediction error after the values of that feature have been randomly permuted.
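The sketch below shows permutation feature importance for an SVM using scikit-learn's `permutation_importance`, on synthetic data (an assumption). Clipping negative values and rescaling to sum to one is a hypothetical post-processing step, added only to obtain importances that can be compared across models.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data (illustrative): with shuffle=False, columns 0-1 are informative.
X, y = make_classification(n_samples=600, n_features=8, n_informative=2,
                           n_redundant=0, n_clusters_per_class=1,
                           shuffle=False, random_state=1)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=1)

svm = make_pipeline(StandardScaler(), SVC(kernel="rbf")).fit(X_tr, y_tr)

# Each feature is shuffled n_repeats times on held-out data; its importance is
# the mean drop in accuracy with respect to the unshuffled score.
result = permutation_importance(svm, X_te, y_te, scoring="accuracy",
                                n_repeats=20, random_state=1)
raw = result.importances_mean

# Hypothetical post-processing: clip negative values and scale to sum to one.
scaled = np.clip(raw, 0.0, None)
scaled = scaled / scaled.sum()
```

Evaluating on held-out data, rather than on the training set, avoids rewarding features that the model merely memorized.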

All the above metrics are “built into” the respective models. In this paper we focus on these metrics, even though they are not model agnostic. In fact, unlike model-agnostic techniques such as Shapley values and LIME, these metrics do not require extra computation and modeling. Hence, starting from the explanations given by different models, we compare them, under the assumption that they might capture different aspects. Our contribution to the state of the art is in this direction. More specifically, the main contributions of our work are as follows:

- Methodology to assess and compare alternative explanations, coming from different machine learning models;
- Guidelines to select the models that provide the most parsimonious and robust explanations.

We exemplify the proposed framework on a real case study in the cybersecurity domain. More precisely, we focus on phishing, a security threat with very serious impacts on businesses and individuals that is often used as a vector for other dangerous security attacks. This threat leverages psychological and social engineering techniques with the objective of stealing valuable information from users. Machine learning models have been extensively applied to phishing detection; making these models explainable would therefore bring significant benefits for detecting and monitoring fraudulent attacks and for designing efficient mitigation measures. In the case study, we apply our methodology to a publicly available phishing dataset that contains 48 features extracted from malicious and legitimate web pages. The experiments show that the proposed approach is quite effective in identifying the least complex and most robust models.

The rest of the paper is organized as follows. In Sect. 2 we review the state of the art in the areas of explainability and phishing detection. In Sect. 3 we introduce the proposed methodological framework, while we describe the dataset used to assess our framework in Sect. 4. In Sect. 5 we present and discuss the experimental results and we conclude the paper in Sect. 6 with some final remarks and possible future research directions.

2 Related work

This section reviews the state of the art in the areas of explainability and phishing detection, the latter being the application domain selected for showcasing the framework proposed in this paper.

2.1 Explainability

A machine learning model, to be judged reliable and trustworthy, must satisfy a set of requirements, some “internal” to the model and others referring to its “external” impacts on individuals and society (European Commission 2020). The most important internal requirements are model accuracy, complexity, robustness and explainability. While accuracy, complexity and robustness have been extensively addressed in the computer science and statistics literature, the notion of explainability is more recent. The importance of this topic has grown in parallel with the development of “black-box” learning models, which might be very accurate but very difficult, or even impossible, to interpret, especially in terms of their driving mechanisms.

A precise mathematical definition of explainability does not exist, due to its somewhat subjective interpretation. Nevertheless, the extant literature relies on regulatory definitions, such as that reported in Bracke et al. (2019), according to which explainability requires an explicit understanding of the factors that determine a given machine learning output (see also Guidotti et al. (2018)).

The application of the explainability requirement has noticeably increased its importance: to be considered trustworthy, a machine learning model must satisfy it. Some machine learning models are explainable by design, in terms of their parameters: for example, linear and logistic regression, including their regularized variants based on Lasso and Ridge penalties. Other models are much less explainable, if at all: tree models, Random Forests, bagging and boosting models. These models have a built-in mechanism, the feature importance plot, that assesses the importance of each variable in terms of the splits (or average splits) it generates in the tree (or forest) model. Finally, Neural Networks and Support Vector Machines are typically not explainable. They can be made explainable, at some computational cost, by permuting (reshuffling) the values of any given explanatory variable and measuring the effect on the model’s performance.

The requirement of explainability has introduced the need to obtain robust explanations (see, e.g., Calzarossa 2025) and to compare models in terms of an independent measure (model agnostic), to be run in a post-processing step (see, e.g., Minh et al. (2022), Burkart and Huber (2021)). The most important of such measures are Shapley values (Lundberg and Lee 2017), and their normalized variants, such as Shapley Lorenz values (Giudici and Raffinetti 2021).

Shapley values were introduced in game theory with the aim of fairly dividing the value of a game among its participants (Shapley 1953). The technique was later extended to machine learning models. In this context, Shapley values allow for the equitable distribution of a model’s prediction among the predictors that constitute it.

Assume that a game is associated with each observation to be predicted. For each game, the players are the model predictors and the total gain is equal to the predicted value, obtained as the sum of each predictor’s contribution. The (local) effect of each variable \(k \, (k= 1, \ldots, K)\) on each observation \(i \, (i=1,\ldots,N)\) can then be calculated as follows:

\[\phi_k(\hat{f})_i=\sum_{X^{'}\subseteq \mathcal{C}(X)\setminus X_k}\frac{|X^{'}|!\,(K-|X^{'}|-1)!}{K!}\left[\hat{f}(X^{'}\cup X_k)_i-\hat{f}(X^{'})_i\right]\]

where \(X^{'}\) is a subset of \(|X^{'}|\) predictors, and \(\hat{f}(X^{'}\cup X_k)_i\) and \(\hat{f}(X^{'})_i\) are the predictions for the i-th observation obtained, over all possible subset configurations, with and without variable \(X_k\), respectively. Once Shapley values have been calculated for each observation, the global contribution of each explanatory variable to the predictions is obtained by summing or averaging them over all observations. Thus, Shapley values allow understanding the impact of the various explanatory variables on a model’s output; in other words, they help to understand which explanatory variables drive the model’s predictions.
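To make the formula concrete, the sketch below computes exact Shapley values by brute-force enumeration of all subsets, assuming that \(\hat{f}(X^{'})\) is obtained by refitting a linear regression on the predictors in \(X^{'}\), and by the sample mean of the response for the empty set. This value function is an illustrative assumption; the SHAP library, for instance, does not refit the model for each subset. Global importance is taken here as the mean absolute local effect, one common way to avoid positive and negative contributions cancelling out.

```python
from itertools import combinations
from math import factorial

import numpy as np
from sklearn.linear_model import LinearRegression


def subset_prediction(X, y, subset):
    """Predictions of a model refitted on the predictors in `subset` (assumed value function)."""
    if not subset:
        return np.full(len(y), y.mean())  # empty model: predict the mean response
    cols = list(subset)
    return LinearRegression().fit(X[:, cols], y).predict(X[:, cols])


def exact_shapley(X, y):
    """Return an (N, K) array with the Shapley value of each predictor for each observation."""
    n_obs, K = X.shape
    cache = {}

    def f(subset):
        key = tuple(sorted(subset))
        if key not in cache:
            cache[key] = subset_prediction(X, y, key)
        return cache[key]

    phi = np.zeros((n_obs, K))
    for k in range(K):
        others = [j for j in range(K) if j != k]
        for size in range(K):  # |X'| ranges from 0 to K-1
            weight = factorial(size) * factorial(K - size - 1) / factorial(K)
            for subset in combinations(others, size):
                phi[:, k] += weight * (f(subset + (k,)) - f(subset))
    return phi


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = 2.0 * X[:, 0] - 1.0 * X[:, 1] + 0.1 * rng.normal(size=50)

phi = exact_shapley(X, y)
global_importance = np.abs(phi).mean(axis=0)  # averaged absolute local effects
```

A useful sanity check is the efficiency property: for each observation, the contributions add up to the full-model prediction minus the empty-model prediction.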

We remark that, unlike Lasso or Ridge techniques, which are applied in model building (ex-ante), Shapley values are applied once a model is selected (ex-post). Another difference is that, while Lasso and Ridge are mainly used for model selection, Shapley values are employed for model interpretation.

Following Occam’s razor, to simplify a model, making it more parsimonious and therefore more robust to input variations, it may be worth examining how much of the response variability is explained by a given set of predictors. To this end, Shapley values should be normalized, so that they can be interpreted consistently across different application settings.

To normalize Shapley values, Giudici and Raffinetti (2021) introduced Shapley Lorenz values, which replace the pay-off of Shapley values with a pay-off based on the difference between Lorenz Zonoids, which are normalized by definition. More precisely, given K predictors, the Shapley Lorenz contribution associated with the additional variable \(X_k\) is defined as:

\[LZ^{X_k}(\hat{Y})=\sum_{X^{'}\subseteq \mathcal{C}(X)\setminus X_k}\frac{|X^{'}|!\,(K-|X^{'}|-1)!}{K!}\left[LZ(\hat{Y}_{X^{'}\cup X_k})-LZ(\hat{Y}_{X^{'}})\right]\]

where \(\mathcal {C}(X)\setminus X_k\) is the set of all possible model configurations that can be obtained excluding variable \(X_k\); \(|X^{'}|\) denotes the number of variables included in each possible model; \(LZ(\hat{Y}_{X^{'} \cup X_k})\) and \(LZ(\hat{Y}_{X^{'}})\) are the Lorenz Zonoids that describe the (mutual) variability of the response variable Y explained by the models that include the \(X^{'} \cup X_k\) predictors and only the \(X^{'}\) predictors, respectively.

While appealing from an interpretation viewpoint and, for this reason, widely employed in the extant machine learning literature, both Shapley and Shapley Lorenz values are highly computationally intensive, especially when many explanatory features are available: their exact computation requires evaluating, for each feature, all \(2^{K-1}\) subsets of the remaining \(K-1\) predictors. The extra computational cost may outweigh the benefit of model interpretation.

In this paper we propose an alternative solution to measure the explainability of a model, which does not require a post-processing step. For any given model, we consider its default (model-specific) explanation, and compare alternative models not only in terms of their accuracy, but also in terms of explainability and, in particular, in terms of the robustness and complexity of their explanations.

2.2 Phishing detection

Malicious websites resembling their legitimate counterparts are usually deployed for phishing attacks, that is, to trick users into voluntarily giving away valuable information. Hence, a timely detection of these phishing websites is very important. Machine learning models have been widely used for this purpose (see, e.g., Basit et al. (2021), Das et al. (2020), Zieni et al. (2023) for comprehensive surveys). These models are often based on traditional supervised learning algorithms, such as Decision Trees, Support Vector Machines and Naive Bayes, which employ features able to differentiate between malicious and legitimate websites.

The literature suggests a variety of approaches for the identification and extraction of the features. Some works focus on features extracted from the page URLs (see, e.g., Garera et al. (2007), Gupta et al. (2021), Jalil et al. (2023), Karim et al. (2023), Sahingoz et al. (2019), Tupsamudre et al. (2019)), whereas others consider the page content (see, e.g., Adebowale et al. (2019), Jain and Gupta (2018), Jha et al. (2023), Li et al. (2019), Pandey and Mishra (2023), Rao and Pais (2019)). For example, Garera et al. (2007) extract URL features by leveraging the obfuscation techniques used by attackers who try to make users believe that the URL belongs to a trusted party. Sahingoz et al. (2019) analyze the structure and composition of the URL strings to extract features. Gupta et al. (2021) demonstrate that URL features are very powerful for detecting phishing websites, so that only a few URL features are sufficient.
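As an illustration of lexical URL features, the sketch below computes a few counts and flags with the Python standard library. The feature names are loosely modeled on those used in phishing datasets, but their exact definitions here are hypothetical and may differ from those of any specific dataset or paper cited above.

```python
import ipaddress
from urllib.parse import urlparse


def url_features(url: str) -> dict:
    """A few illustrative lexical URL features (hypothetical definitions)."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    try:
        ipaddress.ip_address(host)  # raises ValueError if host is not an IP literal
        is_ip = 1
    except ValueError:
        is_ip = 0
    return {
        "UrlLength": len(url),
        "NumDots": url.count("."),
        "NumDash": url.count("-"),
        "NumAmpersand": url.count("&"),
        "AtSymbol": int("@" in url),
        "NoHttps": int(parsed.scheme != "https"),
        "HttpsInHostname": int("https" in host),
        "IpAddress": is_ip,
        # Naive: ignores multi-label public suffixes such as .co.uk
        "SubdomainLevel": max(host.count(".") - 1, 0),
        "PathLevel": len([part for part in parsed.path.split("/") if part]),
    }


feats = url_features("http://secure-login.paypal.com.example-verify.xyz/account/update?id=1&step=2")
print(feats)
```

The example URL mimics a common obfuscation trick: a trusted brand name appears as a subdomain of an unrelated registered domain, which shows up as a high subdomain level.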

To derive features associated with the page content, most works focus on the HTML tags included in the page source code. For example, to identify potentially malicious content, Rao and Pais (2019) analyze tags and the hyperlinks associated with the attributes of these tags. Similarly, Li et al. (2019) consider the consistency of the page content by examining HTML tags and the page URL, while Jain and Gupta (2018) study the identity of a web page by checking the content of some tags.
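A minimal sketch of content-based features, assuming only the standard-library `html.parser`: it counts external and null/self-redirecting hyperlinks and flags forms that submit data by e-mail or over plain HTTP. The class, function and feature names are hypothetical, and the definitions are simplified (for instance, relative form actions on an HTTP page are not flagged).

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class PageFeatureParser(HTMLParser):
    """Collects hyperlink and form statistics from an HTML page (illustrative)."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_host = urlparse(page_url).hostname
        self.n_links = 0
        self.n_external = 0
        self.n_null_self = 0
        self.insecure_form = False
        self.mailto_form = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            href = (attrs.get("href") or "").strip()
            self.n_links += 1
            host = urlparse(href).hostname
            if host and host != self.page_host:
                self.n_external += 1          # points to another domain
            elif href in ("", "#") or href.lower().startswith("javascript:"):
                self.n_null_self += 1         # null or self-redirecting link
        elif tag == "form":
            action = (attrs.get("action") or "").strip().lower()
            if action.startswith("mailto:"):
                self.mailto_form = True       # form data sent by e-mail
            elif action.startswith("http://"):
                self.insecure_form = True     # form submitted without TLS


def html_features(html: str, page_url: str) -> dict:
    parser = PageFeatureParser(page_url)
    parser.feed(html)
    n = max(parser.n_links, 1)  # avoid division by zero on pages without links
    return {
        "PctExtHyperlinks": parser.n_external / n,
        "PctNullSelfRedirectHyperlinks": parser.n_null_self / n,
        "InsecureForms": int(parser.insecure_form),
        "SubmitInfoToEmail": int(parser.mailto_form),
    }


page = """<html><body>
<a href="https://evil.example.net/x">a</a>
<a href="#">b</a>
<a href="/login">c</a>
<a href="https://bank.example.com/help">d</a>
<form action="mailto:collect@example.org"></form>
</body></html>"""
feats = html_features(page, "https://bank.example.com/login")
print(feats)
```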

Machine learning models are generally very accurate in detecting phishing websites. Nevertheless, they do not provide any hint about how their decisions are made or about the impact of the individual features used for model training. To increase transparency and trust, there is a compelling need to make these decisions explainable. According to some recent surveys (see, e.g., Capuano et al. (2022), Nadeem et al. (2023), Zhang et al. (2022)), explainability has been integrated to some extent in AI-based cybersecurity models, whereas it has received rather limited attention in the context of phishing detection. For example, Galego Hernandes et al. (2021) explain the models created for detecting phishing URLs using two techniques, i.e., the Explainable Boosting Machine, applied in the construction of the models, and Local Interpretable Model-agnostic Explanations (LIME), applied to the trained models. Accumulated Local Effects plots, which describe how features influence the predictions of a machine learning model, are used by Poddar et al. (2022) to explain a two-level ensemble learning model. Another approach considered for explaining phishing detection models is based on the Lorenz Zonoid model selection method, that is, on the multidimensional version of the Gini coefficient. This approach is applied in Calzarossa et al. (2023) to explain machine learning models trained on unstructured inputs, namely, bag-of-words features extracted from the content of the web pages, whereas an application to structured inputs, namely, features extracted from the page URL, is presented in Calzarossa et al. (2024).

In this work, we apply the proposed framework to explain the website characteristics that drive phishing, using a publicly available phishing dataset that contains 48 features extracted from malicious and legitimate web pages.

3 Methodology

In this section we present our main methodological contributions. As already mentioned, several explainable AI methods have been proposed in the literature, some of which are model dependent (e.g., feature importance plots and regression coefficients), while others are model independent (e.g., Shapley values and Shapley Lorenz values).

To the best of our knowledge, no paper has so far assessed and compared different explanations obtained from different machine learning models on the same dataset. In this paper we propose to do so, employing two main principles as selection criteria, namely:

- model complexity or its opposite, model parsimony;
- model robustness.

These principles will be applied only to models that have reached a given level of accuracy, thus filtering out models that are not accurate enough.

The first principle we consider is model complexity. According to Occam’s razor, if a set of models has a similar predictive accuracy, the most parsimonious model is to be preferred. We measure parsimony through the concentration of the explanations.

To this aim, we analyze the distribution of the explanations, sorting them in non-decreasing order of magnitude. We then calculate, as a summary concentration measure, the Lorenz Zonoid of the concentration curve which, for the one-dimensional response considered here, corresponds to the Gini coefficient. We then choose the model with the highest value of the Lorenz Zonoid.

Lorenz Zonoids were introduced by Koshevoy and Mosler (1996) as a generalization of the Lorenz curve (Lorenz 1905) to d dimensions.

The Lorenz curve of a non-negative random variable Y with expectation \(E(Y)=\mu \) is the graph of the function:

\[L_Y(t)=\frac{1}{\mu}\int_{0}^{t}F_{Y}^{-1}(s)\,ds, \qquad t\in[0,1],\]

where \(F_{Y}^{-1}\) is the quantile function of Y, i.e., \(F_{Y}^{-1}(t)=\min \{y:F_Y(y)\ge t\}\). In the empirical case, given n observations, the Lorenz curve \(L_Y\) of the Y variable is given by the set of points \((i/n,\sum _{j=1}^{i}y_{(j)}/(n\bar{y}))\), for \(i=1,\ldots,n\), where \(y_{(i)}\) denotes the Y variable values sorted in non-decreasing order and \(\bar{y}\) is the mean value of Y. Similarly, the Y variable can be sorted in non-increasing order, providing the dual Lorenz curve \(L^{'}_{Y}\), which is defined as the set of points \((i/n,\sum _{j=1}^{i}y_{(n+1-j)}/(n\bar{y}))\).

It can be shown (see, e.g., Giudici and Raffinetti (2020)) that a Lorenz Zonoid can be expressed as a function of the sum of the distances between the y-axis values of the points lying on the Lorenz curve and those of the points lying on the bisector line. More precisely, the Lorenz Zonoid is equal to:

\[LZ(Y)=\frac{2d}{n} \tag{1}\]

where

\[d=\sum_{i=1}^{n}\left(\frac{i}{n}-\frac{\sum_{j=1}^{i}y_{(j)}}{n\bar{y}}\right) \tag{2}\]

where \(y_{(j)}\) are the n observed values of the Y variable, sorted in non-decreasing order.

It is important to illustrate how the Lorenz Zonoid can be calculated in practice. To this aim, we introduce a simple example. Let \(Y^*\) be a vector of \(n=10\) points, namely, \(Y^*=\{12,27,48,3,34,11,0,46,75,28\}\). The mean value of \(Y^*\), \(\bar{y}^*\), is equal to 28.4. The x-axis and y-axis values of the Lorenz curve are reported in Table 1. The Lorenz curve is then obtained by joining \((n+1)\) points: the (0, 0) point and all the points whose coordinates are specified in Table 1.

The x-axis values of the dual Lorenz curve are the same as those of the Lorenz curve, whereas the y-axis values are obtained by cumulating the \(Y^*\) values in non-increasing order. The resulting coordinate pairs of the dual Lorenz curve are reported in Table 2, and the dual Lorenz curve is then obtained by joining the (0, 0) point with all the other points in Table 2.

The Lorenz Zonoid can then be calculated as the area between the Lorenz and the dual Lorenz curves, applying Eq. 1. We obtain \(d=2.16\) and, therefore, \(LZ=0.432\).
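The worked example can be reproduced with the short NumPy sketch below, which computes \(d\) and \(LZ = 2d/n\) and, as a cross-check, the trapezoidal area between the dual Lorenz curve and the Lorenz curve. Without rounding the coordinates, the sketch gives \(d \approx 2.169\) and \(LZ \approx 0.434\), marginally above the values reported above, which are consistent with Lorenz coordinates rounded to two decimals. The function names are illustrative.

```python
import numpy as np


def lorenz_points(y, descending=False):
    """Coordinates (x, L) of the Lorenz curve (or dual curve), including (0, 0)."""
    v = np.sort(np.asarray(y, dtype=float))
    if descending:
        v = v[::-1]
    x = np.arange(v.size + 1) / v.size
    L = np.concatenate(([0.0], np.cumsum(v) / v.sum()))
    return x, L


def lorenz_zonoid(y):
    """LZ = 2d/n, with d the sum of distances between the bisector and the Lorenz curve."""
    x, L = lorenz_points(y)
    d = np.sum(x[1:] - L[1:])
    return 2.0 * d / (x.size - 1), d


def area_between_curves(y):
    """Trapezoidal area between the dual Lorenz curve and the Lorenz curve."""
    x, L = lorenz_points(y)
    _, L_dual = lorenz_points(y, descending=True)
    gap = L_dual - L
    return float(np.sum((x[1:] - x[:-1]) * (gap[1:] + gap[:-1]) / 2.0))


y_star = [12, 27, 48, 3, 34, 11, 0, 46, 75, 28]
lz, d = lorenz_zonoid(y_star)
print(f"d = {d:.3f}, LZ = {lz:.3f}, area = {area_between_curves(y_star):.3f}")
# prints: d = 2.169, LZ = 0.434, area = 0.434
```

The two computations agree because, for piecewise-linear Lorenz curves, \(2d/n\) equals the trapezoidal area between the dual and the ordinary curve.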

In our context, the Lorenz Zonoid is calculated for a variable Y with as many points as the number of features of the considered machine learning model, each point corresponding to a feature’s explanation. The Lorenz Zonoid of Y measures the degree of concentration of the explanations: the higher it is, the more parsimonious the model.
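Applied to a vector of feature importances, the same formula behaves as a parsimony score. The sketch below, which reimplements \(LZ = 2d/n\) so that it is self-contained, compares the uniform baseline (every one of the 48 features receives 1/48), a hypothetical randomly generated importance vector, and the extreme case where a single feature receives all the importance.

```python
import numpy as np


def lorenz_zonoid(values):
    """Gini coefficient (1-D Lorenz Zonoid) of non-negative values, via LZ = 2d/n."""
    v = np.sort(np.asarray(values, dtype=float))  # non-decreasing order
    n = v.size
    L = np.cumsum(v) / v.sum()                    # Lorenz ordinates at x = i/n
    d = np.sum(np.arange(1, n + 1) / n - L)
    return 2.0 * d / n


K = 48
uniform = np.full(K, 1.0 / K)        # baseline: every feature gets 1/48
one_feature = np.zeros(K)
one_feature[0] = 1.0                 # all importance on a single feature
rng = np.random.default_rng(0)
synthetic = rng.dirichlet(np.full(K, 0.3))  # hypothetical importance vector

for name, imp in [("uniform", uniform), ("synthetic", synthetic), ("one feature", one_feature)]:
    print(f"{name:12s} LZ = {lorenz_zonoid(imp):.3f}")
```

The score ranges from 0 for the uniform baseline to \((K-1)/K\) when one feature carries all the importance.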

We remark that, in the one-dimensional case, the Lorenz Zonoid corresponds to the Gini index, one of the most widely employed measures of inequality. When the response variable is binary, the Gini coefficient of a classifier is a linear function of the Area Under the ROC Curve (AUC): \(Gini = 2 \cdot AUC - 1\). However, the Lorenz Zonoid is more general and can be applied to all types of variables, as demonstrated in a recent paper by Giudici and Raffinetti (2024).
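A toy numerical check of the relation \(Gini = 2 \cdot AUC - 1\), assuming scikit-learn's `roc_auc_score`; labels and scores are made-up values chosen so that the result can be verified by hand.

```python
from sklearn.metrics import roc_auc_score

# Toy labels and classifier scores (illustrative values).
y_true = [0, 0, 1, 1]
scores = [0.10, 0.40, 0.35, 0.80]

auc = roc_auc_score(y_true, scores)  # 3 of the 4 (positive, negative) pairs are correctly ranked
gini = 2 * auc - 1
print(f"AUC = {auc:.2f}, Gini = {gini:.2f}")  # AUC = 0.75, Gini = 0.50
```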

The second principle considered in our methodology to compare alternative explanations is their robustness: explanations obtained with the same model, but using different train/test samples of data, should not vary considerably from one another. This apparently natural requirement is not easy to satisfy, especially when a model is highly complex.

To measure the robustness of the explanations, we can apply the bootstrap method to any given model and obtain M different explanations for each model variable, corresponding to the application of the model to M different training samples. To ease interpretation, we can then represent the M explanations in a diagram that depicts, for each explanatory variable, the corresponding sample distribution.

We suggest employing a boxplot representation that reports, for each variable, the first quartile, median and third quartile of the explanations, along with a lower and an upper bound such that the interval above the lower bound contains 95% of the observations. We can then compare the lower bound of the boxplot with the explanation that would have been obtained with a baseline, that is, a model characterized by a uniform distribution of the explanations. For any given variable, if the boxplot lower bound is greater than the baseline explanation, the explanation is deemed to be significant.

More formally, for any given feature \(X_k\) \((k= 1, \ldots, K)\) we can calculate a lower bound \(LB_k\), corresponding to the 5th percentile of the distribution obtained over all M samples. If \(LB_k\) is lower than the explanation of the baseline model, \(X_k\) is not considered a significant explanation.
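The significance rule can be sketched in a few lines of NumPy, assuming the bootstrap explanations are stored as an (M, K) array of importances already scaled to sum to one, so that the uniform baseline is 1/K. The function name and the Dirichlet-generated example data are hypothetical.

```python
import numpy as np


def significant_features(importances, q=5.0):
    """importances: (M, K) array, one row of scaled feature importances per bootstrap sample.

    Returns the lower bounds LB_k (q-th percentile over the M samples) and a boolean
    mask marking the features whose LB_k exceeds the uniform baseline 1/K.
    """
    importances = np.asarray(importances, dtype=float)
    K = importances.shape[1]
    lower_bounds = np.percentile(importances, q, axis=0)
    return lower_bounds, lower_bounds > 1.0 / K


# Synthetic bootstrap explanations (hypothetical): M = 100 samples, K = 6 features,
# with feature 0 systematically dominant.
rng = np.random.default_rng(42)
M, K = 100, 6
imp = rng.dirichlet([20, 6, 3, 3, 3, 3], size=M)
lb, mask = significant_features(imp)
print("LB_k:", np.round(lb, 3))
print("significant features:", np.flatnonzero(mask))
```

For the boxplot itself, matplotlib's `boxplot` accepts `whis=(5, 95)`, which draws the whiskers at the 5th and 95th percentiles, so that the lower whisker coincides with \(LB_k\).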

The same bootstrap samples can also be used to represent the distribution of the Gini coefficient (Lorenz Zonoid) for any given machine learning method. This allows comparing the concentration of the explanations of each model while taking robustness into account: the higher the concentration boxplot, the more parsimonious the model. The values can be compared with those of a baseline model, corresponding to a random allocation of the binary response, whose Gini coefficient is equal to 0.5.

To better illustrate our methodology, the pseudocode of the proposed framework is shown in Algorithm 1.
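The following is a compact sketch of the workflow described in this section; it is not a transcription of Algorithm 1. Several choices are assumptions made only for illustration: synthetic data instead of the phishing dataset, bootstrap samples drawn with replacement, accuracy measured on the observations not drawn, absolute coefficients as the built-in explanation of the Lasso logistic regression, M = 20 samples and a hypothetical accuracy threshold `min_acc=0.7`. Other classifiers, for instance an SVM with permutation importance, could be added to the `classifiers` dictionary in the same way.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.preprocessing import StandardScaler


def lorenz_zonoid(v):
    """Gini coefficient (1-D Lorenz Zonoid) of a non-negative vector."""
    v = np.sort(np.asarray(v, dtype=float))
    n = v.size
    return 2.0 * np.sum(np.arange(1, n + 1) / n - np.cumsum(v) / v.sum()) / n


def scaled_importance(model):
    """Built-in explanation, scaled to sum to one (|coef| for linear models: an assumption)."""
    if hasattr(model, "feature_importances_"):
        raw = model.feature_importances_
    else:
        raw = np.abs(model.coef_).ravel()
    total = raw.sum()
    return raw / total if total > 0 else np.full(raw.size, 1.0 / raw.size)


def compare_explanations(X, y, classifiers, M=20, sample_size=500, min_acc=0.7, seed=0):
    rng = np.random.default_rng(seed)
    n, K = X.shape
    results = {}
    for name, make_model in classifiers.items():
        accs, imps = [], []
        for _ in range(M):
            idx = rng.choice(n, size=sample_size, replace=True)   # bootstrap sample
            held_out = np.setdiff1d(np.arange(n), idx)            # observations not drawn
            model = make_model().fit(X[idx], y[idx])
            accs.append(accuracy_score(y[held_out], model.predict(X[held_out])))
            imps.append(scaled_importance(model))
        if np.mean(accs) < min_acc:                               # accuracy filter
            continue
        imps = np.array(imps)                                     # shape (M, K)
        results[name] = {
            "accuracy": float(np.mean(accs)),
            "gini": np.array([lorenz_zonoid(row) for row in imps]),  # parsimony
            "significant": np.flatnonzero(np.percentile(imps, 5, axis=0) > 1.0 / K),  # robustness
        }
    return results


X, y = make_classification(n_samples=2000, n_features=12, n_informative=3,
                           n_redundant=0, n_clusters_per_class=1, class_sep=2.0,
                           shuffle=False, random_state=0)
X = StandardScaler().fit_transform(X)
classifiers = {
    "LR": lambda: LogisticRegression(penalty="l1", solver="liblinear", C=0.1),
    "RF": lambda: RandomForestClassifier(n_estimators=100, random_state=0),
}
results = compare_explanations(X, y, classifiers)
for name, res in results.items():
    print(name, f"acc={res['accuracy']:.3f}",
          f"median Gini={np.median(res['gini']):.3f}",
          "significant:", res["significant"])
```

Under these assumptions, the model to prefer is the accurate one whose Gini distribution sits highest and whose significant features are few and stable across samples; domain knowledge remains the final check.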

We finally remark that domain knowledge must also be used when comparing explanations. While statistical metrics, such as Lorenz Zonoids and boxplots, can be used to select a model in terms of parsimony and robustness (assuming that the compared models are accurate), expert knowledge should be the ultimate tool to evaluate whether machine learning explanations are valid in practical implementations. Solid domain knowledge for comparing explanations is particularly relevant in the cybersecurity context, because of the potential benefits of explanations for detecting fraudulent attacks and for designing efficient security defense mechanisms.

4 Experimental data

As already mentioned, the application domain examined to demonstrate the proposed methodology is cybersecurity, and more precisely the detection of phishing websites.

In this context, we consider a publicly available phishing dataset containing 10,000 observations equally distributed between phishing and legitimate pages (Tan 2018). Each of these observations is described by 48 features that capture specific properties of both the page URL and the HTML source code.

The choice of this dataset is motivated by the fact that it combines a set of relevant features selected from several highly cited research papers. Some features consider the number of symbols, characters or words in the entire URL or in its components. Other features are extracted from the page source code by analyzing hyperlinks and forms or by checking the presence of specific components, such as frame tags. Beyond these binary and numeric features, the dataset also includes some categorical features whose values are obtained by applying heuristics that take into account the tricks of the attackers in creating phishing websites.

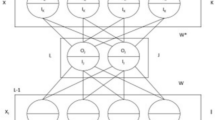

All these features are the basis for building machine learning models capable of discriminating between phishing and legitimate websites. To obtain these models, we solve a binary classification problem using different supervised machine learning algorithms, namely, K-Nearest Neighbors (KNN), Logistic Regression (LR), Random Forest (RF), Support Vector Machine (SVM) and Extreme Gradient Boosting (XGBoost). To estimate the performance of the classifiers on small data samples, we apply the bootstrap method: we train the five classifiers on 100 random samples of 1,000 observations each, drawn from the whole dataset.

For the models of a given classifier, we derive the importance of each feature by applying classifier-specific methods, e.g., permutation feature importance for SVM. In addition, the importance of each feature is scaled by the total importance of all features of a given model, so that the importances of each model sum to one and are comparable across classifiers.

The values and the distribution of the feature importance provide useful insights about the robustness and the significance of the explanations of the models. These values are also used for evaluating the concentration of the explanations obtained for each of the models of a given classifier. For this purpose, we arrange the features according to their importance from the lowest to the highest in magnitude and we analyze the corresponding distributions.

We note that, as a baseline to evaluate the experimental results, we consider a uniform distribution of the feature importance, where the importance associated with each feature is equal to the inverse of the number of features, that is, \(1 / 48 \approx 0.0208\).

5 Experimental results

This section presents the main results obtained by applying the proposed methodology to explain the machine learning models created for phishing detection.

The first results refer to the 100 machine learning models trained with each of the five classifiers considered in our study. Table 3 summarizes the model performance in terms of accuracy and Area Under the ROC Curve (AUC), together with 95% confidence intervals computed over the bootstrap samples.

From the table we notice that the performance of the considered models is generally very good. For example, the sample mean of the accuracy of all classifiers, apart from KNN, is well above 0.9. In addition, the corresponding confidence intervals are rather narrow, suggesting quite precise population estimates. Similar considerations apply to the AUC. The very high sample means obtained by four out of the five classifiers indicate that the models achieve a very good degree of discrimination between the two classes, i.e., legitimate and phishing websites.

Given these results, in what follows we apply our methodology to the models obtained with three of these classifiers, namely, LR, RF and SVM. We exclude KNN because it is less accurate than the others, and XGBoost because it is very similar to Random Forest: both belong to the class of tree-based ensemble models, and their predictive accuracy is very similar.

Figure 1 shows the importance of the 48 features for the models obtained from the 100 bootstrap samples, applying each of the three chosen classifiers.

We remark that the computation of the feature importance is based on methods that depend on the specific classifier: it is not model agnostic, but it does not require any extra computational step. In detail, the Lasso method is used for the LR, whereas Random Forest feature importance and permutation feature importance are applied for RF and SVM, respectively.

The diagrams in Fig. 1 allow the identification of the most important features for each classifier and help in assessing the significance of the explanations and the robustness of the classifiers. A visual inspection of the figure shows that the explanations vary across classifiers. In general, the number of features whose importance is above the baseline for all 100 models of a given classifier is very small: four features (i.e., FrequentDomainNameMismatch, SubmitInfoToEmail, InsecureForms, PctExtNullSelfRedirectHyperlinksRT) for the LR, three (i.e., HttpsInHostname, NumAmpersand, UrlLengthRT) for the SVM and two (i.e., PctExtHyperlinks, PctExtNullSelfRedirectHyperlinksRT) for the RF. Note that these features refer to both the page URL and its source code. For example, the most important features for the RF consider specific characteristics of the hyperlinks included in the page source code, such as hyperlinks pointing to external domains or self-redirecting hyperlinks. From the figure we can also notice that the importance of 8 and 12 features out of 48 is below the baseline for the LR and SVM, respectively, whereas for the RF the importance of 31 features is below the baseline. All these results indicate that RF models better concentrate the explanations and are therefore the most parsimonious.

To further investigate these behaviors and assess the significance of the explanations, we summarize in Fig. 2 the corresponding sample distributions using the boxplot representation described in the methodological section.

In detail, for each feature the boxplot represents the first quartile, median and third quartile of the explanations. We also consider a lower and an upper bound chosen so that the boxplot contains 95% of the explanations.

According to our methodology, whenever the lower bound of the boxplot is greater than the baseline, the explanation is to be considered significant. From the diagrams, we can identify the significance of the explanations of each classifier. These results confirm the conclusions previously drawn, that is, RF models lead to more concentrated explanations, with only two significant features against at least three for LR and SVM; RF models are thus the most parsimonious.

This conclusion can be strengthened by considering not only the number of significant features, but also the concentration of the explanations assigned by the different models, measured by the distribution of the Gini coefficient (Lorenz Zonoid) of each model, as described in Sect. 3.
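For a single vector of nonnegative importances, the concentration can be summarized by the Gini coefficient computed from its Lorenz curve. The sketch below uses the standard trapezoidal formula G = 1 - 2 x (area under the Lorenz curve); it assumes the univariate case, in which the Lorenz Zonoid measure coincides with the Gini coefficient, and the exact normalization used in Sect. 3 may differ. The function names are hypothetical.

```python
import numpy as np


def gini(importance) -> float:
    """Gini coefficient of a nonnegative importance vector via its Lorenz curve."""
    x = np.sort(np.asarray(importance, dtype=float))  # ascending order
    n = x.size
    lorenz = np.concatenate(([0.0], np.cumsum(x) / x.sum()))
    area = np.sum(lorenz[:-1] + lorenz[1:]) / (2 * n)  # trapezoid rule, step 1/n
    return float(1.0 - 2.0 * area)


def gini_distribution(importances: np.ndarray) -> np.ndarray:
    """One Gini coefficient per bootstrap model (rows of a (B, p) array)."""
    return np.array([gini(row) for row in importances])


print(gini([0.25, 0.25, 0.25, 0.25]))  # 0.0: no concentration
print(gini([0.0, 0.0, 0.0, 1.0]))      # 0.75: all importance on one feature
```

Higher values indicate more concentrated, hence more parsimonious, explanations; comparing the distributions returned by `gini_distribution` across classifiers mirrors the comparison described above.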

We represent the feature concentration for each of the bootstrap samples in Fig. 3, which shows the cumulative distribution of feature importance, with features arranged in ascending order of importance. As can be seen, the Random Forest classifier (in the middle) leads to more concentrated explanations for all bootstrap samples.

Cumulative distributions of the feature importance obtained for the 100 bootstrap samples for the three classifiers. Features are arranged according to their importance from lowest to highest in magnitude. Each color refers to a specific sample. The dotted line corresponds to the cumulative of the baseline
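The cumulative curves in Fig. 3 are Lorenz curves of the sorted importances. A minimal sketch of how one curve and the baseline can be computed, assuming again a uniform baseline 1/p (so that its cumulative is the diagonal k/p); the helper names, including the pointwise dominance check `at_least_as_concentrated`, are hypothetical.

```python
import numpy as np


def lorenz_curve(importance) -> np.ndarray:
    """Cumulative share of importance, features sorted ascending (p + 1 points)."""
    x = np.sort(np.asarray(importance, dtype=float))
    return np.concatenate(([0.0], np.cumsum(x) / x.sum()))


def baseline_curve(p: int) -> np.ndarray:
    """Cumulative of the assumed uniform baseline 1/p: the diagonal k/p."""
    return np.linspace(0.0, 1.0, p + 1)


def at_least_as_concentrated(a, b, tol: float = 1e-12) -> bool:
    """True if the Lorenz curve of a lies on or below that of b at every point."""
    return bool(np.all(lorenz_curve(a) <= lorenz_curve(b) + tol))


concentrated = [0.70, 0.20, 0.05, 0.05]
spread = [0.30, 0.30, 0.20, 0.20]
print(lorenz_curve(concentrated))
print(at_least_as_concentrated(concentrated, spread))  # True
```

Applying such a check to the curves of each bootstrap sample is one possible way to make the visual comparison of Fig. 3 quantitative.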

To check the robustness of our findings, we compare the explanations obtained with our method with those obtained using Shapley values. Shapley values distribute the contribution to a prediction among the features, but their exact computation is computationally intensive, since it requires evaluating the model over all subsets of features, whose number grows exponentially with the number of features. For this reason, we used the SHAP Python library (Lundberg and Lee 2017), which provides interpretable explanations of machine learning model predictions by approximating Shapley values. SHAP significantly reduces the computational burden, making the calculation of Shapley values feasible for complex models and large datasets. Figure 4 compares the most important explanatory variables identified by our method and by the Shapley value method.
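To make the cost of exact Shapley values concrete, the following sketch enumerates all coalitions for a toy model. It assumes a simple value function in which features outside a coalition are set to reference values; this is one of several possible choices and is not the value function used by the SHAP library's explainers. For p features the loop evaluates the model 2 x p x 2^(p-1) times, which is what makes exact computation impractical with the 48 features considered here. The names `exact_shapley` and `toy_model` are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(
    f: Callable[[List[float]], float],
    x: Sequence[float],
    reference: Sequence[float],
) -> List[float]:
    """Exact Shapley values of f at x by enumerating every coalition.

    Assumed value function: v(S) = f(z), where z takes the values of x on the
    features in S and the reference values on all other features.
    """
    p = len(x)

    def value(coalition: set) -> float:
        z = [x[j] if j in coalition else reference[j] for j in range(p)]
        return f(z)

    phi = [0.0] * p
    for i in range(p):
        others = [j for j in range(p) if j != i]
        for size in range(p):  # sizes of coalitions that do not contain i
            weight = factorial(size) * factorial(p - size - 1) / factorial(p)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z: List[float]) -> float:
    # Two additive terms plus an interaction between features 0 and 2.
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


print(exact_shapley(toy_model, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]))  # approx. [2.5, 3.0, 0.5]
```

The interaction term is split equally between features 0 and 2, and the values sum to f(x) - f(reference) = 6, as the efficiency property of Shapley values requires.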

To avoid cluttering the presentation, we report results only for the Random Forest models, whose parameters and settings were illustrated previously. We recall that for these models the default feature importance method is based on the mean decrease in Gini impurity.
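The mean decrease in Gini impurity aggregates, over all nodes and trees, the impurity reduction produced by each split on a feature. A minimal sketch of the single-split computation follows; in scikit-learn, for instance, the `feature_importances_` attribute of `RandomForestClassifier` reports the normalized total of such reductions, weighted by the fraction of samples reaching each node and averaged over the trees. The helper names and toy labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def gini_impurity(labels: Sequence[int]) -> float:
    """Gini impurity 1 - sum_k p_k^2 of the class labels in a node."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(parent: Sequence[int], left: Sequence[int], right: Sequence[int]) -> float:
    """Decrease in Gini impurity produced by splitting parent into left and right."""
    n = len(parent)
    return (gini_impurity(parent)
            - len(left) / n * gini_impurity(left)
            - len(right) / n * gini_impurity(right))


parent = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = phishing, 0 = legitimate (toy labels)
print(impurity_decrease(parent, [1, 1, 1, 1], [0, 0, 0, 0]))  # 0.5: perfect split
print(impurity_decrease(parent, [1, 1, 1, 0], [1, 0, 0, 0]))  # 0.125
```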

In detail, Fig. 4a shows the Random Forest feature importance plot obtained with our method. Each bar corresponds to the maximum importance of a given feature over the 100 models, while the yellow vertical line marks the corresponding mean; features are sorted by mean importance. Fig. 4b, instead, shows the global feature importance of the model as a SHAP bar plot, where each bar represents the mean absolute SHAP value of a feature across all samples, that is, its average contribution to the model's predictions, and features are ranked by this value. Both diagrams display the top 15 features.
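The two rankings can be derived from arrays as sketched below. The sketch assumes a (B, p) matrix of bootstrap importances for Fig. 4a and an (n_samples, p) matrix of SHAP values for the explained class for Fig. 4b; how that matrix is obtained from the SHAP library is not shown, and both function names are hypothetical.

```python
import numpy as np
from typing import List, Sequence, Tuple


def bootstrap_bar_data(
    importances: np.ndarray, names: Sequence[str], top: int = 15
) -> List[Tuple[str, float, float]]:
    """Fig. 4a-style data: (name, max over models, mean over models), sorted by mean."""
    mean = importances.mean(axis=0)
    max_ = importances.max(axis=0)
    order = np.argsort(mean)[::-1][:top]  # descending mean importance
    return [(names[j], float(max_[j]), float(mean[j])) for j in order]


def shap_global_importance(
    shap_values: np.ndarray, names: Sequence[str], top: int = 15
) -> List[Tuple[str, float]]:
    """Fig. 4b-style data: mean absolute SHAP value per feature, sorted descending."""
    mean_abs = np.abs(shap_values).mean(axis=0)
    order = np.argsort(mean_abs)[::-1][:top]
    return [(names[j], float(mean_abs[j])) for j in order]


imp = np.array([[0.5, 0.2, 0.3], [0.4, 0.25, 0.35]])
print(bootstrap_bar_data(imp, ["a", "b", "c"], top=2))
sv = np.array([[0.1, -0.5], [-0.3, 0.1]])
print(shap_global_importance(sv, ["a", "b"]))
```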

As can be seen, the explanations obtained with the two methods are rather similar. The three most influential features, namely PctExtNullSelfRedirectHyperlinksRT, PctExtHyperlinks and FrequentDomainNameMismatch, are the same, and the first two have much larger values than the remaining ones. In addition, two thirds of the 15 most important features identified by our method (ten out of 15) are also identified by the Shapley method.
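The agreement between the two rankings can be quantified as the overlap of their top-k sets. A minimal sketch with hypothetical feature names chosen so that 10 of the top 15 coincide, mimicking the situation described above; `top_k_overlap` is a hypothetical helper.

```python
from typing import Sequence, Tuple


def top_k_overlap(rank_a: Sequence[str], rank_b: Sequence[str], k: int = 15) -> Tuple[int, float]:
    """Number and fraction of features shared by the top-k of two rankings."""
    shared = set(rank_a[:k]) & set(rank_b[:k])
    return len(shared), len(shared) / k


ours = [f"f{i}" for i in range(1, 16)]                        # hypothetical ranking
shap_rank = ours[:10] + [f"g{i}" for i in range(1, 6)]        # 10 features in common
print(top_k_overlap(ours, shap_rank))  # 10 shared features, fraction 2/3
```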

Finally, we remark that computing the 15 importances displayed in Fig. 4a took about four minutes, whereas computing the 15 Shapley values displayed in Fig. 4b took about two hours and 19 minutes. All computations were performed on a laptop equipped with a 1.80 GHz Intel Core i7-10610U processor, 32 GB of RAM and a 512 GB SSD.
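Using the rounded timings reported above, the speed-up of our method over the SHAP computation on this hardware is roughly:

```latex
\frac{(2 \times 60 + 19)\ \mathrm{min}}{4\ \mathrm{min}} = \frac{139}{4} = 34.75 \approx 35
```

Since both timings are approximate, this ratio should be read as an order of magnitude rather than a precise figure.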

6 Conclusions and future research directions

In this paper we contributed to the field of explainable AI by providing a methodology that compares alternative explanations in terms of accuracy, complexity and robustness, without the extra computational effort required by model-agnostic procedures such as Shapley values.

The methodology is based on two main concepts: i) Lorenz Zonoids, to calculate the degree of concentration of the explanations of any given model (the higher the concentration, the less complex the model); ii) a bootstrap procedure to obtain confidence intervals for the explanations, represented as boxplots, which can be used to assess whether a model's explanation is significantly higher than the baseline.
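The bootstrap procedure can be sketched generically: resample the training rows with replacement, refit the model and record its built-in feature importances. In the sketch below, `fit_importance` is a hypothetical callable standing in for training a classifier and reading its importances (for a Random Forest, its mean decrease in Gini impurity); the toy importance used in the example, the normalized absolute difference of class means, is only a placeholder, and the data are synthetic.

```python
import numpy as np
from typing import Callable


def bootstrap_importances(
    X: np.ndarray,
    y: np.ndarray,
    fit_importance: Callable[[np.ndarray, np.ndarray], np.ndarray],
    n_boot: int = 100,
    seed: int = 0,
) -> np.ndarray:
    """Return an (n_boot, p) matrix with one importance vector per bootstrap sample."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    rows = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # draw n rows with replacement
        rows.append(fit_importance(X[idx], y[idx]))
    return np.vstack(rows)


def mean_gap_importance(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Placeholder importance: normalized |difference of class means| per feature."""
    gap = np.abs(X[y == 1].mean(axis=0) - X[y == 0].mean(axis=0))
    return gap / gap.sum()


rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
y = (X[:, 0] > 0).astype(int)  # only feature 0 determines the label
imp = bootstrap_importances(X, y, mean_gap_importance)
print(imp.shape, imp.mean(axis=0).round(2))
```

The resulting matrix is exactly the kind of input assumed by the boxplot and Gini sketches earlier in this section.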

The application of the methodology to a publicly available phishing dataset has shown its effectiveness in selecting the machine learning model which is not only the most accurate, but also the most robust and the least complex.

We remark that, since the proposed method is applied to highly accurate models, the distributions of explanations are stable enough to be studied and compared in terms of their parsimony. Parsimony, however, is not the only criterion for comparing explanations: it has to be coupled with specific considerations by experts in the domain being considered.

We also point out that, when the aim of the analysis is to make accurate predictions with simple models that allow rapid intervention, as is often the case in phishing detection, parsimony may be a sufficient criterion for deciding which model to prefer. Instead, when the aim is also to understand the mechanisms that lead to accurate predictions, as, for example, in detecting the gene expressions most likely to lead to a cell failure, a sparse model might be preferable to a concentrated one.

From a computational viewpoint, we underline that our approach requires bootstrapping, which is generally expensive, although not comparable to running post-processing model-agnostic explainability techniques such as Shapley values, as shown in our application. The advantage of our proposal is that the explainability measures we employ are already available for the models being considered, so no extra computational step, which is sometimes difficult to interpret, is needed.

As future research directions, we plan to extend our methodology to unstructured inputs. In addition, we will focus on adversarial attacks, investigating the impact of adversarial samples and devising methods to identify good adversarial samples in tabular data. This will extend our methodology towards an overall evaluation of the trustworthiness of machine learning applications in phishing website detection.

Data availability

No datasets were generated or analysed during the current study.

References

Adebowale M, Lwin K, Sánchez E, Hossain M (2019) Intelligent web-phishing detection and protection scheme using integrated features of images, frames and text. Expert Syst Appl 115:300–313

Babaei G, Giudici P, Raffinetti E (2025) A rank graduation box for SAFE Artificial Intelligence. Expert Syst Appl 259:125239

Basit A, Zafar M, Liu X, Javed A, Jalil Z, Kifayat K (2021) A comprehensive survey of AI-enabled phishing attacks detection techniques. Telecommun Syst 76(1):139–154

Bracke P, Datta A, Jung C, Shayak S (2019) Machine learning explainability in finance: an application to default risk analysis. Technical report, Staff Working Paper No. 816, Bank of England

Burkart N, Huber M (2021) A survey on the explainability of supervised machine learning. J Artif Intell Res 70:245–317

Calzarossa M, Giudici P, Zieni R (2023) Explainable machine learning for bag of words-based phishing detection. In: Longo L (ed) Explainable artificial intelligence. Communications in Computer and Information Science, vol 1901. Springer, Cham, pp 531–543

Calzarossa M, Giudici P, Zieni R (2024) Explainable machine learning for phishing feature detection. Qual Reliab Eng Int 40:362–373

Calzarossa M, Giudici P, Zieni R (2025) How robust are ensemble machine learning explanations? Neurocomputing

Capuano N, Fenza G, Loia V, Stanzione C (2022) Explainable artificial intelligence in cybersecurity: a survey. IEEE Access 10:93575–93600

Das A, Baki S, El Aassal A, Verma R, Dunbar A (2020) SoK: a comprehensive reexamination of phishing research from the security perspective. IEEE Commun Surv Tutor 22:671–708

European Commission (2020) White paper on artificial intelligence: a European approach to excellence and trust. COM/2020/65

Galego Hernandes P, Floret C, Cardozo De Almeida K, Da Silva V, Papa J, Pontara Da Costa K (2021) Phishing detection using URL-based XAI techniques. In: Proceedings of the IEEE symposium series on computational intelligence (SSCI). IEEE, Piscataway, pp 1–6

Garera S, Provos N, Chew M, Rubin A (2007) A framework for detection and measurement of phishing attacks. In: Proceedings of the ACM workshop on recurring malcode (WORM). ACM, New York, pp 1–8

Giudici P, Raffinetti E (2020) Lorenz model selection. J Classif 37(3):754–768

Giudici P, Raffinetti E (2021) Shapley-Lorenz explainable artificial intelligence. Expert Syst Appl 167(895):114104

Giudici P, Raffinetti E (2024) RGA: a unified measure of predictive accuracy. Adv Data Anal Classif. https://doi.org/10.1007/s11634-023-00574-2

Guidotti R, Monreale A, Ruggieri S, Turini F, Giannotti F, Pedreschi D (2018) A survey of methods for explaining black box models. ACM Comput Surv (CSUR) 51(5):1–42

Gupta B, Yadav K, Razzak I, Psannis K, Castiglione A, Chang X (2021) A novel approach for phishing URLs detection using lexical based machine learning in a real-time environment. Comput Commun 175:47–57

Jain A, Gupta B (2018) Towards detection of phishing websites on client-side using machine learning based approach. Telecommun Syst 68(4):687–700

Jalil S, Usman M, Fong A (2023) Highly accurate phishing URL detection based on machine learning. J Ambient Intell Humaniz Comput 14(7):9233–9251

Jha A, Muthalagu R, Pawar P (2023) Intelligent phishing website detection using machine learning. Multimed Tools Appl 82(19):29431–29456

Karim A, Shahroz M, Mustofa K, Belhaouari S, Joga S (2023) Phishing detection system through hybrid machine learning based on URL. IEEE Access 11:36805–36822

Koshevoy G, Mosler K (1996) The Lorenz Zonoid of a multivariate distribution. J Am Stat Assoc 91(434):873–882

Li Y, Yang Z, Chen X, Yuan H, Liu W (2019) A stacking model using URL and HTML features for phishing webpage detection. Future Gener Comput Syst 94:27–39

Lorenz M (1905) Methods of measuring the concentration of wealth. Publ Am Stat Assoc 9(70):209–219

Lundberg SM, Lee S-I (2017) A unified approach to interpreting model predictions. In: Guyon I, Luxburg UV, Bengio S, Wallach H, Fergus R, Vishwanathan S, Garnett R (eds) Proceedings of the 31st international conference on neural information processing systems (NIPS). Curran Associates, New York, pp 4768–4777

Miller T (2019) Explanation in artificial intelligence: insights from the social sciences. Artif Intell 267:1–38

Minh D, Wang X, Li F, Nguyen N (2022) Explainable artificial intelligence: a comprehensive review. Artif Intell Rev 55:3503–3568

Nadeem A, Vos D, Cao C, Pajola L, Dieck S, Baumgartner R, Verwer S (2023) SoK: explainable machine learning for computer security applications. In: Proceedings of the IEEE 8th European symposium on security and privacy (EuroS&P). IEEE, Piscataway, pp 221–240

Pandey P, Mishra N (2023) Phish-sight: a new approach for phishing detection using dominant colors on web pages and machine learning. Int J Inf Secur 22(4):881–891

Poddar S, Chowdhury D, Dwivedi A, Mukkamala R (2022) Data driven based malicious URL detection using explainable AI. In: Proceedings of the IEEE international conference on trust, security and privacy in computing and communications (TrustCom), pp 1266–1272

Rao R, Pais A (2019) Detection of phishing websites using an efficient feature-based machine learning framework. Neural Comput Appl 31(8):3851–3873

Sahingoz O, Buber E, Demir O, Diri B (2019) Machine learning based phishing detection from URLs. Expert Syst Appl 117:345–357

Shapley L (1953) A value for n-person games. In: Kuhn H, Tucker A (eds) Contributions to the theory of games II. Princeton University Press, Princeton, pp 307–317

Tan C (2018) Phishing dataset for machine learning: feature evaluation. Mendeley Data. https://doi.org/10.17632/h3cgnj8hft

Tupsamudre H, Singh A, Lodha S (2019) Everything is in the name - a URL based approach for phishing detection. In: Dolev S, Hendler D, Lodha S, Yung M (eds) Cyber security cryptography and machine learning, vol 11527. Lecture Notes in Computer Science. Springer, Cham, pp 231–248

Zhang Z, Al Hamadi H, Damiani E, Yeun C, Taher F (2022) Explainable artificial intelligence applications in cyber security: state-of-the-art in research. IEEE Access 10:93104–93139

Zieni R, Massari L, Calzarossa M (2023) Phishing or not phishing? A survey on the detection of phishing websites. IEEE Access 11:18499–18519

Acknowledgements

This study was funded by the European Union - NextGenerationEU, in the framework of the GRINS - Growing Resilient, INclusive and Sustainable (GRINS PE00000018). The views and opinions expressed are solely those of the authors and do not necessarily reflect those of the European Union, nor can the European Union be held responsible for them.

Funding

Open access funding provided by Università degli Studi di Pavia within the CRUI-CARE Agreement.

Author information


Contributions

All authors contributed equally to this work.


Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare that is relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Calzarossa, M.C., Giudici, P. & Zieni, R. An assessment framework for explainable AI with applications to cybersecurity. Artif Intell Rev 58, 150 (2025). https://doi.org/10.1007/s10462-025-11141-w
