Abstract

As people have become more aware of their legal rights and entitlement to complain, complaints about offensive odors and smell pollution have increased significantly. Consequently, monitoring gases and identifying their types and causes in real time has become a critical issue. In particular, toxic gases generated at industrial sites and odors in daily life are mixed gases composed of various chemicals, and understanding the types and characteristics of these mixtures is important in many areas. However, mixed gas classification is challenging because the data from gas sensor arrays are complex, nonlinear, and high-dimensional. In addition, obtaining sufficient training data is expensive. To overcome these challenges, this paper proposes a novel method for mixed gas classification based on analogous image representations with multiple sensor-specific channels and a convolutional neural network (CNN) classifier. The proposed method maps gas sensor array data into a multichannel image with data augmentation and then uses a CNN to extract features from these images. The proposed method was validated using public gas mixture data from the UCI machine learning repository and real laboratory experiments. The experimental results indicate that it outperforms existing classification approaches in terms of balanced accuracy and weighted F1 score. Additionally, we evaluated the performance of the proposed method under various experimental settings for data representation, data augmentation, and parameter initialization, so that practitioners can easily apply it to artificial olfactory systems.

References

Bagnall, A., Lines, J., Bostrom, A., Large, J., & Keogh, E. (2017). The great time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data mining and knowledge discovery, 31, 606–660.

Baydogan, M. G., Runger, G., & Tuv, E. (2013). A bag-of-features framework to classify time series. IEEE transactions on pattern analysis and machine intelligence, 35, 2796–2802.

Behera, B., & Kumaravelan, G., et al. (2019). Performance evaluation of deep learning algorithms in biomedical document classification, in 2019 11th International Conference on Advanced Computing (ICoAC), IEEE, pp. 220–224.

Carmel, L., Levy, S., Lancet, D., & Harel, D. (2003). A feature extraction method for chemical sensors in electronic noses. Sensors and Actuators B: Chemical, 93, 67–76.

Chen, Z., Chen, Z., Song, Z., Ye, W., & Fan, Z. (2019). Smart gas sensor arrays powered by artificial intelligence. Journal of Semiconductors, 40, 111601.

Deng, C., Lv, K., Shi, D., Yang, B., Yu, S., He, Z., & Yan, J. (2018). Enhancing the discrimination ability of a gas sensor array based on a novel feature selection and fusion framework. Sensors, 18, 1909.

Distante, C., Leo, M., Siciliano, P., & Persaud, K. C. (2002). On the study of feature extraction methods for an electronic nose. Sensors and Actuators B: Chemical, 87, 274–288.

Eklöv, T., Mårtensson, P., & Lundström, I. (1997). Enhanced selectivity of MOSFET gas sensors by systematical analysis of transient parameters. Analytica Chimica Acta, 353, 291–300.

Faleh, R., Othman, M., Gomri, S., Aguir, K., & Kachouri, A. (2016). A transient signal extraction method of WO3 gas sensors array to identify polluant gases. IEEE Sensors Journal, 16, 3123–3130.

Fawaz, H. I., Forestier, G., Weber, J., Idoumghar, L., & Muller, P.-A. (2019). Deep learning for time series classification: a review. Data Mining and Knowledge Discovery, 33, 917–963.

Feng, S., Farha, F., Li, Q., Wan, Y., Xu, Y., Zhang, T., & Ning, H. (2019). Review on smart gas sensing technology. Sensors, 19, 3760.

Ferri, C., Hernández-Orallo, J., & Modroiu, R. (2009). An experimental comparison of performance measures for classification. Pattern Recognition Letters, 30, 27–38.

Fonollosa, J., Rodríguez-Luján, I., Trincavelli, M., Vergara, A., & Huerta, R. (2014). Chemical discrimination in turbulent gas mixtures with mox sensors validated by gas chromatography-mass spectrometry. Sensors, 14, 19336–19353.

Fonollosa, J., Sheik, S., Huerta, R., & Marco, S. (2015). Reservoir computing compensates slow response of chemosensor arrays exposed to fast varying gas concentrations in continuous monitoring. Sensors and Actuators B: Chemical, 215, 618–629.

García, V., Mollineda, R. A., & Sánchez, J. S. (2009). Index of balanced accuracy: A performance measure for skewed class distributions, in Iberian conference on pattern recognition and image analysis, Springer, pp. 441–448.

Han, L., Yu, C., Xiao, K., & Zhao, X. (2019). A new method of mixed gas identification based on a convolutional neural network for time series classification. Sensors, 19, 1960.

Hasibi, R., Shokri, M., & Dehghan, M. (2019). Augmentation scheme for dealing with imbalanced network traffic classification using deep learning, arXiv preprint arXiv:1901.00204.

He, K., Zhang, X., Ren, S., & Sun, J. (2016a). Deep residual learning for image recognition, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778.

He, K., Zhang, X., Ren, S., & Sun, J. (2016b). Identity mappings in deep residual networks, in European conference on computer vision, Springer, pp. 630–645.

Jamal, M., Khan, M., Imam, S. A., & Jamal, A. (2010). Artificial neural network based e-nose and their analytical applications in various field, in 2010 11th International Conference on Control Automation Robotics & Vision, IEEE, pp. 691–698.

Jeni, L. A., Cohn, J. F., & De La Torre, F. (2013). Facing imbalanced data–recommendations for the use of performance metrics, in 2013 Humaine association conference on affective computing and intelligent interaction, IEEE, pp. 245–251.

Jia, Y., Yu, B., Du, M., & Wang, X. (2018). Gas Composition Recognition Based on Analyzing Acoustic Relaxation Absorption Spectra: Wavelet Decomposition and Support Vector Machine Classifier, in 2018 2nd International Conference on Electrical Engineering and Automation (ICEEA 2018), Atlantis Press, pp. 126–130.

Kalantar-Zadeh, K. (2013). Sensors: an introductory course, Springer Science & Business Media.

Karakaya, D., Ulucan, O., & Turkan, M. (2020). Electronic nose and its applications: a survey. International Journal of Automation and Computing, 17, 179–209.

Kim, E., Lee, S., Kim, J. H., Kim, C., Byun, Y. T., Kim, H. S., & Lee, T. (2012). Pattern recognition for selective odor detection with gas sensor arrays. Sensors, 12, 16262–16273.

Kingma, D. P., & Ba, J. (2014). Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980.

Laref, R., Losson, E., Sava, A., & Siadat, M. (2018). Support vector machine regression for calibration transfer between electronic noses dedicated to air pollution monitoring. Sensors, 18, 3716.

Moore, S., Gardner, J., Hines, E., Göpel, W., & Weimar, U. (1993). A modified multilayer perceptron model for gas mixture analysis. Sensors and Actuators B: Chemical, 16, 344–348.

Nakata, S., Akakabe, S., Nakasuji, M., & Yoshikawa, K. (1996). Gas sensing based on a nonlinear response: discrimination between hydrocarbons and quantification of individual components in a gas mixture. Analytical chemistry, 68, 2067–2072.

Oh, Y., & Kim, S. (2021). Multi-channel Convolution Neural Network for Gas Mixture Classification, in 2021 International Conference on Data Mining Workshops (ICDMW), IEEE, pp. 1094–1095.

O’Hara, S., & Draper, B. A. (2011). Introduction to the bag of features paradigm for image classification and retrieval, arXiv preprint arXiv:1101.3354.

Ohnishi, M. (1992). A molecular recognition system for odorants incorporating biomimetic gas-sensitive devices using Langmuir-Blodgett films. Sens. Mater, 4, 53–60.

Olson, M., Wyner, A. J., & Berk, R. (2018). Modern neural networks generalize on small data sets, in Proceedings of the 32nd International Conference on Neural Information Processing Systems, pp. 3623–3632.

Pancoast, S. & Akbacak, M. (2012). Bag-of-audio-words approach for multimedia event classification, in Thirteenth Annual Conference of the International Speech Communication Association.

Pashami, S., Lilienthal, A. J., & Trincavelli, M. (2012). Detecting changes of a distant gas source with an array of MOX gas sensors. Sensors, 12, 16404–16419.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., et al. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Peng, P., Zhao, X., Pan, X., & Ye, W. (2018). Gas classification using deep convolutional neural networks. Sensors, 18, 157.

Persaud, K., & Dodd, G. (1982). Analysis of discrimination mechanisms in the mammalian olfactory system using a model nose. Nature, 299, 352–355.

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., et al. (2015). Imagenet large scale visual recognition challenge. International journal of computer vision, 115, 211–252.

Schütze, H., Manning, C. D., & Raghavan, P. (2008). Introduction to information retrieval, vol. 39, Cambridge University Press.

Shorten, C., & Khoshgoftaar, T. M. (2019). A survey on image data augmentation for deep learning. Journal of Big Data, 6, 1–48.

Sokolova, M., & Lapalme, G. (2009). A systematic analysis of performance measures for classification tasks. Information processing & management, 45, 427–437.

Sujono, H. A., Rivai, M., & Amin, M. (2018). Asthma identification using gas sensors and support vector machine. Telkomnika, 16, 1468–1480.

Tan, C. W., Petitjean, F., Keogh, E., & Webb, G. I. (2019). Time series classification for varying length series, arXiv preprint arXiv:1910.04341.

Thammarat, P., Kulsing, C., Wongravee, K., Leepipatpiboon, N., & Nhujak, T. (2018). Identification of volatile compounds and selection of discriminant markers for elephant dung coffee using static headspace gas chromatography-Mass spectrometry and chemometrics. Molecules, 23, 1910.

Um, T. T., Pfister, F. M., Pichler, D., Endo, S., Lang, M., Hirche, S., Fietzek, U., & Kulić, D. (2017). Data augmentation of wearable sensor data for parkinson’s disease monitoring using convolutional neural networks, in Proceedings of the 19th ACM International Conference on Multimodal Interaction, pp. 216–220.

Wang, Q., Li, L., Ding, W., Zhang, D., Wang, J., Reed, K., & Zhang, B. (2019). Adulterant identification in mutton by electronic nose and gas chromatography-mass spectrometer. Food Control, 98, 431–438.

Wei, G., Li, G., Zhao, J., & He, A. (2019). Development of a LeNet-5 gas identification CNN structure for electronic noses. Sensors, 19, 217.

Xiaobo, Z., Jiewen, Z., Shouyi, W., & Xingyi, H. (2003). Vinegar classification based on feature extraction and selection from tin oxide gas sensor array data. Sensors, 3, 101–109.

Yan, J., Guo, X., Duan, S., Jia, P., Wang, L., Peng, C., & Zhang, S. (2015). Electronic nose feature extraction methods: A review. Sensors, 15, 27804–27831.

Yan, J., Tian, F., He, Q., Shen, Y., Xu, S., Feng, J., & Chaibou, K. (2012). Feature extraction from sensor data for detection of wound pathogen based on electronic nose. Sensors and Materials, 24, 57–73.

Yan, M., Tylczak, J., Yu, Y., Panagakos, G., & Ohodnicki, P. (2018). Multi-component optical sensing of high temperature gas streams using functional oxide integrated silica based optical fiber sensors. Sensors and Actuators B: Chemical, 255, 357–365.

Yang, J., Sun, Z., & Chen, Y. (2016). Fault detection using the clustering-kNN rule for gas sensor arrays. Sensors, 16, 2069.

Zhan, C., He, J., Pan, M., & Luo, D. (2021). Component Analysis of Gas Mixture Based on One-Dimensional Convolutional Neural Network. Sensors, 21, 347.

Zhang, Y., Jin, R., & Zhou, Z.-H. (2010). Understanding bag-of-words model: a statistical framework. International Journal of Machine Learning and Cybernetics, 1, 43–52.

Zhao, X., Wen, Z., Pan, X., Ye, W., & Bermak, A. (2019). Mixture gases classification based on multi-label one-dimensional deep convolutional neural network. IEEE Access, 7, 12630–12637.

Acknowledgements

This work was partly supported by an Institute of Information & Communications Technology Planning & Evaluation (IITP) Grant funded by the Korea Government (MSIT) (No. 2021-0-02068, Artificial Intelligence Innovation Hub) and a National Research Foundation of Korea (NRF) Grant funded by the Korea Government (MSIT) (NRF-2021R1F1A1061038).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A. The UCI dataset: data characteristics

I. Sensor information to measure gas mixture

The UCI data were collected using eight sensors, as shown in Table 10. The output of a MOX gas sensor follows the change in the resistance of its semiconductor oxide film, which occurs when the film reacts with gas molecules. Consequently, the response to a gas mixture cannot simply be expressed as a linear combination of the responses to the individual gases. To predict the type and concentration of the target gases accurately, a model must therefore reflect the characteristics inherent in mixed gases described earlier.

II. Details of data collection and experiments

The UCI dataset uses three types of gases: carbon monoxide (CO), methane, and ethylene. The experimental environment had two gas outlets: one outlet emitted 2500 ppm of ethylene, while the other emitted 1000 ppm of methane and 4000 ppm of CO. The emission rate of each outlet was varied, and the released gases were blown through turbines, so that a mixture of varying concentrations reached the sensors. The concentration levels N, L, M, and H for each gas are listed in Table 11. The generated gas mixture samples were classified into five classes, as shown in Table 12.

Appendix B. Real data: data characteristics

I. Sensor information to measure gas mixture

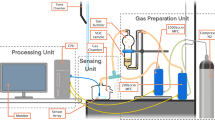

The data used in this study come from the Taesung Environmental Research Institute and were collected using an array of 11 gas sensors, as shown in Table 13. The array comprised two MOX sensors, one NDIR sensor, and eight electrochemical sensors.

The MOX sensor is based on the conductivity change of the gas-sensitive MOX semiconductor layer. The NDIR sensor recognizes gas molecules using infrared light: gas molecules absorb infrared radiation at specific wavelengths, which causes them to vibrate. The electrochemical sensor takes measurements by oxidizing or reducing the target gas when it enters the sensor.

II. Details of data collection and experiments

The data from the Taesung Environmental Research Institute were created by varying the injection volumes of three types of standard gases: dimethyl sulfide, butyl acetate, and toluene. Each standard gas was prepared at a concentration of 10,000 ppm using the following formula:

$$\begin{aligned} V_i[\mu l] = \frac{C_t[ppm] \times V_t[L] \times w_m[g/mol]}{22.4 \times 1000 \times d[g/ml]} \times \frac{p[\%]}{100}. \end{aligned}$$

Here, \(V_i\) is the injection volume, \(C_t\) is the target concentration, \(V_t\) is the target volume, \(w_m\) is the molecular weight of the injection gas, \(d\) is its density, and \(p\) is its purity. The gas was injected to match the concentration and volume of the target mixture, and the experiment was conducted as shown in Table 14.
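The injection-volume formula can be evaluated directly. The following is a minimal sketch, assuming that \(w_m\) is read as the molecular weight in g/mol and \(d\) as the density in g/ml, as the units in the formula suggest. The function name `injection_volume_ul`, the target volume, and the purity value are hypothetical; the toluene constants are approximate textbook values, not figures taken from the experiment.

```python
def injection_volume_ul(c_t_ppm, v_t_l, w_m, d, purity_pct):
    """Injection volume V_i in microlitres, following the formula above.

    c_t_ppm    : target concentration C_t [ppm]
    v_t_l      : target volume V_t [L]
    w_m        : molecular weight of the injected substance [g/mol]
    d          : density of the injected substance [g/ml]
    purity_pct : purity p [%]
    """
    return (c_t_ppm * v_t_l * w_m) / (22.4 * 1000.0 * d) * (purity_pct / 100.0)


# Illustrative call for toluene (about 92.14 g/mol, about 0.867 g/ml).
# The 10 L target volume and 99.5 % purity are assumptions for this sketch.
v_i = injection_volume_ul(c_t_ppm=10_000, v_t_l=10.0, w_m=92.14, d=0.867,
                          purity_pct=99.5)
print(f"injection volume: {v_i:.1f} uL")
```

The constant 22.4 is the molar volume of an ideal gas in L/mol at standard conditions, so the result is only as accurate as that approximation.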

Mixing 1 ml (0.001 l) of a standard gas generated by the above process with 10 l of nitrogen produces approximately 1 ppm of mixed gas. Several standard gases can be combined in this way to produce mixed gases with different proportions and concentrations.
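The dilution arithmetic can be checked with a short calculation. This sketch assumes ideal mixing by volume at equal temperature and pressure; the helper name `diluted_ppm` is hypothetical.

```python
def diluted_ppm(stock_ppm, stock_volume_l, diluent_volume_l):
    """Concentration after mixing a stock gas into a diluent (volume fraction)."""
    total_volume_l = stock_volume_l + diluent_volume_l
    return stock_ppm * stock_volume_l / total_volume_l


# 1 ml of 10,000 ppm standard gas mixed into 10 l of nitrogen
concentration = diluted_ppm(stock_ppm=10_000, stock_volume_l=0.001,
                            diluent_volume_l=10.0)
print(f"{concentration:.4f} ppm")  # close to 1 ppm
```

The result is slightly below 1 ppm because the injected millilitre also adds to the total volume, a difference that is negligible at this scale.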

A total of 100 samples were generated and classified into four classes according to the ratio of the injection amounts; the characteristics and sample counts of each class are listed in Table 15. The numbers in parentheses indicate the amount of standard gas injected during sample generation. For example, class 1 is a sample injected with 1000 ppm of dimethyl sulfide and 1000 ppm of toluene. In this study, we focused on the presence of a specific chemical in a gas mixture rather than on its ratio or amount. Therefore, in the case of class 4, diverse combinations of concentrations were treated as the same class.

Appendix C. Details of model architecture and learning strategy

I. Model architectures

There are several major differences between the ResNet models for ImageNet classification and the multichannel ResNet models for gas mixture classification. First, the input size and normalization parameters differ. ImageNet input images are cropped to \(224\times 224\) and normalized over three channels with mean=[0.485, 0.456, 0.406] and std=[0.229, 0.224, 0.225]. The proposed model uses a larger number of channels, resizes the input to a larger \(256\times 256\), and applies the same normalization to all channels: mean=\([0.5, \cdots , 0.5]\) and std=\([0.5, \cdots , 0.5]\).
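The per-channel normalization can be illustrated without any deep learning library. This is a minimal sketch, assuming that pixel values have already been scaled to the range [0, 1] (an assumption, not stated in the text); the function names are hypothetical.

```python
def normalize_pixel(value, mean=0.5, std=0.5):
    """Standardize one pixel value: (value - mean) / std."""
    return (value - mean) / std


def normalize_image(image, mean=0.5, std=0.5):
    """Apply the same mean and std to every channel.

    image is a nested list indexed as [channel][row][column].
    """
    return [[[normalize_pixel(v, mean, std) for v in row] for row in channel]
            for channel in image]


# Toy input: 2 channels, 1 row, 2 columns
toy = [[[0.0, 1.0]], [[0.5, 0.25]]]
print(normalize_image(toy))
```

With mean 0.5 and std 0.5, values in [0, 1] are mapped to [-1, 1], so every sensor channel is placed on the same scale regardless of how many channels there are.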

The first layer is changed because of the larger input: it aggregates the multichannel input to the designated output dimension. It also uses a smaller kernel size and stride, so the proposed architecture does not reduce the spatial size of the input after the first convolutional layer. The standard ResNet downsamples at this stage to reduce the image size and computation; in our case, we accepted the more expensive operations because the number of samples and features is small. In addition, the output size of the last fully connected layer was changed to the number of classes, from 1000 labels in ImageNet to four or five labels in our cases, which is a common modification in transfer learning. In short, we changed the major components of the model to fit our datasets and tasks.
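The effect of the first convolutional layer on the spatial size follows from the standard convolution output-size formula. The sketch below compares the usual ResNet stem (a 7×7 kernel with stride 2 and padding 3) with a hypothetical 3×3, stride 1, padding 1 layer. The exact kernel size and stride of the proposed model are not given in this appendix, so the second configuration is only an assumption chosen to show a layer that preserves the input size.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# Standard ResNet stem on a 224 x 224 ImageNet crop: halves the resolution
imagenet_out = conv_output_size(224, kernel=7, stride=2, padding=3)

# Hypothetical smaller kernel and stride on a 256 x 256 input: size preserved
gas_out = conv_output_size(256, kernel=3, stride=1, padding=1)

print(imagenet_out, gas_out)  # 112 256
```

Keeping the full resolution after the first layer increases the cost of every later layer, which is the trade-off described above.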

II. Training parameter settings

Five-fold cross-validation was used to avoid overfitting. The models were trained for 50 epochs with a batch size of 4 and the Adam optimizer, using a learning rate of \(10^{-6}\) and a weight decay of \(10^{-8}\). The weighted cross-entropy loss function was used, and the best model was selected based on the minimum loss on the validation set.
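A weighted cross-entropy loss can be sketched in plain Python as follows. The appendix does not state how the class weights were chosen, so the inverse-frequency weights used here are an assumption, and both function names are hypothetical. The loss is averaged by the sum of the applied weights, which is one common convention.

```python
import math


def weighted_cross_entropy(logits, targets, class_weights):
    """Weighted mean of per-sample cross-entropy.

    logits        : list of per-sample score lists (one score per class)
    targets       : list of true class indices
    class_weights : list with one weight per class
    """
    total, weight_sum = 0.0, 0.0
    for row, y in zip(logits, targets):
        m = max(row)  # subtract the maximum for numerical stability
        log_z = m + math.log(sum(math.exp(v - m) for v in row))
        total += class_weights[y] * (log_z - row[y])
        weight_sum += class_weights[y]
    return total / weight_sum


def inverse_frequency_weights(targets, n_classes):
    """Assumed weighting scheme: n_samples / (n_classes * class_count)."""
    n = len(targets)
    counts = [targets.count(c) for c in range(n_classes)]
    return [n / (n_classes * c) if c else 0.0 for c in counts]


labels = [0, 0, 0, 1]                      # imbalanced toy labels
weights = inverse_frequency_weights(labels, n_classes=2)
scores = [[2.0, 0.0], [1.5, 0.5], [0.2, 0.1], [0.0, 1.0]]
print(weights, weighted_cross_entropy(scores, labels, weights))
```

Rare classes receive larger weights, so errors on them contribute more to the loss than errors on frequent classes.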

Appendix D. The UCI dataset: Model Performances

(I) Machine learning models with the bag-of-features representation

(II) Comparison of noise-added data augmentation

(III) CNN models with the analogous image matrix representation

Appendix E. Real data: Model Performances

(I) Machine learning models with the bag-of-features representation

(II) Comparison of noise-added data augmentation

(III) CNN models with the analogous image matrix representation

Appendix F. The bag-of-features approach

The bag-of-features (BoF) approach represents complex objects with feature vectors of sub-objects. The idea originated as the bag-of-words (BoW) model developed for document classification: a codebook is defined, and each document is represented as a histogram in which each bin counts the occurrences of one code word in the document. Although they ignore word order, BoW models are still very effective in capturing document information because they capture code word frequencies well (Zhang et al., 2010; Schütze et al., 2008). BoF is also a well-known dictionary-based feature extraction method for time-series classification (O’Hara & Draper 2011; Pancoast & Akbacak 2012; Baydogan et al., 2013). Furthermore, BoF can be easily applied when the observations have different sequence lengths (Tan et al., 2019).

Feature selection and feature extraction are common approaches to analyzing high-dimensional data. We implemented a BoF representation for multivariate time series, which maps the original data to lower-dimensional features. In this study, the BoF representation served as the baseline for evaluating the proposed approach. Each dataset contained N samples of the gas sensor array. In contrast to the image transformation, we used the individual observations in the gas sensor array as features: \({\mathbf {X}} =\left[ {\mathbf {z}}_1\; {\mathbf {z}}_2\;\cdots \; {\mathbf {z}}_T\right] \), where \({\mathbf {z}}_j= (x_{1j}, \ldots , x_{Mj})^T\), \(j=1,\ldots ,T\). Here, we use \({\mathbf {z}}_j\) as a sample for the BoF.

To build a codebook, the vectors \({\mathbf {z}}_j\) are quantized using a clustering algorithm; we trained a K-means clustering model g with \(K=128\). K-means clustering generates K centroids, and each vector \({\mathbf {z}}_j\) is assigned the index of the centroid closest to it. In other words, \(g ({\mathbf {z}}_j) = k\), where \(j \in \left\{ 1, \cdots , T\right\} \) and \(k \in \left\{ 1, \cdots , K\right\} \). The centroid indices then serve as the code words for each sample. Each centroid can be seen as a “word” in the dictionary and each sample as a “document,” which has a different set of words. We used the frequency ratio of each centroid index as a feature of the samples. In this approach, K is the size of the codebook, which can affect the classifier model, but the effect of the codebook size was not investigated in this research.

We used a sufficiently large K to represent the characteristics of each sample. Following this approach, each sample was transformed into a K-dimensional vector: the original data \(\mathbf{X} \) became a feature vector \(\mathbf{f} \) of size K.
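The transformation from \(\mathbf{X}\) to \(\mathbf{f}\) can be sketched as follows, assuming the K centroids have already been obtained from K-means. The function names and the toy centroids are hypothetical, the example uses \(K=2\) rather than the \(K=128\) used in the study, and centroid indices start at 0 here rather than 1.

```python
def nearest_centroid(z, centroids):
    """Index of the centroid closest to vector z (squared Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(z, centroids[k])))


def bof_features(X, centroids):
    """Map an M x T sensor matrix to a K-dimensional frequency-ratio vector.

    X is a list of M rows (one per sensor), each holding T time steps.
    """
    T = len(X[0])
    counts = [0] * len(centroids)
    for j in range(T):
        z_j = [row[j] for row in X]          # column j: one observation z_j
        counts[nearest_centroid(z_j, centroids)] += 1
    return [c / T for c in counts]           # frequency ratio per code word


# Toy sample: M = 2 sensors, T = 4 time steps, K = 2 centroids
X = [[0.0, 0.1, 1.0, 0.9],
     [0.0, 0.2, 1.0, 1.1]]
centroids = [[0.0, 0.0], [1.0, 1.0]]
f = bof_features(X, centroids)
print(f)  # [0.5, 0.5]
```

Because the counts are divided by T, samples with different sequence lengths still produce feature vectors of the same size and scale.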

Through this process, we could extract K-dimensional features from the \(M \times T\) matrix. Once the features had been extracted using the BoF representation, we used logistic regression (LR), support vector machine (SVM), naive Bayes (NB), K-nearest neighbor (KNN), decision tree (DT), random forest (RF), extreme gradient boosting (XGBoost), light gradient boosting machine (LGBM), and multilayer perceptron (MLP) models to classify the samples.

Cite this article

Oh, Y., Lim, C., Lee, J. et al. Multichannel convolution neural network for gas mixture classification. Ann Oper Res 339, 261–295 (2024). https://doi.org/10.1007/s10479-022-04715-2

DOI: https://doi.org/10.1007/s10479-022-04715-2