Abstract

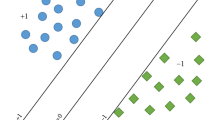

In this paper, a novel unconstrained convex minimization formulation of the Lagrangian dual of the recently introduced twin support vector machine (TWSVM), in a simpler form, is proposed for constructing binary classifiers. Since the objective functions of the modified minimization problems contain the non-smooth ‘plus’ function, we solve them by the Newton iterative method, either by considering their generalized Hessian matrices or by replacing the ‘plus’ function with a smooth approximation function. Numerical experiments were performed on a number of real-world benchmark data sets. The computational results illustrate the effectiveness and applicability of the proposed approach: comparable or better generalization performance with faster learning speed is obtained in comparison with SVM, least squares TWSVM (LS-TWSVM) and TWSVM.

Acknowledgments

The authors are thankful to the anonymous reviewers for their comments.

Cite this article

Balasundaram, S., Gupta, D. & Prasad, S.C. A new approach for training Lagrangian twin support vector machine via unconstrained convex minimization. Appl Intell 46, 124–134 (2017). https://doi.org/10.1007/s10489-016-0809-8

DOI: https://doi.org/10.1007/s10489-016-0809-8