Abstract

Existing work on Automated Negotiations commonly assumes that the negotiators’ utility functions have explicit closed-form expressions and can be calculated quickly. In many real-world applications, however, the calculation of utility can be a complex, time-consuming problem, and utility functions cannot always be expressed in terms of simple formulas. The game of Diplomacy forms an ideal test bed for research on Automated Negotiations in such domains where utility is hard to calculate. Unfortunately, developing a full Diplomacy player is a hard task, which requires more than just the implementation of a negotiation algorithm. The performance of such a player may depend heavily on its underlying strategy rather than just on its negotiation skills. Therefore, we introduce a new Diplomacy-playing agent, called D-Brane, which has won the first international Computer Diplomacy Challenge. It is built in a modular fashion, decoupling its negotiation algorithm from its game-playing strategy, so that future researchers can build their own negotiation algorithms on top of its strategic module. This will allow them to easily compare the performance of different negotiation algorithms. We show that D-Brane strongly outplays a number of previously developed Diplomacy players, even when it does not apply negotiations. Furthermore, we explain the negotiation algorithm applied by D-Brane and present a number of additional tools, bundled together in the new BANDANA framework, that will make the development of Diplomacy-playing agents easier.

Notes

We refer to https://www.wizards.com/avalonhill/rules/diplomacy.pdf for a complete description of the rules.

In a real-life game this is achieved by letting each player first secretly write down his orders on a piece of paper; only once everyone has done so are the orders revealed.

An invincible pair for provinces (p,q) is considered a value for the variable corresponding to p, where p is lower than q in some predefined ordering of the Supply Centers.

Remember that \(plan(\nu)\) is a set of battle plans, and each battle plan is a set of orders. So by “the orders in \(plan(\nu)\)” we actually mean the orders in the battle plans in \(plan(\nu)\).

For readers more familiar with Diplomacy: we mean that the players declare a draw whenever a game reaches the Winter 1920 phase.

To be precise: this result was obtained in the case where one instance of DipBlue was negotiating with one instance of a slightly more simplistic agent called ‘Naive’, against five DumbBots. When two instances of DipBlue were playing, their result was worse than 3.57.

In this model we consider hold orders as a special kind of move-to order, for which the destination is the current location of the unit: \(p = loc(u)\).



Appendices

Appendix A:

Our experiments are largely implemented using the DipGame framework. However, we have implemented a couple of new tools on top of DipGame to make experimentation easier. We have combined these tools into a new extension of DipGame, that we call BANDANA (BAsic eNvironment for Diplomacy playing Automated Negotiating Agents). It includes the following components:

- A new negotiation server.
- A new negotiation language, which we find simpler to use than DipGame’s default language.
- A Notary agent, for the implementation of the Unstructured Negotiation Protocol, as explained in Section 4.
- Several example agents, including D-Brane and DumbBot.
- The strategic component of D-Brane.
- Example code that shows how one can implement a negotiating agent on top of D-Brane’s strategic component.
- An Adjudicator which, given a set of orders for all units, determines which of those orders are successful.
- A Game Builder, which allows users to set up a customized board configuration. This can be useful for testing.

The BANDANA framework can be downloaded from: http://www.iiia.csic.es/~davedejonge/bandana.

More details about BANDANA’s negotiation language and its other tools can be found in the manual, which can also be downloaded from the same address.

Appendix B:

We here give a more thorough definition of the one-shot game \(Dip_{\epsilon}\). We claim that \(Dip_{\epsilon}\) can be modeled as a COG, and that a single round of Diplomacy can be seen as an instance of the negotiation game \(N(Dip_{\epsilon})\).

Definition 9

Let \(\epsilon\) denote a configuration of units on the Diplomacy map. Then \(Dip_{\epsilon}\) is defined by the tuple:

Here, \(Gr\) is a symmetric graph, of which the vertices are called provinces. The set of provinces is denoted \(Prov\) and we use the notation \(adj(p,q)\) to state that provinces p and q are adjacent in the graph. The set of Supply Centers \(SC\) is a subset of \(Prov\). The set \(Pl^{Dip}\) represents the 7 players: \(Pl^{Dip} = \{\alpha_{1}, \dots, \alpha_{7}\}\). For each player \(\alpha_i\) there is a finite set \(Units_i\), which we call the set of units owned by \(\alpha_i\). These sets are all disjoint: \(i\neq j \Rightarrow Units_{i} \cap Units_{j} = \emptyset\). The set of all units is denoted: \(\mathit{Units} = \bigcup_{i=1}^{7}\mathit{Units_{i}}\). The state \(\epsilon\) of the game implicitly defines an injective function \(loc : Units \rightarrow Prov\) that assigns a province (the location of u) to any unit u. In order to define the utility functions \(f^{Dip_{\epsilon}}_{i}\) we first need to define several other concepts.
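As an illustration only, the components of Definition 9 can be captured in a small Python data structure. This is a sketch under our own assumptions, not part of DipGame or BANDANA: provinces and units are plain strings, `adj` stores the graph \(Gr\) as an adjacency map, and the class `DipState` and its `validate` method are hypothetical names. The checks mirror the stated requirements: \(Gr\) is symmetric, \(SC \subseteq Prov\), the sets \(Units_i\) are pairwise disjoint, and \(loc\) is an injective map into \(Prov\). A toy map may of course have fewer than seven players.

```python
from dataclasses import dataclass


@dataclass
class DipState:
    """Toy Diplomacy map plus a configuration epsilon of units (illustrative).

    adj:   province -> set of adjacent provinces (the graph Gr)
    sc:    the Supply Centers SC
    units: player -> set of units owned by that player (Units_i)
    loc:   unit -> province where that unit stands
    """
    adj: dict[str, set[str]]
    sc: set[str]
    units: dict[str, set[str]]
    loc: dict[str, str]

    def validate(self) -> None:
        """Raise ValueError if the structure violates Definition 9."""
        provinces = set(self.adj)  # Prov
        # Gr must be symmetric: adj(p, q) implies adj(q, p).
        for p, neighbours in self.adj.items():
            for q in neighbours:
                if p not in self.adj.get(q, set()):
                    raise ValueError(f"graph is not symmetric: {p} -> {q}")
        if not self.sc <= provinces:
            raise ValueError("every Supply Center must be a province")
        # The sets Units_i must be pairwise disjoint.
        all_units: set[str] = set()
        for owned in self.units.values():
            if all_units & owned:
                raise ValueError(f"units owned twice: {all_units & owned}")
            all_units |= owned
        # loc must be a total, injective function from Units to Prov.
        if set(self.loc) != all_units:
            raise ValueError("loc must be defined exactly on the set of all units")
        if not set(self.loc.values()) <= provinces:
            raise ValueError("every unit must stand on a province")
        if len(set(self.loc.values())) != len(self.loc):
            raise ValueError("two units share a province (loc is not injective)")
```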

Given the state \(\epsilon\) we can define the set of possible orders \(Ord^{\epsilon}\):

The orders in \(Mto^{\epsilon}\) are called move-to orders (see Footnote 11) and the orders in \(Sup^{\epsilon}\) are called support orders. We use the notation \(Ord_{u}^{\epsilon}\) to denote the subset of \(Ord^{\epsilon}\) consisting of all possible orders for a given unit u.

Furthermore, we will use \(Ord_{i}^{\epsilon}\) to denote the set of possible orders for any unit of player \(\alpha_i\):

and for a set of players \(C \subset Pl\) we define:

We use similar notation conventions for other sets, such as \(Units\), \(Mto^{\epsilon}\) and \(Sup^{\epsilon}\).
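The order sets can be enumerated in the same illustrative style. The sketch below uses a hypothetical tuple encoding, `("mto", u, p)` for a move-to order and `("sup", u, u2)` for unit u supporting unit u2, and treats a hold as a move-to order whose destination is \(loc(u)\), as in Footnote 11. For \(Sup^{\epsilon}\) the code assumes that u may support any other unit u2 that has a move-to order into a province adjacent to \(loc(u)\), which matches the validity condition of Definition 11. All function names are hypothetical.

```python
def move_to_orders(adj, loc):
    """Mto^eps: (u, p) for every unit u and every p adjacent to loc(u),
    plus the hold order (u, loc(u)), treated as a move-to order."""
    return {("mto", u, p) for u, here in loc.items() for p in adj[here] | {here}}


def support_orders(adj, loc):
    """Sup^eps (assumed): (u, u2) whenever u2 != u has some move-to order
    (u2, p) whose destination p is adjacent to loc(u)."""
    mto = move_to_orders(adj, loc)
    return {("sup", u, u2)
            for u in loc
            for (_, u2, p) in mto
            if u2 != u and p in adj[loc[u]]}


def orders_of_unit(adj, loc, u):
    """Ord_u^eps: all possible orders for unit u (its name is component 1)."""
    all_orders = move_to_orders(adj, loc) | support_orders(adj, loc)
    return {o for o in all_orders if o[1] == u}


def orders_of_player(adj, loc, units_i):
    """Ord_i^eps: the union of Ord_u^eps over the units of player i."""
    return set().union(*(orders_of_unit(adj, loc, u) for u in units_i))
```

On a line-shaped map a–b–c with one unit on a and one on b, this yields five move-to orders (three for the unit on b, two for the unit on a) and two support orders.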

An action \(\mu_i\) for a player \(\alpha_i\) in \(Dip_{\epsilon}\) is then defined as a set of orders, containing exactly one order for each of \(\alpha_i\)’s units:
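Since an action contains exactly one order per unit, the actions of a player are exactly the elements of the Cartesian product of the sets \(Ord_u^{\epsilon}\) over that player's units. A minimal Python sketch, with the hypothetical helpers `actions` and `submitted_orders` (the latter computing \(\hat{\mu}\) of Definition 10 as the union of all actions in a profile), and orders encoded as tuples whose second component names the ordered unit:

```python
from itertools import product


def actions(orders_per_unit):
    """Yield every action mu_i: a set with exactly one order per unit.

    orders_per_unit maps each unit u of the player to Ord_u^eps.
    """
    units = sorted(orders_per_unit)
    for choice in product(*(sorted(orders_per_unit[u]) for u in units)):
        yield frozenset(choice)


def submitted_orders(profile):
    """hat-mu: the set of all orders submitted in an action profile."""
    return frozenset().union(*profile)
```

The number of actions is the product of the sizes of the sets \(Ord_u^{\epsilon}\), so exhaustive enumeration is only practical for a handful of units.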

Definition 10

If \(\alpha_i\) plays an action \(\mu_i\) that contains order o then we say that \(\alpha_i\) submits the order o. If \(\mu = (\mu_1, \mu_2, \dots, \mu_7)\) is an action profile then \(\hat{\mu}\) denotes the set of all orders submitted by all players:

Definition 11

A support order \((u,u^{\prime }) \in Sup^{\epsilon }\) is considered valid, for an action profile μ, if \(\hat {\mu }\) contains a move-to order \((u^{\prime }, p) \in Mto^{\epsilon }\) where p is adjacent to the location of u. This is denoted by the predicate \(val(\mu , u,u^{\prime })\).

The rules of Diplomacy specify that players may only submit support orders that are valid.

Definition 12

Given an action profile μ, a support \((u,u^{\prime })\in Sup_{u}^{\epsilon }\) in \(\hat {\mu }\) is said to be cut if \(\hat {\mu }\) also contains a move-to order that moves to the location of u:

Definition 13

The set of successful supports of u in an action profile μ is defined as those orders that support u, and that are valid and not cut:
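Definitions 11 to 13 translate almost literally into code. The sketch below is an illustration under stated assumptions rather than the BANDANA Adjudicator: it uses the hypothetical tuple encoding `("mto", u, p)` / `("sup", u, u2)`, represents \(\hat{\mu}\) as a plain set of orders, and follows the simplified cut rule of Definition 12 (any move-to order into \(loc(u)\) cuts u's support), which is coarser than the full Diplomacy rules.

```python
def is_valid(submitted, adj, loc, u, u2):
    """val(mu, u, u2): hat-mu contains a move-to order (u2, p) with p
    adjacent to loc(u)."""
    return any(o[0] == "mto" and o[1] == u2 and o[2] in adj[loc[u]]
               for o in submitted)


def is_cut(submitted, loc, u):
    """A support given by u is cut if hat-mu contains a move-to order
    into loc(u)."""
    return any(o[0] == "mto" and o[2] == loc[u] for o in submitted)


def successful_supports(submitted, adj, loc, u2):
    """Support orders (u, u2) in hat-mu that support u2, are valid and
    are not cut."""
    return {o for o in submitted
            if o[0] == "sup" and o[2] == u2
            and is_valid(submitted, adj, loc, o[1], u2)
            and not is_cut(submitted, loc, o[1])}
```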

Definition 14

The force s(μ,u,p) exerted by unit u on province p is defined as:

Definition 15

We say a player \(\alpha_i\) conquers a province p if \(\alpha_i\) has a unit that exerts more force on p than any other unit:

Finally, we can define the utility function \(f^{Dip_{\epsilon}}_{i}\) for a player \(\alpha_i\) as the number of Supply Centers he or she conquers:
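The force, conquest and utility definitions can be sketched in the same way. For \(s(\mu,u,p)\) the code assumes the standard Diplomacy rule: a unit exerts force 1 plus its number of successful supports on the province it moves to (or holds in), and force 0 on every other province. It also adds the guard that a unit exerting no force conquers nothing, which only matters on a map with a single unit. The function names and the tuple encoding are hypothetical.

```python
def force(submitted, adj, loc, u, p):
    """s(mu, u, p), assumed: 1 + number of successful supports of u if u
    has a move-to order into p (a hold counts as moving into loc(u)),
    otherwise 0."""
    if ("mto", u, p) not in submitted:
        return 0
    supports = 0
    for o in submitted:
        if o[0] != "sup" or o[2] != u:
            continue
        supporter = o[1]
        # Valid: u moves into p, which must be adjacent to the supporter.
        valid = p in adj[loc[supporter]]
        # Cut: some move-to order targets the supporter's location.
        cut = any(x[0] == "mto" and x[2] == loc[supporter] for x in submitted)
        if valid and not cut:
            supports += 1
    return 1 + supports


def conquers(submitted, adj, loc, owner, player, p):
    """conq(mu, i, p): some unit of `player` exerts strictly more force on
    p than every other unit (and a positive force)."""
    forces = {u: force(submitted, adj, loc, u, p) for u in loc}
    for u, f in forces.items():
        if owner[u] == player and f > 0 and all(
                f > g for v, g in forces.items() if v != u):
            return True
    return False


def utility(submitted, adj, loc, owner, sc, player):
    """f_i^{Dip_eps}: the number of Supply Centers conquered by `player`."""
    return sum(conquers(submitted, adj, loc, owner, player, p) for p in sc)
```

With two units of equal force moving into the same province, neither conquers it, which reproduces the usual Diplomacy standoff.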

A natural way to play Diplomacy is to determine for each Supply Center separately whether, and how, it can be conquered. However, the decision of how to attack one Supply Center may restrict the possibilities to attack another Supply Center if the same units are involved. This is the essence of a COG. Therefore, we will now show how \(Dip_{\epsilon}\) can be modeled as a COG.

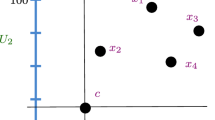

We define the set of units of player \(\alpha_i\) involved in province p as those units that may move to p, hold in p, support a unit holding in or moving to p, or that may cut any opponent unit that could give support to another opponent unit holding in p or moving to p. This set is denoted by \(Units_{p,i}\). More precisely, it consists of all units next to or inside p, and all units located next to an opponent’s unit that is located next to p:

Definition 16

The set of units of player \(\alpha_i\) involved in province p, denoted \(Units_{p,i}\), is defined as:
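Definition 16 can be sketched as a set computation on the adjacency map. This is an illustration under the same hypothetical string-based encoding; `owner` maps each unit to its player, and `involved_units` is a name chosen here, not an existing API. It follows the informal description: units of the player in or next to p, plus units of the player next to an opponent unit that is itself next to p (and could therefore support a hostile move into p).

```python
def involved_units(adj, loc, owner, player, p):
    """Units_{p,i}: units of `player` located in or next to p, plus units
    of `player` located next to an opponent unit that is next to p."""
    near_p = {p} | adj[p]
    mine = {u for u in loc if owner[u] == player}
    direct = {u for u in mine if loc[u] in near_p}
    # Opponent units next to p could support a hostile move into p.
    opponent_supporters = {v for v in loc
                           if owner[v] != player and loc[v] in adj[p]}
    # Our units next to such an opponent unit could cut that support.
    cutters = {u for u in mine
               if any(loc[u] in adj[loc[v]] for v in opponent_supporters)}
    return direct | cutters
```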

We model \(Dip_{\epsilon}\) as a COG by defining a micro-game \(Dip_{\epsilon,p}\) for each Supply Center \(p \in SC\). An action in such a micro-game consists of a set of orders containing at most one order for each unit of player \(\alpha_i\) involved in Supply Center p:

Note that in this definition a player is not required to submit an order for each unit involved in p, because that unit may instead be used to attack or defend another province \(p^{\prime}\).

This definition implies that there are binary constraints between the micro-games, because a unit may be involved in more than one province. Two actions \(\mu_{p,i}\) and \(\mu_{q,i}\) are incompatible if, for some unit involved in both provinces, two different orders are submitted. That is, \(\mu_{p,i}\) and \(\mu_{q,i}\) are compatible iff the following restriction holds:
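The compatibility restriction between micro-game actions only ever compares two actions at a time, which is what makes the constraints binary. In this sketch, a micro-game action is a set of order tuples whose second component is the ordered unit (a hypothetical encoding), and the helper names are hypothetical. Two actions are compatible when every unit ordered in both receives the same order; a unit ordered in only one of them imposes no constraint.

```python
def unit_orders(action):
    """Map each unit to the order it receives in a micro-game action,
    enforcing that no unit receives more than one order."""
    by_unit = {}
    for order in action:
        unit = order[1]
        if unit in by_unit:
            raise ValueError(f"unit {unit} receives more than one order")
        by_unit[unit] = order
    return by_unit


def compatible(action_p, action_q):
    """True iff every unit ordered in both actions gets the same order."""
    a, b = unit_orders(action_p), unit_orders(action_q)
    return all(a[u] == b[u] for u in a.keys() & b.keys())
```

A choice of one action per Supply Center is then consistent iff every pair of chosen actions is compatible.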

We define the utility function of the micro-game \(Dip_{\epsilon,p}\) to be:

Here, \(\mu_p\) is an action profile in the micro-game \(Dip_{\epsilon,p}\). The question whether \(conq(\mu,i,p)\) holds only depends on the orders submitted for the units involved in p. Therefore, if \(\hat{\mu}_{p}\) is a subset of \(\hat{\mu}\) then \(conq(\mu_p,i,p)\) holds iff \(conq(\mu,i,p)\) holds, which means that (5) and (7) are consistent with (1).

In the following, we use the notation \(Units_C\), for a set of players C (a ‘coalition’), to denote the union of the units of those players:

Definition 17

Let C denote a coalition containing player \(\alpha_i\). Then a battle plan β for \(\alpha_i\) and Supply Center p is a set of orders \(\beta \subset Ord^{\epsilon}\) of the form:

with \(u \in Units_i\), where \(Supports \subseteq Sup_{C}^{\epsilon}\) is a (possibly empty) set of support orders \((u^{\prime}, u)\) that support u, and \(Cuts \subseteq Mto_{C}^{\epsilon}\) is a (possibly empty) set of orders that aim to cut any opponent unit that may support a hostile move into p:

Furthermore, we have the restriction that β can contain at most one order for each unit:

The set of all battle plans for player α i on Supply Center p is denoted \(B_{i,p}^{\epsilon }\).
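A checker for the shape of a battle plan (Definition 17) is sketched below. The exact conditions on Supports and Cuts are our reading of the text and should be treated as assumptions: each support must come from a coalition unit that supports the main unit u and is adjacent to p (so that it is valid in the sense of Definition 11), and each cut must be a move-to order by a coalition unit into the location of a non-coalition unit adjacent to p. The function name and the tuple encoding are hypothetical.

```python
def is_battle_plan(plan, adj, loc, owner, coalition, player, p):
    """Check (under stated assumptions) that `plan` has the form
    {(u, p)} | Supports | Cuts for `player`, `coalition` and province p."""
    units = [o[1] for o in plan]
    if len(units) != len(set(units)):
        return False  # at most one order per unit
    mains = [o for o in plan if o[0] == "mto" and o[2] == p]
    if len(mains) != 1:
        return False  # exactly one unit moves into (or holds in) p
    _, u, _ = mains[0]
    if owner[u] != player or not (loc[u] == p or p in adj[loc[u]]):
        return False  # the main unit must belong to the player and reach p
    for kind, x, y in plan:
        if owner[x] not in coalition:
            return False  # every order must be given to a coalition unit
        if kind == "sup":
            # Support (x, u): x supports the main unit and is next to p.
            if y != u or p not in adj[loc[x]]:
                return False
        elif (kind, x, y) != mains[0]:
            # Cut: x moves into the location of an opponent unit next to p.
            targets = {loc[v] for v in loc
                       if owner[v] not in coalition and loc[v] in adj[p]}
            if y not in targets or y not in adj[loc[x]]:
                return False
    return True
```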

Definition 18

Given a set of deals X, an invincible plan is a battle plan \(\beta \in B_{i,p}^{\epsilon}\), for some province p and some player \(\alpha_i\), such that for every action profile that satisfies X and in which all orders of β are submitted, \(\alpha_i\) conquers p. That is, β is invincible iff the following holds:

Furthermore, we can determine all invincible pairs:

Definition 19

Given a set of deals X, an invincible pair is a pair of battle plans \((\beta_{1}, \beta_{2}) \in B_{i,p}^{\epsilon} \times B_{i,q}^{\epsilon}\) for two provinces p, q and some player \(\alpha_i\) that guarantees that player \(\alpha_i\) conquers at least one of p and q:
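Invincibility (Definitions 18 and 19) quantifies over all action profiles that satisfy the deals X and contain the plan. On a toy scale this can be checked by brute force, as in the sketch below; it is an exhaustive check for illustration, not a description of how D-Brane computes invincible plans. The names are hypothetical: deals are passed as a predicate `satisfies_deals` on the set of submitted orders, `conquers_p` and `conquers_q` stand in for \(conq(\mu,i,p)\) and \(conq(\mu,i,q)\) (for instance the force-based rules of Definitions 14 and 15), and `free_orders` lists the candidate orders of every unit.

```python
from itertools import product


def completions(plan, free_orders):
    """Yield every set of submitted orders that contains all orders of
    `plan` and gives exactly one candidate order to each unit not ordered
    by `plan`. free_orders maps each unit to its candidate orders (tuples
    whose second component is the ordered unit)."""
    planned = {order[1] for order in plan}
    free = sorted(u for u in free_orders if u not in planned)
    for choice in product(*(sorted(free_orders[u]) for u in free)):
        yield frozenset(plan) | frozenset(choice)


def is_invincible(plan, free_orders, satisfies_deals, conquers_p):
    """Definition 18: every profile that satisfies the deals and contains
    the plan lets the player conquer p."""
    return all(conquers_p(s)
               for s in completions(plan, free_orders)
               if satisfies_deals(s))


def is_invincible_pair(plan_p, plan_q, free_orders, satisfies_deals,
                       conquers_p, conquers_q):
    """Definition 19: every profile that satisfies the deals and contains
    both plans lets the player conquer at least one of p and q.
    Assumes plan_p and plan_q never give different orders to one unit."""
    joint = frozenset(plan_p) | frozenset(plan_q)
    return all(conquers_p(s) or conquers_q(s)
               for s in completions(joint, free_orders)
               if satisfies_deals(s))
```

For example, if a single opponent unit can block either p or q but not both, neither plan is invincible on its own without deals, while the pair is; a deal that forbids the opponent from moving into p makes the plan for p invincible by itself.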


Cite this article

de Jonge, D., Sierra, C. D-Brane: a diplomacy playing agent for automated negotiations research. Appl Intell 47, 158–177 (2017). https://doi.org/10.1007/s10489-017-0919-y
