Abstract

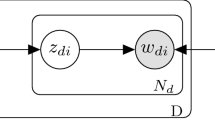

Biterm Topic Model (BTM) is an effective topic model proposed to handle short texts. However, its standard Gibbs sampling inference method (StdBTM) takes much more time than the standard Gibbs sampling inference method of Latent Dirichlet Allocation (LDA), StdLDA. To solve this problem, we propose in this paper two time-efficient Gibbs sampling inference methods for BTM, SparseBTM and ESparseBTM, which trade off space consumption against time consumption. The idea of SparseBTM is to reduce the computation in StdBTM by both recycling intermediate results and exploiting the sparsity of the count matrix \(\mathbf{N}^{\mathbf{T}}_{\mathbf{W}}\). Theoretically, SparseBTM reduces the time complexity of StdBTM from \(O(|B|K)\) to \(O(|B|K_w)\), where \(|B|\) is the number of biterms; the cost therefore scales linearly with the sparsity of \(\mathbf{N}^{\mathbf{T}}_{\mathbf{W}}\), measured by \(K_w\), instead of with the number of topics \(K\) (\(K_w < K\), where \(K_w\) is the average number of non-zero topics per word type in \(\mathbf{N}^{\mathbf{T}}_{\mathbf{W}}\)). Experimental results show that under favorable conditions SparseBTM is approximately 18 times faster than StdBTM. ESparseBTM is a more time-efficient Gibbs sampling inference method built on SparseBTM. Its idea is to eliminate further computation by recycling more intermediate results, which it achieves by rearranging the biterm sequence. In theory, ESparseBTM reduces the time complexity of SparseBTM from \(O(|B|K_w)\) to \(O(R|B|K_w)\), where \(0 < R < 1\) is the ratio of the number of biterm types to the number of biterms. Experimental results show that ESparseBTM improves the time efficiency of SparseBTM by between 6.4% and 39.5%, depending on the dataset.
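
To make the \(O(|B|K)\) versus \(O(|B|K_w)\) contrast concrete, the following Python sketch assumes the collapsed Gibbs conditional usually given for BTM, \(p(z_b = k \mid \mathbf{z}_{-b}) \propto (n_k + \alpha)\,(n_{w_i|k} + \beta)(n_{w_j|k} + \beta) / (2n_k + M\beta)^2\), where \(n_k\) is the number of biterms assigned to topic \(k\), \(n_{w|k}\) is taken to be an entry of \(\mathbf{N}^{\mathbf{T}}_{\mathbf{W}}\), and \(M\) is the vocabulary size. `dense_weights` evaluates all \(K\) topics, as a StdBTM-style sampler must. `sparse_sample` draws from the same distribution by expanding \((a+\beta)(b+\beta) = ab + \beta(a+b) + \beta^2\) into buckets, so that in the common case only the non-zero topics of \(w_i\) and \(w_j\) are visited. This bucketing is borrowed from SparseLDA-style samplers and is an illustration only, not the paper's SparseBTM; all function names are hypothetical, and the counts are assumed to already exclude the biterm being resampled.

```python
import random

def build_counts(biterms, z, K, M):
    """n_k[k]: biterms in topic k; n_wk[w]: dict {topic: count} with non-zero entries only.
    (A full sampler would also delete entries whose count drops to zero.)"""
    n_k = [0] * K
    n_wk = [dict() for _ in range(M)]
    for (wi, wj), k in zip(biterms, z):
        n_k[k] += 1
        for w in (wi, wj):  # a biterm contributes one token of each of its words
            n_wk[w][k] = n_wk[w].get(k, 0) + 1
    return n_k, n_wk

def coefficients(n_k, alpha, beta, M):
    """coef[k] = (n_k + alpha) / (2 n_k + M beta)^2, using sum_w n_{w|k} = 2 n_k."""
    return [(n + alpha) / (2 * n + M * beta) ** 2 for n in n_k]

def dense_weights(wi, wj, n_wk, coef, beta):
    """StdBTM-style: one unnormalised weight per topic, O(K) work per biterm."""
    a, b = n_wk[wi], n_wk[wj]
    return [c * (a.get(k, 0) + beta) * (b.get(k, 0) + beta) for k, c in enumerate(coef)]

def sparse_buckets(wi, wj, n_wk, coef, beta):
    """Buckets touching only topics where n_{wi|k} or n_{wj|k} is non-zero."""
    a, b = n_wk[wi], n_wk[wj]
    q = [(k, coef[k] * a[k] * b[k]) for k in a if k in b]
    r = [(k, coef[k] * beta * (a.get(k, 0) + b.get(k, 0))) for k in sorted(set(a) | set(b))]
    return q, r

def pick(terms, u):
    """Walk a bucket until the residual mass u is used up."""
    for k, w in terms:
        u -= w
        if u < 0:
            return k
    return terms[-1][0]  # guard against floating-point round-off

def sparse_sample(wi, wj, n_wk, coef, s, beta, rng):
    """Sample a topic; s = beta^2 * sum(coef) is the smoothing bucket, cached by the caller."""
    q, r = sparse_buckets(wi, wj, n_wk, coef, beta)
    q_mass = sum(w for _, w in q)
    r_mass = sum(w for _, w in r)
    u = rng.random() * (q_mass + r_mass + s)
    if u < q_mass:
        return pick(q, u)
    u -= q_mass
    if u < r_mass:
        return pick(r, u)
    u -= r_mass
    # Rare fallback: the smoothing bucket is dense, so this branch costs O(K).
    return pick([(k, beta * beta * c) for k, c in enumerate(coef)], u)

# Toy usage: 5 biterms over a 5-word vocabulary and K = 4 topics.
K, M, alpha, beta = 4, 5, 0.5, 0.01
biterms = [(0, 1), (0, 2), (1, 2), (3, 4), (0, 1)]
z = [0, 0, 1, 2, 0]
n_k, n_wk = build_counts(biterms, z, K, M)
coef = coefficients(n_k, alpha, beta, M)
s = beta * beta * sum(coef)
q, r = sparse_buckets(0, 1, n_wk, coef, beta)
print(sum(dense_weights(0, 1, n_wk, coef, beta)), s + sum(w for _, w in q) + sum(w for _, w in r))
print(sparse_sample(0, 1, n_wk, coef, s, beta, random.Random(0)))
```

The two printed normalisers agree, which is the point of the decomposition. In a full sampler, `coef` and `s` would be updated in \(O(1)\) for the two topics whose counts change after each draw rather than recomputed, which is where any saving over the dense loop would come from.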

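ESparseBTM's gain depends on \(R\), the ratio of biterm types to biterms. The following Python sketch computes \(R\) and shows one way a biterm sequence could be rearranged so that identical biterm types are adjacent. It rests on an assumption not stated in the abstract: that intermediate results for a biterm type can be reused across consecutive occurrences of that type, so they need recomputing only once per run of identical types. Under that assumption, grouping reduces the number of runs to the number of types, \(R|B|\). The function names and the stable sort used for grouping are hypothetical choices, not the paper's procedure.

```python
def biterm_type(b):
    """Biterms are unordered word pairs, so (w1, w2) and (w2, w1) share a type."""
    wi, wj = b
    return (min(wi, wj), max(wi, wj))

def type_ratio(biterms):
    """R = number of distinct biterm types / number of biterms."""
    return len({biterm_type(b) for b in biterms}) / len(biterms)

def group_by_type(biterms):
    """Rearrange so equal types are adjacent; sorted() is stable, keeping order within a type."""
    return sorted(biterms, key=biterm_type)

def count_runs(biterms):
    """Maximal runs of consecutive biterms sharing a type (points where cached work is redone)."""
    runs, prev = 0, None
    for b in biterms:
        t = biterm_type(b)
        if t != prev:
            runs, prev = runs + 1, t
    return runs

biterms = [(0, 1), (2, 3), (1, 0), (2, 3), (0, 1), (4, 5)]
print(type_ratio(biterms), count_runs(biterms), count_runs(group_by_type(biterms)))
# prints: 0.5 6 3
```

In a real sampler the counts change after every draw, so whatever is reused within a run must either stay valid or be updated incrementally; the sketch only counts recomputation points and does not sample.
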

Additional information

This work was supported by the National Natural Science Foundation of China (NSFC) under Grant Nos. 61170092, 61133011 and 61103091.

About this article

Cite this article

Zhou, X., Ouyang, J. & Li, X. Two time-efficient gibbs sampling inference algorithms for biterm topic model. Appl Intell 48, 730–754 (2018). https://doi.org/10.1007/s10489-017-1004-2

DOI: https://doi.org/10.1007/s10489-017-1004-2