Abstract

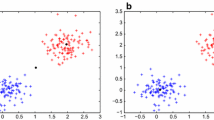

This paper has two contributions. First, we introduce a clustering basic benchmark. Second, we study the performance of k-means using this benchmark. Specifically, we measure how the performance depends on four factors: (1) overlap of clusters, (2) number of clusters, (3) dimensionality, and (4) unbalance of cluster sizes. The results show that overlap is critical, and that k-means starts to work effectively when the overlap reaches 4% level.

Similar content being viewed by others

Notes

References

Forgy E (1965) Cluster analysis of multivariate data: efficiency vs. interpretability of classification. Biometrics 21:768

MacQueen J (1967) Some methods for classification and analysis of multivariate observations. In: Berkeley symposium on mathematical statistics and probability, volume 1: statistics. University of California Press, Berkeley, pp 281–297

Lloyd SP (1982) Least squares quantization in PCM. IEEE Trans Inf Theory 28(2):129–137

Jain AK (2010) Data clustering: 50 years beyond K-means. Pattern Recogn Lett 31:651–666

Krishna K, Murty MN (1999) Genetic k-means algorithm. IEEE Trans Syst Man Cybern Part B 29 (3):433–439

Fränti P (2000) Genetic algorithm with deterministic crossover for vector quantization. Pattern Recogn Lett 21(1):61–68

Fränti P, Kivijärvi J (2000) Randomized local search algorithm for the clustering problem. Pattern Anal Applic 3(4):358–369

Steinley D, Brusco MJ (2007) Initializing k-means batch clustering: a critical evaluation of several techniques. J Classif 24:99–121

Steinbach M, Ertöz L, Kumar V (2003) The challenges of clustering high dimensional data. New Vistas in statistical physics – applications in econophysics, bioinformatics, and pattern recognition. Springer

Morissette L, Chartier S (2013) The k-means clustering technique: general considerations and implementation in Mathematica. Tutor Quantitative Methods Psychol 9(1):15–24

Liang J, Bai L, Dang C, Cao F (2012) The k-means-type algorithms versus imbalanced data distributions. IEEE Trans Fuzzy Syst 20(4):728–745

Luxburg U, Williamson RC, Guyon I (2012) Clustering: science or art. J Mach Learn Res 27:65–79

Zhao Q, Fränti P (2014) WB-index: a sum-of-squares based index for cluster validity. Data Knowl Eng 92:77–89

Zoubi M, Rawi M (2008) An efficient approach for computing silhouette coefficients. J Comput Sci 4 (3):252–255

Fränti P, Virmajoki O, Hautamäki V (2006) Fast agglomerative clustering using a k-nearest neighbor graph. IEEE Trans Pattern Anal Mach Intell 28(11):1875–1881

Zhang RR, Livny M (1997) BIRCH: a new data clustering algorithm and its applications. Data Min Knowl Disc 1(2):141–182

Kärkkäinen I, Fränti P Dynamic local search algorithm for the clustering problem, Research Report A-2002-6

Fränti P, Virmajoki O (2006) Iterative shrinking method for clustering problems. Pattern Recogn 39 (5):761–765

Fränti P, Mariescu-Istodor R, Zhong C (2016) XNN graph. In: IAPR Joint int. workshop on structural, syntactic, and statistical pattern recognition merida, Mexico, LNCS 10029, pp 207– 217

Rezaei M, Fränti P (2016) Set-matching methods for external cluster validity. IEEE Trans Knowl Data Eng 28(8):2173– 2186

Maitra R, Melnykov V (2010) Simulating data to study performance of finite mixture modeling and clustering algorithms. J Comput Graph Stat 19(2):354–376

Beyer K, Goldstein J, Ramakrishnan R, Shaft U (1999) When is “nearest neighbor” meaningful. In: Int Conf on database theory, pp 217–235

Chavez E, Navarro G (2001) A probabilistic spell for the curse of dimensionality. Workshop on Algorithm Engineering and Experimentation LNCS 2153:147–160

Tomasev N, Radovanovi M, Mladeni D, Ivanovi M (2014) The role of hubness in clustering high-dimensional data. IEEE Trans Knowl Data Eng 26(3):739–751

Radovanovic M, Nanopoulos A, Ivanovic M (2010) Hubs in space: popular nearest neighbors in high-dimensional data. J Mach Learn Res 11:2487–2531

Dasgupta S (2007) The hardness of k-means clustering, Technical Report CS2007-0890. University of California, San Diego

Mahajan M, Nimbhorkar P, Varadarajan K (2012) The planar k-means problem is NP-hard. Theor Comput Sci 442:13–21

Fränti P, Rezaei M, Zhao Q (2014) Centroid index: cluster level similarity measure. Pattern Recogn 47(9):3034–3045

Steinley D, Brusco MJ, Hubert L (2016) The variance of the adjusted rand index. Psychol Methods 21 (2):261–272

Kanungo T, Mount DM, Netanyahu N, Piatko C, Silverman R, Wu AY (2004) A local search approximation algorithm for k-means clustering. Comput Geom Theory Appl 28:89–112

Li SC, Ng YK, Zhang L (2008) A PTAS for the k-consensus structures problem under squared euclidean distance. Algorithms 1(2):43–51

Awasthi P, Charikar M, Krishnaswamy R, Sinop AK (2015) The hardness of approximation of euclidean k-means. In: Int. Symp. on computational geometry (SoCG). Eindhoven

Arthur D, Vassilvitskii S (2007) K-means+ +: the advantages of careful seeding. In: ACM-SIAM Symp. on discrete algorithms (SODA), New Orleans, 1027-1035 January

Ball GH, Hall DJ (1967) A clustering technique for summarizing multivariate data. Syst Res Behav Sci 12 (2):153–155

Bradley P, Fayyad U (1998) Refining initial points for k-means clustering. In: Int. Conf. on machine learning. San Francisco, pp 91-99

Steinley D (2003) Local optima in k-means clustering: what you don’t know may hurt you. Psychol Methods 8:294–304

Gonzalez T (1985) Clustering to minimize the maximum intercluster distance. Theor Comput Sci 38 (2–3):293–306

Tezuka S, Equyer P (1991) Efficient portable combined Tausworthe random number generators. ACM Trans Model Comput Simul 1:99–112

Peña J, Lozano JA, Larrañaga P (1999) An empirical comparision of four initialization methods for the k-means algorithm. Pattern Recogn Lett 20(10):1027–1040

Mallah C, Cope J, Orwell J (2013) Plant leaf classification using probabilistic integration of shape, texture and margin features. Signal Process Pattern Recogn Appl

Frey PW, Slate DJ (1991) Letter recognition using Holland-style adaptive classifiers. Mach Learn 6 (2):161–182

Yan D, Huang L, Jordan MI (2009) Fast approximate spectral clustering. ACM SIGKDD Int Conf on knowledge discovery and data mining, 907–916

Xiong H, Wu J, Chen J (2009) K-means clustering versus validation measures: a data distribution perspective. IEEE Trans Syst Man Cybern Part B 39(2):318–331

Zhou K, Yang S (2016) Exploring the uniform effect of FCM clustering: a data distribution perspective. Knowl-based Syst 96:76–83

Hirsch JE (2005) An index to quantify an individual’s scientific research output. PNAS 102(46):16569–72

Ben-Hur A, Horn D, Siegelmann HT, Vapnik V (2001) Support vector clustering. J Mach Learn Res 2:125–137

Balcan M-F, Blum A, Vempala S (2008) A discriminative framework for clustering via similarity functions. ACM Symposium on theory of computing, 671–680

Ackerman M, Ben-David S, Brânzei S, Loker D (2012) Weighted clustering. AAAI Conf on artificial intelligence, 858–863

Biçici E, Yuret D (2007) Locally scaled density based clustering. In: Int. Conf. on adaptive and natural computing algorithms. Springer, pp 739–748

Pelleg D, Moore AW (2000) X-means: extending k-means with efficient estimation of the number of clusters. Int Conf on machine learning, 1

Hinneburg A, Keim DA (1999) Optimal grid-clustering: towards breaking the curse of dimensionality in high-dimensional clustering. Int Conf on very large databases, 506–517

Domeniconi C, Gunopulos D, Ma S, Yan B, Al-Razgan M, Papadopoulos D (2007) Locally adaptive metrics for clustering high dimensional data. Data Min Knowl Disc 14(1):63–97

Bellman R (1961) Adaptive control processes: a guided tour. Princeton University Press

Aggarwal CC, Hinneburg A, Keim DA (2001) On the surprising behavior of distance metrics in high dimensional spaces. In: Int. conf. on database theory, LNCS vol 1973. Springer, pp 420– 434

Sieranoja S, Fränti P (2018) Random projection for k-means clustering. In: Int. Conf. artificial intelligence and soft computing (ICAISC). Zakopane

Hartigan JA, Wong MA (1979) Algorithm AS 136: a k-means clustering algorithm. J R Statist Soc C 28 (1):100–108

Erisoglu M, Calis N, Sakallioglu S (2011) A new algorithm for initial cluster centers in k-means algorithm. Pattern Recogn Lett 32(14):1701–1705

Fränti P (2018) Efficiency of random swap clustering. J Big Data 5:13:1–29

Duda RO, Hart PE (1973) Pattern classification and scene analysis. Wiley, New York

Kinnunen T, Sidoroff I, Tuononen M, Fränti P (2011) Comparison of clustering methods: a case study of text-independent speaker modeling. Pattern Recogn Lett 32(13):1604–1617

Huang X, Zhang L, Wang B, Li F, Zhang Z (2018) Feature clustering based support vector machine recursive feature elimination for gene selection. Appl Intell 48:594–607

Ultsch A (2005) Clustering with SOM: U*C, workshop on self-organizing maps. Paris, pp 75–82

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Fränti, P., Sieranoja, S. K-means properties on six clustering benchmark datasets. Appl Intell 48, 4743–4759 (2018). https://doi.org/10.1007/s10489-018-1238-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-018-1238-7