Abstract

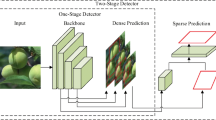

When a robot picks green fruit under natural light, the color of the fruit is similar to that of the background, and uneven lighting and occlusion by fruit and leaves often degrade the performance of the detection method. Taking green mangoes as the experimental object, we propose a lightweight green mango detection method based on YOLOv3, called Light-YOLOv3. First, to improve detection speed, we combine the color, texture, and shape features of green mangoes to design a lightweight network unit that replaces the residual units in YOLOv3. Second, an improved multiscale context aggregation (MSCA) module concatenates multilayer features and makes predictions, which addresses the insufficient position and semantic information on the prediction feature map in YOLOv3 and effectively improves the detection of green mangoes. Third, to handle overlapping green mangoes, soft non-maximum suppression (Soft-NMS) replaces non-maximum suppression (NMS), reducing the number of predicted boxes that are lost when mangoes overlap. Finally, we propose an auxiliary image enhancement algorithm for green mango inspection (CLAHE-Mango), which is suited to low-brightness detection environments and improves the accuracy of the detection method. The experimental results show that the F1 score of Light-YOLOv3 on the test set is 97.7%. To verify the performance of Light-YOLOv3 on embedded platforms, we deploy one-stage methods on the Adreno 640 and Mali-G76 platforms. Compared with YOLOv3, the F1 score of Light-YOLOv3 is increased by 4.5% and the running speed is increased by a factor of 5, which meets the real-time requirements of picking robots. Three sets of comparative experiments show that our method gives the best detection results in dense, backlit, direct-light, night, long-distance, and special-angle scenes under complex lighting.
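To make the Soft-NMS step concrete, the following is a minimal pure-Python sketch of the Gaussian variant described by Bodla et al. It is an illustration only and not the authors' implementation: the abstract does not say which decay function (linear or Gaussian) Light-YOLOv3 uses, and the function names `iou` and `soft_nms`, the `(x1, y1, x2, y2)` box format, and the default values of `sigma` and `score_threshold` are all assumptions made for this example.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS (hypothetical helper, assumed defaults).

    Returns a list of (box_index, rescored_confidence) in selection order.
    Instead of discarding boxes that overlap the current best box, their
    scores are decayed by exp(-IoU^2 / sigma); a box is dropped only when
    its decayed score falls below score_threshold.
    """
    candidates = list(enumerate(scores))  # (original index, current score)
    kept = []
    while candidates:
        # Select the remaining box with the highest current score.
        best_pos = max(range(len(candidates)), key=lambda i: candidates[i][1])
        best_idx, best_score = candidates.pop(best_pos)
        kept.append((best_idx, best_score))

        # Decay the scores of the remaining boxes according to their overlap.
        rescored = []
        for idx, score in candidates:
            overlap = iou(boxes[best_idx], boxes[idx])
            score *= math.exp(-(overlap ** 2) / sigma)
            if score >= score_threshold:
                rescored.append((idx, score))
        candidates = rescored
    return kept


if __name__ == "__main__":
    # Two heavily overlapping boxes and one isolated box (made-up values).
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    for index, score in soft_nms(boxes, scores):
        print(index, round(score, 4))
```

In this made-up example the second box overlaps the first with an IoU of about 0.68. Hard NMS with a typical IoU threshold of 0.5 would delete it outright, whereas the sketch keeps it with a reduced score, which is the behavior that helps when two mangoes genuinely overlap.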

Acknowledgments

The authors are grateful for collaborative funding support from the Natural Science Foundation of Shandong Province, China (ZR2018MEE008) and the Key Research and Development Program of Shandong Province, China (2017GSF20115).

Cite this article

Xu, ZF., Jia, RS., Sun, HM. et al. Light-YOLOv3: fast method for detecting green mangoes in complex scenes using picking robots. Appl Intell 50, 4670–4687 (2020). https://doi.org/10.1007/s10489-020-01818-w

DOI: https://doi.org/10.1007/s10489-020-01818-w