Abstract

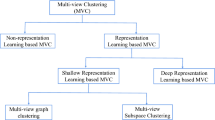

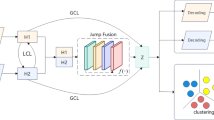

Multiple views of the same data can complement each other and provide more comprehensive information than a single view. A few unsupervised multi-view representation learning methods take both the discrepancies between views and their complementary information into account, but they generally ignore the discriminant information within each view. Learning a meaningful shared representation of multiple views therefore remains challenging. To address this difficulty, this paper proposes a novel unsupervised multi-view representation learning model, MRL. Unlike most state-of-the-art multi-view representation learning methods, which can be used for either clustering or classification but not both, our method explores a proximity guided representation within each view and uses the resulting discriminative fused representation for both multi-label classification and clustering. MRL consists of three parts. The first part learns a deep representation for each view, which aims to capture the latent view-specific discriminant characteristics. The second part builds a proximity guided dynamic routing that preserves inner features of each view, such as direction and location. The third part, GCCA-based fusion, exploits the maximum correlations among multiple views based on Generalized Canonical Correlation Analysis (GCCA). To the best of our knowledge, MRL could be one of the first unsupervised multi-view representation learning models that combine proximity guided dynamic routing with GCCA. MRL is tested on five multi-view datasets for two different tasks. In multi-label classification, the results show that our model is superior to state-of-the-art multi-view learning methods in precision, recall, F1 and accuracy. In clustering, it performs better than recent popular related algorithms. The variation of performance with respect to the dimensionality of G is also examined to explore the characteristics of MRL.
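To make the GCCA-based fusion step more concrete, the following is a minimal NumPy sketch of the standard MAX-VAR formulation of GCCA. It is an illustration under stated assumptions, not the authors' implementation: the function name `gcca`, the ridge term `reg`, and the convention that G denotes the shared representation of dimensionality `r` are all assumptions made here, and the deep per-view networks and the proximity guided dynamic routing of MRL are not modeled.

```python
import numpy as np

def gcca(views, r, reg=1e-6):
    """MAX-VAR GCCA sketch (hypothetical helper, not the paper's code).

    views: list of arrays, each of shape (n_samples, d_j)
    r:     dimensionality of the shared representation G
    reg:   small ridge term, assumed here for numerical stability
    Returns G of shape (n_samples, r) and a list of projections U_j (d_j, r).
    """
    n = views[0].shape[0]
    M = np.zeros((n, n))
    maps = []
    for X in views:
        Xc = X - X.mean(axis=0)                          # center each view
        C_inv = np.linalg.inv(Xc.T @ Xc + reg * np.eye(Xc.shape[1]))
        W = C_inv @ Xc.T                                 # ridge least-squares map, (d_j, n)
        M += Xc @ W                                      # (regularized) projector onto view j
        maps.append(W)
    M = (M + M.T) / 2                                    # enforce exact symmetry for eigh
    _, vecs = np.linalg.eigh(M)                          # eigenvalues in ascending order
    G = vecs[:, ::-1][:, :r]                             # top-r eigenvectors, so G^T G = I
    U = [W @ G for W in maps]                            # U_j: ridge fit of G from view j
    return G, U
```

Calling `gcca([X1, X2, X3], r=2)` on three views with the same number of rows returns a shared representation `G` with orthonormal columns; varying `r` is one way to study how downstream performance depends on the dimensionality of G.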


Acknowledgements

Partially supported by the National Natural Science Foundation of China (Grant No. 61872260), the Key Research and Development Program International Cooperation Project of Shanxi Province of China (Grant No. 201703D421013), and the Institute Level Research Fund Project of Shanxi Energy College (Grant Nos. ZY-2017002 and SY-2018004).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors take the position that data mining based on AI technologies is meaningful for social applications and people's lives. In this paper, they study multi-view data mining and design a fusion representation learning method for multi-view data. The paper aims to present a brief description of the model and to explain how it might be used to improve multi-view fusion learning. The authors also plan to design higher-level multi-view representation learning methods in future studies and analyses. It is hoped that the preliminary findings from these follow-up studies will be ready for presentation.

About this article

Cite this article

Zheng, T., Ge, H., Li, J. et al. Unsupervised multi-view representation learning with proximity guided representation and generalized canonical correlation analysis. Appl Intell 51, 248–264 (2021). https://doi.org/10.1007/s10489-020-01821-1

DOI: https://doi.org/10.1007/s10489-020-01821-1