Abstract

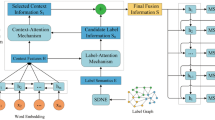

Multi-label text classification (MLTC) is an important task that aims to assign multiple labels to each given text. Labels in a dataset are usually correlated, yet traditional machine learning methods tend to ignore these correlations. To capture the dependencies between labels, the sequence-to-sequence (Seq2Seq) model has been applied to MLTC. Because a Seq2Seq model is wrongly penalized when it generates correct labels in an order that differs from the reference, a deep reinforced sequence-to-set (Seq2Set) model was subsequently proposed. However, label generation in the Seq2Set model still relies on a sequence decoder, so it cannot eliminate the influence of the predefined label order or of exposure bias. We therefore propose an MLTC model with latent word-wise label information (MLC-LWL), which constructs word-wise label information using a labeled topic model and combines the label information carried by each word with label context information through a gated network. With this word-wise label information, our model captures label correlations via a label-to-label structure and is unaffected by the predefined label order or exposure bias. Extensive experiments demonstrate the effectiveness of our model and its significant advantages over state-of-the-art methods.

Acknowledgments

This research is partially supported by the National Natural Science Foundation of China (No. U1711263).

Cite this article

Chen, Z., Ren, J. Multi-label text classification with latent word-wise label information. Appl Intell 51, 966–979 (2021). https://doi.org/10.1007/s10489-020-01838-6

DOI: https://doi.org/10.1007/s10489-020-01838-6