Abstract

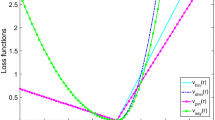

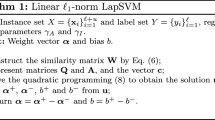

The insufficiency and contamination of supervision information are the main factors limiting the performance of support vector machines (SVMs) in real-world applications. To address this issue, novel correntropy loss functions are combined with the Laplacian SVM (LapSVM) for robust semi-supervised classification. Correntropy loss functions have already been used in robust learning with promising results; however, the potential of more diverse priors has not been extensively explored. In this paper, a correntropy loss function induced by the Laplace kernel, called LK-loss, is incorporated into LapSVM to construct a robust semi-supervised classifier. Several properties of LK-loss are established, including robustness, symmetry, boundedness, Fisher consistency, and asymptotic approximation behavior. Moreover, an asymmetric version of LK-loss is introduced to further improve performance. The concave-convex procedure (CCCP) is used to handle the non-convexity of the Laplace kernel-induced correntropy loss functions iteratively. Experimental results show that in most cases the proposed methods generalize better than the compared methods, demonstrating the feasibility and effectiveness of the proposed semi-supervised classification framework.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 11471010 and 11271367). The authors also sincerely thank the referees and the editor for their constructive comments; their suggestions improved the paper significantly.

About this article

Cite this article

Dong, H., Yang, L. & Wang, X. Robust semi-supervised support vector machines with Laplace kernel-induced correntropy loss functions. Appl Intell 51, 819–833 (2021). https://doi.org/10.1007/s10489-020-01865-3

DOI: https://doi.org/10.1007/s10489-020-01865-3