Abstract

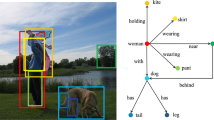

A scene graph describes an image concisely and structurally. However, existing scene graph generation methods have limited ability to infer certain relationships because they lack semantic information and depend heavily on the statistical distribution of the training set. To alleviate these problems, this study proposes a Multimodal Graph Inference Network (MGIN), which consists of two modules: Multimodal Information Extraction (MIE) and Target with Multimodal Feature Inference (TMFI). MGIN improves the inference of relationship triplets, especially for uncommon samples. In the MIE module, prior statistical knowledge of the training set is reprocessed and incorporated into the network to relieve overfitting to the training set. The MIE module also extracts visual and semantic features, which the TMFI module fuses into unified multimodal features. These features enable the inference module to make more accurate predictions. The proposed method achieves an average mean recall of 27.0% and an average recall of 55.9%, improvements of 0.48% and 0.50%, respectively, over state-of-the-art methods. It also increases the average recall of the 20 relationships with the lowest probability by 4.91%.
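
The abstract reports both average recall (R@K) and average mean recall (mR@K), and the gap between them is what measures performance on uncommon relationships. The Python sketch below illustrates one common way the two are computed. It is a simplified illustration, not the paper's evaluation code: it assumes predictions are already sorted by score and counts a hit only on an exact (subject, predicate, object) label match, ignoring the bounding-box overlap test used in standard Visual Genome evaluation. The names `evaluate`, `per_predicate_hits`, and `tail_mean_recall` and the toy data are hypothetical. `tail_mean_recall` further assumes that "relationships with the lowest probability" means the predicates least frequent in the training set.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical triplet representation: (subject label, predicate label, object label).
Triplet = Tuple[str, str, str]
# One image: (ground-truth triplets, predicted triplets sorted by descending score).
Image = Tuple[List[Triplet], List[Triplet]]


def per_predicate_hits(gt: List[Triplet], ranked: List[Triplet], k: int):
    """Count ground-truth triplets and top-k hits per predicate for one image.

    Simplification (assumption): a hit is an exact label match; box IoU is ignored.
    """
    top_k = set(ranked[:k])
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for triplet in gt:
        predicate = triplet[1]
        totals[predicate] += 1
        if triplet in top_k:
            hits[predicate] += 1
    return totals, hits


def evaluate(dataset: List[Image], k: int):
    """Return (R@k, mR@k, per-predicate mean recall) over a dataset."""
    image_recalls: List[float] = []
    predicate_recalls: Dict[str, List[float]] = defaultdict(list)
    for gt, ranked in dataset:
        totals, hits = per_predicate_hits(gt, ranked, k)
        # R@k: pooled over all triplets, so frequent predicates dominate.
        image_recalls.append(sum(hits.values()) / sum(totals.values()))
        # mR@k ingredients: recall of each predicate present in this image.
        for predicate, total in totals.items():
            predicate_recalls[predicate].append(hits[predicate] / total)
    recall = sum(image_recalls) / len(image_recalls)
    # Average over images per predicate, then weight every predicate equally.
    per_class = {p: sum(v) / len(v) for p, v in predicate_recalls.items()}
    mean_recall = sum(per_class.values()) / len(per_class)
    return recall, mean_recall, per_class


def tail_mean_recall(
    per_class: Dict[str, float], train_counts: Dict[str, int], n: int
) -> float:
    """Mean recall over the n predicates that are rarest in the training set."""
    rarest = sorted(per_class, key=lambda p: train_counts.get(p, 0))[:n]
    return sum(per_class[p] for p in rarest) / len(rarest)


if __name__ == "__main__":
    toy = [
        ([("man", "on", "horse"), ("man", "wearing", "hat")],
         [("man", "on", "horse"), ("man", "riding", "horse"), ("man", "wearing", "hat")]),
        ([("dog", "on", "bed"), ("dog", "on", "floor")],
         [("dog", "on", "bed"), ("dog", "near", "bed")]),
    ]
    r, mr, per_class = evaluate(toy, k=2)
    print(r, mr)  # 0.5 0.375
    print(per_class)  # {'on': 0.75, 'wearing': 0.0}
```

In the toy data, R@2 is 0.5 because half of all ground-truth triplets are recovered, but mR@2 drops to 0.375 because the rare predicate *wearing* is never recovered and counts as much as the frequent predicate *on*. This equal weighting is why mean recall, and recall restricted to the rarest predicates, reward methods that reduce dependence on the training-set distribution.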

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62076117, 61762061, 61763031), the Natural Science Foundation of Jiangxi Province, China (Grant No. 20161ACB20004) and Jiangxi Key Laboratory of Smart City (Grant No. 20192BCD40002).

About this article

Cite this article

Duan, J., Min, W., Lin, D. et al. Multimodal graph inference network for scene graph generation. Appl Intell 51, 8768–8783 (2021). https://doi.org/10.1007/s10489-021-02304-7

DOI: https://doi.org/10.1007/s10489-021-02304-7