Abstract

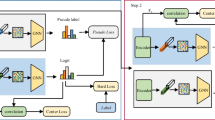

Meta-learning approaches have recently been introduced to obtain proficient models for few-shot learning. Existing state-of-the-art methods ease the difficulty of training a model that generalizes from limited instances by training on many tasks, each containing a different draw of classes. However, during the fast-adaptation stage, the instances in the meta-training and meta-test sets are assumed to share similar characteristics. In practice, most meta-learning algorithms are applied in challenging settings where the two sets are drawn from different populations, and violating this assumption can degrade model performance. To overcome this problem, we propose an Augmented Domain Agreement for Adaptable Meta-Learner (AD2AML), which augments meta-learning with a domain adaptation framework. We minimize the divergence between the latent representations of inputs drawn from different distributions so that the model learns more general features, which makes the trained network more transferable under domain shift. Furthermore, we extend the main idea by adding an image reconstruction network with a cross-consistency loss, which encourages the shared network to extract similar input representations. We demonstrate the effectiveness of the proposed method on benchmark datasets for few-shot classification and few-shot domain adaptation. Our experiments show that the proposed idea can improve generalization performance. Moreover, the extension with image reconstruction and cross-consistency loss can stabilize domain loss minimization during training.
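To make the idea of penalizing latent divergence concrete, the following is a minimal sketch, not the AD2AML implementation. The abstract does not say which divergence measure is used, so the sketch assumes a simple stand-in: the squared distance between the mean feature vectors of the two domains (a linear-kernel MMD estimate). The names `domain_divergence`, `total_loss`, and the `weight` parameter are hypothetical, and the reconstruction and cross-consistency terms are not covered.

```python
from typing import List, Sequence

Vector = Sequence[float]


def mean_vector(batch: Sequence[Vector]) -> List[float]:
    """Average a batch of equal-length feature vectors, one dimension at a time."""
    n = len(batch)
    return [sum(column) / n for column in zip(*batch)]


def domain_divergence(source_feats: Sequence[Vector],
                      target_feats: Sequence[Vector]) -> float:
    """Squared Euclidean distance between the mean latent features of two domains.

    ASSUMPTION: this linear-kernel MMD estimate is only a stand-in; the
    divergence actually used by the paper is not given in the abstract.
    """
    mu_source = mean_vector(source_feats)
    mu_target = mean_vector(target_feats)
    return sum((s - t) ** 2 for s, t in zip(mu_source, mu_target))


def total_loss(task_loss: float,
               source_feats: Sequence[Vector],
               target_feats: Sequence[Vector],
               weight: float = 0.1) -> float:
    """Few-shot task loss plus a weighted domain-divergence penalty.

    `weight` is a hypothetical trade-off coefficient chosen for illustration.
    """
    return task_loss + weight * domain_divergence(source_feats, target_feats)
```

In this sketch the penalty is zero whenever the two domains share the same mean feature vector, so it pushes the shared encoder toward representations whose first-order statistics agree across domains. A richer divergence would also compare higher-order structure.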

Acknowledgements

This work was supported by Dongseo University, “Dongseo Cluster Project” Research Fund of 2021 (DSU-20210001).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Widhianingsih, T.D.A., Kang, DK. Augmented domain agreement for adaptable Meta-Learner on Few-Shot classification. Appl Intell 52, 7037–7053 (2022). https://doi.org/10.1007/s10489-021-02744-1

DOI: https://doi.org/10.1007/s10489-021-02744-1