Abstract

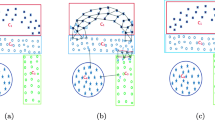

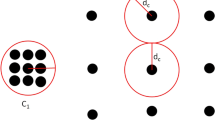

Hierarchical clustering is a common unsupervised learning technique used to discover potential relationships in data sets. Despite their conciseness and interpretability, hierarchical clustering algorithms still face challenges such as limited accuracy, high time cost, and difficulty in choosing a merging strategy. To overcome these limitations, we propose a novel Hierarchical Clustering algorithm with a Merging strategy based on Shared Subordinates (HCMSS), which defines two new concepts: the local core representative, and the shared subordinate, a point that belongs to multiple representatives. First, the state-of-the-art natural neighbor (NaN) method is used to compute the local neighborhood and the local density of each data point. Next, a sharing-based local core searching algorithm (SLORE) is proposed to find local core points and divide the input data set into numerous small initial clusters. Finally, these small clusters are merged hierarchically to form the final clustering result. We split the merging process into two sub-steps: first, small clusters are pre-connected according to a shared-subordinates-based indicator that measures the stickiness between clusters; second, the pre-connected intermediate clusters and the remaining unconnected small clusters are merged in the classical hierarchical way. Experiments on 8 synthetic and 8 real-world data sets demonstrate that HCMSS can effectively improve clustering accuracy and is less time-consuming than two state-of-the-art benchmarks.
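
Because the authors' source code is not public, the following is only a minimal Python/NumPy sketch of the first two stages described above, assuming Euclidean distance and small inputs (it builds a full O(n²) distance matrix). The natural neighbor search follows the commonly described procedure: grow the neighborhood size r until every point has a reverse neighbor or the number of points without one stops changing, then take mutual λ-nearest neighbors as natural neighbors. The density formula (`local_density`) and the pointer-following step (`initial_clusters`) are hypothetical stand-ins, not the paper's definitions and not SLORE; the shared-subordinate merging stage is omitted because its stickiness indicator is not specified here.

```python
import numpy as np


def natural_neighbors(X):
    """Natural neighbor (NaN) search as commonly described (sketch).

    Returns the natural eigenvalue `lam`, each point's natural neighbors
    (mutual lam-nearest neighbors), and the pairwise distance matrix.
    """
    n = len(X)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)  # O(n^2) memory
    Dk = D.copy()
    np.fill_diagonal(Dk, np.inf)                 # a point is never its own neighbor
    order = np.argsort(Dk, axis=1)[:, : n - 1]   # other points sorted by distance
    reverse = np.zeros(n, dtype=int)             # reverse-neighbor counts nb(x)
    r, prev_zero = 0, -1
    while r < n - 1:
        np.add.at(reverse, order[:, r], 1)       # each x visits its (r+1)-th neighbor
        r += 1
        zero = int(np.sum(reverse == 0))
        if zero == 0 or zero == prev_zero:       # all reached, or no progress
            break
        prev_zero = zero
    lam = r
    knn = [set(order[i, :lam].tolist()) for i in range(n)]
    nan = [{j for j in knn[i] if i in knn[j]} for i in range(n)]
    return lam, nan, D


def local_density(nan, D):
    """Hypothetical density: inverse mean distance to the natural neighbors."""
    rho = np.zeros(len(nan))
    for i, nb in enumerate(nan):
        if nb:
            rho[i] = len(nb) / (sum(D[i, j] for j in nb) + 1e-12)
    return rho


def initial_clusters(nan, rho):
    """Hypothetical stand-in for local core search (NOT the paper's SLORE).

    Each point points to the densest point among itself and its natural
    neighbors; following the pointers ends at a local core, and points that
    reach the same local core form one small initial cluster.
    """
    n = len(nan)
    parent = np.arange(n)
    for i in range(n):
        cand = [i] + sorted(nan[i])              # i first, so ties keep the pointer on i
        parent[i] = cand[int(np.argmax(rho[cand]))]
    labels = np.empty(n, dtype=int)
    for i in range(n):
        c = i
        while parent[c] != c:                    # density strictly increases: no cycles
            c = parent[c]
        labels[i] = c                            # label = index of the local core
    return labels


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (30, 2)), rng.normal(100.0, 1.0, (30, 2))])
lam, nan, D = natural_neighbors(X)
labels = initial_clusters(nan, local_density(nan, D))
print(lam, len(set(labels.tolist())))
```

Since pointers only follow natural-neighbor edges, an initial cluster can never span two well-separated groups unless λ grows to the group size; in a full pipeline these small clusters would then be merged, which is where HCMSS applies its shared-subordinate indicator.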

Availability of Data and Material

The datasets and third-party libraries used in the experiments are open source and accessible online.

Code Availability

We have decided not to make the source code of our work open source at this time.

Acknowledgements

This work is supported by the Project of the National Natural Science Foundation for Young Scientists of China (61802360).

About this article

Cite this article

Shi, J., Zhu, Q. & Li, J. A novel hierarchical clustering algorithm with merging strategy based on shared subordinates. Appl Intell 52, 8635–8650 (2022). https://doi.org/10.1007/s10489-021-02830-4