Abstract

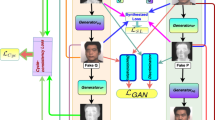

Generative Adversarial Networks (GANs) are a recent development in deep learning for transforming images from one domain to another. During this transformation, background information should not influence the learning process. Attention-based networks address this by learning saliency maps and prioritizing learning on the important image regions. In this paper, we develop a new Cyclic Synthesized Attention Generative Adversarial Network (CSA-GAN) by incorporating the cycle synthesized loss into the attention network. Attention guidance together with the cycle synthesis objective narrows the learning space toward the optimum solution and improves the rate of convergence. The proposed method is tested on sketch-to-face synthesis over the CUHK and AR benchmark datasets, and on thermal-to-visible face synthesis over the WHU-IIP dataset. The proposed CSA-GAN shows promising face synthesis performance in comparison with state-of-the-art GAN methods.

Cite this article

Yadav, N.K., Singh, S.K. & Dubey, S.R. CSA-GAN: Cyclic synthesized attention guided generative adversarial network for face synthesis. Appl Intell 52, 12704–12723 (2022). https://doi.org/10.1007/s10489-021-03064-0

DOI: https://doi.org/10.1007/s10489-021-03064-0