Abstract

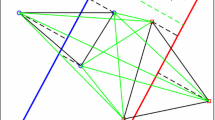

To reflect the similarity of input samples and improve the sparsity of the semi-supervised least squares support vector machine (SLSSVM), a novel semi-supervised sparse least squares support vector machine based on Mahalanobis distance (MS-SLSSVM) is proposed. It introduces Mahalanobis distance metric learning and a geometric-clustering pruning method into SLSSVM. Because it combines the advantages of metric learning and pruning, MS-SLSSVM is a promising semi-supervised classification algorithm. First, MS-SLSSVM uses a Mahalanobis distance kernel to capture the internal structure of the two classes of input samples. Through a simple linear transformation, this kernel carries the optimization problem from the linear input space into a nonlinear space. Second, to address the lack of sparsity in SLSSVM, MS-SLSSVM not only uses the geometric-clustering pruning method to filter out unlabeled samples in high-density regions, but also labels high-confidence unlabeled samples selected by ranking their estimation errors. Experiments were conducted on artificial, UCI, and real-world datasets, and the results demonstrate the effectiveness of the proposed algorithm.
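The abstract does not state the kernel's exact formula, so the following is only a minimal sketch under assumptions of our own. It assumes a Gaussian-form kernel in which the Euclidean distance is replaced by the Mahalanobis distance, with the covariance matrix estimated from the pooled labeled and unlabeled samples. It illustrates the "simple linear transformation" as Cholesky whitening: if the covariance factors as Σ = C Cᵀ, then after mapping x to C⁻¹x, ordinary squared Euclidean distances equal squared Mahalanobis distances. The names `mahalanobis_kernel` and `mahalanobis_sq_direct`, the `sigma` bandwidth, and the random data are hypothetical.

```python
import numpy as np


def mahalanobis_kernel(X, Z, cov, sigma=1.0):
    """Gaussian-form kernel on the Mahalanobis distance (assumed form, not the paper's).

    K[i, j] = exp(-d_M(X[i], Z[j])**2 / (2 * sigma**2)),
    where d_M(x, z)**2 = (x - z)^T cov^{-1} (x - z).
    """
    # Factor cov = C C^T, so cov^{-1} = C^{-T} C^{-1}
    C = np.linalg.cholesky(cov)
    # Linear transformation x -> C^{-1} x (whitening), applied row-wise
    Xw = np.linalg.solve(C, X.T).T
    Zw = np.linalg.solve(C, Z.T).T
    # Squared Euclidean distances in the whitened space equal squared Mahalanobis distances
    sq = (
        np.sum(Xw**2, axis=1)[:, None]
        + np.sum(Zw**2, axis=1)[None, :]
        - 2.0 * Xw @ Zw.T
    )
    sq = np.maximum(sq, 0.0)  # clip tiny negative values caused by round-off
    return np.exp(-sq / (2.0 * sigma**2))


def mahalanobis_sq_direct(x, z, cov):
    """Squared Mahalanobis distance computed directly, used as a cross-check."""
    d = x - z
    return float(d @ np.linalg.solve(cov, d))


# Usage on hypothetical data: 20 labeled and 80 unlabeled samples in 3 dimensions
rng = np.random.default_rng(0)
X_lab = rng.normal(size=(20, 3))
X_unl = rng.normal(size=(80, 3))
X_all = np.vstack([X_lab, X_unl])

# Covariance from all samples plus a small ridge so the Cholesky factor exists
cov = np.cov(X_all, rowvar=False) + 1e-6 * np.eye(3)
K = mahalanobis_kernel(X_all, X_all, cov, sigma=1.0)
```

Whitening once and reusing the standard squared-distance expansion avoids forming cov⁻¹ explicitly. The resulting matrix `K` could then replace a Gaussian kernel matrix in an LSSVM-style linear system. How MS-SLSSVM actually integrates the kernel, the pruning step, and the error-ranking step is not specified here.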

Acknowledgment

This work is financially supported by the Fundamental Research Funds for the Central Universities of Central China Normal University (Grant No. CCNU19ZN020) and the Research Project of the Hubei Provincial Department of Education (Grant No. B2018401).

Cite this article

Cui, L., Xia, Y. Semi-supervised sparse least squares support vector machine based on Mahalanobis distance. Appl Intell 52, 14294–14312 (2022). https://doi.org/10.1007/s10489-022-03166-3

DOI: https://doi.org/10.1007/s10489-022-03166-3