Abstract

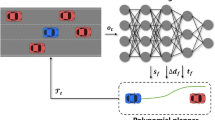

Decision making for an autonomous vehicle (AV) is complex: the vehicle must avoid fatal road accidents, provide safety and comfort, and reduce traffic, which raises the need for improvements in the field of decision making. To address these challenges, many algorithms and techniques have been applied, most commonly reinforcement learning (RL) algorithms combined with deep learning techniques. In this paper, we propose a novel extension of the popular SARSA (State-Action-Reward-State-Action) RL technique, called “Harmonic SK Deep SARSA”. It takes advantage of the stability that the SARSA algorithm provides and uses the notion of similar and cumulative states saved in an alternative memory to further enhance stability and to achieve performance that SARSA could not reach because of its on-policy nature. Our investigation of this extension demonstrated its adaptability to unexpected situations during learning and to unforeseen changes in the environment, while reducing the computational load of the learning process and increasing the convergence rate. The convergence rate plays a key role in improving decision-making applications that require numerous consecutive real-time decisions, including autonomous vehicles, industrial robots, gaming, and aerial navigation. The algorithm was tested in a Gym environment simulator called “Highway-env” on multiple highway situations with numerous dynamic obstacles: multiple lane configurations, highways with a dynamic number of lanes (from 4 lanes to 2 lanes and from 4 lanes to 6 lanes), and merges. For comparison, we used a benchmark of cutting-edge algorithms known for their prominent performance.
The experimental results showed that the proposed algorithm outperformed the comparison algorithms in learning stability and performance, as validated by the following metrics: average loss value per episode, average accuracy per episode, maximum speed reached per episode, average speed per episode, and total reward per episode.
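
For readers unfamiliar with the on-policy nature of SARSA mentioned above, the following is a minimal sketch of the standard tabular SARSA update. It is not the Harmonic SK Deep SARSA algorithm proposed in this paper. The function names, the dictionary-based Q-table, and the values of `alpha`, `gamma`, and `epsilon` are illustrative assumptions.

```python
import random
from collections import defaultdict


def epsilon_greedy(q, state, n_actions, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(n_actions)
    return max(range(n_actions), key=lambda a: q[(state, a)])


def sarsa_update(q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9, done=False):
    """Standard SARSA step (illustrative, not the paper's extension).

    The target uses a_next, the action the behaviour policy actually
    selected in s_next. This is what makes SARSA on-policy.
    """
    target = r if done else r + gamma * q[(s_next, a_next)]
    q[(s, a)] += alpha * (target - q[(s, a)])
    return q[(s, a)]


# Hypothetical toy transition: states and actions are placeholders.
rng = random.Random(0)
q = defaultdict(float)           # Q-table with default value 0.0
q[("s1", 0)] = 1.0               # pretend action 0 in s1 is already valued
a_next = epsilon_greedy(q, "s1", n_actions=2, epsilon=0.0, rng=rng)
new_value = sarsa_update(q, "s0", 1, r=0.5, s_next="s1", a_next=a_next)
```

Because the target depends on the action the agent really takes next, exploratory actions influence the learned values directly, in contrast to the off-policy target of Q-learning, which takes the maximum over next actions.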

Ethics declarations

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Rais, M.S., Boudour, R., Zouaidia, K. et al. Decision making for autonomous vehicles in highway scenarios using Harmonic SK Deep SARSA. Appl Intell 53, 2488–2505 (2023). https://doi.org/10.1007/s10489-022-03357-y

DOI: https://doi.org/10.1007/s10489-022-03357-y