Abstract

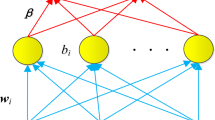

This paper proposes a new random vector functional link (RVFL) network with subspace-based local connections, abbreviated as the RVFL-SLC network. Its main innovation is the use of local connections in the input layer of the RVFL network. To select appropriate local connections, a novel and efficient decision entropy criterion is used to partition the original attribute space into disjoint attribute subspaces, each of which corresponds to its own set of hidden-layer weights. The decision entropy of each attribute subspace is first defined based on the posterior probabilities of the classes given that subspace. The attribute subspaces are then updated iteratively to increase the validation accuracy of the RVFL-SLC network: in each iteration, the attribute subspace with the minimum decision entropy is selected as the candidate for updating. The optimal attribute subspaces are obtained once the validation accuracy of the RVFL-SLC network becomes stable. The classification accuracy of RVFL-SLC was compared on 20 benchmark data sets with that of four other randomization-based networks: the regular RVFL network, the regular extreme learning machine (ELM), ELM with local connections (ELM-LC), and the No-Prop network. Experimental results show that the proposed RVFL-SLC network not only obtains the highest testing accuracy among the compared randomization-based networks but also best prevents overfitting. This demonstrates the rationality and effectiveness of using subspace-based local connections to improve the generalization capability of RVFL networks.
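The idea of local connections in the input layer can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a fixed, hand-chosen partition of the attributes, and it does not include the decision-entropy criterion or the iterative subspace update, since the abstract does not give their formulas. The function names `fit_rvfl_slc` and `predict_rvfl_slc`, the parameters `subspaces`, `nodes_per_subspace` and `ridge`, the sigmoid activation, the uniform weight range, and the ridge-regression solution for the output weights are all assumptions made for illustration.

```python
import numpy as np


def _hidden(X, W, b):
    # Sigmoid activation of the randomly weighted hidden layer (assumed choice).
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))


def fit_rvfl_slc(X, y, subspaces, nodes_per_subspace=10, ridge=1e-2, seed=0):
    """Fit a toy RVFL network whose hidden nodes each see one attribute subspace.

    X: (n_samples, n_features) array; y: integer labels 0..C-1;
    subspaces: list of disjoint lists of attribute indices (hypothetical input).
    """
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    n_hidden = nodes_per_subspace * len(subspaces)

    # Random input-to-hidden weights, masked so that each group of hidden
    # nodes is connected only to the attributes of its own subspace.
    W = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))
    mask = np.zeros_like(W)
    for k, attrs in enumerate(subspaces):
        cols = slice(k * nodes_per_subspace, (k + 1) * nodes_per_subspace)
        mask[attrs, cols] = 1.0
    W *= mask
    b = rng.uniform(-1.0, 1.0, size=n_hidden)

    # RVFL design matrix: direct input-output links plus hidden-layer outputs.
    D = np.hstack([X, _hidden(X, W, b)])
    T = np.eye(int(y.max()) + 1)[y]  # one-hot class targets

    # Output weights from regularized least squares (ridge regression).
    beta = np.linalg.solve(D.T @ D + ridge * np.eye(D.shape[1]), D.T @ T)
    return {"W": W, "b": b, "beta": beta}


def predict_rvfl_slc(model, X):
    D = np.hstack([X, _hidden(X, model["W"], model["b"])])
    return np.argmax(D @ model["beta"], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Two synthetic classes with four attributes, split into two subspaces.
    X = np.vstack([rng.normal(-2.0, 0.5, (50, 4)), rng.normal(2.0, 0.5, (50, 4))])
    y = np.array([0] * 50 + [1] * 50)
    model = fit_rvfl_slc(X, y, subspaces=[[0, 1], [2, 3]])
    print("training accuracy:", np.mean(predict_rvfl_slc(model, X) == y))
```

In this sketch the mask is what makes the connections local: an attribute outside a subspace contributes nothing to that subspace's hidden nodes. In the method described above, the partition passed as `subspaces` would instead be chosen and refined using decision entropy and validation accuracy.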

Data Availability

None.

Materials Availability

None.

Code Availability

None.

Acknowledgements

The authors sincerely thank the editors and anonymous reviewers, whose meticulous reading and valuable suggestions helped to improve this paper significantly. This work was supported by the National Natural Science Foundation of China (61972261) and the Basic Research Foundations of Shenzhen (JCYJ20210324093609026 and JCYJ20200813091134001).

Ethics declarations

Conflict of Interests

None.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

He, YL., Yuan, ZH. & Huang, J.Z. Random vector functional link network with subspace-based local connections. Appl Intell 53, 1567–1585 (2023). https://doi.org/10.1007/s10489-022-03404-8

DOI: https://doi.org/10.1007/s10489-022-03404-8