Abstract

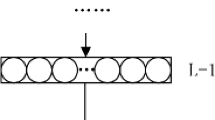

In this paper, we propose CVAM, a continuous-valued associative memory for one-to-many associations trained with back-propagation learning, and analyze its performance in detail. Conventional associative memories often deal with binary patterns; however, most of the data handled today are continuous-valued. The basic architecture of the proposed CVAM is a three-layer perceptron with multiple sub-layers in the hidden layer. Each sub-layer memorizes a single one-to-one association using the back-propagation (BP) learning algorithm, and together the multiple sub-layers enable one-to-many associations. We carried out experiments using continuous-valued data to analyze important properties such as memory capacity and noise tolerance. In addition, we conducted a demonstrative experiment using the CIFAR-10 image data set to visually confirm the behavior of the proposed CVAM as an associative memory model.
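To make the sub-layer idea concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes that each hidden sub-layer has its own input-to-hidden and hidden-to-output weights, that sub-layer k is trained by plain gradient-descent back-propagation on the k-th one-to-one association set, and that recall returns one candidate output per sub-layer. The class name `SubLayerMemory`, the sigmoid hidden units, the linear output units, the layer sizes, the learning rate, and the toy patterns are all hypothetical choices made for illustration; the abstract does not specify them.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class SubLayerMemory:
    """Hypothetical sketch: one hidden sub-layer per one-to-one association set."""

    def __init__(self, n_in, n_hidden, n_out, n_sub, seed=0):
        rng = np.random.default_rng(seed)
        # Assumption: every sub-layer owns a separate pair of weight matrices.
        self.W1 = rng.normal(0.0, 1.0, size=(n_sub, n_in, n_hidden))
        self.b1 = np.zeros((n_sub, n_hidden))
        self.W2 = rng.normal(0.0, 0.5, size=(n_sub, n_hidden, n_out))
        self.b2 = np.zeros((n_sub, n_out))

    def recall(self, x):
        """Return one recalled pattern per sub-layer: shape (n_sub, batch, n_out)."""
        outs = []
        for k in range(self.W1.shape[0]):
            h = sigmoid(x @ self.W1[k] + self.b1[k])
            outs.append(h @ self.W2[k] + self.b2[k])
        return np.stack(outs)

    def train_sub(self, k, x, y, lr=0.1, epochs=10000):
        """Full-batch back-propagation for sub-layer k on the pairs (x, y)."""
        n = x.shape[0]
        for _ in range(epochs):
            h = sigmoid(x @ self.W1[k] + self.b1[k])
            out = h @ self.W2[k] + self.b2[k]
            # Gradient of the loss (1 / 2n) * sum of squared errors w.r.t. out.
            err = (out - y) / n
            # Back-propagate through the sigmoid before updating W2.
            dh = (err @ self.W2[k].T) * h * (1.0 - h)
            self.W2[k] -= lr * (h.T @ err)
            self.b2[k] -= lr * err.sum(axis=0)
            self.W1[k] -= lr * (x.T @ dh)
            self.b1[k] -= lr * dh.sum(axis=0)


# Toy data (invented for illustration): 3 continuous-valued keys,
# each associated with 2 different continuous-valued targets.
keys = np.array([[0.10, 0.90, 0.30, 0.50],
                 [0.80, 0.20, 0.70, 0.10],
                 [0.40, 0.60, 0.90, 0.95]])
targets = np.array([
    [[0.2, 0.7, 0.1], [0.9, 0.3, 0.5], [0.4, 0.4, 0.8]],  # association set 0
    [[0.6, 0.1, 0.9], [0.2, 0.8, 0.3], [0.7, 0.5, 0.2]],  # association set 1
])

mem = SubLayerMemory(n_in=4, n_hidden=8, n_out=3, n_sub=2)
for k in range(2):
    mem.train_sub(k, keys, targets[k])

# One-to-many recall: each key yields one output per sub-layer.
recalled = mem.recall(keys)
max_abs_error = float(np.abs(recalled - targets).max())
```

Under these assumptions, `recalled[k]` holds the patterns that sub-layer k associates with the keys, so presenting a single key yields as many candidate outputs as there are sub-layers. Keeping the weights of each sub-layer separate means training one association set cannot overwrite another, at the cost of parameters that grow linearly with the number of associations per key.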

About this article

Cite this article

Kano, S., Hagiwara, M. CVAM: continuous-valued associative memory for one-to-many associations. Appl Intell 53, 5462–5472 (2023). https://doi.org/10.1007/s10489-022-03814-8

DOI: https://doi.org/10.1007/s10489-022-03814-8