Abstract

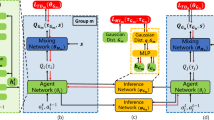

In recent years, multi-agent deep reinforcement learning has developed rapidly, and value-based algorithms such as Monotonic Value Function Factorisation (QMIX) and Learning to Factorize with Transformation for Cooperative Multi-Agent Reinforcement Learning (QTRAN) play an important role in it. Nevertheless, the performance of current value-based multi-agent algorithms in complex scenarios can still be improved. In value-based models, a mixing network is usually used to combine the local action values of the individual agents into a joint action value; under partial observability, however, these local values can be misaligned, which leads to unsatisfactory mixing results. This paper proposes a multi-agent model called Transform Networks, which transforms the individual local action-value function produced by each agent network into an individual global action-value function. This avoids the misalignment caused by partial observability when the individual action values are mixed, so that the joint action value better represents the cooperative conditions of all agents. Using the StarCraft Multi-Agent Challenge (SMAC) as the experimental platform, a comparison on five different maps shows that the proposed method outperforms the current state-of-the-art baseline algorithms.
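The pipeline described in the abstract (local action values, a transform to global action values, then mixing into a joint value) can be illustrated with a minimal sketch. The abstract does not specify the form of the transform or of the mixing network, so everything below is an assumption made for illustration: the `transform` function uses a hypothetical state-conditioned affine map with a positive scale, and `mix` is a simplified single-layer, QMIX-style monotonic mixer with non-negative weights. It is not the authors' implementation.

```python
import random


def transform(q_local, state, w, b):
    """Hypothetical transform step (assumed form, not taken from the paper).

    Rescales and shifts one agent's local action values using the global
    state. The scale is strictly positive, so the agent's greedy action
    is the same before and after the transform.
    """
    scale = abs(sum(wi * si for wi, si in zip(w, state))) + 1e-6
    shift = sum(bi * si for bi, si in zip(b, state))
    return [scale * q + shift for q in q_local]


def mix(chosen_qs, mix_weights):
    """Simplified QMIX-style monotonic mixing (single linear layer).

    Taking the absolute value of each weight makes the joint value
    non-decreasing in every agent's chosen action value.
    """
    return sum(abs(w) * q for w, q in zip(mix_weights, chosen_qs))


def rand_vec(n):
    """Random vector standing in for learned parameters or observations."""
    return [random.uniform(-1.0, 1.0) for _ in range(n)]


if __name__ == "__main__":
    random.seed(0)
    n_agents, n_actions, state_dim = 3, 4, 5

    state = rand_vec(state_dim)                          # global state (training time only)
    q_locals = [rand_vec(n_actions) for _ in range(n_agents)]
    params = [(rand_vec(state_dim), rand_vec(state_dim)) for _ in range(n_agents)]
    mix_weights = rand_vec(n_agents)

    # Local -> global action values, one transform per agent.
    q_globals = [transform(q, state, w, b) for q, (w, b) in zip(q_locals, params)]

    # Each agent acts greedily; the chosen values are mixed into a joint value.
    greedy = [max(range(n_actions), key=q.__getitem__) for q in q_globals]
    q_tot = mix([q[a] for q, a in zip(q_globals, greedy)], mix_weights)
    print("greedy actions:", greedy, "joint value:", q_tot)
```

In this sketch the positive scale and the non-negative mixing weights are design choices that keep decentralized greedy action selection consistent with maximizing the joint value; whether the proposed model enforces the same constraints is not stated in the abstract.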

Acknowledgements

This work is supported by the Key Laboratory Fund of Underwater Acoustic Countermeasure Technology (SSDKKFJJ-2019-02-03).

About this article

Cite this article

Wang, H., Xie, X. & Zhou, L. Transform networks for cooperative multi-agent deep reinforcement learning. Appl Intell 53, 9261–9269 (2023). https://doi.org/10.1007/s10489-022-03924-3

DOI: https://doi.org/10.1007/s10489-022-03924-3