Abstract

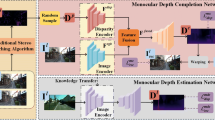

Self-supervised monocular depth estimation (SMDE) has emerged as a promising alternative for generating dense depth maps in outdoor scenarios because it places low demands on training data and sensors. However, training on consecutive temporal frames alone, without depth ground truth, leaves the model without global supervision information and yields inaccurate depth estimates in low-texture areas. To address these issues, we propose a pseudo-label generation (PLG) module, a two-stream pose estimation (TSPE) structure, and a grid regularization loss function. The PLG module automatically generates a pseudo-grid and a pseudo-pose during data preprocessing. The pseudo-grid provides reliable global position and direction information for supervision, while the pseudo-pose supplies additional geometric information to the TSPE. The TSPE fuses the geometry-based pseudo-pose with the network-based pose using a simple structure, and this additional geometric information improves the generalization of pose estimation. The grid regularization loss function improves depth estimation performance by handling the easily neglected depth errors in low-texture areas. Experiments show that our methods improve depth estimation performance, especially at object boundaries and in low-texture areas, with no additional training data or model parameters.
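The abstract does not specify how the TSPE combines its two streams, so the following is only a minimal sketch of one possible fusion, not the authors' structure. It assumes each relative pose is a 6-DoF vector (three axis-angle rotation components followed by three translation components) and blends the two with a fixed scalar weight; the function name `fuse_poses` and the parameter `weight` are hypothetical. Linear blending of axis-angle components is only a reasonable approximation for the small rotations typical of consecutive video frames.

```python
from typing import List, Sequence


def fuse_poses(
    pseudo_pose: Sequence[float],
    network_pose: Sequence[float],
    weight: float = 0.5,
) -> List[float]:
    """Blend a geometry-based pseudo-pose with a network-predicted pose.

    ASSUMPTION: both poses are 6-DoF vectors laid out as
    [rx, ry, rz, tx, ty, tz] (axis-angle rotation, then translation).
    ASSUMPTION: a fixed convex combination stands in for the fusion step;
    the source does not describe the actual TSPE fusion.
    """
    if len(pseudo_pose) != 6 or len(network_pose) != 6:
        raise ValueError("both poses must have exactly 6 components")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    # weight = 1.0 trusts the pseudo-pose only; weight = 0.0 trusts the network only.
    return [
        weight * g + (1.0 - weight) * n
        for g, n in zip(pseudo_pose, network_pose)
    ]


# Illustrative values only: a small yaw and mostly forward motion.
pseudo = [0.0, 0.01, 0.0, 0.0, 0.0, 1.0]
predicted = [0.0, 0.03, 0.0, 0.1, 0.0, 0.8]
fused = fuse_poses(pseudo, predicted, weight=0.5)
```

In a trainable variant the weight could be learned or replaced by a small network, but that choice is outside what the abstract states.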

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China (No. 2018YFB2101300), in part by the National Natural Science Foundation of China (Grant No. 61871186), and in part by the Dean’s Fund of Engineering Research Center of Software/Hardware Co-design Technology and Application, Ministry of Education (East China Normal University).

About this article

Cite this article

Xiao, Y., Chen, W. & Wang, J. Self-supervised monocular depth estimation based on pseudo-pose guidance and grid regularization. Appl Intell 53, 10149–10161 (2023). https://doi.org/10.1007/s10489-022-04006-0

DOI: https://doi.org/10.1007/s10489-022-04006-0