Abstract

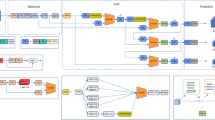

A significant issue in X-ray security inspection is that there are considerably more background images than foreground images. Although convolutional neural network (CNN)-based detection models have proven to be effective at detecting objects in X-ray security inspection systems, they are not designed to handle imbalance issues during training. Hence, typical CNN models are suboptimal at extracting background features and balancing the foreground and background. To address these two problems, we propose an efficient background learning (EBL) method with three modules: mixed foreground and background learning (MFB), hierarchical balanced hard negative example (HBHE) sampler and prime background mining with voting (PBMV). The MFB module extracts the foreground and background during each iteration and combines them into a single image for training, achieving unified and balanced foreground and background training. The HBHE sampler balances difficult foreground and background samples, dynamically choosing the number of negative samples based on the MFB image by calculating the difficulty factor. The PBMV module selects a prime background that is prone to false detection and provides increased attention through multiple voting during training. Experiments show that EBL can reduce false positives while maintaining high recall. When applied to Faster R-CNN, AP50 increases by 5.8% on benchmark X-ray datasets, including 2.3% on OPIXray and 3.7% on SIXray. Moreover, the performance of our model is improved without increasing computational costs or memory during the inference phase.
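As a rough illustration of the mixing idea behind MFB, the sketch below joins one foreground image and one background image into a single training image and shifts the foreground boxes accordingly. The abstract does not specify how the two images are combined, so the side-by-side layout, the function name `mix_foreground_background`, and the `fg_on_left` parameter are assumptions made only for this example and should not be read as the authors' implementation.

```python
import numpy as np


def mix_foreground_background(fg_img, fg_boxes, bg_img, fg_on_left=True):
    """Join a foreground and a background image side by side (assumed layout).

    fg_img, bg_img: H x W x C arrays sharing the same height and channel count.
    fg_boxes: N x 4 array-like of [x1, y1, x2, y2] in fg_img pixel coordinates.
    Returns the mixed image and the boxes expressed in mixed-image coordinates.
    """
    fg_img = np.asarray(fg_img)
    bg_img = np.asarray(bg_img)
    if fg_img.shape[0] != bg_img.shape[0] or fg_img.shape[2:] != bg_img.shape[2:]:
        raise ValueError("images must share height and channel count")

    boxes = np.asarray(fg_boxes, dtype=np.float32).reshape(-1, 4).copy()
    if fg_on_left:
        # Foreground keeps its coordinates; background is appended on the right.
        mixed = np.concatenate([fg_img, bg_img], axis=1)
    else:
        # Background comes first, so shift the x-coordinates by its width.
        mixed = np.concatenate([bg_img, fg_img], axis=1)
        boxes[:, [0, 2]] += bg_img.shape[1]
    return mixed, boxes
```

With this layout every training image contains both annotated foreground regions and a box-free background region, which is one simple way to present both kinds of content in each iteration.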

Data Availability

The datasets analysed during the current study are available at:

• OPIXray: https://github.com/OPIXray-author/OPIXray

• SIXray: https://github.com/MeioJane/SIXray

• EDXray: not available due to privacy policy.

Acknowledgements

This work was supported by the National Key Research and Development Program of China (SQ2020YFF0426385). The authors would like to thank AJE (www.aje.com) for its linguistic assistance during the preparation of this manuscript.

Appendices

Appendix A: Effect of the hyperparameters

We conduct comparative experiments on some hyperparameters in the HBHE and PBMV modules. The experimental results for different δ and k are shown in Table 9. Smaller values of δ address the problem of extreme sampling bias to a certain extent. We find that setting δ to 0.1 achieves the best trade-off and use this as the default value in later experiments. Larger values of k reduce the overall quality of the difficult backgrounds. We find that setting k to 0.1 achieves the best performance. The experimental results on the resampling times are shown in Table 10. We find that a prime background that appears only once can affect the performance of the model if that background participates in resampling.

Appendix B: Result visualization

We visualize the background mining results of PBMV in Fig. 11. Nonprime backgrounds (BGs) have relatively simple features that rarely cause false detections, so they contribute little to training the model. The “Prime” background usually contains many objects with more complex features. The “S-Prime” background contains even more complex features than the Prime background. The features in these two kinds of backgrounds are useful for reducing the number of false positives produced by the model.

Figure 12 visualizes the detection results of some examples and compares the baseline with our method on both the FGs and BGs. In the FGs, our method not only ensures that contraband is detected but also eliminates falsely detected objects in the background that are close to the baseline. In the BGs, our method greatly reduces false detections.

About this article

Cite this article

Wang, W., He, L., Li, Y. et al. EBL: Efficient background learning for x-ray security inspection. Appl Intell 53, 11357–11372 (2023). https://doi.org/10.1007/s10489-022-04075-1

DOI: https://doi.org/10.1007/s10489-022-04075-1