Abstract

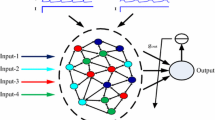

The liquid state machine (LSM) of spiking neurons is a biologically plausible computational model that imitates the structure and functions of the nervous system for information processing. Current supervised learning algorithms for spiking LSMs, such as the remote supervised method (ReSuMe), generally adjust only the synaptic weights in the output layer; the synaptic weights of the input and liquid layers remain fixed during supervised learning. In this paper, a spike-train level supervised learning algorithm for spiking LSMs is proposed. It is based on a bidirectional modification mechanism and is called the bidirectional modification method (BiMoMe). Unlike ReSuMe, BiMoMe can adjust all synaptic weights in an LSM, which enhances the dynamics of the network while remaining biologically plausible. The learning performance of BiMoMe is evaluated on several spike train learning tasks and an image classification problem. Experimental results show that BiMoMe achieves stronger spike train learning ability and better image classification performance than other related algorithms, indicating that BiMoMe is effective for spatio-temporal pattern learning problems.
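To make the contrast with readout-only training concrete, the following is a minimal sketch of a ReSuMe-style weight change for a single synapse onto an output neuron, in the commonly cited form of that rule with an exponential learning window. It is not the BiMoMe rule, whose details the abstract does not give. The function name `resume_delta_w` and the parameters `lr`, `a`, `amp`, and `tau` are illustrative assumptions, and spike times are taken to be in milliseconds.

```python
import math


def resume_delta_w(input_spikes, desired_spikes, actual_spikes,
                   lr=0.01, a=0.0, amp=1.0, tau=5.0):
    """ReSuMe-style weight change for one synapse onto a readout neuron.

    All names and default values here are assumptions for illustration.
    """
    def trace(t):
        # Exponentially decaying trace of the input spikes at or before time t.
        return sum(amp * math.exp(-(t - ti) / tau)
                   for ti in input_spikes if ti <= t)

    # Each desired output spike strengthens the synapse ...
    potentiation = sum(a + trace(td) for td in desired_spikes)
    # ... and each actual output spike weakens it.
    depression = sum(a + trace(to) for to in actual_spikes)
    return lr * (potentiation - depression)


# The output neuron fired later than desired, so the weight should increase.
print(resume_delta_w([10.0], [12.0], [20.0]) > 0)
```

In a readout-only scheme of the kind the abstract attributes to ReSuMe, an update like this would be applied to the liquid-to-output synapses alone, with the input and liquid weights left untouched. When the actual and desired spike trains coincide, the two sums cancel and the weight stops changing.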

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant no. 62266040), the Key Research and Development Project of Gansu Province, China (Grant no. 20YF8GA049), the Youth Science and Technology Fund Project of Gansu Province, China (Grant no. 20JR10RA097), the Natural Science Foundation of Gansu Province, China (Grant no. 21JR7RA116), the Industrial Support Plan Project for Colleges and Universities in Gansu Province, China (Grant no. 2022CYZC-13), and the Lanzhou Municipal Science and Technology Project (Grant no. 2019-1-34).

About this article

Cite this article

Lu, H., Lin, X., Wang, X. et al. Spike-train level supervised learning algorithm based on bidirectional modification for liquid state machines. Appl Intell 53, 12252–12267 (2023). https://doi.org/10.1007/s10489-022-04152-5

DOI: https://doi.org/10.1007/s10489-022-04152-5