Abstract

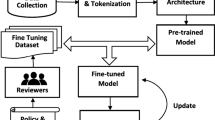

An effective dialogue system needs a large amount of training data, but existing dialogue training data is insufficient. Pre-trained models have made great progress in recent years and can alleviate the low-resource dialogue problem to a certain extent, but they are large and difficult to deploy. How to improve the performance of a dialogue model without additional annotated data and while reducing model size has therefore become a new challenge. We propose a multi-source data augmentation method for low-resource dialogue generation based on inverse curriculum learning (inverse CL). First, we adopt three data augmentation methods (round-trip translation, paraphrasing, and generation with a pre-trained model) to produce augmented data. Next, we propose a new training strategy based on inverse CL to make use of the different augmented data sources. Our method outperforms the baselines on all evaluation metrics, which shows the effectiveness of the proposed training strategy for dialogue generation. To the best of our knowledge, this is the first systematic investigation of data augmentation in dialogue generation.
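The abstract describes training on several augmented data sources in an inverse-curriculum order but does not spell out the schedule. The sketch below is one possible reading under stated assumptions, not the authors' implementation: each source (round-trip translation, paraphrasing, pre-trained-model generation, plus the original data) is treated as one training stage, and stages are visited from the highest to the lowest difficulty score, i.e. the reverse of a standard easy-to-hard curriculum. The difficulty scores, the one-source-per-stage schedule, and the names `inverse_curriculum`, `train` and `train_step` are all hypothetical.

```python
import random
from typing import Callable, Dict, Iterator, List, Tuple

# Hypothetical sketch: not the paper's implementation.
# A dialogue example is a (context, response) pair.
Pair = Tuple[str, str]


def inverse_curriculum(
    sources: Dict[str, List[Pair]],
    difficulty: Dict[str, float],
    epochs_per_stage: int = 1,
    seed: int = 0,
) -> Iterator[Tuple[str, Pair]]:
    """Yield (source_name, example), visiting sources from the highest
    to the lowest (assumed) difficulty score: hard-to-easy order."""
    rng = random.Random(seed)
    # Descending difficulty; sorted() is stable, so ties keep dict order.
    order = sorted(sources, key=lambda name: difficulty[name], reverse=True)
    for name in order:
        for _ in range(epochs_per_stage):
            examples = list(sources[name])
            rng.shuffle(examples)  # shuffle within a stage, never across stages
            for ex in examples:
                yield name, ex


def train(
    sources: Dict[str, List[Pair]],
    difficulty: Dict[str, float],
    train_step: Callable[[Pair], float],
    epochs_per_stage: int = 1,
) -> List[str]:
    """Call train_step on every scheduled example; return the stage order.
    Empty sources yield no examples and so do not appear in the result."""
    stages: List[str] = []
    for name, ex in inverse_curriculum(sources, difficulty, epochs_per_stage):
        if not stages or stages[-1] != name:
            stages.append(name)
        train_step(ex)  # e.g. one optimizer update; returns a loss value
    return stages


if __name__ == "__main__":
    sources = {
        "original": [("hi", "hello"), ("how are you?", "fine, thanks")],
        "round_trip": [("hello", "hi there")],
        "paraphrase": [("how are you doing?", "good, thanks")],
        "pretrained": [("hey", "hey, what's up?")],
    }
    # Hypothetical scores: higher = assumed harder / further from original data.
    difficulty = {"pretrained": 0.9, "round_trip": 0.6,
                  "paraphrase": 0.4, "original": 0.0}
    seen: List[Pair] = []
    order = train(sources, difficulty, lambda ex: seen.append(ex) or 0.0)
    print(order)  # ['pretrained', 'round_trip', 'paraphrase', 'original']
```

Keeping `train_step` as a callback leaves the dialogue model out of the sketch; in practice it would run one update of the model on the given (context, response) pair. How difficulty is actually measured for each augmentation source is not stated in the abstract and is left open here.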

Acknowledgements

The research work described in this paper has been supported by the National Key R&D Program of China (2019YFB1405200) and the National Natural Science Foundation of China (No. 61976015, 61976016, 61876198 and 61370130). The authors would like to thank the anonymous reviewers for their valuable comments and suggestions to improve this paper.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cui, F., Di, H., Huang, H. et al. Multi-source inverse-curriculum-based training for low-resource dialogue generation. Appl Intell 53, 13665–13676 (2023). https://doi.org/10.1007/s10489-022-04190-z

DOI: https://doi.org/10.1007/s10489-022-04190-z