Abstract

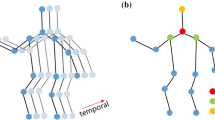

The critical problem in skeleton-based action recognition is extracting high-level semantics from the dynamic changes between skeleton joints. Graph Convolutional Networks (GCNs) are therefore widely applied to capture the spatial-temporal information of dynamic joint coordinates through graph-based convolution. However, previous GCNs with fixed graph convolution kernels are constrained by the static topology of the graph and adapt poorly to the geometric variations of actions. Moreover, the local information of adjacent nodes is aggregated layer by layer, which increases model complexity. In this work, we propose a Deformable Graph Convolutional Transformer (DGT) for skeleton-based action recognition, which extracts adaptive features through a flexible, learnable receptive field. DGT adopts a multiple-input-branches (MIB) architecture to obtain several types of information, such as joints, bones, and motions. These features are fused in the Transformer Classifier. Spatial-Temporal Graph Convolution (STGC) units then learn a preliminary feature representation that captures both spatial and temporal dependencies on the graph. They are followed by a deformable spatial-temporal compound attention backbone, which learns a robust representation from adaptive deformable skeleton features. The adaptive representation is obtained by dynamically adjusting the receptive field through offset-based convolution. In addition, a self-attention-based Transformer Classifier (TC) is designed to encode the sequence of features flattened over the spatial and temporal dimensions. Its fully connected attention mechanism further strengthens the high-level semantic representation by focusing on essential nodes in the graph. We evaluated DGT on two challenging large-scale datasets, NTU-RGBD 60 and NTU-RGBD 120. The experimental results support the ability of DGT to adaptively optimize attention over different joints. DGT achieves performance comparable to the state of the art while being much more efficient, which demonstrates the effectiveness of the proposed method.
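The joint, bone, and motion inputs named in the abstract can be illustrated with a short sketch. It assumes conventions common in skeleton-based recognition rather than anything this abstract confirms: a bone is a joint's coordinate minus its parent joint's coordinate, and motion is the frame-to-frame difference of joint coordinates. The function name `multi_branch_inputs`, the `parents` list, and the toy skeleton are hypothetical.

```python
import numpy as np

def multi_branch_inputs(joints, parents):
    """Derive three input streams from one skeleton sequence.

    joints:  array of shape (T, V, C) = (frames, joints, coordinates).
    parents: length-V sequence; parents[v] is the parent joint of v
             (assumption: the root joint lists itself as its parent).
    """
    joints = np.asarray(joints, dtype=np.float64)
    parents = np.asarray(parents)

    # Bone stream (assumed definition): vector from parent joint to joint.
    # The root's bone is the zero vector because it is its own parent.
    bones = joints - joints[:, parents, :]

    # Motion stream (assumed definition): displacement since the previous
    # frame; the first frame has no predecessor and stays zero.
    motion = np.zeros_like(joints)
    motion[1:] = joints[1:] - joints[:-1]

    return joints, bones, motion

# Toy example: 3 frames of a 3-joint chain (0 -> 1 -> 2) in 2D.
seq = np.array([
    [[0, 0], [1, 0], [2, 0]],
    [[0, 0], [1, 1], [2, 2]],
    [[0, 1], [1, 2], [2, 3]],
], dtype=float)
parents = [0, 0, 1]

j, b, m = multi_branch_inputs(seq, parents)
```

All three streams keep the `(T, V, C)` shape of the input, so each can be fed to its own input branch before the features are fused.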

Funding

This work is funded by the National Natural Science Foundation of China (62002220).

Author information

Contributions

Shuo Chen: Conceptualization, Methodology, Writing - original draft, Software. Ke Xu: Supervision, Validation. Bo Zhu: Data curation. Xinghao Jiang: Investigation, Visualization. Tanfeng Sun: Writing - review & editing.

Ethics declarations

Conflicts of interest/Competing interests

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

About this article

Cite this article

Chen, S., Xu, K., Zhu, B. et al. Deformable graph convolutional transformer for skeleton-based action recognition. Appl Intell 53, 15390–15406 (2023). https://doi.org/10.1007/s10489-022-04302-9

DOI: https://doi.org/10.1007/s10489-022-04302-9