Abstract

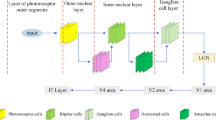

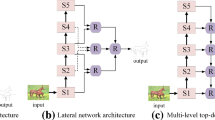

In recent years, deep learning has significantly improved performance on a wide range of computer vision tasks. Convolutional neural networks for edge detection usually consist of two parts: an encoder and a decoder. The encoder mainly extracts and represents features, while the decoder mainly integrates the local, detailed features extracted by the encoder into global information. Research suggests that convolutional neural networks are, in a simplified sense, a technical replication of biological neural networks, and that building such models relies on the intricate connections and cellular functions of biological neural networks. For visual tasks, the visual "ventral stream" neural pathway plays an essential role. Inspired by the interactive feedback mechanism between cells and tissues in the ventral stream visual pathway, this paper proposes a novel convolutional neural network model for edge detection. We use the Visual Geometry Group network (VGG) to simulate the retina, which perceives the visual signal, the lateral geniculate nucleus, which relays it, and the ventral stream neural pathway. Based on the mechanism of visual neural information transmission, we then build a lateral interactive feedback encoding network. In the decoding network, feedforward and feedback information are integrated in a longitudinal feedback interactive manner, using bottom-up feedback parsing and parallel processing of feature information in the inferior temporal (IT) layer. We conducted experiments on the publicly available datasets BSDS500, NYUD, Multicue-Boundary, and Multicue-Edge, achieving scores of 0.824, 0.773, 0.839, and 0.892, respectively. These results show that our method is highly competitive.
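The abstract does not name the metric behind the reported scores. Edge detection benchmarks such as BSDS500 are commonly scored with the F-measure, the harmonic mean of precision and recall, taken at the best binarization threshold. Assuming that is the metric meant here, the following minimal sketch shows how such a score could be computed. The function names and the precision/recall values are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple


def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision < 0 or recall < 0:
        raise ValueError("precision and recall must be non-negative")
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def best_f_over_thresholds(pr_pairs: List[Tuple[float, float]]) -> Tuple[float, int]:
    """Return (best F-measure, index of the threshold that achieves it).

    pr_pairs holds one (precision, recall) pair per candidate threshold.
    """
    if not pr_pairs:
        raise ValueError("pr_pairs must not be empty")
    scores = [f_measure(p, r) for p, r in pr_pairs]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return scores[best_index], best_index


# Hypothetical (precision, recall) pairs for three thresholds, for illustration only.
example_pairs = [(0.9, 0.5), (0.8, 0.8), (0.6, 0.95)]
best_score, best_threshold_index = best_f_over_thresholds(example_pairs)
```

A strict threshold gives high precision but low recall, and a loose one does the opposite, so the harmonic mean rewards the threshold that balances the two.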

Data availability

Data will be made available on reasonable request.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61866002), the Guangxi Natural Science Foundation (Grant Nos. 2020GXNSFDA297006, 2018GXNSFAA138122, and 2015GXNSFAA139293), and the Innovation Project of Guangxi Graduate Education (Grant No. YCSW2021311).

Ethics declarations

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Lin, C., Qiao, Y. & Pan, Y. Bio-inspired interactive feedback neural networks for edge detection. Appl Intell 53, 16226–16245 (2023). https://doi.org/10.1007/s10489-022-04316-3

DOI: https://doi.org/10.1007/s10489-022-04316-3