Abstract

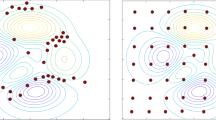

This paper proposes a new population initialization method for metaheuristic algorithms. In our approach, the initial set of candidate solutions is obtained by sampling the objective function with the Metropolis–Hastings (MH) technique. Under this method, the initial solutions take values close to the prominent values of the objective function to be optimized. Unlike most initialization methods, which consider only the spatial distribution of the points, our algorithm places the initial points in promising regions of the search space, which deserve to be exploited to identify the global optimum. These characteristics allow the proposed approach to converge faster and to improve the quality of the solutions produced. To demonstrate the capabilities of our initialization method, it has been embedded in the classical Differential Evolution algorithm. To evaluate its performance, the complete system has been tested on a set of representative benchmark functions extracted from several datasets. Experimental results show that the proposed technique achieves faster convergence and higher-quality solutions than other similar approaches.
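The idea of drawing the initial population with Metropolis–Hastings can be illustrated with a minimal sketch. It is not the authors' implementation: the Boltzmann-style target density `exp(-f(x) / temperature)` (for minimization), the Gaussian random-walk proposal, and the names `mh_initial_population`, `temperature`, `step_frac`, `burn_in`, and `thin` are all assumptions made for illustration.

```python
import numpy as np


def sphere(x):
    """Simple test objective: sum of squares, minimum 0 at the origin."""
    return float(np.sum(x ** 2))


def mh_initial_population(objective, lower, upper, pop_size, *,
                          temperature=1.0, step_frac=0.1,
                          burn_in=200, thin=5, seed=None):
    """Sample an initial population with random-walk Metropolis-Hastings.

    Assumption: the target density is proportional to
    exp(-objective(x) / temperature) inside the box [lower, upper]
    and zero outside, so low objective values are sampled more often.
    """
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    step = step_frac * (upper - lower)      # per-dimension proposal std

    x = rng.uniform(lower, upper)           # random starting point
    fx = objective(x)
    population = []

    for t in range(burn_in + pop_size * thin):
        candidate = x + rng.normal(0.0, step)
        # Proposals outside the box have zero density and are rejected.
        if np.all(candidate >= lower) and np.all(candidate <= upper):
            f_cand = objective(candidate)
            # Symmetric proposal: accept with probability
            # min(1, exp(-(f_cand - fx) / temperature)).
            log_alpha = -(f_cand - fx) / temperature
            if np.log1p(-rng.random()) < log_alpha:
                x, fx = candidate, f_cand
        # After burn-in, keep every `thin`-th state of the chain.
        if t >= burn_in and (t - burn_in + 1) % thin == 0:
            population.append(x.copy())

    return np.array(population)


if __name__ == "__main__":
    pop = mh_initial_population(sphere, [-5.0] * 3, [5.0] * 3, 20, seed=0)
    print(pop.shape)
```

The returned array can be handed to any population-based optimizer as its starting population. How strongly the samples concentrate around low objective values depends on `temperature`, which would have to be tuned to the scale of the objective.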

Appendix A

| ID | Name | Minimum | Search space S | Dimension D | Function |
|---|---|---|---|---|---|
| \( f_{1}(x) \) | Ackley | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-30,30]^d \) | 30 | \( f(x)=-20\exp\left(-0.2\sqrt{\frac{1}{d}\sum_{i=1}^{d}x_i^2}\right)-\exp\left(\frac{1}{d}\sum_{i=1}^{d}\cos\left(2\pi x_i\right)\right)+\exp(1)+20 \) |
| \( f_{2}(x) \) | Dixon-Price | \( f(x^{\ast})=0 \); \( x_i^{\ast}=2^{-\frac{2^i-2}{2^i}} \) for \( i=1,\dots,d \) | \( [-10,10]^d \) | 30 | \( f(x)=\left(x_1-1\right)^2+\sum_{i=2}^{d}i\left(2x_i^2-x_{i-1}\right)^2 \) |
| \( f_{3}(x) \) | Griewank | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-600,600]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\frac{x_i^2}{4000}-\prod_{i=1}^{d}\cos\left(\frac{x_i}{\sqrt{i}}\right)+1 \) |
| \( f_{4}(x) \) | Infinity | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-1,1]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}x_i^6\left(\sin\left(x_i\right)+2\right) \) |
| \( f_{5}(x) \) | Levy | \( f(x^{\ast})=0 \); \( x^{\ast}=(1,\dots,1) \) | \( [-10,10]^d \) | 30 | \( f(x)=\sin^2\left(\pi\omega_1\right)+\sum_{i=1}^{d-1}\left(\omega_i-1\right)^2\left[1+10\sin^2\left(\pi\omega_i+1\right)\right]+\left(\omega_d-1\right)^2\left[1+\sin^2\left(2\pi\omega_d\right)\right] \), where \( \omega_i=1+\frac{x_i-1}{4} \) |
| \( f_{6}(x) \) | Mishra 1 | \( f(x^{\ast})=2 \); \( x^{\ast}=(1,\dots,1) \) | \( [0,1]^d \) | 30 | \( f(x)=\left(1+\left(d-\sum_{i=1}^{d-1}x_i\right)\right)^{d-\sum_{i=1}^{d-1}x_i} \) |
| \( f_{7}(x) \) | Mishra 2 | \( f(x^{\ast})=2 \); \( x^{\ast}=(1,\dots,1) \) | \( [0,1]^d \) | 30 | \( f(x)=\left(1+\left(d-\sum_{i=1}^{d-1}\frac{x_i+x_{i+1}}{2}\right)\right)^{d-\sum_{i=1}^{d-1}\frac{x_i+x_{i+1}}{2}} \) |
| \( f_{8}(x) \) | Mishra 11 | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-10,10]^d \) | 30 | \( f(x)=\left[\frac{1}{d}\sum_{i=1}^{d}\lvert x_i\rvert-\left(\prod_{i=1}^{d}\lvert x_i\rvert\right)^{\frac{1}{d}}\right]^2 \) |
| \( f_{9}(x) \) | MultiModal | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-10,10]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\lvert x_i\rvert\prod_{i=1}^{d}\lvert x_i\rvert \) |
| \( f_{10}(x) \) | Penalty 1 | \( f(x^{\ast})=0 \); \( x^{\ast}=(-1,\dots,-1) \) | \( [-50,50]^d \) | 30 | \( f(x)=\frac{\pi}{30}\left(10\sin^2\left(\pi y_1\right)+\sum_{i=1}^{d-1}\left(y_i-1\right)^2\left[1+10\sin^2\left(\pi y_{i+1}\right)\right]+\left(y_d-1\right)^2\right)+\sum_{i=1}^{d}u\left(x_i,10,100,4\right) \), where \( y_i=1+\frac{x_i+1}{4} \) and \( u\left(x_i,a,k,m\right)=\begin{cases}k\left(x_i-a\right)^m, & x_i>a\\ 0, & -a\le x_i\le a\\ k\left(-x_i-a\right)^m, & x_i<-a\end{cases} \) |
| \( f_{11}(x) \) | Penalty 2 | \( f(x^{\ast})=0 \); \( x^{\ast}=(1,\dots,1) \) | \( [-50,50]^d \) | 30 | \( f(x)=0.1\left(\sin^2\left(3\pi x_1\right)+\sum_{i=1}^{d-1}\left(x_i-1\right)^2\left[1+\sin^2\left(3\pi x_{i+1}\right)\right]+\left(x_d-1\right)^2\sin^2\left(2\pi x_d\right)\right)+\sum_{i=1}^{d}u\left(x_i,5,100,4\right) \), where \( u\left(x_i,a,k,m\right)=\begin{cases}k\left(x_i-a\right)^m, & x_i>a\\ 0, & -a\le x_i\le a\\ k\left(-x_i-a\right)^m, & x_i<-a\end{cases} \) |
| \( f_{12}(x) \) | Perm 1 | \( f(x^{\ast})=0 \); \( x^{\ast}=(1,2,\dots,d) \) | \( [-d,d]^d \) | 30 | \( f(x)=\sum_{k=1}^{d}\left[\sum_{i=1}^{d}\left(i^k+50\right)\left(\left(\frac{x_i}{i}\right)^k-1\right)\right]^2 \) |
| \( f_{13}(x) \) | Perm 2 | \( f(x^{\ast})=0 \); \( x^{\ast}=(1,1/2,\dots,1/d) \) | \( [-d,d]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\left[\sum_{j=1}^{d}\left(j^i+10\right)\left(x_j^i-\frac{1}{j^i}\right)^i\right]^2 \) |
| \( f_{14}(x) \) | Plateau | \( f(x^{\ast})=30 \); \( x^{\ast}=(0,\dots,0) \) | \( [-5.12,5.12]^d \) | 30 | \( f(x)=30+\sum_{i=1}^{d}\lvert x_i\rvert \) |
| \( f_{15}(x) \) | Powell | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-4,5]^d \) | 30 | \( f(x)=\sum_{i=1}^{d/4}\left[\left(x_{4i-3}+10x_{4i-2}\right)^2+5\left(x_{4i-1}-x_{4i}\right)^2+\left(x_{4i-2}-x_{4i-1}\right)^4+10\left(x_{4i-3}-x_{4i}\right)^4\right] \) |
| \( f_{16}(x) \) | Qing | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-1.28,1.28]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\left(x_i^2-i\right)^2 \) |
| \( f_{17}(x) \) | Quartic | \( f(x^{\ast})=0 \); \( x^{\ast}=(-1,\dots,-1) \) | \( [-10,10]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}ix_i^4+\operatorname{rand}[0,1) \) |
| \( f_{18}(x) \) | Quintic | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-5.12,5.12]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\lvert x_i^5-3x_i^4+4x_i^3+2x_i^2-10x_i-4\rvert \) |
| \( f_{19}(x) \) | Rastrigin | \( f(x^{\ast})=0 \); \( x^{\ast}=(1,\dots,1) \) | \( [-5,10]^d \) | 30 | \( f(x)=10d+\sum_{i=1}^{d}\left[x_i^2-10\cos\left(2\pi x_i\right)\right] \) |
| \( f_{20}(x) \) | Rosenbrock | \( f(x^{\ast})=0 \); \( x^{\ast}=(0.5,\dots,0.5) \) | \( [-100,100]^d \) | 30 | \( f(x)=\sum_{i=1}^{d-1}\left[100\left(x_{i+1}-x_i^2\right)^2+\left(x_i-1\right)^2\right] \) |
| \( f_{21}(x) \) | Schwefel 21 | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\max_{1\le i\le d}\lvert x_i\rvert \) |
| \( f_{22}(x) \) | Schwefel 22 | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\lvert x_i\rvert+\prod_{i=1}^{d}\lvert x_i\rvert \) |
| \( f_{23}(x) \) | Schwefel 26 | \( f(x^{\ast})=0 \); \( x^{\ast}=(420.9687,\dots,420.9687) \) | \( [-500,500]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\left(-x_i\sin\left(\sqrt{\lvert x_i\rvert}\right)\right) \) |
| \( f_{24}(x) \) | Step | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\lvert x_i^2\rvert \) |
| \( f_{25}(x) \) | Stybtang | \( f(x^{\ast})=-39.1659d \); \( x^{\ast}=(-2.90,\dots,-2.90) \) | \( [-5,5]^d \) | 30 | \( f(x)=\frac{1}{2}\sum_{i=1}^{d}\left(x_i^4-16x_i^2+5x_i\right) \) |
| \( f_{26}(x) \) | Trid | \( f(x^{\ast})=-d(d+4)(d-1)/6 \); \( x_i^{\ast}=i(d+1-i) \) for \( i=1,\dots,d \) | \( [-d^2,d^2]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\left(x_i-1\right)^2-\sum_{i=2}^{d}x_ix_{i-1} \) |
| \( f_{27}(x) \) | Vincent | \( f(x^{\ast})=-d \); \( x^{\ast}=(7.70,\dots,7.70) \) | \( [0.25,10]^d \) | 30 | \( f(x)=-\frac{1}{d}\sum_{i=1}^{d}\sin\left[10\log\left(x_i\right)\right] \) |
| \( f_{28}(x) \) | Zakharov | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-5,10]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}x_i^2+\left(\sum_{i=1}^{d}0.5ix_i\right)^2+\left(\sum_{i=1}^{d}0.5ix_i\right)^4 \) |
| \( f_{29}(x) \) | Rothyp | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-65.536,65.536]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\sum_{j=1}^{i}x_j^2 \) |
| \( f_{30}(x) \) | Schwefel 2 | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\left(\sum_{j=1}^{i}x_j\right)^2 \) |
| \( f_{31}(x) \) | Sphere | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-5,5]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}x_i^2 \) |
| \( f_{32}(x) \) | Sum2 | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-10,10]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}ix_i^2 \) |
| \( f_{33}(x) \) | Sumpow | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-1,1]^d \) | 30 | \( f(x)=\sum_{i=1}^{d}\lvert x_i\rvert^{i+1} \) |
| \( f_{34}(x) \) | Rastrigin + Schwefel22 + Sphere | \( f(x^{\ast})=0 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\left[10d+\sum_{i=1}^{d}\left[x_i^2-10\cos\left(2\pi x_i\right)\right]\right]+\left[\sum_{i=1}^{d}\lvert x_i\rvert+\prod_{i=1}^{d}\lvert x_i\rvert\right]+\left[\sum_{i=1}^{d}x_i^2\right] \) |
| \( f_{35}(x) \) | Griewank + Rastrigin + Rosenbrock | \( f(x^{\ast})=d-1 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\left[\sum_{i=1}^{d}\frac{x_i^2}{4000}-\prod_{i=1}^{d}\cos\left(\frac{x_i}{\sqrt{i}}\right)+1\right]+\left[10d+\sum_{i=1}^{d}\left[x_i^2-10\cos\left(2\pi x_i\right)\right]\right]+\left[\sum_{i=1}^{d-1}\left[100\left(x_{i+1}-x_i^2\right)^2+\left(x_i-1\right)^2\right]\right] \) |
| \( f_{36}(x) \) | Ackley + Penalty2 + Rosenbrock + Schwefel22 | \( f(x^{\ast})=1.1d-1 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\left[-20\exp\left(-0.2\sqrt{\frac{1}{d}\sum_{i=1}^{d}x_i^2}\right)-\exp\left(\frac{1}{d}\sum_{i=1}^{d}\cos\left(2\pi x_i\right)\right)+\exp(1)+20\right]+\left[0.1\left(\sin^2\left(3\pi x_1\right)+\sum_{i=1}^{d-1}\left(x_i-1\right)^2\left[1+\sin^2\left(3\pi x_{i+1}\right)\right]+\left(x_d-1\right)^2\sin^2\left(2\pi x_d\right)\right)+\sum_{i=1}^{d}u\left(x_i,5,100,4\right)\right]+\left[\sum_{i=1}^{d-1}\left[100\left(x_{i+1}-x_i^2\right)^2+\left(x_i-1\right)^2\right]\right]+\left[\sum_{i=1}^{d}\lvert x_i\rvert+\prod_{i=1}^{d}\lvert x_i\rvert\right] \) |
| \( f_{37}(x) \) | Ackley + Griewank + Rastrigin + Rosenbrock + Schwefel22 | \( f(x^{\ast})=d-1 \); \( x^{\ast}=(0,\dots,0) \) | \( [-100,100]^d \) | 30 | \( f(x)=\left[-20\exp\left(-0.2\sqrt{\frac{1}{d}\sum_{i=1}^{d}x_i^2}\right)-\exp\left(\frac{1}{d}\sum_{i=1}^{d}\cos\left(2\pi x_i\right)\right)+\exp(1)+20\right]+\left[\sum_{i=1}^{d}\frac{x_i^2}{4000}-\prod_{i=1}^{d}\cos\left(\frac{x_i}{\sqrt{i}}\right)+1\right]+\left[10d+\sum_{i=1}^{d}\left[x_i^2-10\cos\left(2\pi x_i\right)\right]\right]+\left[\sum_{i=1}^{d-1}\left[100\left(x_{i+1}-x_i^2\right)^2+\left(x_i-1\right)^2\right]\right]+\left[\sum_{i=1}^{d}\lvert x_i\rvert+\prod_{i=1}^{d}\lvert x_i\rvert\right] \) |
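For readers who want to experiment with the benchmark set, a few of the functions in the table can be written down directly. The sketch below is illustrative only: it covers Ackley, Griewank, and Sphere, takes the Ackley inner sum over all coordinates, and the names `ackley`, `griewank`, `sphere`, and `BENCHMARKS` are hypothetical and not part of the paper.

```python
import numpy as np


def ackley(x):
    """Ackley function; evaluates to 0 at x = (0, ..., 0)."""
    x = np.asarray(x, dtype=float)
    term1 = -20.0 * np.exp(-0.2 * np.sqrt(np.mean(x ** 2)))
    term2 = -np.exp(np.mean(np.cos(2.0 * np.pi * x)))
    return float(term1 + term2 + np.exp(1.0) + 20.0)


def griewank(x):
    """Griewank function; evaluates to 0 at x = (0, ..., 0)."""
    x = np.asarray(x, dtype=float)
    i = np.arange(1, x.size + 1)            # 1-based coordinate index
    return float(np.sum(x ** 2) / 4000.0
                 - np.prod(np.cos(x / np.sqrt(i))) + 1.0)


def sphere(x):
    """Sphere function; evaluates to 0 at x = (0, ..., 0)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


# Hypothetical registry: name -> (function, lower bound, upper bound),
# with the search ranges taken from the S column of the table.
BENCHMARKS = {
    "Ackley": (ackley, -30.0, 30.0),
    "Griewank": (griewank, -600.0, 600.0),
    "Sphere": (sphere, -5.0, 5.0),
}

if __name__ == "__main__":
    d = 30                                  # dimension used in the table
    for name, (func, low, high) in BENCHMARKS.items():
        print(name, func(np.zeros(d)), (low, high))
```

Keeping the bounds next to each function makes it easy to pass both to an initialization routine or an optimizer without repeating the table's values by hand.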

About this article

Cite this article

Cuevas, E., Escobar, H., Sarkar, R. et al. A new population initialization approach based on Metropolis–Hastings (MH) method. Appl Intell 53, 16575–16593 (2023). https://doi.org/10.1007/s10489-022-04359-6

DOI: https://doi.org/10.1007/s10489-022-04359-6