Abstract

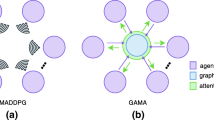

In multi-agent cooperative decision-making, each agent must learn together with its neighbors to obtain the optimal strategy. Agent actions can be classified into independent actions and interactive actions. The overall information and the neighborhood information available to an agent guide the selection of these two action types differently. In general, real-time interaction between the representations of overall information and neighborhood information facilitates cooperative decision-making. This paper therefore proposes a bidirectional real-time gain representation (BRGR) mechanism that explicitly enables such real-time interaction. On the one hand, a real-time, effective neighborhood information representation is incorporated into the overall information representation via an attention module, yielding a gain for the overall information. This gain provides a better understanding and use of neighborhood information and guides the agents' selection of independent actions. On the other hand, the real-time overall information representation is integrated into the neighborhood information representation, yielding a gain for the neighborhood information and ensuring that interactive actions are based on the agent's current state. This gain helps the agents select proper interactive actions. The proposed BRGR mechanism thus enables the agents to learn optimal cooperative strategies effectively. BRGR is applied to state-of-the-art multi-agent reinforcement learning algorithms. Experimental results show that BRGR significantly improves the performance of the base algorithms and offers a greater advantage in complex environments.
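The two gain directions described in the abstract could be sketched roughly as follows. This is an illustrative sketch only, not the authors' implementation: the abstract does not specify the architecture, so the scaled dot-product attention, the additive (residual) fusion, and the names `bidirectional_gain`, `overall`, and `neighbors` are all assumptions made for this example.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    z = x - np.max(x)
    e = np.exp(z)
    return e / e.sum()


def bidirectional_gain(overall, neighbors):
    """Hypothetical sketch of a bidirectional gain step.

    overall:   (d,)   representation of the agent's overall information
    neighbors: (k, d) representations of k neighbors' information
    """
    d = overall.shape[0]

    # Direction 1 (assumed form): attend over the neighbors using the
    # overall representation as the query, then add the attended summary
    # to the overall representation.
    scores = neighbors @ overall / np.sqrt(d)   # shape (k,)
    weights = softmax(scores)                   # attention weights, sum to 1
    overall_gain = overall + weights @ neighbors  # shape (d,)

    # Direction 2 (assumed form): inject the current overall representation
    # into every neighbor representation by broadcasting addition.
    neighbor_gain = neighbors + overall         # shape (k, d)

    return overall_gain, neighbor_gain, weights


# Toy usage with random vectors (sizes chosen arbitrarily).
rng = np.random.default_rng(0)
overall = rng.normal(size=4)
neighbors = rng.normal(size=(3, 4))
overall_gain, neighbor_gain, weights = bidirectional_gain(overall, neighbors)
```

In this sketch, `overall_gain` would feed the selection of independent actions and each row of `neighbor_gain` would feed the selection of the corresponding interactive action. A learned implementation would presumably replace the fixed dot products and additions with trainable projections.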

Notes

StarCraft II is a trademark of Blizzard Entertainment™.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (61976034, U1808206), the Dalian Science and Technology Innovation Fund (2022JJ12GX013), the Natural Science Foundation of Liaoning Province (2022YGJC20), and the Fundamental Research Funds for the Central Universities (DUT21YG106).

About this article

Cite this article

He, X., Ge, H., Sun, L. et al. BRGR: Multi-agent cooperative reinforcement learning with bidirectional real-time gain representation. Appl Intell 53, 19044–19059 (2023). https://doi.org/10.1007/s10489-022-04426-y

DOI: https://doi.org/10.1007/s10489-022-04426-y