Abstract

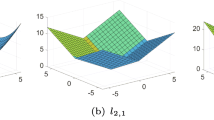

As in single-label learning, feature selection has become a crucial data pre-processing tool for multi-label learning because it reduces feature dimensionality and thereby mitigates the “curse of dimensionality”. In recent years, multi-label feature selection (MFS) methods based on manifold learning and sparse regression have demonstrated their superiority and attracted more attention than other methods. However, most of these methods exploit only the geometric structure of the data manifold and ignore the geometric structure of the feature manifold, so the learned manifold information is incomplete. To exploit the geometric information of the data and feature spaces simultaneously, this paper proposes a novel MFS method named dual-graph based multi-label feature selection (DGMFS). In the DGMFS framework, two nearest-neighbor graphs are constructed to form a dual-graph regularization that explores the geometric structures of both the data manifold and the feature manifold. We then combine the dual-graph regularization with a non-convex sparse regularization, the $\ell_{2,1-2}$-norm, to obtain a sparser solution for the weight matrix, which deals better with redundant features. Finally, we adopt the EM strategy to design an iterative updating optimization algorithm for DGMFS and provide a convergence analysis of the algorithm. Extensive experiments on ten benchmark multi-label data sets show that DGMFS is more effective than several state-of-the-art MFS methods.
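The two ingredients named in the abstract, a pair of nearest-neighbor graphs and a non-convex row-sparsity penalty, can be illustrated with a minimal NumPy sketch. Everything here is an assumption made for illustration rather than the authors' implementation: the helper names (`knn_graph`, `laplacian`, `dual_graph_penalty`, `l21_minus_l2`), the 0/1 edge weights, the trace form of the regularizer, and the reading of the $\ell_{2,1-2}$-norm as the $\ell_{2,1}$-norm minus the Frobenius norm.

```python
import numpy as np

def knn_graph(points, k=5):
    """Symmetric 0/1 k-nearest-neighbor affinity matrix over the rows of `points`."""
    n = points.shape[0]
    sq = np.sum(points ** 2, axis=1)
    # Pairwise squared Euclidean distances between rows.
    dist = sq[:, None] + sq[None, :] - 2.0 * points @ points.T
    np.fill_diagonal(dist, np.inf)           # a point is not its own neighbor
    idx = np.argsort(dist, axis=1)[:, :k]    # indices of the k closest rows
    S = np.zeros((n, n))
    S[np.repeat(np.arange(n), k), idx.ravel()] = 1.0
    return np.maximum(S, S.T)                # keep an edge if either end chose it

def laplacian(S):
    """Unnormalized graph Laplacian L = D - S."""
    return np.diag(S.sum(axis=1)) - S

def dual_graph_penalty(X, W, L_data, L_feat):
    """Assumed form: tr((XW)^T L_data (XW)) + tr(W^T L_feat W)."""
    P = X @ W                                # projected samples, shape (n, c)
    return np.trace(P.T @ L_data @ P) + np.trace(W.T @ L_feat @ W)

def l21_minus_l2(W):
    """Assumed l2,1-2 penalty: sum of row 2-norms minus the Frobenius norm."""
    return np.linalg.norm(W, axis=1).sum() - np.linalg.norm(W)

# Usage on random data: X has n samples (rows) and d features (columns).
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 6))
W = rng.normal(size=(6, 3))
L_data = laplacian(knn_graph(X, k=3))        # graph over samples
L_feat = laplacian(knn_graph(X.T, k=2))      # graph over features
print(dual_graph_penalty(X, W, L_data, L_feat), l21_minus_l2(W))
```

Under this reading, the penalty is zero exactly when at most one row of the weight matrix is non-zero, which is why it pushes harder toward row sparsity than the $\ell_{2,1}$-norm alone.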

Data Availability

All data included in this study are available from the first author and can also be found in the manuscript.

Code Availability

All code included in this study is available from the first author upon reasonable request.

Acknowledgements

This work is supported by grants from the Natural Science Foundation of Xiamen (No. 3502Z20227045), the National Natural Science Foundation of China (No. 61873067) and the Scientific Research Funds of Huaqiao University (No. 21BS121).

Funding

The first author is supported by the Natural Science Foundation of Xiamen (No.3502Z20227045) and the Scientific Research Funds of Huaqiao University (No.21BS121).

Ethics declarations

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflicts of interest

The authors declare that they have no conflict of interest.

Informed consent

Not applicable.

Appendix

Objective function (14) is derived as follows:

The second equality follows from the facts that $H = H^T = HH^T = H^TH$ and $L_d = D_d - S_d$.
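The two facts used in this step can be checked numerically. The sketch below assumes that H is the usual centering matrix $I - \frac{1}{n}\mathbf{1}\mathbf{1}^T$ (the source only states its symmetry and idempotence) and that $S_d$ is a symmetric non-negative affinity matrix with degree matrix $D_d$; the function names are hypothetical.

```python
import numpy as np

def centering_matrix(n):
    """Assumed form of H: I - (1/n) * 1 1^T, which is symmetric and idempotent."""
    return np.eye(n) - np.ones((n, n)) / n

def laplacian_trace(F, S):
    """tr(F^T L F) with L = D - S, where D is the diagonal degree matrix of S."""
    L = np.diag(S.sum(axis=1)) - S
    return np.trace(F.T @ L @ F)

def pairwise_smoothness(F, S):
    """(1/2) * sum_ij S_ij * ||f_i - f_j||^2, with f_i the i-th row of F."""
    diff = F[:, None, :] - F[None, :, :]
    return 0.5 * np.sum(S * np.sum(diff ** 2, axis=2))

# Usage: both identities hold up to floating-point error.
rng = np.random.default_rng(0)
H = centering_matrix(5)
print(np.allclose(H, H.T), np.allclose(H, H @ H.T), np.allclose(H, H.T @ H))

A = rng.random((5, 5))
S = (A + A.T) / 2.0            # symmetric, non-negative affinities
np.fill_diagonal(S, 0.0)
F = rng.normal(size=(5, 3))
print(np.isclose(laplacian_trace(F, S), pairwise_smoothness(F, S)))
```

The second check is the standard reason a Laplacian trace term acts as a smoothness penalty: it is a weighted sum of squared distances between rows joined by an edge.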

Objective function (17) is derived as follows:

About this article

Cite this article

Sun, Z., Xie, H., Liu, J. et al. Dual-graph with non-convex sparse regularization for multi-label feature selection. Appl Intell 53, 21227–21247 (2023). https://doi.org/10.1007/s10489-023-04515-6

DOI: https://doi.org/10.1007/s10489-023-04515-6