Abstract

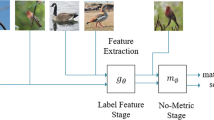

Traditional supervised learning models rely heavily on high-quality labeled samples. In many fields, training a model on limited labeled samples results in weak generalization. To address this problem, we propose a novel few-shot image classification method based on self-supervised learning and metric learning, which consists of two training steps: (1) training a feature extractor and projection head with strong representational ability using self-supervised learning; (2) taking the trained feature extractor and projection head as the initialization of the meta-learning model, and fine-tuning that model with the proposed loss functions. Specifically, we construct a pairwise-sample meta loss (ML) that accounts for the influence of each sample on the target sample in the feature space, and we propose a novel regularization technique, named resistance regularization, which is based on pairwise samples and serves as an auxiliary loss in the meta-learning model. Model performance is evaluated on the 5-way 1-shot and 5-way 5-shot classification tasks of mini-ImageNet and tiered-ImageNet. The results demonstrate that the proposed method achieves state-of-the-art performance.
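For readers unfamiliar with the evaluation protocol, the following is a minimal sketch of how a single N-way K-shot episode (for example, 5-way 1-shot) is commonly sampled from a labeled dataset. It is not the authors' code: the function name `sample_episode`, the toy dataset, and the choice of 15 query images per class are illustrative assumptions, not details given in the abstract.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way=5, k_shot=1, n_query=15, rng=None):
    """Sample one N-way K-shot episode (hypothetical helper, for illustration).

    labels: list with one class label per image in the dataset.
    Returns (support, query), each a list of (image_index, episode_label)
    pairs, where episode_label runs from 0 to n_way - 1.
    """
    if rng is None:
        rng = random.Random()

    # Group image indices by their class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Only classes with enough images for support + query can be used.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query images")

    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for episode_label, cls in enumerate(classes):
        picked = rng.sample(by_class[cls], k_shot + n_query)
        # The first k_shot images form the support set, the rest the query set.
        support += [(i, episode_label) for i in picked[:k_shot]]
        query += [(i, episode_label) for i in picked[k_shot:]]
    return support, query


# Toy dataset (assumption): 20 classes with 30 images each.
labels = [c for c in range(20) for _ in range(30)]
support, query = sample_episode(labels, n_way=5, k_shot=1, n_query=15,
                                rng=random.Random(0))
print(len(support), len(query))  # 5 75
```

In a 5-way 5-shot episode the only change is `k_shot=5`, which yields 25 support images. Reported accuracy in this setting is typically the mean over many such randomly sampled episodes.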

Acknowledgements

This work was funded by the National Natural Science Foundation of China under Grant 51774219 and by the Key R&D Projects in Hubei Province under Grant 2020BAB098.

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, W., Xie, L., Gan, P. et al. Self-supervised pairwise-sample resistance model for few-shot classification. Appl Intell 53, 20661–20674 (2023). https://doi.org/10.1007/s10489-023-04525-4

DOI: https://doi.org/10.1007/s10489-023-04525-4