Abstract

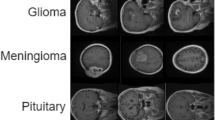

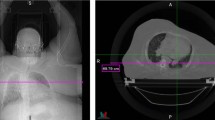

Generative Adversarial Networks (GANs) have gained significant attention in several computer vision tasks for generating high-quality synthetic data. Various medical applications, including diagnostic imaging and radiation therapy, can benefit greatly from synthetic data generation because data is scarce in this domain. However, medical image data is typically three-dimensional, and generative models suffer from the curse of dimensionality when generating such data. In this paper, we investigate the potential of GANs for generating connected 3D volumes. We propose an improved version of 3D α-GAN that incorporates various architectural enhancements. On a synthetic dataset of connected 3D spheres and ellipsoids, our model generates fully connected 3D shapes with geometrical characteristics similar to those of the training data. We also show that our 3D GAN model can successfully generate high-quality 3D tumor volumes and associated treatment specifications (e.g., isocenter locations). The generated samples have moment invariants similar to those of the training data and form fully connected 3D shapes, which confirms that improved 3D α-GAN implicitly learns the training data distribution and generates realistic-looking samples. These capabilities make improved 3D α-GAN a valuable source of synthetic medical image data that can support future research in this domain.

Data Availability

All the datasets are publicly available, and can be obtained using the described methods.

Ethics declarations

Conflict of Interests

No potential conflict of interest was reported by the authors.

Appendix

1.1 Notations

We provide a summary of mathematical notations used in the paper in Table 7.

1.2 Model architectures

Table 8 presents the details of the improved α-GAN discriminator's architecture, which uses the inception block shown in Table 9. The other networks have a structure similar to that in Table 2.

1.3 Summary of performance metrics

Table 10 presents a summary of the performance metrics, their descriptions, the range of possible values, and the desired target value. For some of the metrics, measuring performance requires comparing the corresponding data/metric distributions for training and test data, which is done via KL divergence. For instance, the convexity ratio is obtained for each data instance as the ratio of the number of generated voxels that are inside the convex shape to the total number of generated voxels. We expect the distribution of convexity ratios to be the same for the training data and the generated instances, so lower KL divergence values between these two distributions are desirable. Note that KL divergence values are in the range \([0,\infty)\).
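As an illustration of the distribution comparison described above, the following is a minimal sketch of a histogram-based KL divergence between two sets of per-instance metric values (for example, convexity ratios). The function names, the number of bins, the value range, and the smoothing constant `eps` are assumptions made for this example and are not taken from the paper.

```python
import math


def histogram(values, bins=20, lo=0.0, hi=1.0):
    """Count how many values fall into each of `bins` equal-width bins on [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        # Clamp the index so that v == hi lands in the last bin.
        idx = min(max(int((v - lo) / width), 0), bins - 1)
        counts[idx] += 1
    return counts


def kl_divergence(train_values, generated_values, bins=20, lo=0.0, hi=1.0, eps=1e-8):
    """KL(P || Q), where P and Q are smoothed histograms of the two samples.

    `eps` (an assumed smoothing constant) keeps empty bins from producing
    log(0) or a division by zero.
    """
    p = [c + eps for c in histogram(train_values, bins, lo, hi)]
    q = [c + eps for c in histogram(generated_values, bins, lo, hi)]
    p_sum, q_sum = sum(p), sum(q)
    return sum(
        (pi / p_sum) * math.log((pi / p_sum) / (qi / q_sum))
        for pi, qi in zip(p, q)
    )


# Hypothetical convexity ratios for training and generated instances.
train_ratios = [0.90, 0.92, 0.95, 0.97]
generated_ratios = [0.50, 0.55, 0.60, 0.97]
kl_same = kl_divergence(train_ratios, train_ratios)
kl_diff = kl_divergence(train_ratios, generated_ratios)
```

In this sketch, identical samples give a divergence of zero, and the value grows as the two histograms move apart, which matches the stated target of lower values.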

1.4 Visual comparison of training and generated samples

In this section, we present side-by-side illustrations of the training data and the samples generated by improved α-GAN to highlight the model's performance. Figures 23 and 24 illustrate training samples and the most similar generated samples for 3D connected volumes and tumor volumes. The generated shapes maintain voxel connectivity, but the provided samples are slightly less convex than the training data.
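The connectivity property mentioned above can be checked programmatically. The sketch below tests whether a set of occupied voxels forms a single connected component using breadth-first search. It assumes 6-connectivity (face-adjacent neighbors); the paper's exact neighborhood definition is not stated in this section, and the helper names are hypothetical.

```python
from collections import deque

# Face-adjacent neighbor offsets (6-connectivity, an assumption for this sketch).
NEIGHBOR_OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def is_fully_connected(voxels):
    """Return True if the occupied voxels form exactly one connected component."""
    voxels = set(voxels)
    if not voxels:
        return False
    start = next(iter(voxels))
    seen = {start}
    queue = deque([start])
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in NEIGHBOR_OFFSETS:
            neighbor = (x + dx, y + dy, z + dz)
            if neighbor in voxels and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    # Connected only if the search reached every occupied voxel.
    return len(seen) == len(voxels)


def sphere_voxels(radius, center=(0, 0, 0)):
    """Voxel coordinates of a solid sphere, as a stand-in for a generated shape."""
    cx, cy, cz = center
    span = range(-radius, radius + 1)
    return {
        (cx + x, cy + y, cz + z)
        for x in span for y in span for z in span
        if x * x + y * y + z * z <= radius * radius
    }


single_shape = sphere_voxels(3)
two_shapes = sphere_voxels(3) | sphere_voxels(3, center=(20, 0, 0))
single_ok = is_fully_connected(single_shape)
split_ok = is_fully_connected(two_shapes)
```

A single solid sphere passes the check, while two spheres placed far apart do not. Switching to 26-connectivity would only require extending `NEIGHBOR_OFFSETS` with the diagonal offsets.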

Figures 25 and 26 illustrate sample training and generated data instances obtained by improved α-GAN for 3D volumes and tumors filled with subspheres. The generated 3D volumes have smaller subspheres and thus more concentrated isocenters. However, the distribution of isocenters follows a uniform pattern similar to that of the training data.

About this article

Cite this article

Jafari, S.M., Cevik, M. & Basar, A. Improved α-GAN architecture for generating 3D connected volumes with an application to radiosurgery treatment planning. Appl Intell 53, 21050–21076 (2023). https://doi.org/10.1007/s10489-023-04567-8

DOI: https://doi.org/10.1007/s10489-023-04567-8