Abstract

Off-policy learning, where the goal is to learn about a policy of interest while following a different behavior policy, constitutes an important class of reinforcement learning problems. It is well-known that emphatic temporal-difference (TD) learning is a pioneering off-policy reinforcement learning method involving the use of the followon trace. Although the gradient emphasis learning (GEM) algorithm has recently been proposed to fix the problems of unbounded variance and large emphasis approximation error introduced by the followon trace from the perspective of stochastic approximation. This approach, however, is limited to a single gradient-TD2-style update instead of considering the update rules of other GTD algorithms. Overall, it remains an open question on how to better learn the emphasis for off-policy learning. In this paper, we rethink GEM and investigate introducing a novel two-time-scale algorithm called TD emphasis learning with gradient correction (TDEC) to learn the true emphasis. Further, we regularize the update to the secondary learning process of TDEC and obtain our final TD emphasis learning with regularized correction (TDERC) algorithm. We then apply the emphasis estimated by the proposed emphasis learning algorithms to the value estimation gradient and the policy gradient, respectively, yielding the corresponding emphatic TD variants for off-policy evaluation and actor-critic algorithms for off-policy control. Finally, we empirically demonstrate the advantage of the proposed algorithms on a small domain as well as challenging Mujoco robot simulation tasks. Taken together, we hope that our work can provide new insights into the development of a better alternative in the family of off-policy emphatic algorithms.

Similar content being viewed by others

Availability of Data and Materials

The datasets generated during and analysed during the current study are available from the corresponding author on reasonable request.

Code Availability

The code is publicly available at https://github.com/Caojiaqing0526/DeepRL.

References

Sutton RS, Barto AG (2018) Reinforcement learning: an introduction, 2nd edn. MIT press, Cambridge

Liang D, Deng H, Liu Y (2022) The treatment of sepsis: an episodic memory-assisted deep reinforcement learning approach. Appl Intell, pp 1–11

Narayanan V, Modares H, Jagannathan S, Lewis FL (2022) Event-Driven Off-Policy Reinforcement learning for control of interconnected systems. IEEE Trans Cybern 52(3):1936–1946

Meng W, Zheng Q, Shi Y, Pan G (2022) An Off-Policy trust region policy optimization method with monotonic improvement guarantee for deep reinforcement learning. IEEE Trans Neural Netw Learn Syst 33(5):2223–2235

Xu R, Li M, Yang Z, Yang L, Qiao K, Shang Z (2021) Dynamic feature selection algorithm based on Q-learning mechanism. Appl Intell 51(10):7233–7244

Li J, Xiao Z, Fan J, Chai T, Lewis FL (2022) Off-policy Q-learning: Solving Nash equilibrium of multi-player games with network-induced delay and unmeasured state. Automatica 136:110076

Jaderberg M, Mnih V, Czarnecki WM, Schaul T, Leibo JZ, Silver D, Kavukcuoglu K (2017) Reinforcement learning with unsupervised auxiliary tasks. In: Proceedings of the 5th International conference on learning representations

Zahavy T, Xu Z, Veeriah V, Hessel M, Oh J, van Hasselt H, Silver D, Singh S (2020) A self-tuning actor-critic algorithm. In: Advances in neural information processing systems, pp 20913–20924

Mnih V, Kavukcuoglu K, Silver D, Rusu AA, Veness J, Bellemare MG, Graves A, Riedmiller M, Fidjeland AK, Ostrovski G et al (2015) Human-level control through deep reinforcement learning. Nature 518(7540):529–533

Espeholt L, Soyer H, Munos R, Simonyan K, Mnih V, Ward T, Doron Y, Firoiu V, Harley T, Dunning I, Legg S, Kavukcuoglu K (2018) IMPALA: Scalable distributed deep-RL with importance weighted actor-learner architectures. In: Proceedings of the 35th International conference on machine learning, pp 1406–1415

Metelli AM, Russo A, Restelli M (2021) Subgaussian and differentiable importance sampling for Off-Policy evaluation and learning. In: Advances in neural information processing systems, pp 8119–8132

Kallus N, Uehara M (2020) Double reinforcement learning for efficient Off-Policy evaluation in markov decision processes. J Mach Learn Res 21(167):1–63

Puterman ML (2014) Markov decision processes: discrete stochastic dynamic programming. Wiley, Hoboken

Xie T, Ma Y, Wang Y (2019) Towards optimal Off-Policy evaluation for reinforcement learning with marginalized importance sampling. In: Advances in neural information processing systems, pp 9665–9675

Shen SP, Ma YJ, Gottesman O, Doshi-velez F (2021) State relevance for off-policy evaluation. In: Proceedings of the 38th International conference on machine learning, pp 9537–9546

Zhang S, Liu B, Whiteson S (2020) GradientDICE: Rethinking generalized Offline estimation of stationary values. In: Proceedings of the 37th International conference on machine learning, pp 11194–11203

Liu Y, Swaminathan A, Agarwal A, Brunskill E (2020) Off-Policy Policy gradient with stationary distribution correction. In: Uncertainty in artificial intelligence, pp 1180–1190

Zhang R, Dai B, Li L, Schuurmans D (2020) GenDICE: Generalized Offline estimation of stationary values. In: Proceedings of the 8th International conference on learning representations

Tsitsiklis JN, Van Roy B (1997) An analysis of temporal-difference learning with function approximation. IEEE Trans Auto Control 42(5):674–690

Baird L (1995) Residual algorithms: reinforcement learning with function approximation. In: Proceedings of the 12th International conference on machine learning, pp 30–37

Xu T, Yang Z, Wang Z, Liang Y (2021) Doubly robust Off-Policy actor-critic: convergence and optimality. In: Proceedings of the 38th International conference on machine learning, pp 11581–11591

Degris T, White M, Sutton RS (2012) Off-policy actor-critic, arXiv:1205.4839

Sutton RS, Mahmood AR, White M (2016) An emphatic approach to the problem of off-policy temporal-difference learning. J Mach Learn Res 17(1):2603–2631

Imani E, Graves E, White M (2018) An Off-policy policy gradient theorem using emphatic weightings. In: Advances in neural information processing systems, pp 96–106

Zhang S, Liu B, Yao H, Whiteson S (2020) Provably convergent Two-Timescale Off-Policy Actor-Critic with function approximation. In: Proceedings of the 37th International Conference on Machine Learning, 11204–11213

Sutton RS, Maei HR, Precup D, Bhatnagar S, Silver D, Szepesvári C, Wiewiora E (2009) Fast gradient-descent methods for temporal-difference learning with linear function approximation. In: Proceedings of the 26th International conference on machine learning, pp 993–1000

Sutton RS, Szepesvári C, Maei HR (2008) A convergent o(n) temporal-difference algorithm for off-policy learning with linear function approximation. In: Advances in neural information processing systems, pp 1609–1616

Maei HR (2011) Gradient temporal-difference learning algorithms, Phd thesis, University of Alberta

Xu T, Zou S, Liang Y (2019) Two time-scale off-policy TD learning: Non-asymptotic analysis over Markovian samples. In: Advances in neural information processing systems, pp 10633–10643

Ma S, Zhou Y, Zou S (2020) Variance-reduced off-policy TDC Learning: Non-asymptotic convergence analysis. In: Advances in neural information processing systems, pp 14796–14806

Ghiassian S, Patterson A, Garg S, Gupta D, White A, White M (2020) Gradient temporal-difference learning with regularized corrections. In: Proceedings of the 37th International conference on machine learning, pp 3524–3534

Sutton RS (1988) Learning to predict by the methods of temporal differences. Mach Learn 3 (1):9–44

Jiang R, Zahavy T, Xu Z, White A, Hessel M, Blundell C, van Hasselt H (2021) Emphatic algorithms for deep reinforcement learning. In: Proceedings of the 38th International conference on machine learning, pp 5023–5033

Jiang R, Zhang S, Chelu V, White A, van Hasselt H (2022) Learning expected emphatic traces for deep RL. In: Proceedings of the 36th AAAI Conference on artificial intelligence, pp 12882–12890

Guan Z, Xu T, Liang Y (2022) PER-ETD: A polynomially efficient emphatic temporal difference learning method. In: 10Th international conference on learning representations

Yu H (2015) On convergence of emphatic Temporal-Difference learning. In: Proceedings of The 28th Conference on learning theory, pp 1724–1751

Yu H (2016) Weak convergence properties of constrained emphatic temporal-difference learning with constant and slowly diminishing stepsize. J Mach Learn Res 17(1):7745–7802

Ghiassian S, Rafiee B, Sutton RS (2016) A first empirical study of emphatic temporal difference learning. In: Advances in neural information processing systems

Gu X, Ghiassian S, Sutton RS (2019) Should all temporal difference learning use emphasis? arXiv:1903.00194

Hallak A, Tamar A, Munos R, Mannor S (2016) Generalized emphatic temporal difference learning: Bias-Variance analysis. In: Proceedings of the 30th AAAI Conference on artificial intelligence, pp 1631–1637

Cao J, Liu Q, Zhu F, Fu Q, Zhong S (2021) Gradient temporal-difference learning for off-policy evaluation using emphatic weightings. Inf Sci 580:311–330

Zhang S, Whiteson S (2022) Truncated emphatic temporal difference methods for prediction and control. J Mach Learn Res 23(153):1–59

van Hasselt H, Madjiheurem S, Hessel M, Silver D, Barreto A, Borsa D (2021) Expected eligibility traces. In: Proceedings of the 35th AAAI Conference on artificial intelligence, pp 9997–10005

Hallak A, Mannor S (2017) Consistent on-line off-policy evaluation. In: Proceedings of the 34th International conference on machine learning, pp 1372–1383

Liu Q, Li L, Tang Z, Zhou D (2018) Breaking the curse of horizon: Infinite-horizon off-policy estimation. In: Advances in neural information processing systems, pp 5361–5371

Gelada C, Bellemare MG (2019) Off-Policy Deep reinforcement learning by bootstrapping the covariate shift. In: Proceedings of the 33th AAAI Conference on artificial intelligence, pp 3647–3655

Nachum O, Chow Y, Dai B, Li L (2019) DualDICE: Behavior-Agnostic estimation of discounted stationary distribution corrections. In: Advances in neural information processing systems, pp 2315–2325

Zhang S, Yao H, Whiteson S (2021) Breaking the deadly triad with a target network. In: Proceedings of the 38th International conference on machine learning, pp 12621–12631

Zhang S, Wan Y, Sutton RS, Whiteson S (2021) Average-Reward Off-Policy Policy evaluation with function approximation. In: Proceedings of the 38th International conference on machine learning, pp 12578–12588

Wang T, Bowling M, Schuurmans D, Lizotte DJ (2008) Stable dual dynamic programming. In: Advances in neural information processing systems, pp 1569–1576

Hallak A, Mannor S (2017) Consistent on-line off-policy evaluation. In: Proceedings of the 34th International conference on machine learning, pp 1372–1383

Zhang S, Veeriah V, Whiteson S (2020) Learning retrospective knowledge with reverse reinforcement learning. In: Advances in neural information processing systems, pp 19976– 19987

Precup D, Sutton RS, Dasgupta S (2001) Off-Policy Temporal difference learning with function approximation. In: Proceedings of the 18th International conference on machine learning, pp 417–424

Zhang S, Boehmer W, Whiteson S (2019) Generalized off-policy actor-critic. In: Advances in neural information processing systems, pp 1999–2009

Robbins H, Monro S (1951) A stochastic approximation method. Ann Math Stat, pp 400–407

Levin DA, Peres Y (2017) Markov chains and mixing times, vol 107, American Mathematical Soc.

Kolter JZ (2011) The fixed points of Off-Policy TD. In: Advances in neural information processing systems, pp 2169–2177

White A, White M (2016) Investigating practical linear temporal difference learning. In: Proceedings of the 15th International conference on autonomous agents & multiagent systems, pp 494–502

Brockman G, Cheung V, Pettersson L, Schneider J, Schulman J, Tang J, Zaremba W (2016) Openai gym, arXiv:1606.01540

Borkar VS, Meyn SP (2000) The ODE method for convergence of stochastic approximation and reinforcement learning. SIAM J Control Optim 38(2):447–469

Golub GH, Van Loan CF (2013) Matrix computations, vol 3, Johns Hopkins University Press

White M (2017) Unifying task specification in reinforcement learning. In: Proceedings of the 34th International conference on machine learning, pp 3742–3750

Acknowledgements

We would like to thank Fei Zhu, Leilei Yan, and Xiaohan Zheng for their technical support. We would also like to thank the computer resources and other support provided by the Machine Learning and Image Processing Research Center of Soochow University.

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 61772355, 61702055, 61876217, 62176175), Jiangsu Province Natural Science Research University major projects (18KJA520011, 17KJA520004), Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, Suzhou Industrial application of basic research program part (SYG201422), the Priority Academic Program Development of Jiangsu Higher Education Institutions (PAPD).

Author information

Authors and Affiliations

Contributions

Jiaqing Cao: Conceptualization, Methodology, Software, Validation, Writing-original draft, Writing-review & editing. Quan Liu: Conceptualization, Resources, Writing-review & editing, Validation, Project administration, Funding acquisition, Supervision. Lan Wu: Investigation, Software, Visualization. Qiming Fu: Investigation, Software, Visualization. Shan Zhong: Investigation, Software, Visualization. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 A.1 Proof of theorem 1

Proof

This proof is inspired by Sutton et al. [26], Maei [28], and Ghiassian et al. [31]. We provide the full proof here for completeness. Let \({\boldsymbol {y}_{t}^{\top }} \doteq [\boldsymbol {\kappa }^{\top }_{t}, \boldsymbol {w}^{\top }_{t}]\), we begin by rewriting the TDERC updates (20) and (21) as

where

Then the limiting behavior of TDERC is governed by

The iteration equation (A1) at this time can be rewritten as yt+ 1 = yt + ζt(h(yt) + Lt+ 1), where \(h({{\boldsymbol {y}}}) \doteq {\mathbf {G}}{{\boldsymbol {y}}} + {\boldsymbol {g}}\) and \({L_{t + 1}} \doteq ({{\mathbf {G}}_{t+1}} - {\mathbf {G}}){{\boldsymbol {y}}_{t}} + ({{\boldsymbol {g}}_{t+1}} - {\boldsymbol {g}})\) is the noise sequence. Let \({{\Omega }_{t}} \doteq ({{\boldsymbol {y}}_{1}}, {L_{1}} ,..., {{\boldsymbol {y}}_{t-1}}, {L_{t}})\) be σ-fields generated by the quantities yi,Li,i ≤ k,k ≥ 1.

Now we apply the conclusions from Theorem 2.2 provided in Borkar and Meyn [60], i.e., the following preconditions must be satisfied: (i) The function h(y) is Lipschitz, and there exists \({h_{\infty } }({\boldsymbol {y}}) \doteq \lim \limits _{c \to \infty } h(c{\boldsymbol {y}})/c\) for all \({\boldsymbol {y}} \in {{\mathbb {R}}^{2n}}\); (ii) The sequence (Lt,Ωt) is a martingale difference sequence, and \({\mathbb {E}}[{\left \| {{M_{t + 1}}} \right \|^{2}}\vert {{\Omega }_{t}}] \le K(1 + {\left \| {{{\boldsymbol {y}}}} \right \|^{2}})\) holds for some constant K > 0 and any initial parameter vector y1; (iii) The nonnegative stepsize sequence at satisfies \(\sum \nolimits _{t} {{a_{t}}} = \infty \) and \(\sum \nolimits _{t} {{a_{t}^{2}}} < + \infty \); (iv) The origin is a globally asymptotically stable equilibrium for the ordinary differential equation (ODE) \(\dot {\boldsymbol {y}} = {h_{\infty } }({\boldsymbol {y}})\); and (v) The ODE \(\dot {\boldsymbol {y}} = {h}({\boldsymbol {y}})\) has a unique globally asymptotically stable equilibrium. First for condition (i), because \({\left \| {h({{\boldsymbol {y}}_{i}}) - h({{\boldsymbol {y}}_{j}})} \right \|^{2}} = {\left \| {{\mathbf {G}}({{\boldsymbol {y}}_{i}} - {{\boldsymbol {y}}_{j}})} \right \|^{2}} \le {\mathbf {G}}{\left \| {({{\boldsymbol {y}}_{i}} - {{\boldsymbol {y}}_{j}})} \right \|^{2}}\) for ∀yi, yj, therefore h(⋅) is Lipschitz. Meanwhile, \(\lim \limits _{c \to \infty } h(c{\boldsymbol {y}})/c = \lim \limits _{c \to \infty } (c{\mathbf {G}\boldsymbol {y}} + {\boldsymbol {g}})/c = \lim \limits _{c \to \infty } {\boldsymbol {g}}/c + \lim \limits _{c \to \infty } {\mathbf {G}\boldsymbol {y}}\). Assumption 5 ensures that g is bounded. Thus, when \(c \to \infty \), \(\lim \limits _{c \to \infty } {\boldsymbol {g}}/c = 0\), \(\lim \limits _{c \to \infty } h(c{\boldsymbol {y}})/c = \lim \limits _{c \to \infty } {\mathbf {G}\boldsymbol {y}}\). Next, we establish that condition (ii) is true: because

let \(K = {\max \limits } \{ {\left \| {({{\mathbf {G}}_{t}} - {\mathbf {G}})} \right \|^{2}}, {\left \| {({{\boldsymbol {g}}_{t}} - {\boldsymbol {g}})} \right \|^{2}}\}\), we have \({\left \| {{L_{t + 1}}} \right \|^{2}} \le K(1 + {\left \| {{{\boldsymbol {y}}_{t}}} \right \|^{2}})\). As a result, we see that condition (ii) is met. Further, condition (iii) is satisfied by Assumption 6 in Theorem 1. Finally, for conditions (iv) and (v), we need to prove that the real parts of all the eigenvalues of G are negative. We define \(\chi \in \mathbb {C}\) as the eigenvalue of matrix G. From Box 1, we can obtain

Then there must exist a non-zero vector \({\mathbf {x}} \in {{\mathbb {C}}^{n}}\) such that x∗(G − χI)x = 0, which is equivalent to

We define \({b_{c}} \doteq \frac {{{{\mathbf {x}}^{\ast }}{\mathbf {C}}{\mathbf {x}}}}{{{{\left \| {\mathbf {x}} \right \|}^{2}}}}\), \({b_{a}} \doteq \frac {{{{\mathbf {x}}^{\ast }}{{\bar {\mathbf {A}}}}{{\bar {\mathbf {A}}}}^{\top {\mathbf {x}}}}}{{{{\left \| {\mathbf {x}} \right \|}^{2}}}}\), and \({{\chi }_{z}} \doteq \frac {{{{\mathbf {x}}^{\ast }}{{\bar {\mathbf {A}}}}{\mathbf {x}}}}{{{{\left \| {\mathbf {x}} \right \|}^{2}}}} \equiv {{\chi }_{r}} + {{\chi }_{c}}i\) for \({{\chi }_{r}} , {{\chi }_{c}} \in \mathbb {R}\). The constants bc and ba are real and greater than zero for all nonzero vectors x. Then the above equation can be written as

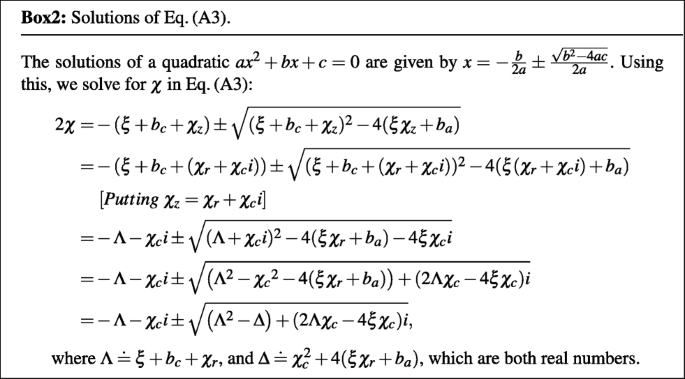

Through the full derivation of Box 2, we solve for χ in (A3) to obtain \(2\chi = -{\Lambda } - {\chi _{c}}i \pm \sqrt {({\Lambda }^{2}-{\Delta }) + (2{\Lambda }{\chi _{c}} - 4 \xi {\chi _{c}})i}\), where we introduced intermediate variables Λ = ξ + bc + χr, and \({\Delta } = {{\chi ^{2}_{c}}} + 4(\xi {{\chi }_{r}}+{b_{a}})\), which are both real numbers.

Then using \({\text {Re}}(\sqrt {x+yi})= \pm \frac {1}{{\sqrt 2 }} \sqrt {\sqrt {x^{2}+y^{2}} + x}\), we obtain \({\text {Re}}(2\chi ) = -{\Lambda } \pm \frac {1}{{\sqrt 2 }} \sqrt {\Upsilon }\), with the intermediate variable \({\Upsilon } = \sqrt {({\Lambda }^{2}-{\Delta })^{2} + (2{\Lambda }{\chi _{c}} - 4 \xi {\chi _{c}})^{2}} + ({\Lambda }^{2}-{\Delta })\). Next we obtain conditions on ξ such that the real parts of both the values of χ are negative for all nonzero vectors \({\mathbf {x}} \in {{\mathbb {C}}^{n}}\).

Case 1: We first consider \({\text {Re}}(2\chi ) = -{\Lambda } + \frac {1}{{\sqrt 2 }} \sqrt {\Upsilon }\). Then Re(χ) < 0 is equivalent to

Since the right hand side of this inequality is clearly positive, we obtain the first condition on ξ:

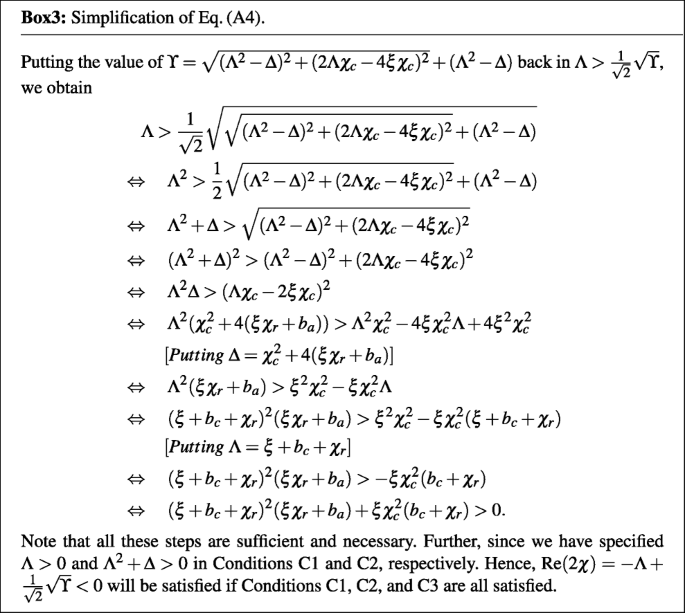

Then simplifying (A4) and putting back the values for the intermediate variables (see Box 3 for details), we obtain

Again, since the right hand side of the above inequality is positive, then we obtain the second condition on ξ:

Continuing to simplify the inequality in (A4) (again see Box 3 for details), we end up with the third and final condition:

If χr > 0 for all \({\mathbf {x}} \in {{\mathbb {R}}}\), then each of the Conditions C1, C2, and C3 hold true and consequently TDERC converges. This case corresponds to the on-policy setting where the matrix \({\bar {\mathbf {A}}}\) is positive definite.

Now we show that TDERC converges even when \({\bar {\mathbf {A}}}\) is not positive definite (the case where χr < 0). Clearly, if we assume ξχr + ba > 0 and ξ ≥ 0, then each of the Conditions C1, C2, and C3 again hold true and TDERC would converge. As a result we obtain the following bound on ξ:

with \({\mathbf {U}} \doteq \frac {{{\bar {\mathbf {A}}}}+{{\bar {\mathbf {A}}}}^{\top }}{2}\). This bound can be made more interpretable. Using the substitution \({\mathbf {y}} = {{\mathbf {U}}^{\frac {1}{2}}}{\mathbf {x}}\) we obtain

where \({{\chi }_{\min \limits }}\) and \({{\chi }_{\max \limits }}\) represent the minimum and maximum eigenvalues of the matrix, respectively. Finally, we can write the bound in (A6) equivalently as

If this bound are satisfied by ξ then the real parts of all the eigenvalues of G would be negative and TDERC will converge.

Case 2: Next we consider \({\text {Re}}(2\chi ) = -{\Lambda } - \frac {1}{{\sqrt 2 }} \sqrt {\Upsilon }\). The second term is always negative and we assumed Λ > 0 in Condition C1. As a result, Re(χ) < 0 and we are done. Therefore, we obtain that the real part of the eigenvalues of G are negative and consequently condition (iv) above is satisfied. To prove that condition (v) holds true, note that since we assumed A + ξI to be nonsingular, G is also nonsingular. This means that for the ODE \(\dot {\boldsymbol {y}} = {h_{\infty } }({\boldsymbol {y}})\), y∗ = −G− 1g is the unique asymptotically stable equilibrium with \({\bar {\mathbf {V}}}({\boldsymbol {y}}) \doteq \frac {1}{2} ({\mathbf {G}}{{\boldsymbol {y}}} + {\boldsymbol {g}})^{\top }({\mathbf {G}}{{\boldsymbol {y}}} + {\boldsymbol {g}})\) as its associated strict Lyapunov function. □

1.2 A.2 Proof of lemma 2

Proof

As shown by y Sutton et al. [23], \(\mathbf {D}_{\bar {\boldsymbol {m}}}(\mathbf {I} - \gamma {\mathbf {P}_{\pi }})\) is positive definite, i.e., for any real vector y, we have \(g({\textbf {y}}) \doteq {\textbf {y}}^{\top }\mathbf {D}_{\bar {\boldsymbol {m}}}(\mathbf {I} - \gamma {\mathbf {P}_{\pi }}){\textbf {y}}>0\). Since g(y) is a continuous function, it obtains its minimum value in the compact set \({\mathcal {Y}} \doteq \{{\textbf {y}}: \left \| {\textbf {y}} \right \| = 1\}\), i.e., there exists a positive constant 𝜗0 > 0 such that g(y) ≥ 𝜗0 > 0 holds for any \({\textbf {y}} \in {\mathcal {Y}}\). In particular, for any \({\textbf {y}} \in {\mathbb {R}}^{\vert \mathcal {S} \vert }\), we have \(g(\frac {{\textbf {y}}}{{\left \| {\textbf {y}} \right \|}}) \ge {{\vartheta }_{0}}\), i.e., \({\textbf {y}}^{\top }\mathbf {D}_{\bar {\boldsymbol {m}}}(\mathbf {I} - \gamma {\mathbf {P}_{\pi }}){\textbf {y}} \ge {{\vartheta }_{0}}{\left \| {\textbf {y}} \right \|}^{2}\). Hence, we have

for any y.

Let \({{\vartheta }} \doteq \frac {{{\vartheta }_{0}}}{{\left \| {\mathbf {I} - \gamma {\mathbf {P}_{\pi }}} \right \|}}\). Clearly, we can obtain that when \({\left \| {{\mathbf {D}_{\epsilon }}} \right \|} < {{\vartheta }}\) holds, \({\mathbf {\Phi }^{\top }}{\mathbf {D}_{\boldsymbol {m}_{\boldsymbol {w}}}}(\mathbf {I} - \gamma {\mathbf {P}_{\pi }})\mathbf {\Phi }\) is positive definite, which, together with Assumption 2, finally implies that A is positive definite and completes the proof. □

1.3 A.3 Proof of theorem 2

Proof

To demonstrate the equivalence, we first show that any GEM and TDEC fixed point is a TDERC fixed point. Clearly, when \({\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast } = {\bar {\mathbf {b}}}\), then \({\bar {\mathbf {b}}}-{\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast }=0\) and so \({\bar {\mathbf {A}}}^{\top }_{\xi } {\mathbf {C}}^{-1}_{\xi } ({\bar {\mathbf {b}}}-{\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast })=0\). Finally, we simply need to show that under the additional conditions, a TDERC fixed point is a fixed point of GEM and TDEC. if − ξ does not equal any of the eigenvalues of \({\bar {\mathbf {A}}}\), then \({\bar {\mathbf {A}}}_{\xi } = {\bar {\mathbf {A}}} + \xi {\mathbf {I}}\) is a full rank matrix. Because both \({\bar {\mathbf {A}}}_{\xi }\) and Cξ are full rank, the nullspace of \({\bar {\mathbf {A}}}^{\top }_{\xi } {\mathbf {C}}^{-1}_{\xi } ({\bar {\mathbf {b}}}-{\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast })\) equals to the nullspace of \({\bar {\mathbf {b}}}-{\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast }\). Therefore, w∗ satisfies \({\bar {\mathbf {A}}}^{\top }_{\xi } {\mathbf {C}}^{-1}_{\xi } ({\bar {\mathbf {b}}}-{\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast })=0\) iff \({\bar {\mathbf {b}}}-{\bar {\mathbf {A}}}{\boldsymbol {w}}^{\ast }=0\). □

1.4 A.4 Proof of proposition 1

Proof

We begin by obtaining that the unbiased fixed point of ETD is

For the sake of brevity, we define \(\mathbf {H} \doteq \mathbf {I} - \gamma {\mathbf {P}_{\pi }}\). Using \(\left \| {{{\mathbf {X}}^{- 1}} - {\mathbf {Y}^{- 1}}} \right \| \le \left \| {{{\mathbf {X}}^{- 1}}} \right \|\left \| {{\mathbf {Y}^{- 1}}} \right \|\left \| {{\mathbf {X}} - \mathbf {Y}} \right \|\), we have

Now we utilize the results from Corollary 8.6.2 presented in Golub and Van Loan [61]: For any two matrices X and Y, we have

where \({\sigma _{{\min \limits } }}(\cdot )\) denotes the minimum eigenvalue of the matrix. Therefore, if X is nonsingular, and there exist a constant \({c_{0}} \in (0, {\sigma _{{\min \limits } }}(\mathbf {X}))\) that satisfies \(\left \| {\mathbf {Y}}\right \| \le {\sigma _{\min \limits }}(\mathbf {X}) - {c_{0}}\), then we can get

Here we consider \({\mathbf {\Phi }^{\top }}{\mathbf {D}_{\bar {\boldsymbol {m}}}}\mathbf {H}\mathbf {\Phi }\) as X and \({\mathbf {\Phi }^{\top } }{\mathbf {D}_{\epsilon }}\mathbf {H}\mathbf {\Phi }\) as Y. Clearly, if

we can obtain

The non-singularity of \({\mathbf {\Phi }^{\top }}{\mathbf {D}_{\bar {\boldsymbol {m}}}}\mathbf {H}\mathbf {\Phi }\) is proved in Sutton et al. [23]. Hence, combining the spectral radius bound of \({\mathbf {\Phi }^{\top }}{\mathbf {D}_{\bar {\boldsymbol {m}}}}\mathbf {H}\mathbf {\Phi }\) with (A8) and (A9), there exists a constant c1 > 0 such that

As a result, we obtain

Further, according to Lemma 1 and Theorem 1 in White [62], there exists a constant c2 > 0 such that

Finally, using the equivalence between norms, we obtain that there exists a constant c3 > 0 such that

which completes the proof. □

1.5 A.5 Features of Baird’s counterexample

Original Features:

According to Sutton and Barto [1], we have \({\boldsymbol {\phi }}({s_{1}}) \doteq {[2,0,0,0,0,0,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{2}}) \doteq {[0,2,0,0,0,0,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{3}}) \doteq {[0,0,2,0,0,0,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{4}}) \doteq {[0,0,0,2,0,0,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{5}}) \doteq {[0,0,0,0,2,0,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{6}}) \doteq {[0,0,0,0,0,2,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{7}}) \doteq {[0,0,0,0,0,0,1,2]^ \top }\).

One-Hot Features:

\({\boldsymbol {\phi }}({s_{1}}) \doteq {[1,0,0,0,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{2}}) \doteq {[0,1,0,0,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{3}}) \doteq {[0,0,1,0,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{4}}) \doteq {[0,0,0,1,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{5}}) \doteq {[0,0,0,0,1,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{6}}) \doteq {[0,0,0,0,0,1,0]^ \top }\), \({\boldsymbol {\phi }}({s_{7}}) \doteq {[0,0,0,0,0,0,1]^ \top }\).

Zero-Hot Features:

\({\boldsymbol {\phi }}({s_{1}}) \doteq {[0,1,1,1,1,1,1]^ \top }\), \({\boldsymbol {\phi }}({s_{2}}) \doteq {[1,0,1,1,1,1,1]^ \top }\), \({\boldsymbol {\phi }}({s_{3}}) \doteq {[1,1,0,1,1,1,1]^ \top }\), \({\boldsymbol {\phi }}({s_{4}}) \doteq {[1,1,1,0,1,1,1]^ \top }\), \({\boldsymbol {\phi }}({s_{5}}) \doteq {[1,1,1,1,0,1,1]^ \top }\), \({\boldsymbol {\phi }}({s_{6}}) \doteq {[1,1,1,1,1,0,1]^ \top }\), \({\boldsymbol {\phi }}({s_{7}}) \doteq {[1,1,1,1,1,1,0]^ \top }\).

Aliased Features:

\({\boldsymbol {\phi }}({s_{1}}) \doteq {[2,0,0,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{2}}) \doteq {[0,2,0,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{3}}) \doteq {[0,0,2,0,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{4}}) \doteq {[0,0,0,2,0,0]^ \top }\), \({\boldsymbol {\phi }}({s_{5}}) \doteq {[0,0,0,0,2,0]^ \top }\), \({\boldsymbol {\phi }}({s_{6}}) \doteq {[0,0,0,0,0,2]^ \top }\), \({\boldsymbol {\phi }}({s_{7}}) \doteq {[0,0,0,0,0,2]^ \top }\).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cao, J., Liu, Q., Wu, L. et al. Temporal-difference emphasis learning with regularized correction for off-policy evaluation and control. Appl Intell 53, 20917–20937 (2023). https://doi.org/10.1007/s10489-023-04579-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-023-04579-4