Abstract

Traffic forecasting is a typical spatio-temporal graph modeling problem and has become one of the key technical issues in modern intelligent transportation systems. However, existing methods do not capture long-range spatial and temporal characteristics well because of the complexity and heterogeneity of traffic flows. In this paper, a new deep learning framework called Graph Convolutional Dynamic Recurrent Network with Attention (GCDRNA) is proposed to predict the traffic state in a traffic network. GCDRNA mainly consists of two components: a Graph Convolutional with Attention (GCA) block and a Dynamic GRU with Attention (DGRUA) block. The GCA block captures both global and local spatial correlations of the traffic flows through k-hop GC, similarity GC and spatial attention modules. The DGRUA block captures the long-term temporal correlation of the traffic flows through Dynamic GRU (DGRU) and Node Attention Unit (NAU) modules. Experimental results on two public real-world traffic datasets show that GCDRNA achieves the best prediction performance among the compared baseline models.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This research is supported by the National Natural Science Foundation of China under Grant Nos. 61872191 and 41571389.

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest or competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Junxia Fu, Hongyan Ji and Linfeng Liu contributed equally to this work.

Complexity Analysis of GCDRNA

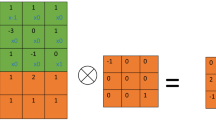

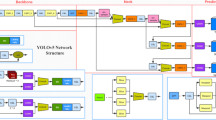

As shown in Fig. 2, GCDRNA mainly consists of two blocks: the GCA block and the DGRUA block. The GCA block consists of the k-hop GC, similarity GC and spatial attention modules, and the DGRUA block consists of the DGRU and NAU modules. We analyze the complexity of these modules one by one as follows.

Since the time complexities of Eqs. (2) and (3) are \(O(KN^3)\) and \(O(N^2C)\), respectively, the time complexity of the k-hop GC module is \(O(K(KN^3+N^2C))\). Next, the time complexities of Eqs. (4), (5) and (6) are \(O(N^2C)\), \(O(KNC)\) and \(O(K^2NC)\), respectively, so the time complexity of the spatial attention module is \(O(K^2NC+N^2C)\). Similarly, the time complexity of the similarity GC module is \(O(N^2(D+C))\) according to Eqs. (7) and (8). Putting the time complexities of these three modules together and considering that \(C, D \ll N\) as usual, we obtain the time complexity of the GCA block as \(O(K^2N^3)\). In the same way, the space complexity of the GCA block is \(O(KN^2)\).
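The bookkeeping behind the GCA bound can be written out term by term. This is only a sketch: the shorthand \(T_{\text{k-hop}}\), \(T_{\text{att}}\), \(T_{\text{sim}}\) and \(T_{\text{GCA}}\) is introduced here for illustration and does not appear in the paper's equations, and \(K \ge 1\) is assumed.

```latex
\begin{align*}
T_{\text{k-hop}} &= O\bigl(K(KN^3 + N^2C)\bigr) = O(K^2N^3 + KN^2C),\\
T_{\text{att}}   &= O(N^2C) + O(KNC) + O(K^2NC) = O(K^2NC + N^2C),\\
T_{\text{sim}}   &= O\bigl(N^2(D + C)\bigr),\\
T_{\text{GCA}}   &= T_{\text{k-hop}} + T_{\text{att}} + T_{\text{sim}}
                  = O(K^2N^3) \quad \text{for } C, D \ll N.
\end{align*}
```

Every lower-order term is absorbed by \(K^2N^3\) under these assumptions: for example, \(KN^2C \le KN^3 \le K^2N^3\), \(K^2NC \le K^2N^3\), and \(N^2(D+C) \le 2N^3\).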

The time complexities of Eqs. (10)-(13), (15) and (17) are all \(O(NCQ)\), and the time complexities of Eqs. (14), (16) and (18) are all \(O(NQ)\). Thus, the time complexity of the DGRU module is \(O(NCQ)\). Similarly, the time complexity of the NAU module is \(O(NQQ^{\prime })\). Since \(Q\) and \(Q^{\prime }\) are, like \(C\), no greater than \(N\), the time complexity of the DGRUA block can be bounded by \(O(N^3)\). With the same approach, we obtain the space complexity of the DGRUA block as \(O(N^2)\).
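The same term-by-term view applies to the DGRUA block. As a hedged sketch (the names \(T_{\text{DGRU}}\), \(T_{\text{NAU}}\) and \(T_{\text{DGRUA}}\) are illustrative shorthand, not the paper's notation):

```latex
\begin{align*}
T_{\text{DGRU}}  &= O(NCQ) + O(NQ) = O(NCQ),\\
T_{\text{NAU}}   &= O(NQQ^{\prime}),\\
T_{\text{DGRUA}} &= O(NCQ + NQQ^{\prime})
                  \subseteq O(N \cdot N \cdot N) = O(N^3)
                  \quad \text{for } C, Q, Q^{\prime} \le N.
\end{align*}
```

Note that \(O(N^3)\) is an upper bound here rather than a tight estimate: when \(C\), \(Q\) and \(Q^{\prime}\) are much smaller than \(N\), the actual cost of this block grows more slowly than \(N^3\).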

Finally, according to the above analysis, the time complexity of GCDRNA is \(O(K^2N^3)\) and its space complexity is \(O(KN^2)\). When K is small, the time and space complexities of GCDRNA are approximately \(O(N^3)\) and \(O(N^2)\), respectively, which are similar to those of most related graph convolution models for traffic prediction. Therefore, the computational cost of the proposed GCDRNA is moderate and acceptable.
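As a rough numerical sanity check of this conclusion, the leading terms from the analysis can be evaluated for concrete sizes. The sketch below is not part of the paper: the function names are hypothetical, constant factors are dropped, and the sizes (K=3, N=200, C=32, D=16, Q=Q'=64) are made-up values chosen only to satisfy the stated assumption that C, D, Q and Q' are small relative to N.

```python
def gcdrna_time_terms(K, N, C, D, Q, Q_prime):
    """Evaluate each module's big-O expression with constants dropped.

    The expressions are copied from the complexity analysis; the
    returned numbers are operation-count proxies, not measured costs.
    """
    return {
        "k_hop_gc": K * (K * N**3 + N**2 * C),
        "spatial_attention": K**2 * N * C + N**2 * C,
        "similarity_gc": N**2 * (D + C),
        "dgru": N * C * Q,
        "nau": N * Q * Q_prime,
    }


def dominant_term(terms):
    """Return the name of the module with the largest estimated cost."""
    return max(terms, key=terms.get)


# Hypothetical sizes for illustration only (not taken from the paper).
K, N = 3, 200
terms = gcdrna_time_terms(K=K, N=N, C=32, D=16, Q=64, Q_prime=64)

# Ratio of the summed estimate to the claimed leading term K^2 * N^3.
ratio = sum(terms.values()) / (K**2 * N**3)

print("dominant module:", dominant_term(terms))
print("total / (K^2 N^3):", round(ratio, 3))
```

For these illustrative sizes the k-hop GC module dominates and the total stays within a small constant factor of \(K^2N^3\), which is consistent with the stated bound; different sizes would shift the ratio but not the leading term as long as \(C, D, Q, Q^{\prime } \ll N\).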

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wu, J., Fu, J., Ji, H. et al. Graph convolutional dynamic recurrent network with attention for traffic forecasting. Appl Intell 53, 22002–22016 (2023). https://doi.org/10.1007/s10489-023-04621-5

DOI: https://doi.org/10.1007/s10489-023-04621-5