Abstract

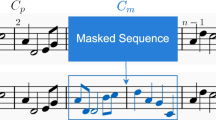

Arranging and orchestration are critical aspects of music composition and production. Traditional accompaniment arranging is time-consuming and requires expertise in music theory. In this work, we use a flow-based deep learning model to generate music accompaniment (drums, guitar, bass, and strings) from an input piano melody, which can assist musicians in creating popular music. The main contributions of this paper are as follows: 1) We propose a new pianoroll representation that solves the problem of recognizing the onset of a musical note and saves space. 2) We introduce the MuseFlow accompaniment generation model, which can generate multi-track, polyphonic music accompaniment. To the best of our knowledge, MuseFlow is the first flow-based music generation model. 3) We incorporate a sliding window into the model to enable long-sequence music generation, removing the length limitation. Comparisons on datasets such as LDP, FreeMidi, and GPMD verify the effectiveness of the model. MuseFlow produces better results in accompaniment quality and inter-track harmony. Additionally, the note pitch and duration distributions of the generated accompaniment are much closer to those of the real data.
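
The sliding-window idea in contribution 3 can be illustrated with a minimal sketch. The abstract does not describe the windowing scheme, so everything below is an assumption made for illustration: the window length, the hop size, the stitching rule (keep only the newest steps of each later window), and the `generate_window` function, which is a hypothetical stand-in for a fixed-length accompaniment model and is not MuseFlow itself.

```python
import math
import numpy as np


def generate_window(melody_window, n_tracks=4):
    """Hypothetical stand-in for a fixed-length accompaniment model.

    Takes a melody pianoroll of shape (window_len, n_pitches) and returns an
    array of shape (n_tracks, window_len, n_pitches). Here it simply copies
    the melody into every track so that the stitching logic can be checked.
    """
    return np.repeat(melody_window[np.newaxis, :, :], n_tracks, axis=0)


def sliding_window_generate(melody, window_len=64, hop=32, n_tracks=4):
    """Generate accompaniment for a melody of arbitrary length.

    Assumed scheme (not taken from the paper): slide a fixed-length window
    over the melody with the given hop, run the fixed-length generator on
    each window, and keep only the newest `hop` steps of every window after
    the first.
    """
    total_steps, n_pitches = melody.shape

    # Pad the end of the melody with silence so the windows tile it exactly.
    if total_steps <= window_len:
        padded_len = window_len
    else:
        n_hops = math.ceil((total_steps - window_len) / hop)
        padded_len = window_len + n_hops * hop
    padded = np.pad(melody, ((0, padded_len - total_steps), (0, 0)))

    accompaniment = np.zeros((n_tracks, padded_len, n_pitches), dtype=melody.dtype)
    for start in range(0, padded_len - window_len + 1, hop):
        out = generate_window(padded[start:start + window_len], n_tracks)
        if start == 0:
            # The first window fills its whole span.
            accompaniment[:, :window_len] = out
        else:
            # Later windows contribute only their final `hop` steps.
            end = start + window_len
            accompaniment[:, end - hop:end] = out[:, -hop:]

    # Drop the padding added above.
    return accompaniment[:, :total_steps]


# Usage: a random binary melody pianoroll with 100 time steps and 128 pitches.
rng = np.random.default_rng(0)
melody = (rng.random((100, 128)) > 0.95).astype(np.int8)
tracks = sliding_window_generate(melody)
```

In this sketch the overlap between consecutive windows serves only as context for the generator; a real model might instead condition each window on previously generated output.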

Data Availability

The source code of the model introduced in this paper is publicly available at https://github.com/nuoyi-618/MuseFlow.

About this article

Cite this article

Ding, F., Cui, Y. MuseFlow: music accompaniment generation based on flow. Appl Intell 53, 23029–23038 (2023). https://doi.org/10.1007/s10489-023-04664-8

DOI: https://doi.org/10.1007/s10489-023-04664-8