Abstract

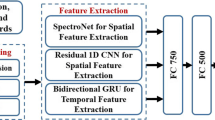

Object classification based on tactile perception plays an essential role in robotic manipulation, as it informs decision-making for downstream manipulation tasks. Smart factories increasingly demand precise execution from industrial robots, and robots, like humans, can infer tactile properties and identify object categories through brief motions. However, conventional approaches treat grasping as an instantaneous state and therefore discard time-series information. To address this issue, we propose a spatio-temporal attention-based Long Short-Term Memory (LSTM) network for time-series object classification. The proposed model uses a temporal attention mechanism that dynamically tracks time-related features of the tactile data, while a spatial attention mechanism coordinates the integration of tactile information from the various input features. The model classifies objects based on the entire temporal process of robot-object contact rather than on data from a single moment. To further improve performance, we also incorporate principal component analysis (PCA) and a Kalman filter. Extensive experiments demonstrate the proposed model's accuracy and efficiency, validating its ability to perform object classification based on tactile perception.
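The abstract does not give the network's equations, so the following pure-Python sketch is only a rough illustration of one common way to combine the pieces it names. It uses spatial attention (softmax weights over the input channels at each time step), a standard LSTM cell, and temporal attention (softmax weights over all hidden states, so the whole contact sequence contributes to the prediction), plus a scalar Kalman filter for per-channel smoothing. The class and function names, the linear scoring functions, the random placeholder weights, and the Kalman noise variances are hypothetical assumptions, not the authors' design. PCA and training are omitted.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in mat]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng):
    # Placeholder weights (hypothetical); a trained model would learn these by backpropagation.
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def kalman_smooth(values, process_var=1e-3, meas_var=1e-2):
    """Scalar Kalman filter with a random-walk state model (assumed noise settings)."""
    estimate, error = values[0], 1.0
    out = []
    for z in values:
        error += process_var                 # predict: state unchanged, uncertainty grows
        gain = error / (error + meas_var)    # Kalman gain
        estimate += gain * (z - estimate)    # correct the estimate with measurement z
        error *= 1.0 - gain
        out.append(estimate)
    return out


class SpatioTemporalAttentionLSTM:
    """Toy forward pass: spatial attention -> LSTM cell -> temporal attention -> softmax."""

    def __init__(self, n_features, hidden, n_classes, seed=0):
        rng = random.Random(seed)
        self.hidden = hidden
        # Spatial attention: one score per input channel, computed from x_t.
        self.w_spatial = rand_matrix(n_features, n_features, rng)
        # LSTM gates (input, forget, output, candidate) act on [x_t, h_{t-1}].
        self.gates = {g: rand_matrix(hidden, n_features + hidden, rng) for g in "ifog"}
        # Temporal attention: one scalar score per time step from h_t.
        self.w_temporal = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.w_out = rand_matrix(n_classes, hidden, rng)

    def forward(self, sequence):
        h, c, states = [0.0] * self.hidden, [0.0] * self.hidden, []
        for x in sequence:
            # Spatial attention: reweight the tactile channels at this time step.
            alpha = softmax(matvec(self.w_spatial, x))
            z = [a * v for a, v in zip(alpha, x)] + h  # concatenation [weighted x_t, h_{t-1}]
            i = [sigmoid(v) for v in matvec(self.gates["i"], z)]
            f = [sigmoid(v) for v in matvec(self.gates["f"], z)]
            o = [sigmoid(v) for v in matvec(self.gates["o"], z)]
            g = [math.tanh(v) for v in matvec(self.gates["g"], z)]
            c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
            h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
            states.append(h)
        # Temporal attention: weight every hidden state, not only the last one.
        beta = softmax([sum(w * v for w, v in zip(self.w_temporal, s)) for s in states])
        context = [sum(b * s[k] for b, s in zip(beta, states)) for k in range(self.hidden)]
        return softmax(matvec(self.w_out, context)), beta


if __name__ == "__main__":
    raw = [[0.1 * t, 0.2, -0.1 * t, 0.05] for t in range(10)]  # 10 steps x 4 channels
    # Smooth each channel independently, then restore the (time, channel) layout.
    channels = [kalman_smooth(list(ch)) for ch in zip(*raw)]
    seq = [list(step) for step in zip(*channels)]
    model = SpatioTemporalAttentionLSTM(n_features=4, hidden=8, n_classes=3)
    probs, beta = model.forward(seq)
    print("class probabilities:", probs)
    print("temporal attention weights:", beta)
```

Because `beta` assigns a weight to every time step, the class probabilities depend on the entire grasp sequence rather than a single snapshot, which is the property the abstract emphasizes. A practical implementation would use a deep learning framework with learned weights instead of these hand-rolled list operations.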

Graphical abstract


Abbreviations

- IMU: Inertial Measurement Unit
- RNN: Recurrent Neural Network
- LSTM: Long Short-Term Memory
- MAE: Mean Absolute Error
- RMSE: Root Mean Square Error
- SVM: Support Vector Machine
- EP: Exploratory Procedures
- NN: Neural Network
- GPU: Graphics Processing Unit
- PCA: Principal Component Analysis
- MAPE: Mean Absolute Percentage Error
- KNN: K-Nearest Neighbors
- LR: Logistic Regression



Ethics declarations

Ethical approval

I, Dongkun Wang, hereby consciously assure that the following is fulfilled for the manuscript "Object classification for tactile robot hand utilizing deep learning model facing the demand of smart factory":

1. This material is the authors’ own original work, which has not been previously published elsewhere.

2. The paper is not currently being considered for publication elsewhere.

3. The paper reflects the authors’ own research and analysis in a truthful and complete manner.

4. The paper properly credits the meaningful contributions of co-authors and co-researchers.

5. The results are appropriately placed in the context of prior and existing research.

6. All sources used are properly disclosed (correct citation). Literal copying of text must be indicated as such by using quotation marks and giving proper references.

7. All authors have been personally and actively involved in substantial work leading to the paper, and will take public responsibility for its content.

Violation of the Ethical Statement rules may result in severe consequences.

I agree with the above statements and declare that this submission follows the policies of Solid State Ionics as outlined in the Guide for Authors and in the Ethical Statement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, D., Teng, Y., Peng, J. et al. Deep-learning-based object classification of tactile robot hand for smart factory. Appl Intell 53, 22374–22390 (2023). https://doi.org/10.1007/s10489-023-04683-5


DOI: https://doi.org/10.1007/s10489-023-04683-5