Abstract

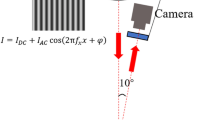

Fusing visible-light images with Time of Flight (ToF) images can effectively compensate for the limitations of a single data source in orchard scenes. The Dual-channel Pulse Coupled Neural Network (PCNN) is often used as a fusion rule in multi-scale transform-domain methods. However, its ignition process ignores the impact of image changes and fluctuations on the results, which leads to pixel artifacts, region blurring and unclear edges. To solve this problem, a Heterogenous Image Fusion Model with Dual Channel Pulse Coupled Neural Network Significance Region for Nonsubsampled Shearlet Transform in an Apple Orchard is proposed. Three adaptive parameter improvements to the Dual Channel Pulse Coupled Neural Network are proposed: the root mean square error is used to improve the dynamic link domain, the image gradient defines the link strength, and the average gray value of the pixels in the ignition region serves as the dynamic threshold. Moreover, a Significant Region Extraction Method is proposed to calculate the low-frequency significant regions. The model improves the segmentation of significant regions: the significance ratio of the three groups of samples under front light and back light reaches 100.00%, and the fastest segmentation takes 2.13 s. The six evaluation index values for the three groups of samples in different periods of front light and back light are superior to those of other fusion models. The fusion rate of model recognition reaches 100.00%, and the fastest fusion takes 8.02 s. The model performs well in terms of precision, time consumption and model size, and can supplement and improve the theory of multi-source image fusion in the transform domain.
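The three adaptive quantities named in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the normalisation of the gradient magnitude to [0, 1], and the fallback threshold are all assumptions made for illustration. The abstract also does not say how the root mean square error modulates the link domain, so the sketch only computes the error itself.

```python
import numpy as np


def gradient_link_strength(img, eps=1e-8):
    """Per-pixel link strength from the gradient magnitude.

    Assumption: the magnitude is scaled by its maximum so values lie in [0, 1].
    """
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    magnitude = np.hypot(gx, gy)
    return magnitude / (magnitude.max() + eps)


def rmse(a, b):
    """Root mean square error between two equally shaped arrays."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def fired_mean_threshold(img, fired, fallback):
    """Mean gray value over the fired (ignited) pixels.

    Assumption: if no pixel has fired yet, a caller-supplied fallback is used.
    """
    fired = np.asarray(fired, dtype=bool)
    if not fired.any():
        return float(fallback)
    return float(np.asarray(img, dtype=float)[fired].mean())


if __name__ == "__main__":
    # Hypothetical 4x4 horizontal ramp used only to exercise the helpers.
    ramp = np.tile(np.arange(4.0), (4, 1))
    print(gradient_link_strength(ramp).shape)
    print(rmse(ramp, ramp + 1.0))
    print(fired_mean_threshold(ramp, ramp > 1.5, fallback=ramp.mean()))
```

In a full dual-channel PCNN these values would be recomputed at each iteration and fed into the linking and threshold updates; that loop is deliberately left out because its details are not given in this section.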

Highlights

• First, a Dual Channel Pulse Coupled Neural Network with Significant Region (SR-dual-channel PCNN) is proposed.

• Second, three adaptive parameter improvements to the Dual PCNN are proposed: the root mean square error is used to improve the dynamic link domain, the image gradient defines the link strength, and the average gray value of the pixels in the ignition region serves as the dynamic threshold.

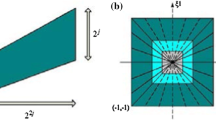

• Third, a Significant Region Extraction Method (SRE-M) is proposed to calculate the low-frequency significant regions. A low-frequency fusion rule uses the salient region as the low-frequency subband fusion coefficient in order to suppress the background of the salient region.
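The low-frequency rule in the third highlight can be pictured with a short sketch, assuming a boolean salient-region mask is already available. Everything below is hypothetical: the function name `fuse_low_frequency`, the max-absolute selection inside the mask, and the `background_weight` attenuation outside it are illustrative choices, not the rule defined in the paper.

```python
import numpy as np


def fuse_low_frequency(low_a, low_b, salient_mask, background_weight=0.5):
    """Fuse two low-frequency subbands under a salient-region mask.

    Assumptions (not taken from the paper):
    - inside the mask, keep the coefficient with the larger absolute value;
    - outside the mask, use the average of both subbands scaled by
      background_weight, which suppresses the background.
    """
    low_a = np.asarray(low_a, dtype=float)
    low_b = np.asarray(low_b, dtype=float)
    mask = np.asarray(salient_mask, dtype=bool)

    strongest = np.where(np.abs(low_a) >= np.abs(low_b), low_a, low_b)
    background = background_weight * 0.5 * (low_a + low_b)
    return np.where(mask, strongest, background)


if __name__ == "__main__":
    # Hypothetical 2x2 subbands; the top row is treated as salient.
    vis = np.array([[4.0, 2.0], [2.0, 2.0]])
    tof = np.array([[1.0, 6.0], [2.0, 4.0]])
    mask = np.array([[True, True], [False, False]])
    print(fuse_low_frequency(vis, tof, mask))
```

The mask-driven `np.where` keeps the rule explicit per pixel, so a different selection inside the salient region can be swapped in without touching the background handling.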

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Yan B, Fan P, Lei X et al (2021) A real-time apple targets detection method for picking robot based on improved YOLOv5. Remote Sens 13(9):1619

Jahromi S, Jansson JP, Keränen P et al (2020) A 32× 128 SPAD-257 TDC receiver IC for pulsed ToF solid-state 3-D imaging. IEEE J Solid-State Circuits 55(7):1960–1970

Xuecheng Wu et al (2022) A Method for Medical Microscopic Images’ Sharpness Evaluation Based on NSST and Variance by Combining Time and Frequency Domains. Sensors 22(19):7607–7607

Chang L, Haifeng Z, Wu C (2020) Gaussian Pyramid Transform Retinex Image Enhancement Algorithm based on Bilateral Filtering. Laser Optoelectron Progress 57(16):209–215

Yao Y, Ma J, Ye Y (2023) Regularizing autoencoders with wavelet transform for sequence anomaly detection. Pattern Recognition 134(2023):1–18

Muhammad H et al (2022) Illumination invariant face recognition using contourlet transform and convolutional neural network. J Intell Fuzzy Syst 43(1):383–396

Manikandan T et al (2023) Adaptive Fuzzy Logic Despeckling in Non-Subsampled Contourlet Transformed Ultrasound Pictures. Intell Autom Soft Comput 35(3):2755–2771

Lian J, Yang Z, Liu J et al (2021) An overview of image segmentation based on pulse-coupled neural network. Arch Comput Methods Eng 28:387–403

Das M et al (2022) Multimodal image sensor fusion in a cascaded framework using optimized dual channel pulse coupled neural network. J Ambient Intell Humanized Comput 22(2022):1–20

Cheng B, Jin L, Li G (2018) Infrared and visual image fusion using LNSST and an adaptive dual-channel PCNN with triple-linking strength. Neurocomputing 310:135–147

Qian J et al (2019) Image Fusion Method Based on Structure-Based Saliency Map and FDST-PCNN Framework. IEEE Access 7:83484–83494

Panigrahy C, Seal A, Mahato NK (2020) Fractal dimension based parameter adaptive dual channel PCNN for multi-focus image fusion[J]. Opt Lasers Eng 133:106141

Dharini S, Jain S (2021) A novel metaheuristic optimal feature selection framework for object detection with improved detection accuracy based on pulse-coupled neural network. Soft Comput 21(2021):1–13

Huang C, Tian G, Lan Y, Peng Y, Ng EYK, Hao Y, Cheng Y, Che W (2019) A new pulse coupled neural network (PCNN) for brain medical image fusion empowered by shuffled frog leaping algorithm. Front Neurosci 13:210

Zhou T, Qi L, Huiling L et al (2023) GAN Review: Models and Application of Medical Image Fusion. Inf Fusion 91:134–148

Temer AM (2018) Basler ToF Camera User’s Manual[EB/OL]. https://www.baslerweb.com/cn/sales-support/downloads/document-downloads/basler-tof-camera-users-manual/. Accessed 5 June 2021

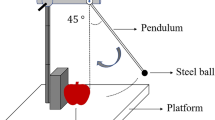

Yubo Z, Liqun L (2022) Heterologous sources images in the apple orchard registration method using EM-PCNN. Trans Chin Soc Agric Eng 38(5):175–183

Wang H, Li Z, Li Y et al (2020) Visual saliency guided complex image retrieval. Pattern Recogn Lett 130:64–72

Alwazzan MJ, Ismael MA, Ahmed AN (2021) A hybrid algorithm to enhance colour retinal fundus images using a Wiener filter and CLAHE. J Digit Imaging 34(3):750–759

Huang Y-J et al (2022) Efficient quasi-brittle fracture simulations of concrete at mesoscale using micro CT images and a localizing gradient damage model. Comput Methods Appl Mech Eng 400(2022):1–17

Guo MH, Xu TX, Liu JJ et al (2022) Attention mechanisms in computer vision: A survey. Comput Visual Media 8(3):331–368

Pang Y, Lin J, Qin T et al (2021) Image-to-image translation: Methods and applications. IEEE Trans Multimed 24:3859–3881

Yang Z, Ma Y, Lian J et al (2018) Saliency motivated improved simplified PCNN model for object segmentation. Neurocomputing 275:2179–2190

Panigrahy C, Seal A, Kumar Mahato N (2020) MRI and SPECT Image Fusion Using a Weighted Parameter Adaptive Dual Channel PCNN. IEEE Signal Process Lett 27:690–694

Nair RR, Singh T (2019) Multi-sensor medical image fusion using pyramid-based DWT: a multi-resolution approach. IET Image Proc 13(9):1447–1459

Wang Z, Xu J, Jiang X et al (2020) Infrared and visible image fusion via hybrid decomposition of NSCT and morphological sequential toggle operator. Optik 201:163497

Wu C, Chen L (2020) Infrared and visible image fusion method of dual NSCT and PCNN. PLoS ONE 15(9):e0239535

Azam MA, Khan KB, Salahuddin S et al (2022) A review on multimodal medical image fusion: Compendious analysis of medical modalities, multimodal databases, fusion techniques and quality metrics. Comput Biol Med 144:105253

Gené-Mola J, Vilaplana V, Rosell-Polo JR, Morros JR, Ruiz-Hidalgo J, Gregorio E (2019) Multi-modal Deep Learning for Fruit Detection Using RGB-D Cameras and their Radiometric Capabilities. Comput Electron Agric 162:689–698

Gené-Mola J, Vilaplana V, Rosell-Polo JR, Morros JR, Ruiz-Hidalgo J, Gregorio E (2019) KFuji RGB-DS database: Fuji apple multi-modal images for fruit detection with color, depth and range-corrected IR data. Data Brief 25:104289

Acknowledgements

Liqun Liu and Yubo Zhou designed and wrote the paper. Jiuyuan Huo, Ye Wu and Renyuan Gu collected data and analyzed the experiments. All authors read and approved the final manuscript.

Funding

This research was supported by the Gansu Provincial University Teacher Innovation Fund Project [grant number 2023A-051], the Young Supervisor Fund of Gansu Agricultural University [grant number GAU-QDFC-2020–08], and the Gansu Science and Technology Plan [grant number 20JR5RA032].

Ethics declarations

Conflict of interest

The authors of this publication declare there is no conflict of interest.

About this article

Cite this article

Liu, L., Zhou, Y., Huo, J. et al. Heterogenous image fusion model with SR-dual-channel PCNN significance region for NSST in an apple orchard. Appl Intell 53, 21325–21346 (2023). https://doi.org/10.1007/s10489-023-04690-6

DOI: https://doi.org/10.1007/s10489-023-04690-6