Abstract

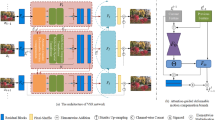

Although deep-learning-based video super-resolution (VSR) has progressed rapidly in recent years, most methods do not account for lossy compression. Many real-world videos exhibit compression artifacts (e.g., blocking, ringing, and blurring) caused by limits on transmission bandwidth or storage capacity, which makes the VSR task more challenging. To balance compression artifact reduction and detail preservation, this paper proposes a nonlocal-guided enhanced interaction spatial-temporal network for compressed video super-resolution (EISTNet). EISTNet consists of a nonlocal-guided enhanced interaction feature extraction module (EIFEM) and an attention-based multi-channel feature self-calibration module (MCFSM). A pixel-shuffle-based nonlocal feature guidance module (PNFGM) explores the nonlocal similarity of video sequences and guides the extraction and fusion of inter-frame spatial-temporal information in EIFEM. Because compression noise and video content are strongly correlated, MCFSM introduces features from the compression artifact reduction stage for recalibration and adaptive fusion, which closely couples the two parts of the network. To reduce the memory footprint of the nonlocal module, a pixel-shuffle operation is added to PNFGM, which also expands its receptive field. Experimental results demonstrate that the proposed method outperforms existing methods.
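The memory argument for combining a pixel-shuffle-style rearrangement with a nonlocal operation can be illustrated with a small sketch. It is not the authors' PNFGM: it assumes the saving comes from a space-to-depth rearrangement (the inverse of pixel shuffle) applied before the pairwise similarity computation, with a depth-to-space step restoring the resolution afterwards. The function names `pixel_unshuffle`, `pixel_shuffle`, and `affinity_entries`, and the factor `r`, are hypothetical and chosen only for illustration.

```python
def pixel_unshuffle(x, r):
    """Space-to-depth: (C, H, W) nested lists -> (C*r*r, H//r, W//r).

    Illustrative assumption, not the paper's code.
    """
    c, h, w = len(x), len(x[0]), len(x[0][0])
    assert h % r == 0 and w % r == 0, "H and W must be divisible by r"
    out = []
    for ch in range(c):
        for dy in range(r):
            for dx in range(r):
                # Each output plane keeps one pixel of every r x r block.
                out.append([[x[ch][i * r + dy][j * r + dx]
                             for j in range(w // r)]
                            for i in range(h // r)])
    return out


def pixel_shuffle(x, r):
    """Depth-to-space: (C*r*r, H, W) -> (C, H*r, W*r), inverse of the above."""
    c_out = len(x) // (r * r)
    h, w = len(x[0]), len(x[0][0])
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for k, plane in enumerate(x):
        ch, rem = divmod(k, r * r)
        dy, dx = divmod(rem, r)
        for i in range(h):
            for j in range(w):
                out[ch][i * r + dy][j * r + dx] = plane[i][j]
    return out


def affinity_entries(h, w):
    """Number of entries in a dense nonlocal similarity matrix, (H*W)^2."""
    n = h * w
    return n * n
```

Under these assumptions, rearranging a 64 x 64 feature map with `r = 2` leaves 32 x 32 spatial positions, so `affinity_entries(32, 32)` is one sixteenth of `affinity_entries(64, 64)`. Each remaining position also summarizes an `r` x `r` block of the original map, which is one plausible reading of the expanded receptive field mentioned in the abstract.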

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 62271336 and 62211530110.

Ethics declarations

Conflict of interest statement

The authors of the submitted manuscript declare that there is no conflict of interest in this work.

Compliance with Ethical Standards

The authors of the submitted manuscript declare that this work does not involve any ethical issues.

About this article

Cite this article

Cheng, J., Xiong, S., He, X. et al. Nonlocal-guided enhanced interaction spatial-temporal network for compressed video super-resolution. Appl Intell 53, 24407–24421 (2023). https://doi.org/10.1007/s10489-023-04798-9

DOI: https://doi.org/10.1007/s10489-023-04798-9