Abstract

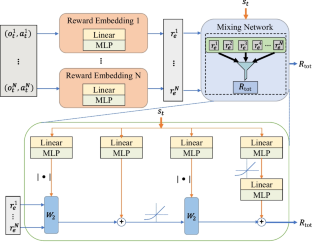

Credit assignment is a significant challenge in heterogeneous multi-agent reinforcement learning (MARL) for fully cooperative tasks. Existing MARL methods assess the contribution of each agent through value decomposition or agent-wise critic networks. However, value decomposition techniques are not directly applicable to control problems with continuous action spaces, and agent-wise critic networks struggle to separate each agent’s distinct contribution from the shared team reward. Moreover, most of these methods assume agent homogeneity, which limits their utility in more diverse scenarios. To address these limitations, we present a novel algorithm that factorizes and reshapes the team reward into agent-wise rewards, enabling the evaluation of the diverse contributions of heterogeneous agents. Specifically, we devise agent-wise local critics that leverage both the team reward and the factorized reward, alongside a global critic for assessing the joint policy. To account for the contribution differences resulting from agent heterogeneity, we introduce a power balance constraint that ensures a fairer measurement of each heterogeneous agent’s contribution, ultimately promoting energy efficiency. Finally, we optimize the policies of all agents using deterministic policy gradients. We validate the proposed algorithm through simulation experiments on fully cooperative, heterogeneous multi-agent tasks.
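As a rough illustration of what factorizing a team reward into agent-wise rewards can look like, the following minimal sketch splits a scalar team reward into per-agent shares and then blends each share with the team reward, as a local critic might consume it. It is not the algorithm of this article: the softmax weighting, the per-agent `scores`, the mixing coefficient `beta`, and both function names are assumptions introduced only for this example.

```python
import math
from typing import List


def factorize_team_reward(team_reward: float, scores: List[float]) -> List[float]:
    """Split a scalar team reward into agent-wise shares that sum to it.

    `scores` are hypothetical per-agent contribution scores. A softmax turns
    them into weights; this weighting is an assumption for illustration only.
    """
    peak = max(scores)  # subtract the max so exp() stays numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [team_reward * e / total for e in exps]


def blended_local_rewards(
    team_reward: float, agent_rewards: List[float], beta: float = 0.5
) -> List[float]:
    """Mix the team reward with each factorized reward.

    `beta` is an assumed mixing coefficient, not a value from the article.
    """
    return [beta * team_reward + (1.0 - beta) * r for r in agent_rewards]


# Three agents with equal hypothetical scores receive equal shares.
shares = factorize_team_reward(10.0, [0.0, 0.0, 0.0])
local_rewards = blended_local_rewards(10.0, shares)
```

Because the softmax weights sum to one, the shares always add up to the team reward, so the factorization redistributes credit without changing the total signal. Heterogeneous agents would be given different scores, which shifts credit toward the agents that score higher.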

Data Availability

The data that support the findings of this study are available on request from the first author.

Ethics declarations

Conflicts of interest

No potential conflict of interest was reported by the authors.

About this article

Cite this article

Jiang, K., Liu, W., Wang, Y. et al. Credit assignment in heterogeneous multi-agent reinforcement learning for fully cooperative tasks. Appl Intell 53, 29205–29222 (2023). https://doi.org/10.1007/s10489-023-04866-0

DOI: https://doi.org/10.1007/s10489-023-04866-0