Abstract

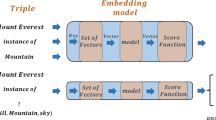

Knowledge graph embedding maps facts and relations, expressed as triplets, into a multidimensional vector space to support applications such as link prediction and question answering. Structure embedding models focus on the graph structure but ignore the importance of language semantics in inferring similar entities and relations. Semantic embedding models use pretrained language models to learn entity and relation embeddings from text, but they do not fully exploit graph structures that reflect relation patterns and mapping attributes. Structure and semantic information in knowledge graphs capture different levels of properties, and both are indispensable for comprehensive knowledge representation. In this paper, we propose a general knowledge graph embedding framework named SSKGE, which considers both the graph structure and language semantics and learns these two complementary characteristics to integrate entity and relation representations. To compensate for semantic embedding approaches that ignore the graph structure, we first design a structure loss function that explicitly models the graph structure attributes. Second, we use a pretrained language model fine-tuned with the structure loss to guide structure embedding approaches, supplying the semantic information they lack and yielding universal knowledge representations. Specifically, guidance is provided by a distance function that encourages the spatial distributions of the two types of graph embeddings to be similar. SSKGE significantly reduces the time cost of using a pretrained language model to complete a knowledge graph. Within the SSKGE framework, common knowledge graph embedding models such as TransE, DistMult, ComplEx, RotatE, PairRE, and HousE achieve better results on multiple datasets, including FB15k, FB15k-237, WN18, and WN18RR. Extensive experiments and analyses verify the effectiveness and practicality of SSKGE.
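
The idea of pairing a structure loss with a distance-based guidance term can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the form of the structure loss or of the distance function, so the margin ranking loss, the squared Euclidean distance, the weight `alpha`, and all function names below are assumptions chosen for illustration. The sketch uses the well-known TransE scoring function on a single triple and assumes the semantic embeddings have already been projected to the same dimension as the structure embeddings.

```python
import math

def l2_distance(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def transe_score(h, r, t):
    """TransE plausibility: negative distance between h + r and t (higher is better)."""
    translated = [a + b for a, b in zip(h, r)]
    return -l2_distance(translated, t)

def margin_structure_loss(pos_score, neg_score, margin=1.0):
    """Assumed stand-in for a structure loss: margin ranking between a true
    triple and a corrupted one. The paper's actual loss may differ."""
    return max(0.0, margin - pos_score + neg_score)

def guidance_distance(struct_emb, sem_emb):
    """Assumed guidance term: squared Euclidean distance between a structure
    embedding and the (same-dimension) semantic embedding of the same entity."""
    return sum((a - b) ** 2 for a, b in zip(struct_emb, sem_emb))

def combined_loss(h, r, t, t_neg, sem_h, sem_t, alpha=0.5):
    """Structure loss plus alpha-weighted guidance; alpha is a hypothetical weight."""
    structure = margin_structure_loss(transe_score(h, r, t),
                                      transe_score(h, r, t_neg))
    guidance = guidance_distance(h, sem_h) + guidance_distance(t, sem_t)
    return structure + alpha * guidance
```

In this sketch the guidance term only pulls the structure embeddings toward fixed semantic targets, so the language model would not need to be run at inference time. That is one plausible reading of how such a framework could save time, not a claim about the paper's actual mechanism.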

Data Availability Statement

The datasets generated and/or analysed during the current study will be made available on reasonable request.

Acknowledgements

This work was supported by the National Key Research and Development Program of China (grant number 2021YFC3300204).

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

About this article

Cite this article

Wang, T., Shen, B. & Zhong, Y. SSKGE: a time-saving knowledge graph embedding framework based on structure enhancement and semantic guidance. Appl Intell 53, 25171–25183 (2023). https://doi.org/10.1007/s10489-023-04896-8

DOI: https://doi.org/10.1007/s10489-023-04896-8