Abstract

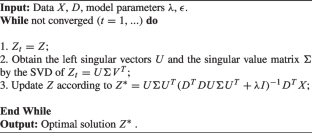

Dictionary learning is an effective feature learning method that has produced many remarkable results in data representation and classification tasks. However, dictionary learning is performed on the original data representation. In some cases, the representation capability and discriminability of the learned dictionaries fall short because the resulting representation is only sparse and not low-rank. In this paper, we propose a novel and efficient data representation learning method that combines dictionary learning with self-representation, exploiting the sparsity of dictionary learning and the low-rank property of low-rank representation (LRR) simultaneously. Our method therefore captures both the sparse and the low-rank properties of the data representation naturally. To solve the proposed method effectively, we also introduce a more generalized data representation model. To the best of our knowledge, this is the first time its closed-form solution has been derived analytically. Experimental results show that our method can be used for data pre-processing and also yields better dictionary learning: samples in the same class obtain similar representations, and the discriminability of the learned dictionary is enhanced.
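
For orientation, the two standard ingredients named in the abstract can be written as below. These are the textbook formulations of sparse dictionary learning and noiseless LRR, not the model proposed in this paper, which the abstract does not state. The symbols $X$ (data), $D$ (dictionary), $A$ (sparse codes), $Z$ (self-representation matrix) and $\lambda$ (regularization weight) are notation assumed here for illustration.

```latex
% Sparse dictionary learning (generic form, assumed notation):
% data X, dictionary D with columns d_k, sparse codes A
\min_{D,\,A}\ \|X - DA\|_F^2 + \lambda \|A\|_1
\quad \text{s.t.}\quad \|d_k\|_2 \le 1 \ \ \forall k

% Noiseless low-rank representation (LRR): the data represent themselves
\min_{Z}\ \|Z\|_* \quad \text{s.t.}\quad X = XZ

% Known closed-form minimizer of the noiseless LRR problem,
% where X = U \Sigma V^\top is the skinny SVD of X
Z^\star = V V^\top
```

The first problem encourages sparse codes through the $\ell_1$ penalty, while the second encourages a low-rank self-representation through the nuclear norm; the method described in the abstract aims to obtain both properties at once.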

Data Availability Statement

Data is available on request from the authors.

Acknowledgements

This work was supported in part by grants from the National Key R&D Program of China (2020AAA0108302) and the Shenzhen University 2035 Program for Excellent Research (00000224).

Ethics declarations

Conflict of Interest

The authors declare that there is no conflict of interest that could be perceived as prejudicing the impartiality of the research reported.

About this article

Cite this article

Zeng, D., Sun, J., Wu, Z. et al. Data representation learning via dictionary learning and self-representation. Appl Intell 53, 26988–27000 (2023). https://doi.org/10.1007/s10489-023-04902-z
