Abstract

Self-supervised learning (SSL) has shown highly promising results in natural language processing and, more recently, in image classification and recognition. Recent work has demonstrated SSL’s benefits on large unlabeled datasets; however, relatively little investigation has been done into how well it works with smaller datasets. Few-shot image classification aims to recognize previously unseen classes from only a limited number of labeled training examples. Typically, this entails training a model on a very small quantity of data and then evaluating it on out-of-distribution data. Recent few-shot learning research focuses on developing good representation models that can quickly adapt to test tasks. In this paper, we investigate the role of self-supervision in the context of few-shot learning. We devise a model that improves the network’s representation learning by employing a self-supervised auxiliary task based on composite rotation: the image is rotated at two levels, inner and outer, and the modified image is assigned one of 16 rotation classes. Training the network with this auxiliary task enables it to capture robust features that focus on finer visual details of the object present in the image. We find that the network learns to extract more generalized and discriminative features, which in turn improves its few-shot classification performance. This approach significantly outperforms the state of the art on several public benchmarks. In addition, we demonstrate empirically that models trained with the proposed approach perform better than the baseline model even when the query examples in an episode are not aligned with the support examples. Extensive ablation experiments validate the various components of our approach, and we also investigate our strategy’s impact on the network’s ability to discriminate visual features.
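The abstract describes the composite rotation task only at a high level. The following is a minimal sketch of one possible reading, not the authors’ implementation. It assumes that the “inner” level rotates a central square patch (half the image side, a hypothetical default) by a multiple of 90°, that the “outer” level then rotates the whole image by a multiple of 90°, and that the 16 classes are indexed as 4 × outer + inner. The helper names `composite_rotate`, `composite_label` and `make_auxiliary_batch` are hypothetical.

```python
import numpy as np

NUM_ROTATIONS = 4  # assumed: 0, 90, 180, 270 degrees at each level
NUM_COMPOSITE_CLASSES = NUM_ROTATIONS * NUM_ROTATIONS  # 4 x 4 = 16 classes


def composite_rotate(image: np.ndarray, inner_k: int, outer_k: int,
                     inner_frac: float = 0.5) -> np.ndarray:
    """Hypothetical composite rotation on a square (H, W) or (H, W, C) image.

    Inner level: the central square patch is rotated by inner_k * 90 degrees.
    Outer level: the whole (inner-rotated) image is rotated by outer_k * 90 degrees.
    inner_frac (assumed) is the patch side relative to the image side.
    """
    h, w = image.shape[:2]
    if h != w:
        raise ValueError("this sketch assumes a square image")
    out = image.copy()
    side = int(round(h * inner_frac))
    top = (h - side) // 2
    # Read the patch from the untouched input so source and destination never overlap.
    patch = image[top:top + side, top:top + side]
    out[top:top + side, top:top + side] = np.rot90(patch, k=inner_k, axes=(0, 1))
    # Rotate the full image; copy so the result does not share memory with `out`.
    return np.rot90(out, k=outer_k, axes=(0, 1)).copy()


def composite_label(inner_k: int, outer_k: int) -> int:
    """Map an (inner, outer) rotation pair to one of 16 class indices (assumed ordering)."""
    return outer_k * NUM_ROTATIONS + inner_k


def make_auxiliary_batch(image: np.ndarray, inner_frac: float = 0.5):
    """Return all 16 composite rotations of `image` and their class labels."""
    images, labels = [], []
    for outer_k in range(NUM_ROTATIONS):
        for inner_k in range(NUM_ROTATIONS):
            images.append(composite_rotate(image, inner_k, outer_k, inner_frac))
            labels.append(composite_label(inner_k, outer_k))
    return np.stack(images), np.array(labels)
```

Under these assumptions, the 16 labels would supervise an auxiliary classification head trained alongside the main few-shot objective. The abstract does not specify how the two objectives are weighted.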

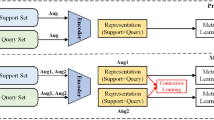

Graphical abstract

Graphical Abstract

Author information

Contributions

Prashant Kumar: Conceptualization, Methodology, Validation, Writing - original draft. Durga Toshniwal: Writing - review & editing.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical approval and informed consent for data used

All datasets used in this paper are named explicitly and are publicly available. No ethical approval or informed consent was required for their use.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, P., Toshniwal, D. Self-Supervision with data-augmentation improves few-shot learning. Appl Intell 54, 2976–2997 (2024). https://doi.org/10.1007/s10489-024-05340-1
